Selective Hourglass Mapping for Universal Image Restoration Based on Diffusion Model

Official PyTorch Implementation of DiffUIR.

Project Page | Paper | Personal HomePage

[2024.12.02] Added a OneDrive link for the checkpoints.

[2024.09.17] Added more instructions for test.py and visual.py.

[2024.03.18] Released the link to the 14 datasets.

[2024.03.17] Released the full training and testing code!

[2024.03.16] Released pretrained weights for the four versions of DiffUIR.

[2024.02.27] 🎉🎉🎉 Our DiffUIR paper was accepted by CVPR 2024 🎉🎉🎉

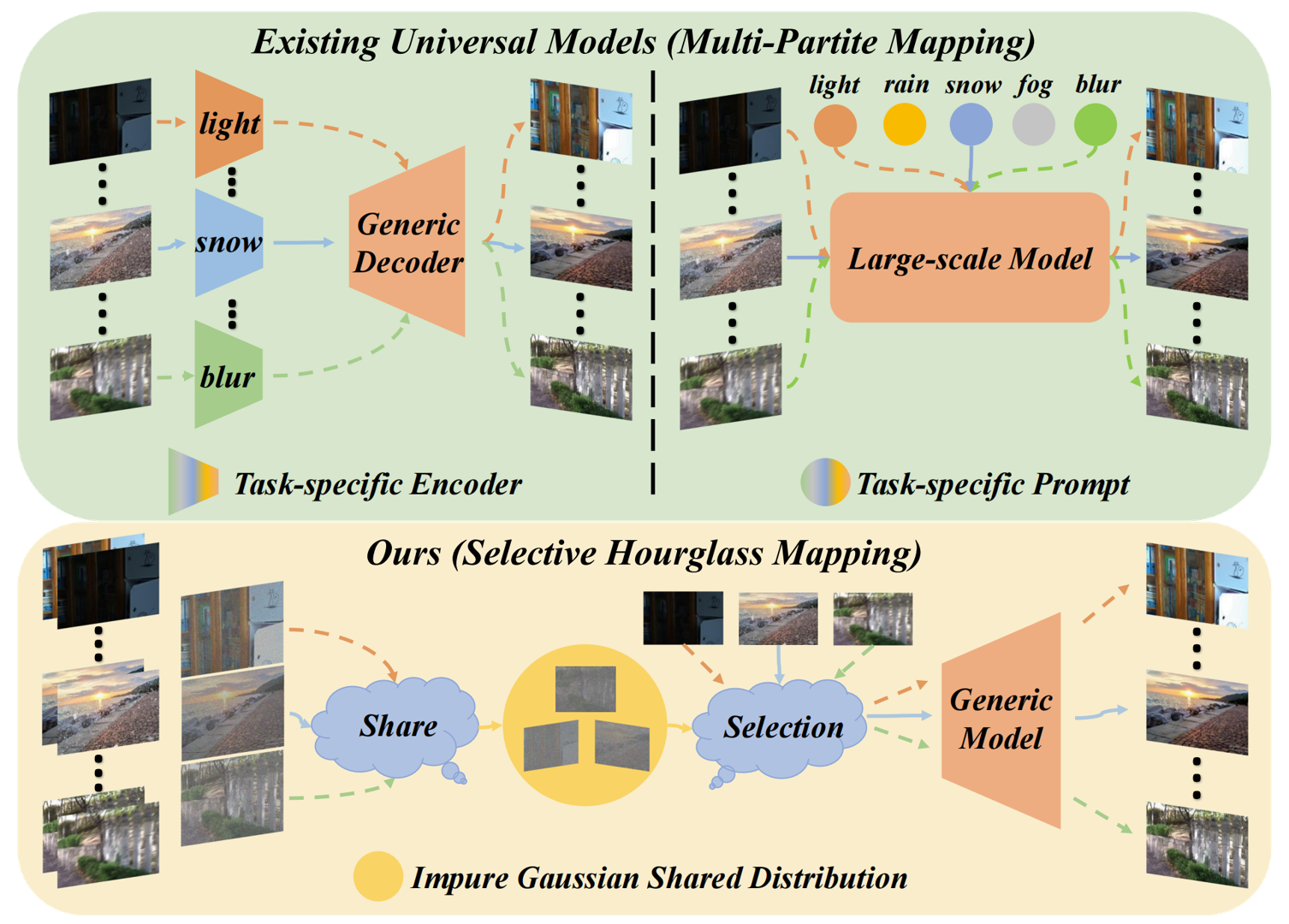

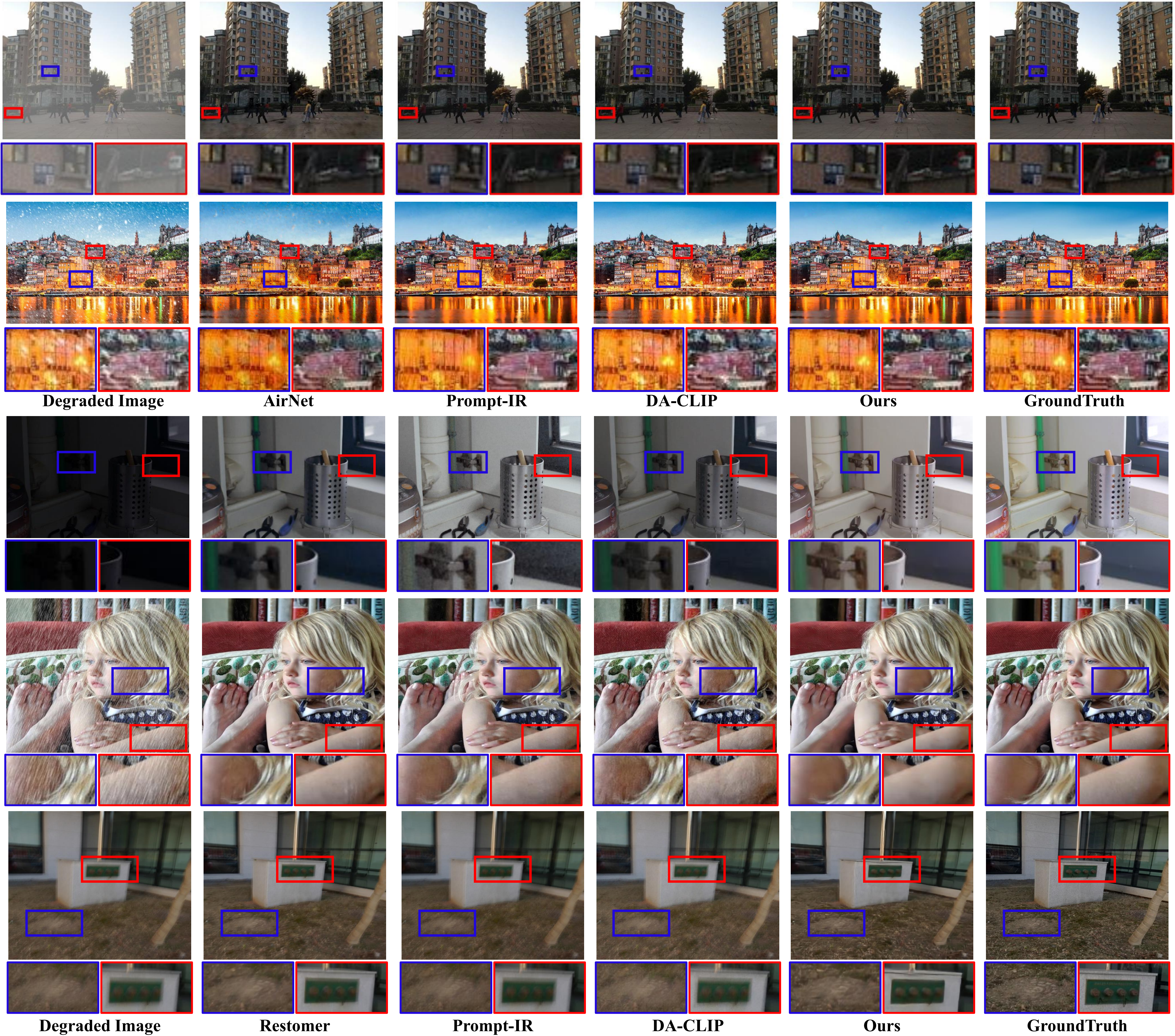

The main challenge of universal image restoration is handling images with different degradations at once. In this work, we propose a selective hourglass mapping strategy based on a conditional diffusion model to learn the information shared between different tasks. Specifically, we integrate a shared distribution term (SDT) into the diffusion algorithm, which maps the different degradation distributions to a shared one and then guides the shared distribution toward the task-specific clean image. By modifying only the mapping strategy, we outperform large-scale universal methods with at least five times less computational cost.

- Python 3.7

- PyTorch 1.12

```bash
conda create -n diffuir python=3.7
conda activate diffuir
conda install pytorch torchvision torchaudio cudatoolkit=11.3 -c pytorch -c nvidia
pip install opencv-python
pip install scikit-image
pip install tensorboard
pip install matplotlib
pip install tqdm
```
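After installation, a quick way to confirm the environment is to import each dependency and print its version. This is a minimal sketch that uses only the standard library plus the packages installed above; the package-to-module mapping is written out by hand (for example, opencv-python is imported as `cv2`), and `check_environment` is a hypothetical helper, not part of this repository.

```python
import importlib
import importlib.util
import sys

# pip/conda package name -> module name used by `import` (they differ for some packages).
REQUIRED = {
    "torch": "torch",
    "torchvision": "torchvision",
    "torchaudio": "torchaudio",
    "opencv-python": "cv2",
    "scikit-image": "skimage",
    "tensorboard": "tensorboard",
    "matplotlib": "matplotlib",
    "tqdm": "tqdm",
}


def check_environment(required=REQUIRED):
    """Map each package to its __version__, "unknown" if it has none, or None if it is missing."""
    report = {}
    for package, module_name in required.items():
        if importlib.util.find_spec(module_name) is None:
            report[package] = None
            continue
        try:
            module = importlib.import_module(module_name)
        except Exception as exc:  # installed but broken, e.g. a CUDA/ABI mismatch
            report[package] = "import error: {}".format(exc)
            continue
        report[package] = getattr(module, "__version__", "unknown")
    return report


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    report = check_environment()
    for package, version in report.items():
        print("{:15s} {}".format(package, version or "MISSING"))
    if report["torch"] is not None and not str(report["torch"]).startswith("import error"):
        import torch
        print("CUDA available:", torch.cuda.is_available())
```

Checking with `find_spec` first lets the script report missing packages without crashing on the first failed import.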

Prepare the training and test datasets following the Dataset Construction section of our paper, organized as:

```
Datasets/Restoration
|--syn_rain
|  |--train
|  |  |--input
|  |  |--target
|  |--test
|--Snow100K
|--Deblur
|--LOL
|--RESIDE
|  |--OTS_ALPHA
|  |  |--haze
|  |  |--clear
|  |--SOTS/outdoor
|--real_rain
|--real_dark
|--UDC_val_test
```

Then open data/universal_dataset.py and modify the dataset paths accordingly.
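Before editing the paths, you can check that your folders match the directory tree above. A minimal sketch: the nesting in `EXPECTED_DIRS` (for example, `input`/`target` under `syn_rain/train`, and `OTS_ALPHA` and `SOTS/outdoor` under `RESIDE`) is our reading of the tree, and `missing_dirs` is a hypothetical helper, not part of data/universal_dataset.py.

```python
from pathlib import Path

# Sub-directories expected under the dataset root (assumed nesting, read from the tree above).
EXPECTED_DIRS = [
    "syn_rain/train/input",
    "syn_rain/train/target",
    "syn_rain/test",
    "Snow100K",
    "Deblur",
    "LOL",
    "RESIDE/OTS_ALPHA/haze",
    "RESIDE/OTS_ALPHA/clear",
    "RESIDE/SOTS/outdoor",
    "real_rain",
    "real_dark",
    "UDC_val_test",
]


def missing_dirs(root="Datasets/Restoration", expected=EXPECTED_DIRS):
    """Return the expected sub-directories that do not exist under `root`."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs()
    if missing:
        print("Missing directories:")
        for rel in missing:
            print("  ", rel)
    else:
        print("Dataset layout looks complete.")
```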

Training datasets:

| Degradation | blurry | low-light | rainy | snowy | hazy |
|---|---|---|---|---|---|
| Datasets | Gopro | LOL | syn_rain | Snow100K | RESIDE(5vss) |

Test datasets:

| Degradation | blurry | low-light | rainy | snowy | hazy |
|---|---|---|---|---|---|
| Datasets | gopro+HIDE+real_R+real_J | LOL | combine | Snow100K | SOTS |

Real-world test datasets:

| Degradation | real_dark | real_rain | UDC | real_snow |
|---|---|---|---|---|
| Datasets | dark | rain | UDC | Snow100K |

We train all five image restoration tasks at once; you can change the task type at line 42 of train.py.

Note that the results in our paper were reproduced on an RTX 4090; using an RTX 3090 or other GPUs may cause a performance drop.

```bash
python train.py
```

Note that metrics on SOTS cannot be computed online because the numbers of input and ground-truth images differ.

Please use eval/SOTS.m instead.
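If you want a rough Python cross-check before running eval/SOTS.m, the key step is pairing each hazy input with its ground-truth image. A minimal sketch, assuming SOTS-style names where a hazy file such as `0001_0.8_0.2.jpg` shares the id prefix `0001` with its ground truth `0001.png`; the naming rule and the helper names are hypothetical, and eval/SOTS.m remains the reference for reported numbers.

```python
from pathlib import Path


def gt_key(filename):
    """Assumed naming rule: '0001_0.8_0.2.jpg' -> '0001' and '0001.png' -> '0001'."""
    return Path(filename).stem.split("_")[0]


def pair_hazy_with_gt(hazy_names, gt_names):
    """Match every hazy input to the ground-truth image sharing its id prefix.

    Returns (pairs, unmatched): `pairs` is a list of (hazy, gt) tuples and
    `unmatched` lists hazy files for which no ground truth was found.
    """
    gt_by_key = {gt_key(name): name for name in gt_names}
    pairs, unmatched = [], []
    for name in sorted(hazy_names):
        gt = gt_by_key.get(gt_key(name))
        if gt is None:
            unmatched.append(name)
        else:
            pairs.append((name, gt))
    return pairs, unmatched
```

Each matched pair can then be scored, for example with `skimage.metrics.peak_signal_noise_ratio(gt, restored, data_range=255)` for 8-bit images; the values may differ from eval/SOTS.m if that script uses a different color space or cropping.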

Use the pretrained weights model-300.pt for testing with 3 sampling timesteps, and double-check that the checkpoint is actually loaded from your `result_folder`!

Set the `task` id at line 43 of test.py to your task: 'light_only' for low-light enhancement, 'blur' for deblurring, 'fog' for dehazing, 'rain' for deraining, and 'snow' for desnowing.

```bash
python test.py
```
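The test settings given above can be collected in one place. A minimal sketch: `settings_for_task` and its dictionary keys are hypothetical and only restate this README (task ids, model-300.pt, timestep 3); test.py itself is still configured by editing line 43 as described.

```python
# Task ids accepted by test.py, restated from this README.
TASK_IDS = {
    "low-light enhancement": "light_only",
    "deblurring": "blur",
    "dehazing": "fog",
    "deraining": "rain",
    "desnowing": "snow",
}


def settings_for_task(task_name):
    """Return the test.py settings for a human-readable task name (hypothetical helper)."""
    key = task_name.strip().lower()
    if key not in TASK_IDS:
        raise ValueError("unknown task {!r}; expected one of {}".format(task_name, sorted(TASK_IDS)))
    return {"task": TASK_IDS[key], "checkpoint": "model-300.pt", "sampling_timesteps": 3}


if __name__ == "__main__":
    for name in TASK_IDS:
        print(name, "->", settings_for_task(name))
```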

For the real-world under-display camera (UDC) dataset, the images are high-resolution, so we split each image into several patches, run the model on each patch, and merge the outputs.

Use the pretrained weights model-130.pt for testing with 4 sampling timesteps.

```bash
python test_udc.py
```
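The split-and-merge step used for UDC images can be sketched as follows. This is a minimal NumPy sketch, not the code in test_udc.py: the patch size 256, the stride 192, and the choice to average overlapping regions are our assumptions, and `model_fn` stands in for a call to the restoration model that returns an output of the same size as its input.

```python
import numpy as np


def _starts(length, patch, stride):
    """Window start offsets covering [0, length); the last window is flush with the edge."""
    if length <= patch:
        return [0]
    starts = list(range(0, length - patch + 1, stride))
    if starts[-1] != length - patch:
        starts.append(length - patch)
    return starts


def split_into_patches(img, patch=256, stride=192):
    """Cut an H x W (x C) array into overlapping patch x patch tiles plus their top-left corners."""
    assert 0 < stride <= patch, "stride must not exceed patch size, or pixels are skipped"
    h, w = img.shape[:2]
    coords = [(y, x) for y in _starts(h, patch, stride) for x in _starts(w, patch, stride)]
    tiles = [img[y:y + patch, x:x + patch] for y, x in coords]
    return tiles, coords


def merge_patches(tiles, coords, shape):
    """Paste tiles back into an array of `shape`, averaging pixels covered by several tiles."""
    out = np.zeros(shape, dtype=np.float64)
    weight = np.zeros(shape[:2] + (1,) * (len(shape) - 2), dtype=np.float64)
    for tile, (y, x) in zip(tiles, coords):
        th, tw = tile.shape[:2]
        out[y:y + th, x:x + tw] += tile
        weight[y:y + th, x:x + tw] += 1.0
    return out / weight


def restore_large_image(img, model_fn, patch=256, stride=192):
    """Run `model_fn` (a stand-in for the restoration model) tile by tile and stitch the results."""
    tiles, coords = split_into_patches(img, patch, stride)
    restored = [model_fn(tile) for tile in tiles]
    return merge_patches(restored, coords, img.shape)
```

With an identity `model_fn`, `restore_large_image(img, lambda t: t)` reproduces `img`, which is a handy sanity check. Overlapping tiles with averaging are one common way to reduce visible seams at patch borders.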

You can also test our model on your own images. Note that to test low-light images, please use the code at lines 1286-1288 of src/visualization.

```bash
python visual.py
```

| Model | DiffUIR-T (0.89M) | DiffUIR-S (3.27M) | DiffUIR-B (12.41M) | DiffUIR-L (36.26M) |
|---|---|---|---|---|
| Arch | 32-1111 Tiny | 32-1224 Small | 64-1224 Base | 64-1248 Large |

We provide different model versions of DiffUIR; you can replace the code at lines 58-65 of train.py with the model size listed above (e.g., 64-1224).
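The Arch column appears to encode a U-Net as `<base channel width>-<one channel multiplier per resolution level>`, in the style of RDDM-like U-Nets configured with `dim` and `dim_mults`. That reading is our assumption and is not confirmed by this README, so check it against lines 58-65 of train.py before relying on it. The sketch parses the strings and prints the implied per-level channel counts.

```python
# Arch strings from the model table; the "<width>-<multipliers>" reading is an assumption.
MODEL_ARCHS = {
    "DiffUIR-T": "32-1111",
    "DiffUIR-S": "32-1224",
    "DiffUIR-B": "64-1224",
    "DiffUIR-L": "64-1248",
}


def parse_arch(code):
    """Split e.g. "64-1248" into (64, (1, 2, 4, 8)): base width and one multiplier per level."""
    width, mults = code.split("-")
    return int(width), tuple(int(d) for d in mults)


if __name__ == "__main__":
    for name, code in MODEL_ARCHS.items():
        dim, dim_mults = parse_arch(code)
        # Channel count at each resolution level under the assumed encoding.
        print(name, "dim =", dim, "dim_mults =", dim_mults, "channels =", [dim * m for m in dim_mults])
```

Under this reading, going from Small to Base doubles the width at every level, which roughly quadruples the convolution parameters; that is consistent with the 3.27M to 12.41M jump in the table.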


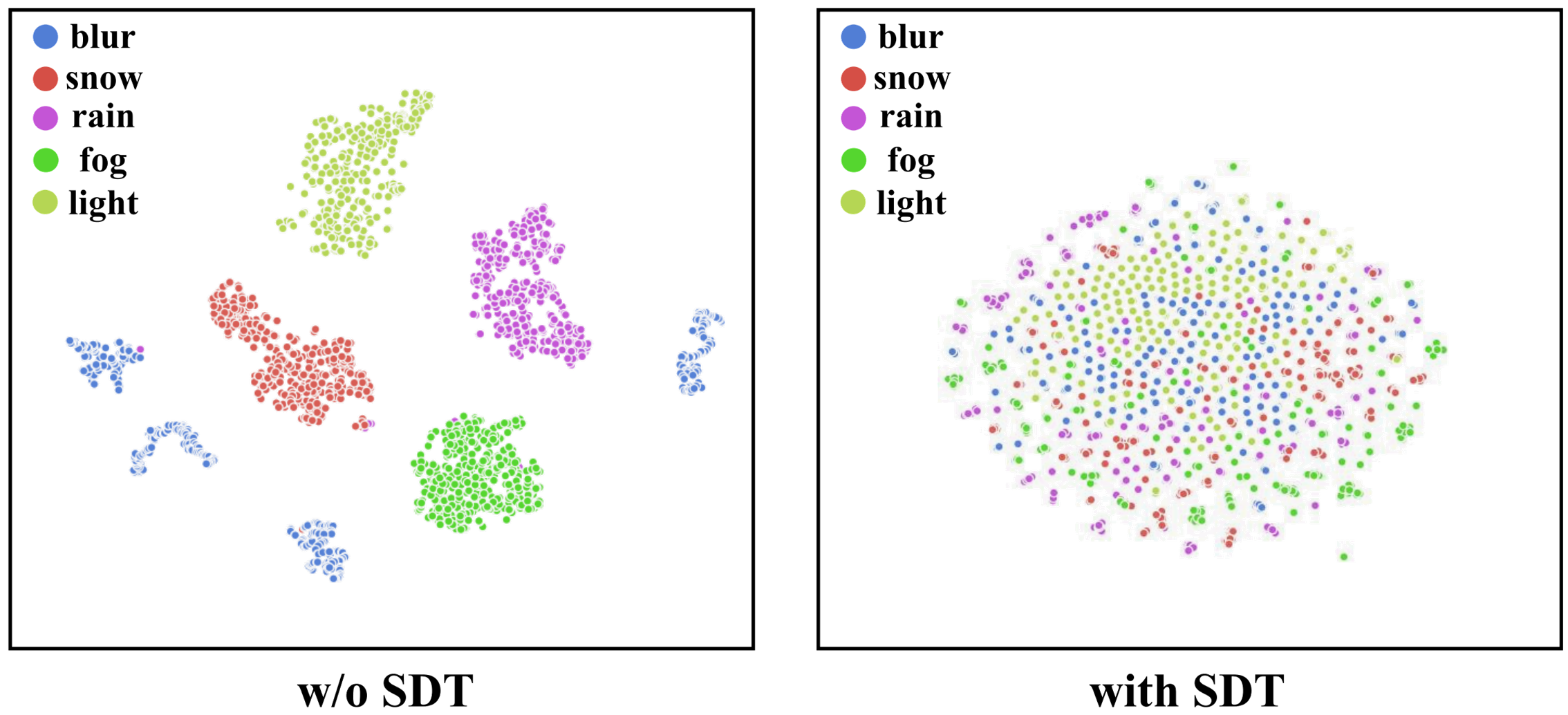

Distributions before and after our shared distribution term (SDT): the SDT maps the different distributions to a shared one.

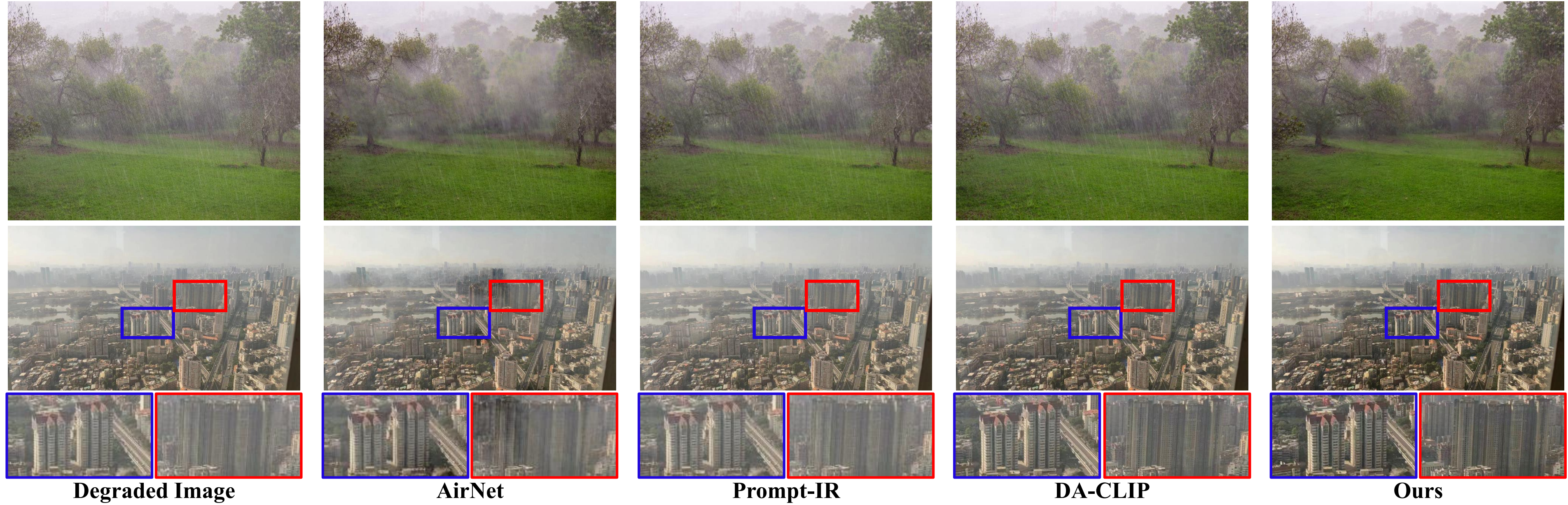

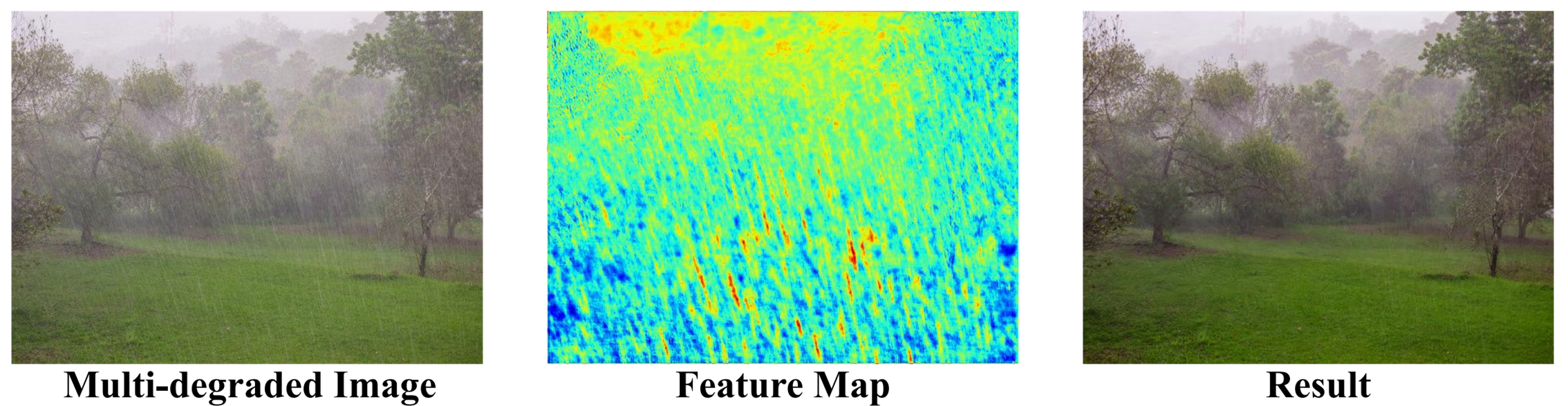

Attention of the feature maps: our method focuses on the degradation type (rain and fog), indicating that it learns useful shared information.

If you find this project helpful in your research, please cite our paper.

```bibtex
@inproceedings{zheng2024selective,
  title={Selective Hourglass Mapping for Universal Image Restoration Based on Diffusion Model},
  author={Zheng, Dian and Wu, Xiao-Ming and Yang, Shuzhou and Zhang, Jian and Hu, Jian-Fang and Zheng, Wei-shi},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2024}
}
```

Thanks to Jiawei Liu for open-sourcing his excellent work RDDM. Our work is inspired by it, and part of our code is adapted from RDDM.

If you have any questions, please contact Dian Zheng (1423606603@qq.com or zhengd35@mail2.sysu.edu.cn).