arabic-did

Arabic Dialect Identification Using Different DL Models

This repo covers sentence-level Arabic dialect identification using deep learning models. The included models are VDCNN, AWD-LSTM, and BERT, implemented in PyTorch. The hyperparameters were optimized automatically using Tune. LIME was used in an attempt to explain the models' outputs. Experiments were logged using Comet.ml and can be viewed here. You can also view the main experiments from the links in the tables below.

Results

Shami Corpus

The following table summarizes the performance of each architecture on the Shami Corpus. The reported numbers are the best macro-average performance achieved using automatic HPO (HyperOpt), except for the pretrained BERT. The input token mode was treated as a hyperparameter in that search. Note that the training and validation sets are reshuffled on each HyperOpt iteration to keep the search from overfitting to the validation set. You can view details of the experiment that achieved the reported numbers by visiting the link associated with each model. These results are state-of-the-art on that dataset.

| Model | Input Token Mode | Validation Dataset Perf | Test Dataset Perf | Experiment Link |
|---|---|---|---|---|
| VDCNN | char | 90.219% | 89.247% | Link |
| AWD-LSTM | char | 89.784% | 89.403% | Link |
| BERT | subword | 81.823% | 80.715% | Link |
| BERT (Pretrained) | subword | 81.336% | 80.474% | Link |
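
The per-iteration reshuffling described above can be sketched roughly as follows. This is a minimal illustration and not the repo's actual code: `resplit`, `pool`, the 10% validation fraction, and the seeded `random` calls are all assumptions, and the standard library stands in for HyperOpt's own sampling.

```python
import random


def resplit(samples, val_fraction=0.1, seed=0):
    """Shuffle the pooled train+validation samples and split them again."""
    rng = random.Random(seed)
    shuffled = list(samples)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    # First n_val items become the validation set, the rest the training set.
    return shuffled[n_val:], shuffled[:n_val]


# Hypothetical pooled data: (sentence, dialect_label) pairs.
pool = [(f"sentence {i}", i % 4) for i in range(100)]

for trial in range(3):
    # The input token mode is sampled like any other hyperparameter.
    token_mode = random.Random(trial).choice(["char", "subword"])
    # A fresh split per trial, so no single validation set is reused.
    train, val = resplit(pool, val_fraction=0.1, seed=trial)
    # A real trial would train a model on `train` with `token_mode`
    # and report its macro-average score on `val` to the optimizer.
```

Because every trial is scored on a different validation subset, a configuration cannot win simply by fitting the quirks of one fixed split. The test set stays out of the pool entirely.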

Arabic Online Commentary Dataset

The following table reports the results of applying the best model from the previous table, AWD-LSTM, along with its hyperparameters, to the AOC dataset with the same train/dev/test splits done here. No manual or automatic optimization was done on AOC beyond training the parameters of the AWD-LSTM model. This achieves state-of-the-art results on that dataset. Each result is linked with a Comet.ml experiment.

| Model | Dev Micro Avg | Dev Macro Avg | Test Micro Avg | Test Macro Avg |
|---|---|---|---|---|
| AWD-LSTM | 84.741% | 79.743% | 83.380% | 75.721% |
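
The gap between the micro and macro columns is what you would expect when classes are imbalanced. The sketch below shows how the two averages differ, assuming the underlying metric is F1; the README does not name the metric, so that is an assumption, as are the function name `micro_macro_f1` and the toy labels.

```python
from collections import Counter


def micro_macro_f1(y_true, y_pred):
    """Return (micro F1, macro F1) for single-label predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[t] += 1  # true class gains a false negative

    def f1(tp_, fp_, fn_):
        denom = 2 * tp_ + fp_ + fn_
        return 2 * tp_ / denom if denom else 0.0

    # Macro: average the per-class scores, so every class counts equally.
    macro = sum(f1(tp[c], fp[c], fn[c]) for c in labels) / len(labels)
    # Micro: pool the counts first, so frequent classes dominate.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    return micro, macro


# Toy, illustrative labels: the minority class "EGY" is always missed.
y_true = ["MSA", "MSA", "MSA", "EGY"]
y_pred = ["MSA", "MSA", "MSA", "MSA"]
micro, macro = micro_macro_f1(y_true, y_pred)
print(f"micro={micro:.3f} macro={macro:.3f}")  # micro=0.750 macro=0.429
```

In this toy case the micro average stays high because the majority class is predicted well, while the macro average drops because the missed minority class contributes a score of zero.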

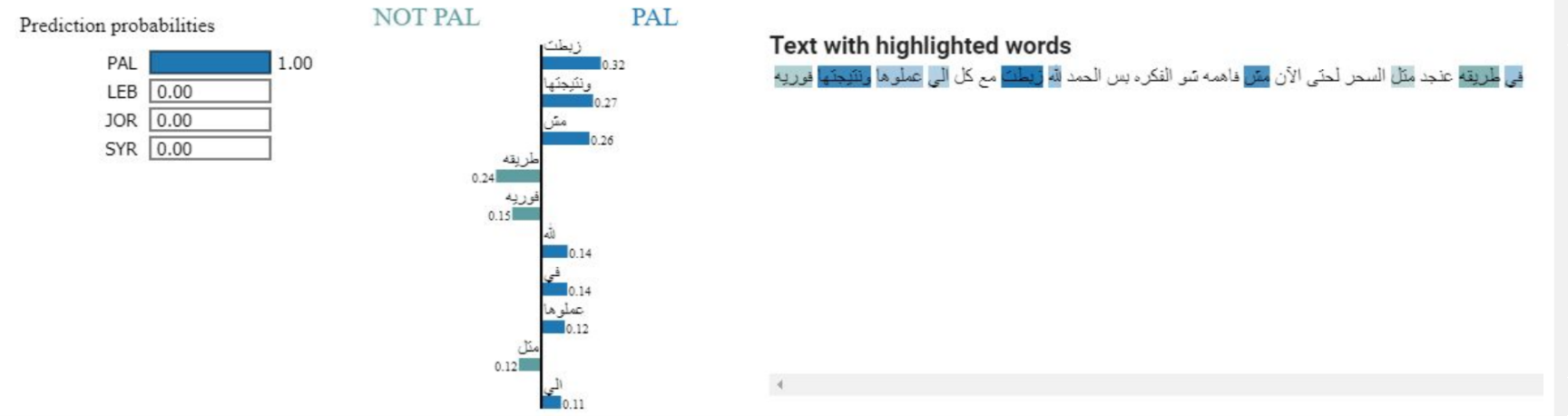

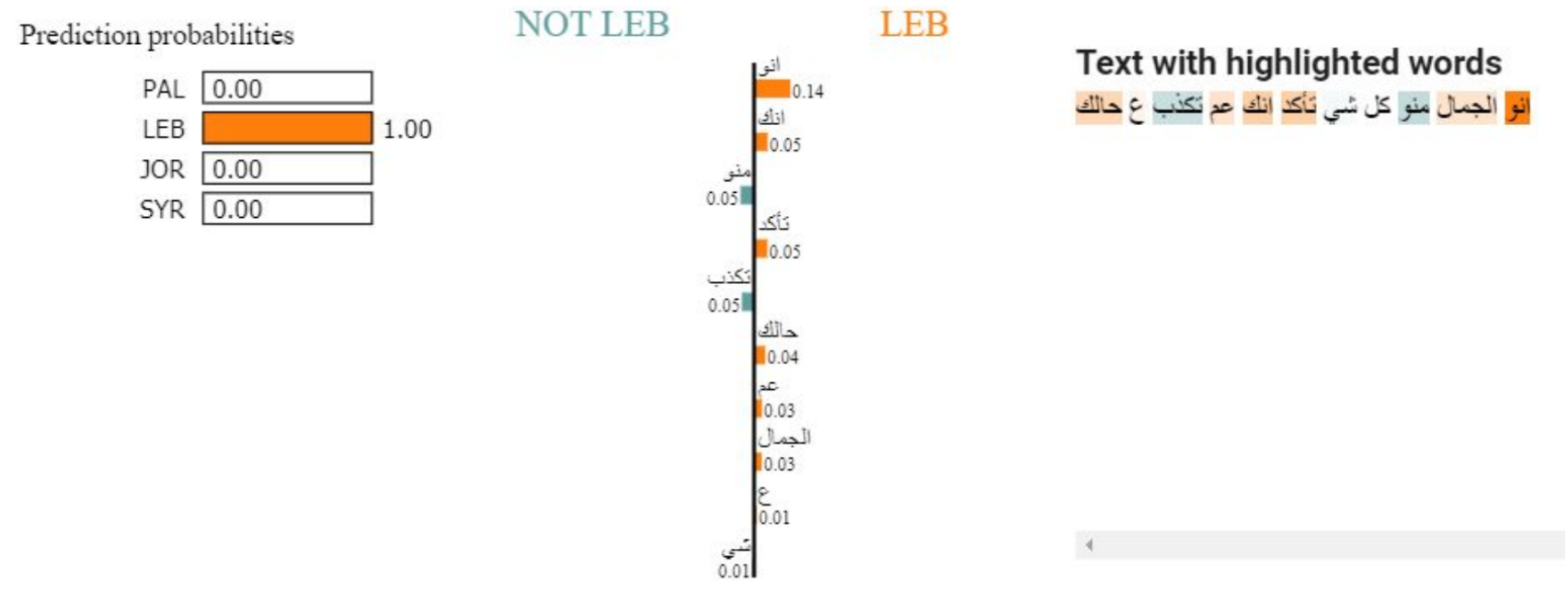

Explainability

LIME was used in an attempt to explain why the model makes its predictions. Here are some examples of the results: