This ComfyUI node aims to increase the diversity of the model's output by adding noise to the conditioning during sampling. It is based on the CADS (Condition-Annealed Diffusion Sampler) method described in this paper.

Forked from `asagi4/ComfyUI-CADS`, this implementation also credits the A1111 implementation as an instrumental reference.

Apply this node after any other nodes that set a UNet wrapper function. It keeps the existing wrapper in place and chains its own behavior onto it.
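Here is a rough illustration of what keeping the existing wrapper means. The sketch builds a wrapper that edits the model arguments and then passes them to whatever wrapper was installed before. If no earlier wrapper exists, it calls the model directly. It assumes ComfyUI's `model_function_wrapper` convention, where a wrapper is called as `wrapper(apply_model, args)` and `args` holds `input`, `timestep`, and `c` entries. `make_chained_wrapper`, `modify_args`, and the stub functions are hypothetical stand-ins, not this node's code.

```python
from typing import Any, Callable, Dict, Optional

ApplyModel = Callable[..., Any]
Wrapper = Callable[[ApplyModel, Dict[str, Any]], Any]


def make_chained_wrapper(
    previous: Optional[Wrapper],
    modify_args: Callable[[Dict[str, Any]], Dict[str, Any]],
) -> Wrapper:
    """Hypothetical helper: edit args, then defer to any earlier wrapper.

    `previous` is the wrapper that was already installed (None if none);
    `modify_args` stands in for the CADS conditioning-noise step.
    """

    def wrapper(apply_model: ApplyModel, args: Dict[str, Any]) -> Any:
        new_args = modify_args(args)
        if previous is not None:
            # Keep the earlier wrapper in the chain instead of discarding it.
            return previous(apply_model, new_args)
        # No earlier wrapper: call the model directly with the edited args.
        return apply_model(new_args["input"], new_args["timestep"], **new_args["c"])

    return wrapper


# Stubs that record the call order.
calls = []

def fake_apply_model(x, t, **c):
    calls.append("model")
    return x

def earlier_wrapper(apply_model, args):
    calls.append("earlier")
    return apply_model(args["input"], args["timestep"], **args["c"])

def tag_args(args):
    calls.append("cads")
    return args

wrapped = make_chained_wrapper(earlier_wrapper, tag_args)
out = wrapped(fake_apply_model, {"input": 1.0, "timestep": 0.5, "c": {}})
print(calls)  # ['cads', 'earlier', 'model']
```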

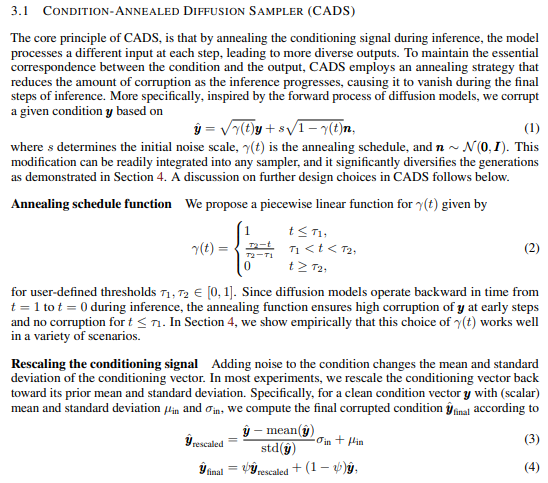

- `t1`: Original prompt conditioning.
- `t2`: Noise injection (Gaussian noise) adjusted by `noise_scale`.
- `noise_scale`: Modulates the intensity of `t2`. Inactive at `0`.
- `noise_type`: The type of the noise distribution.
- `reverse_process`: Reverses the noise injection sequence.
- `rescale_psi`: Normalizes the noised conditioning. Inactive at `0`.
- `apply_to`: Targets noise application, with `uncond` as the default.
- `key`: Determines which prompt undergoes noise addition.

Between `t1` and `t2`, the conditioning transitions gradually between the original and the noised version. In the paper, the mixing weight changes linearly over this interval, so the two blend smoothly instead of switching abruptly.
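In the CADS paper, the transition is a piecewise-linear schedule γ(t) over normalized diffusion time t ∈ [0, 1], and sampling runs from t = 1 down to t = 0. γ is 1 for t ≤ τ1, falls linearly from 1 to 0 between τ1 and τ2, and is 0 for t ≥ τ2. The condition is then corrupted as `ŷ = sqrt(γ)·y + s·sqrt(1 − γ)·n`, with Gaussian `n`.

The sketch below assumes that `t1` and `t2` correspond to τ1 and τ2 and that `noise_scale` corresponds to s. It uses plain lists instead of tensors, and the function names are hypothetical. It does not model how the node converts ComfyUI timesteps to t or what `reverse_process` changes.

```python
import math
import random
from typing import List, Optional


def cads_gamma(t: float, t1: float, t2: float) -> float:
    """Annealing factor from the CADS paper: 1 keeps the clean condition, 0 is pure noise.

    t is normalized diffusion time in [0, 1]; sampling runs from t = 1 down to t = 0.
    """
    if t <= t1:
        return 1.0
    if t >= t2:
        return 0.0
    return (t2 - t) / (t2 - t1)  # linear ramp between the two thresholds


def cads_noise(y: List[float], t: float, t1: float, t2: float,
               noise_scale: float, rng: Optional[random.Random] = None) -> List[float]:
    """Blend condition y with Gaussian noise: sqrt(g) * y + s * sqrt(1 - g) * n."""
    rng = rng or random.Random()
    g = cads_gamma(t, t1, t2)
    return [math.sqrt(g) * v + noise_scale * math.sqrt(1.0 - g) * rng.gauss(0.0, 1.0)
            for v in y]


# Example thresholds, with noise_scale inside the suggested 0.025 to 0.25 range.
y = [0.5, -1.0, 2.0]
for t in (1.0, 0.75, 0.3):
    print(t, round(cads_gamma(t, t1=0.6, t2=0.9), 3))
# 1.0 0.0   (start of sampling: condition fully replaced by scaled noise)
# 0.75 0.5  (halfway through the ramp)
# 0.3 1.0   (late steps: original condition)
noised = cads_noise(y, t=0.3, t1=0.6, t2=0.9, noise_scale=0.1, rng=random.Random(0))
print(noised == y)  # True: with gamma = 1 the noise term vanishes
```

Under this schedule, raising `t1` lengthens the final stretch that uses the clean condition. This matches the recommendation below that `t1 = 0.9` adds minimal noise.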

Recommendations from the paper:

t1set to0.2introduces excessive noise, while0.9is minimal.- Suggested

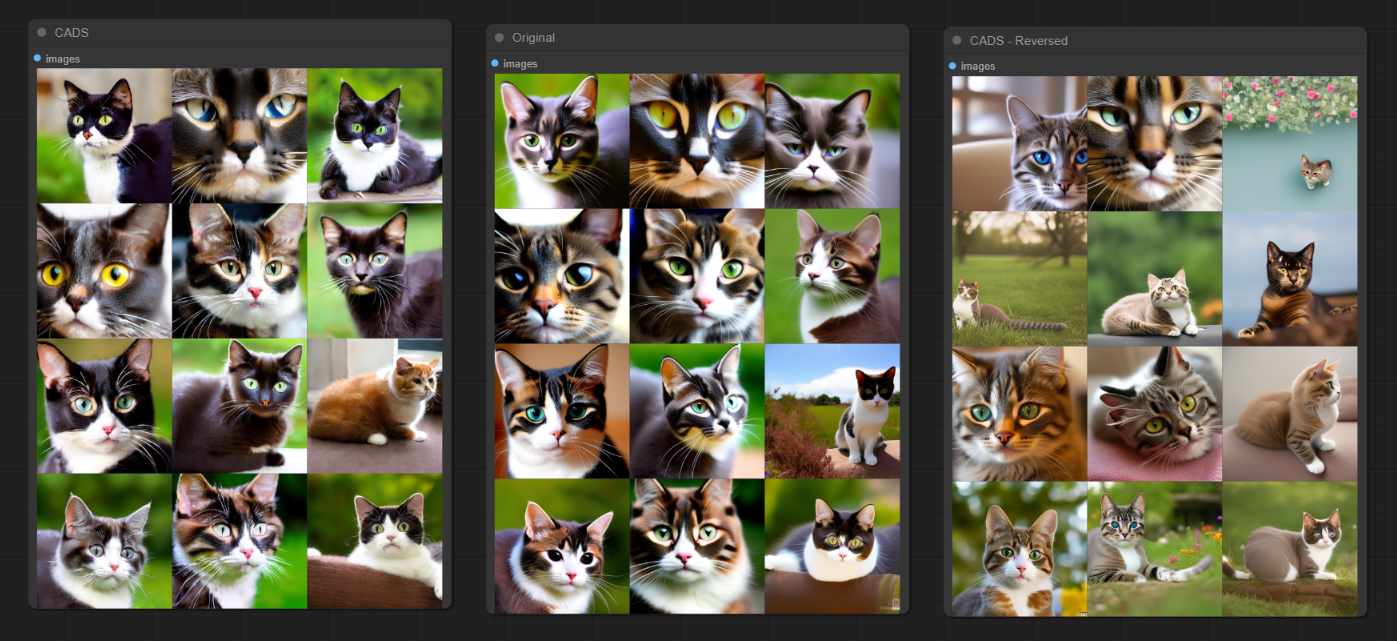

noise_scaleranges from0.025to0.25. rescale_psi: A higher value, optimally1, reduces divergence risks and can improve output quality. However, empirical evidence suggests that the optimal setting forrescale_psican be task-dependent. While1tends to generate results closer to the original distribution, setting it to0can sometimes provide better outcomes, though this is not consistently the case.reverse_process: Dictates the diversity control mechanism. Setting it toTrueinitiates noise application, fostering overall image diversity. Conversely,Falsestarts with the prompt, integrating noise subsequently, which primarily alters details.

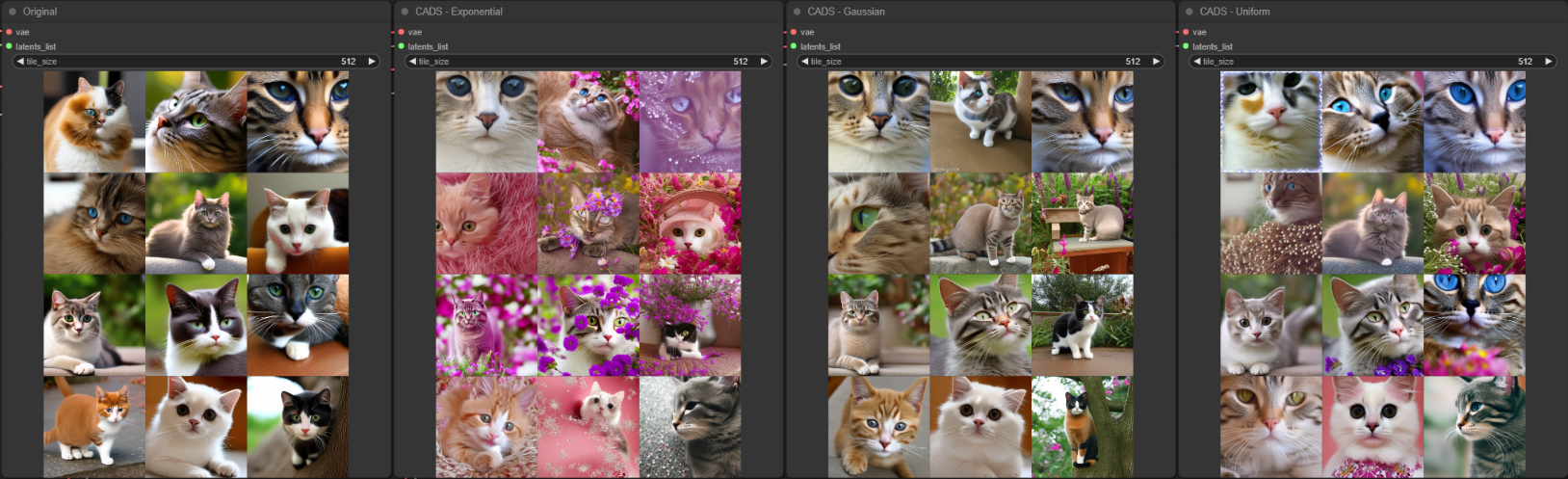
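The paper's rescaling step works in three stages. First, the noised condition is standardized. Next, it is given the mean and standard deviation of the original condition. Finally, it is mixed with the unrescaled version using ψ (`rescale_psi`). The plain-Python sketch below works on a flat list of floats, and the function name is hypothetical. It assumes the statistics are computed over the whole vector with the population standard deviation, so the node's tensor code may differ in those details.

```python
import statistics
from typing import List


def rescale_condition(noised: List[float], original: List[float], psi: float) -> List[float]:
    """CADS-style rescaling: restore the original mean/std, then blend by psi.

    psi = 0 leaves the noised condition untouched; psi = 1 returns the fully
    rescaled vector. Using the population standard deviation is an assumption.
    """
    if psi == 0:
        return list(noised)
    mu_n = statistics.mean(noised)
    sd_n = statistics.pstdev(noised) or 1.0  # guard against a constant vector
    mu_o = statistics.mean(original)
    sd_o = statistics.pstdev(original)
    # Standardize the noised values, then give them the original statistics.
    rescaled = [(v - mu_n) / sd_n * sd_o + mu_o for v in noised]
    # Mix the rescaled and raw noised conditions.
    return [psi * r + (1.0 - psi) * v for r, v in zip(rescaled, noised)]


original = [1.0, 2.0, 3.0]
noised = [2.0, 6.0, 10.0]  # same pattern, but shifted and stretched by the noise
print([round(v, 6) for v in rescale_condition(noised, original, psi=1.0)])  # [1.0, 2.0, 3.0]
print([round(v, 6) for v in rescale_condition(noised, original, psi=0.5)])  # [1.5, 4.0, 6.5]
print(rescale_condition(noised, original, psi=0.0))                         # [2.0, 6.0, 10.0]
```

With `psi = 1`, the output keeps the noised pattern but has exactly the original statistics. This is one way to read the claim that higher values stay closer to the original distribution.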

In addition to the normal distribution that the paper uses to corrupt the conditioning, this implementation offers alternative noise types for different levels of desired output diversity:

- Gaussian: Employs a normal distribution, preserving a degree of the original composition's structure.
- Uniform: Generates more diversity than Gaussian by spreading values evenly instead of concentrating them near the mean, which can lead to nonsensical results.
- Exponential: Delivers extreme diversity by diverging markedly from the mean, which may also produce nonsensical outcomes.
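The three noise types differ only in the distribution the noise is drawn from. The sketch below draws samples from each one with Python's `random` module. The name `sample_noise` is hypothetical, and so are the parameters: a uniform range of [-√3, √3] so that it has unit variance like the Gaussian, and rate 1 for the exponential. The node's actual scaling is not documented here.

```python
import math
import random
import statistics
from typing import List


def sample_noise(noise_type: str, n: int, rng: random.Random) -> List[float]:
    """Draw n values from one of three (hypothetically parameterized) distributions."""
    if noise_type == "gaussian":
        # Bell curve centred on 0 with unit variance, as in the paper's N(0, I).
        return [rng.gauss(0.0, 1.0) for _ in range(n)]
    if noise_type == "uniform":
        # Flat over [-sqrt(3), sqrt(3)]: mean 0 and unit variance, but no
        # concentration of values near the mean.
        bound = math.sqrt(3.0)
        return [rng.uniform(-bound, bound) for _ in range(n)]
    if noise_type == "exponential":
        # Rate 1: always non-negative, mean 1, long right tail.
        return [rng.expovariate(1.0) for _ in range(n)]
    raise ValueError(f"unknown noise_type: {noise_type!r}")


rng = random.Random(0)
for kind in ("gaussian", "uniform", "exponential"):
    xs = sample_noise(kind, 10_000, rng)
    # Compare centre, spread, and largest magnitude of each distribution.
    print(kind, round(statistics.mean(xs), 2), round(statistics.pstdev(xs), 2),
          round(max(abs(v) for v in xs), 2))
```

Under these assumed parameters, the exponential noise is not centred. Besides adding spread, it also shifts the conditioning. This README does not confirm whether the node's implementation behaves the same way.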

Noise was initially applied to the cross-attention conditioning. It is now applied to the regular conditioning `y` for improved relevance. To revert to the previous mechanism, change the `key` parameter. Presently, the term attention is observed within the model's structure.
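Together, `key` and `apply_to` decide where the noise lands. The sketch below assumes ComfyUI-style names: `y` for the regular conditioning, `c_crossattn` for the cross-attention conditioning, and a `cond_or_uncond` list with 0 for the positive prompt and 1 for the negative prompt. The per-chunk list layout, the function `noise_selected`, and the `"cond"`/`"both"` options are hypothetical and only illustrate the selection logic.

```python
from typing import Callable, Dict, List

COND, UNCOND = 0, 1  # assumed flags, mirroring a ComfyUI-style cond_or_uncond list


def noise_selected(
    c: Dict[str, List[List[float]]],
    cond_or_uncond: List[int],
    key: str,
    apply_to: str,
    add_noise: Callable[[List[float]], List[float]],
) -> Dict[str, List[List[float]]]:
    """Return a copy of c in which only the chosen rows of c[key] are noised.

    Hypothetical layout: c[key][i] is the conditioning row for batch chunk i,
    and cond_or_uncond[i] says whether chunk i is the positive or negative prompt.
    """
    if key not in c:
        return c  # nothing to noise under this key, leave the conditioning as-is
    targets = {"cond": {COND}, "uncond": {UNCOND}, "both": {COND, UNCOND}}[apply_to]
    out = dict(c)  # shallow copy so the caller's dict is not mutated
    out[key] = [add_noise(row) if flag in targets else row
                for row, flag in zip(c[key], cond_or_uncond)]
    return out


def shift(row: List[float]) -> List[float]:
    # Stand-in for the CADS noise step, so the effect is easy to see.
    return [v + 100.0 for v in row]


c = {"y": [[1.0, 1.0], [2.0, 2.0]], "c_crossattn": [[0.5], [0.5]]}
out = noise_selected(c, [COND, UNCOND], key="y", apply_to="uncond", add_noise=shift)
print(out["y"])            # [[1.0, 1.0], [102.0, 102.0]]
print(out["c_crossattn"])  # [[0.5], [0.5]]  (other keys untouched)
```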