Resources and notebooks to accompany the 'Duplicate Detection with GenAI' paper available here.

The examples here use the publicly available Musicbrainz 200K dataset. It is listed on the Leipzig University benchmark datasets for entity resolution webpage.

The dataset itself can be downloaded using the following link:

- Musicbrainz 200K dataset: musicbrainz-200-A01.csv

You will need to install the packages listed in the requirements.txt file in order to run the example notebooks. You will also need to install the FAISS package. If you're using conda then you can do it like this:

To install the GPU version:

    conda install -c conda-forge faiss-gpu

To install the CPU version:

    conda install -c conda-forge faiss-cpu

There are a number of notebooks that cover the following things:

- Steps of the proposed method

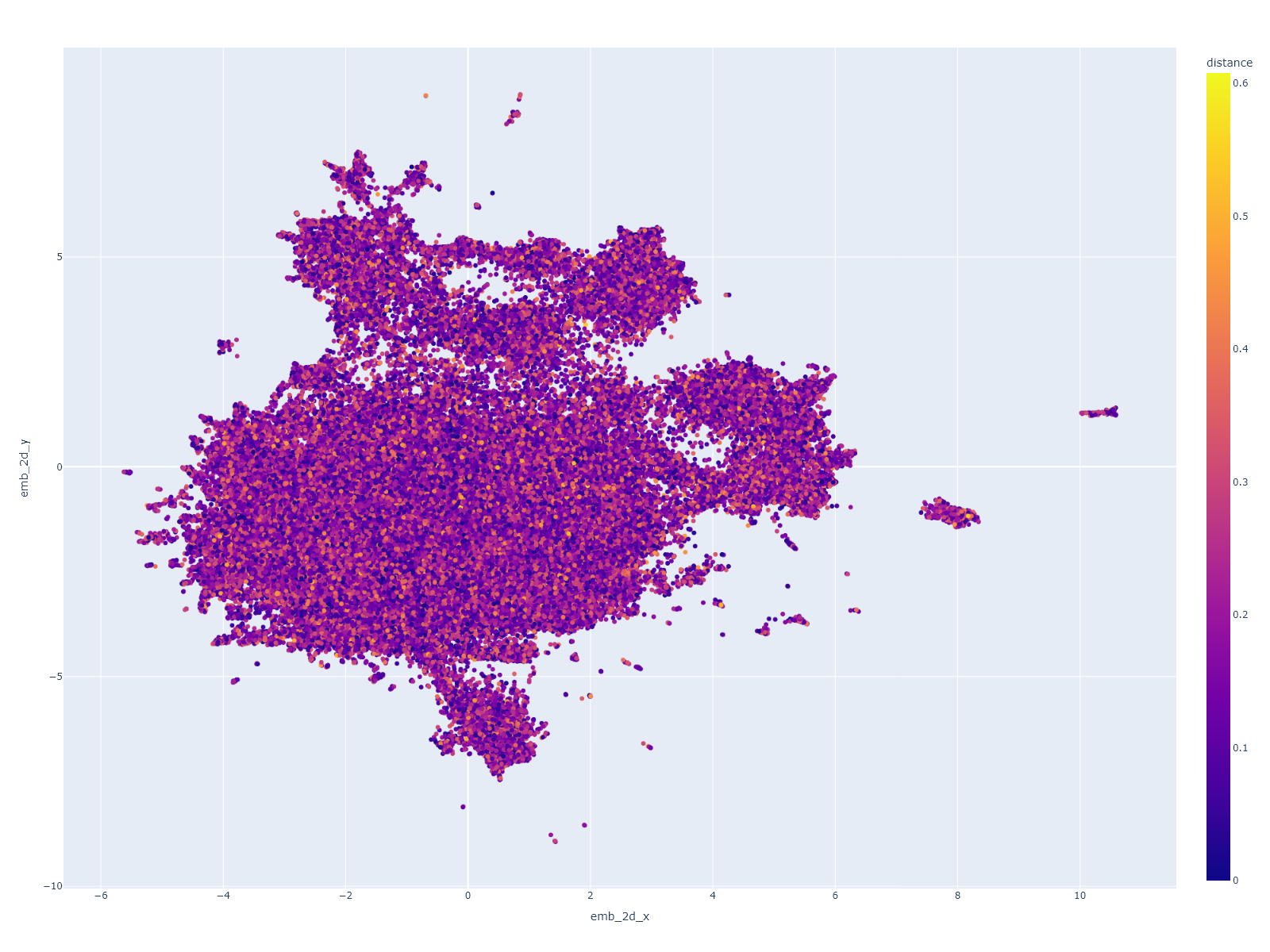

- Visualisation of embeddings

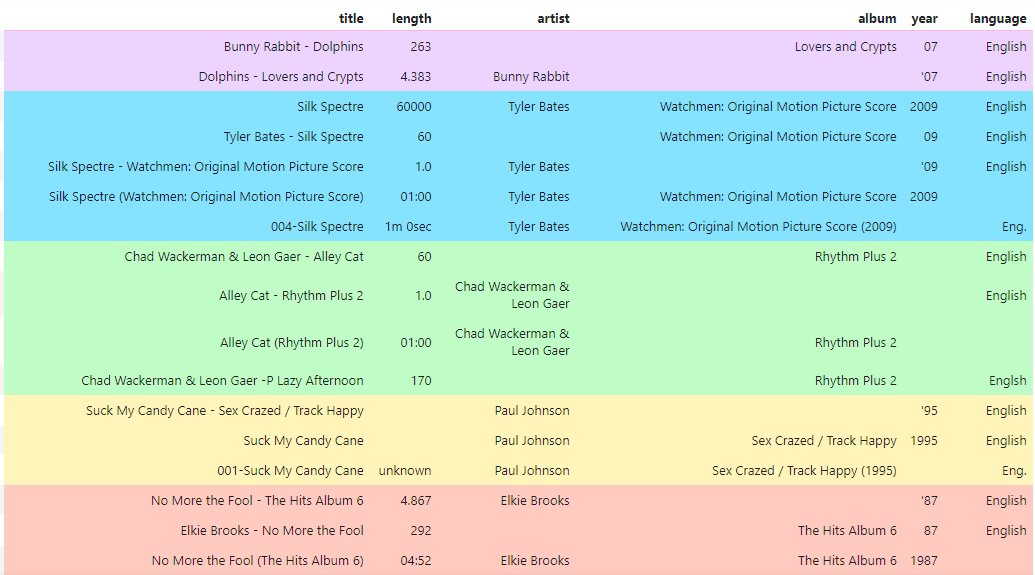

- Visualisation of match groups

- Evaluate results of experiments

- Show results of experiments

The proposed method consists of the following steps:

- Create Embedding Vectors using "Match Sentences"

- Create Faiss Index and identify potential duplicate candidate cluster groups

The embedding step is in the following notebook:

- Create embeddings for Musicbrainz 200K dataset: create_embeddings_200k.ipynb
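As a rough illustration of the "Match Sentences" idea, the sketch below joins selected attributes of a record into a single string that could then be passed to an embedding model. The field names (`title`, `artist`, `album`, `year`), the `build_match_sentence` helper and the sentence template are assumptions made for this example; the notebook may build its sentences differently.

```python
def build_match_sentence(record, fields=("title", "artist", "album", "year")):
    """Join the non-empty values of the chosen fields into one sentence.

    The field list and the "name: value" template are illustrative assumptions.
    """
    parts = []
    for field in fields:
        value = record.get(field)
        if value is None:
            continue
        text = str(value).strip()
        if text:  # skip empty attributes so they add no noise to the sentence
            parts.append(f"{field}: {text}")
    return ", ".join(parts)


# Hypothetical record; the values are placeholders, not rows from the dataset.
example_record = {
    "title": "Example Song",
    "artist": "Example Artist",
    "album": "",
    "year": 1999,
}
match_sentence = build_match_sentence(example_record)
print(match_sentence)
```

Leaving out empty attributes keeps records with missing values from sharing filler text, which could otherwise make unrelated records look similar.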

Use the following notebook to create a Faiss Index and identify potential duplicate candidate cluster groups.

- Create Faiss index and do clustering for Musicbrainz 200K dataset: clustering_200k_with_faiss_index.ipynb
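One possible way to turn nearest-neighbour search results into candidate groups is sketched below. It assumes you already have, for every record, a row of neighbour distances and a row of neighbour positions, in the shape that a Faiss `index.search` call returns. The `group_candidates` helper, the `max_distance` threshold and the union-find grouping are assumptions for illustration and are not necessarily what the notebook does.

```python
def group_candidates(distances, indices, max_distance):
    """Group records that are linked by a neighbour within max_distance.

    distances[i][j] is the distance from record i to its j-th neighbour and
    indices[i][j] is that neighbour's position (-1 means "no neighbour").
    """
    parent = list(range(len(indices)))

    def find(i):
        # Follow parent links to the root, shortening the path on the way.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for row, (row_dists, row_ids) in enumerate(zip(distances, indices)):
        for dist, neighbour in zip(row_dists, row_ids):
            # Skip padding, self-matches and neighbours that are too far away.
            if neighbour < 0 or neighbour == row or dist > max_distance:
                continue
            root_a, root_b = find(row), find(neighbour)
            if root_a != root_b:
                parent[root_b] = root_a

    groups = {}
    for i in range(len(parent)):
        groups.setdefault(find(i), []).append(i)
    # Only groups with more than one member are duplicate candidates.
    return sorted(g for g in groups.values() if len(g) > 1)


# Hypothetical search results for five records with two neighbours each.
distances = [[0.0, 0.1], [0.0, 0.1], [0.0, 0.9], [0.0, 0.2], [0.0, 0.2]]
indices = [[0, 1], [1, 0], [2, 0], [3, 4], [4, 3]]
candidate_groups = group_candidates(distances, indices, max_distance=0.3)
print(candidate_groups)
```

With these made-up inputs, records 0 and 1 form one group and records 3 and 4 another, while record 2 stays on its own because its only neighbour is farther away than the threshold.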

The visualisation of embeddings is in the following notebook:

- Visualise embeddings for Musicbrainz 200K dataset: visualize_embeddings_musicbrainz_200k.ipynb

Only the match groups for the Musicbrainz 200K dataset are visualised since that's the dataset that we ran our main experiments with.

- Visualise match groups for the Musicbrainz 200K clustering: visualize_match_group_results_200k.ipynb

The evaluation of results is in the following notebook:

- Evaluate results for the Musicbrainz 200K experiments: evaluate_200k_clustering.ipynb
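For readers who want a feel for what such an evaluation can involve, here is a minimal sketch of pairwise precision, recall and F1 computed against a set of known duplicate pairs. The `pairwise_scores` helper and the example pairs are hypothetical; the notebook may use different metrics or a different notion of a match.

```python
def pairwise_scores(predicted_pairs, gold_pairs):
    """Return (precision, recall, f1) for predicted duplicate pairs.

    Pairs are treated as unordered, so (1, 2) and (2, 1) count as the same pair.
    """
    predicted = {frozenset(pair) for pair in predicted_pairs}
    gold = {frozenset(pair) for pair in gold_pairs}
    true_positives = len(predicted & gold)

    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical record-id pairs, made up for the example.
predicted = [(1, 2), (3, 4), (5, 6)]
gold = [(2, 1), (3, 4), (7, 8), (9, 10)]
precision, recall, f1 = pairwise_scores(predicted, gold)
print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```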

The experiment results are shown in the following notebook:

- Show experiment results for the Musicbrainz 200K experiments: experimental_results_200k.ipynb

The Duplicate Detection (DuDe) toolkit is a great resource for testing different strategies for different datasets:

https://hpi.de/naumann/projects/data-integration-data-quality-and-data-cleansing/dude.html

To get it to work with the Musicbrainz 200K dataset, I had to transform the data into a format that it could process. I have provided two notebooks that I used to do this. They create a formatted CSV file that you can use as the dataset input, together with a 'goldstandard' dataset that you can use to generate statistics showing how well your strategies are working:

- Convert Musicbrainz 200k: load_and_convert_200k.ipynb

- Create 'goldstandard': create_gold_standard_200k.ipynb
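As a sketch of the idea behind a gold standard, the snippet below derives every pair of record ids that share a cluster id. The column names `TID` (record id) and `CID` (cluster id) and the `gold_standard_pairs` helper are assumptions for illustration; the notebook, and the exact file layout that DuDe expects, may differ.

```python
from collections import defaultdict
from itertools import combinations


def gold_standard_pairs(records, id_field="TID", cluster_field="CID"):
    """List all pairs of record ids that belong to the same cluster."""
    clusters = defaultdict(list)
    for record in records:
        clusters[record[cluster_field]].append(record[id_field])

    pairs = []
    for members in clusters.values():
        # Every unordered pair inside one cluster is a true duplicate pair.
        pairs.extend(combinations(sorted(members), 2))
    return sorted(pairs)


# Hypothetical rows; the ids are placeholders, not values from the dataset.
records = [
    {"TID": 1, "CID": 10},
    {"TID": 2, "CID": 10},
    {"TID": 3, "CID": 11},
    {"TID": 4, "CID": 10},
]
pairs = gold_standard_pairs(records)
print(pairs)
```

Record 3 is alone in its cluster, so it contributes no pairs.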