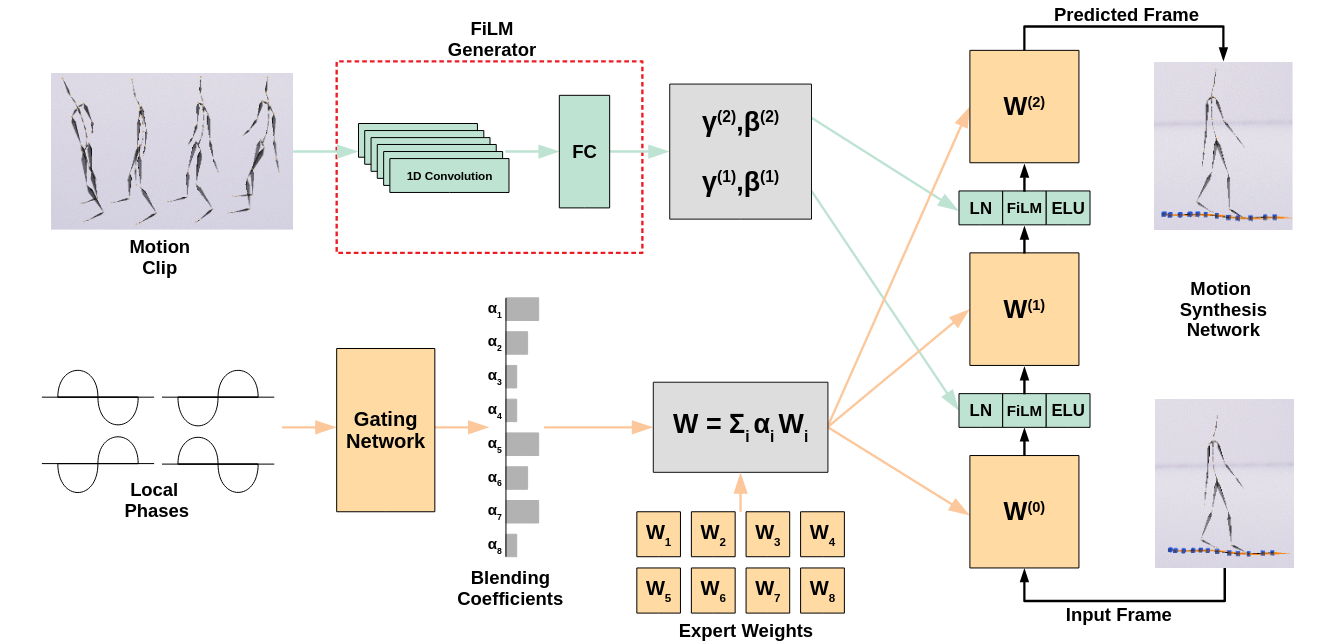

This repository provides skeleton code for the paper Real-Time Style Modelling of Human Locomotion via Feature-Wise Transformations and Local Motion Phases.

Two Unity demos are provided: one showing the data labelling process and another showing the animation results. Additionally, Python code for training a neural network using local motion phases is provided.

This code is meant as a starting point for those looking to go deeply into animation with neural networks. The code herein is uncleaned and somewhat untested, and this repo is not actively supported. You are strongly encouraged to consider the much more active AI4Animation repository instead: the work on DeepPhase supersedes this work, achieving better results with a more general solution.

This code works with the 100STYLE dataset. More information about the dataset can be found here. Processed data labelled with local phases can be found here.

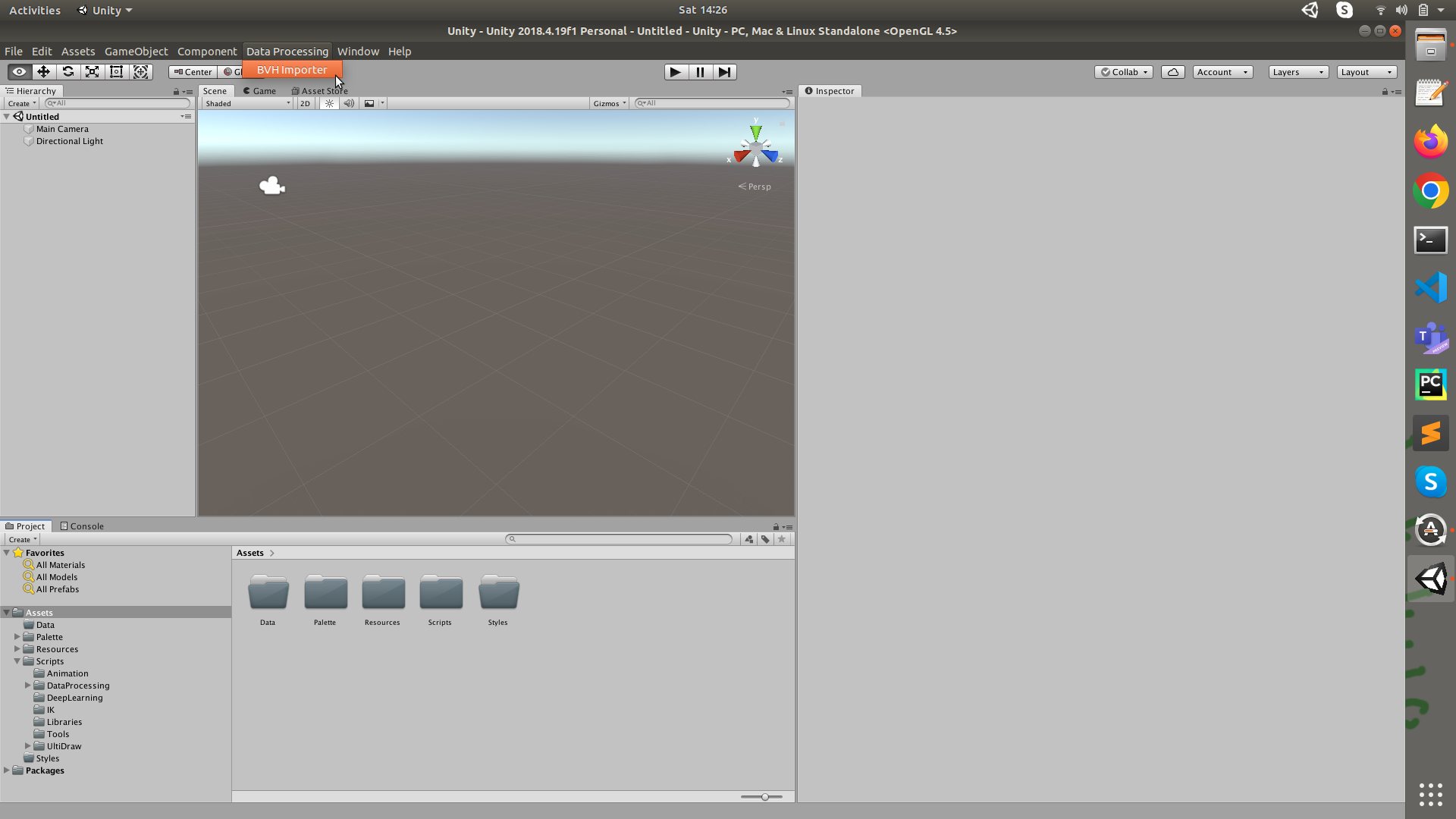

The data labelling demo gives an example of importing, labelling and exporting the BVH file for the Swimming style (forwards walking).

- The demos require Unity. The version used was 2018.4.19f1.

- You may experience issues with Eigen. If so, try navigating to `Plugins/Eigen`, copying the command in `Command` and running it.

- I couldn't figure out how to get the demo working on Mac, and it is untested on Windows. It should work on Linux.

-

Open the Unity project.

-

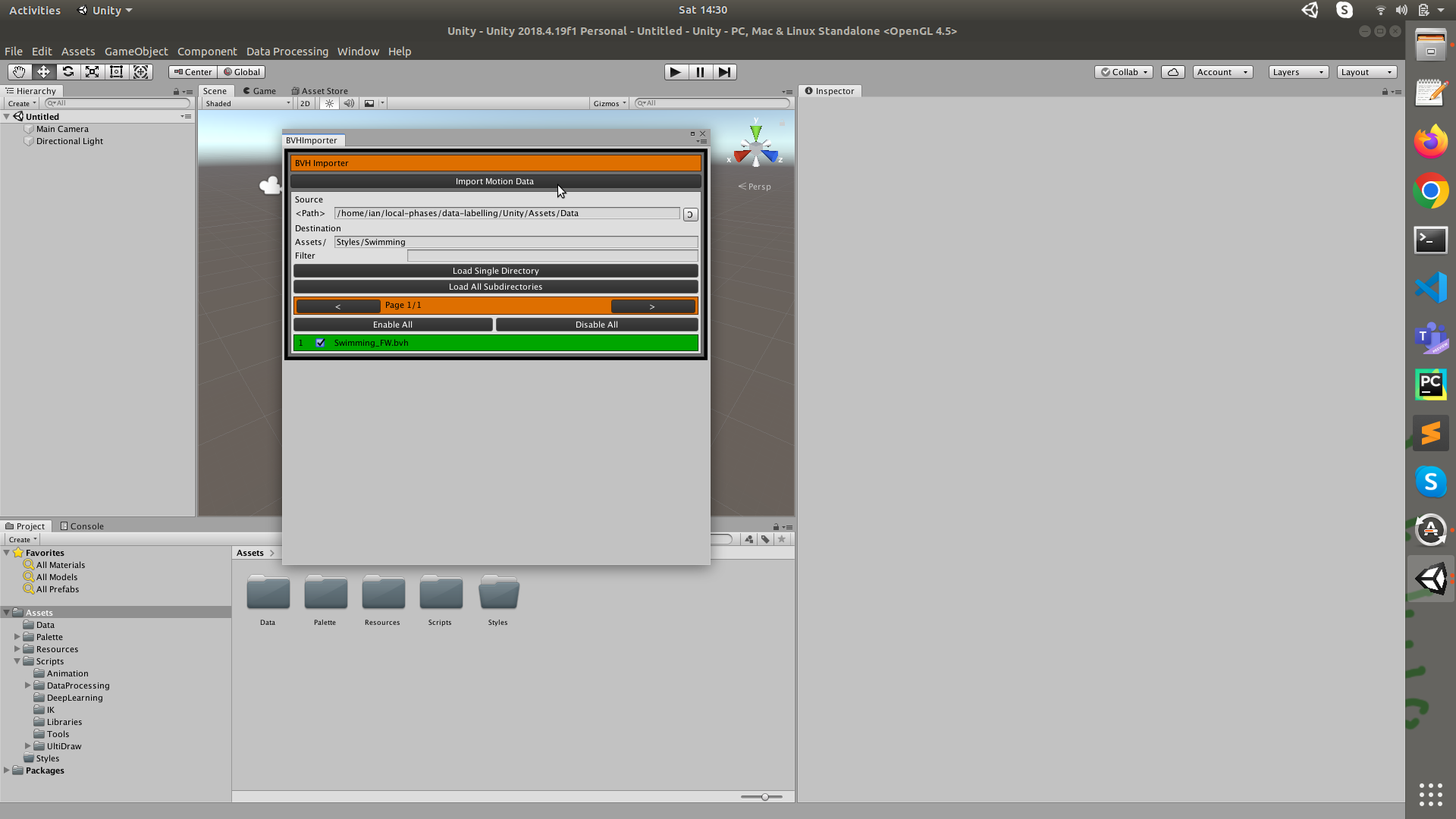

Set the Source to

Assets/Dataand the destination toAssets/Styles/Swimming. (These paths can be changed for custom data.) -

Hit load single directory and click enable all. You should see the file

Swimming_FW.bvhin green. -

Wait for data to import. You should then see

Swimming_FW.bvh.assetinAssets/Styles/Swimming.

-

In

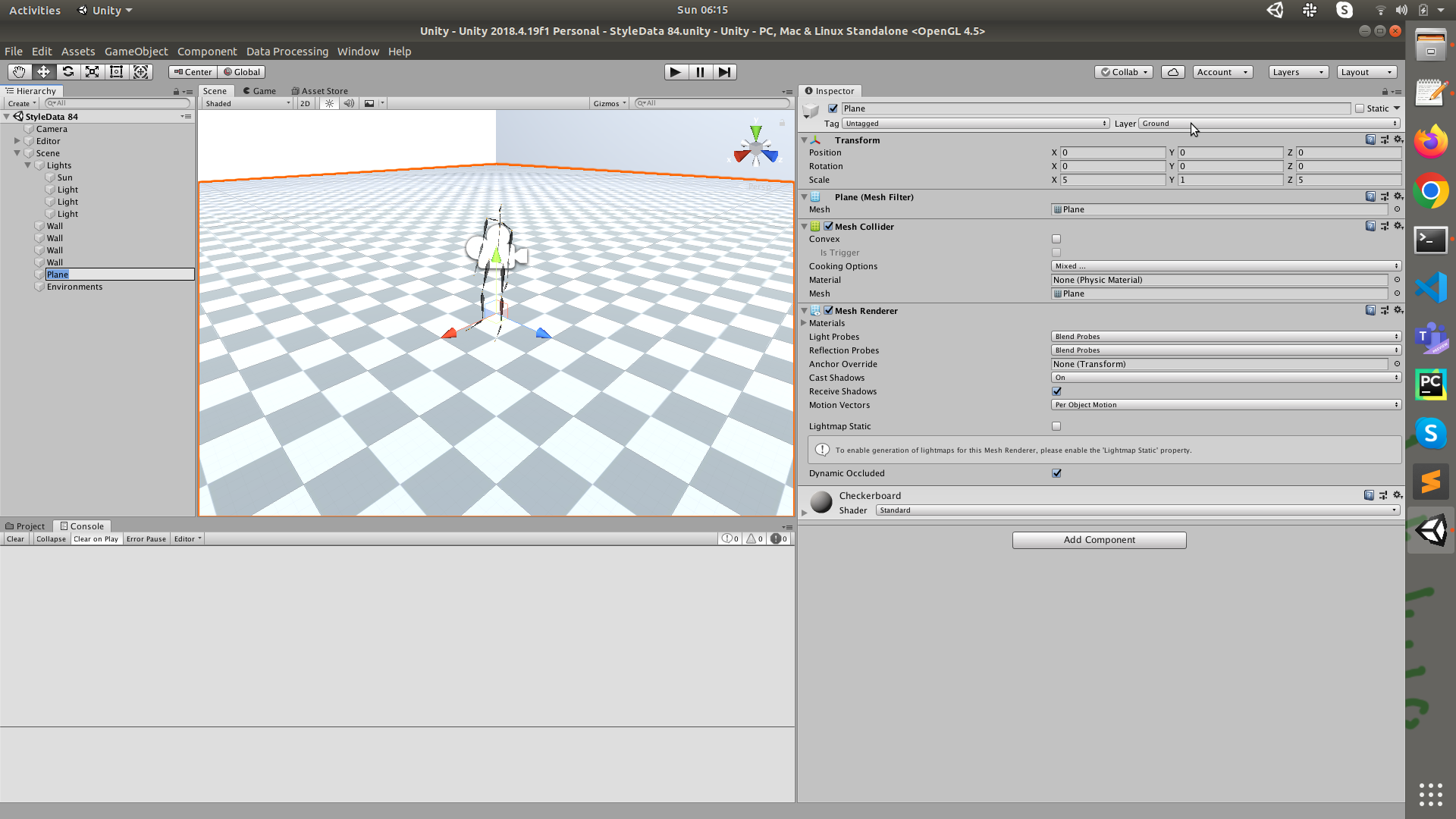

Assets/Styles/Swimmingopen the scene StyleData 84. (If materials and textures are missing for the walls and floor drag and drop from the resources directory. I.e. drag the grey material onto the inspector for the walls and the checkerboard texture onto the plane.) -

For the ground plane in the inspector add a new layer called "Ground" if it is not present (otherwise the trajectory will not project onto the ground).

-

Select the editor and add the component

Motion Editorusing the inspector if it is not present. -

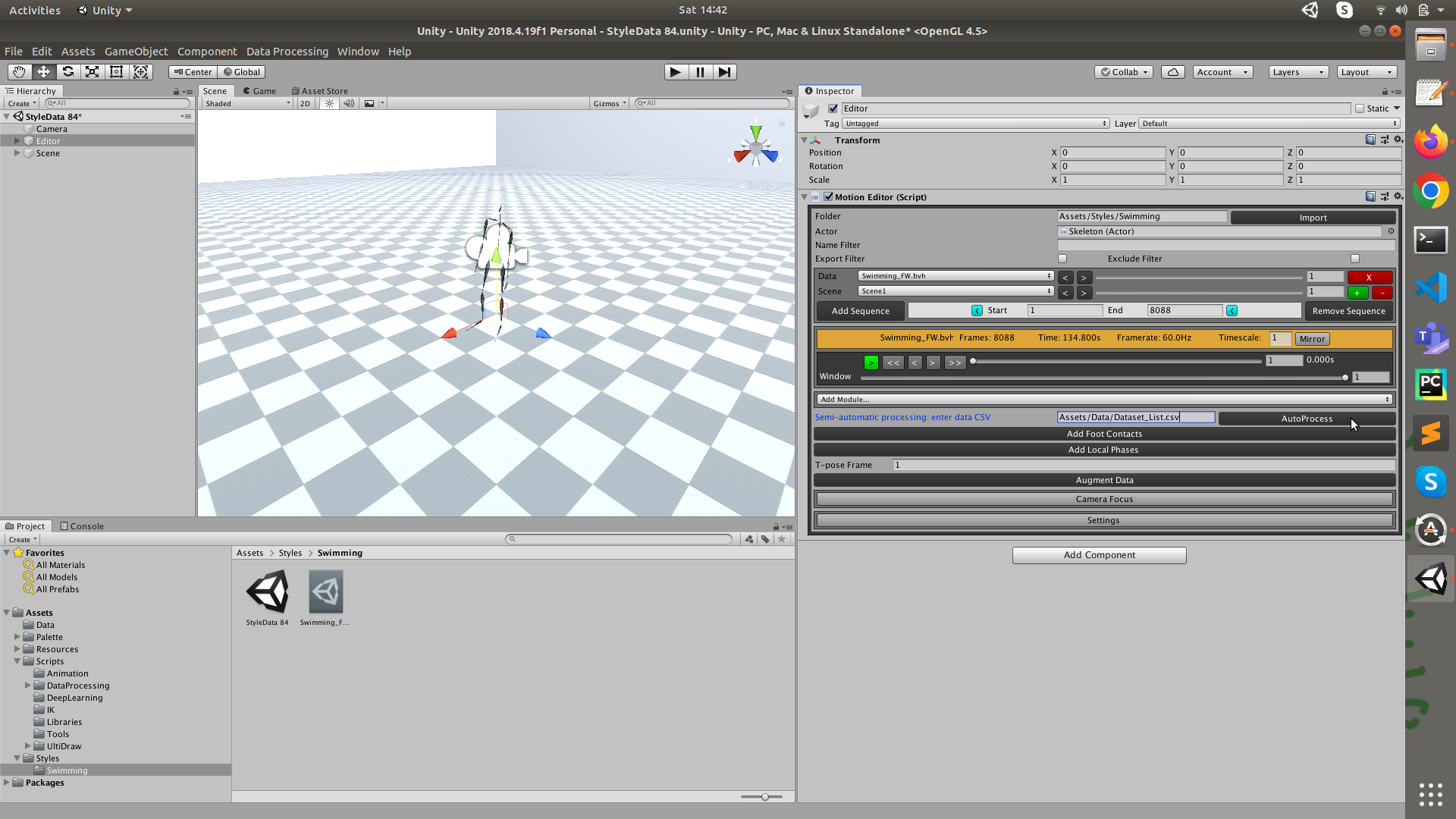

Set the folder to

Assets/Styles/Swimmingand click Import (you can import more than one swimming bvh to this directory and files will appear as a drop down in thedatafield.) -

Set the semi-automatic processing path to

Assets/Data/Dataset_List.csv

- Click AutoProcess and wait for the auto labelling to complete (around 15 seconds). This should add the Trajectory Module, Phase Module, Gait Module and Style Module.

-

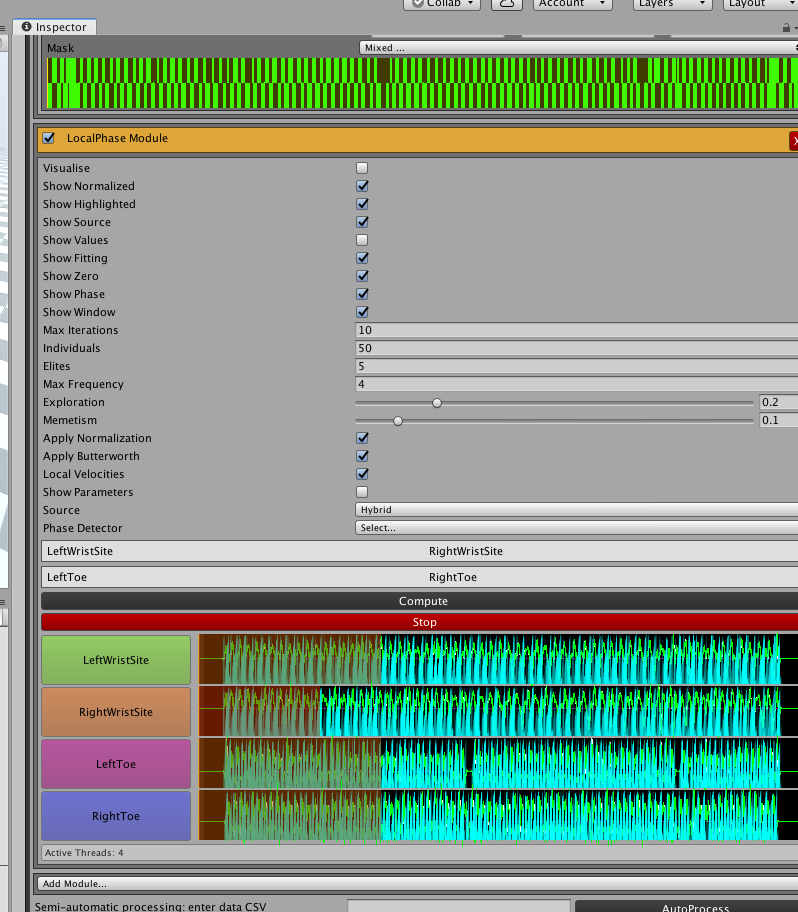

Next press Add Foot Contacts. The default settings should be okay, or you can play around to see if there are better settings. Green bars represent detected foot contacts.

-

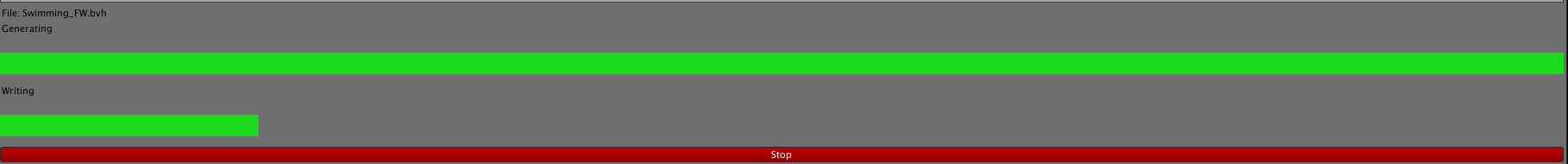

Finally press Add Local phases. This labels local phases using contacts where available and a PCA-heuristic method where not (as described in the paper). Wait for the phase fitting progress bar to get to end.

- In the settings at the bottom of the Motion Editor, tick the export checkbox.

- Data Processing > MotionExporter and Data Processing > MotionClipExporter export in/out frame pairs or multi-frame clips of motion, respectively. Select Data Processing > MotionExporter.

- Set the export directory to the location where you want to export the labelled data.

- Scroll to the bottom and select Export Data From Subdirectories. The data will begin exporting.

- This exports txt files that can be processed further for neural network training. The txts from running this labelling process for all styles in 100STYLE can be found here.
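Before converting the exported txt files further, it can help to load them and check that inputs and outputs line up. The sketch below is a minimal example under assumptions: each file stores one sample per line as whitespace-separated floats, and row i of the input file pairs with row i of the output file. The function name `load_frame_pairs` is hypothetical; check the exporter's output for the actual file names and layout.

```python
import numpy as np


def load_frame_pairs(input_path, output_path):
    """Load exported input/output rows and check that they line up.

    Assumes (hypothetically) one sample per line, stored as whitespace-separated
    floats, with row i of the input file paired with row i of the output file.
    """
    # ndmin=2 keeps a single-row file as shape (1, num_features)
    X = np.loadtxt(input_path, dtype=np.float32, ndmin=2)
    Y = np.loadtxt(output_path, dtype=np.float32, ndmin=2)
    if X.shape[0] != Y.shape[0]:
        raise ValueError(
            f"row count mismatch: {X.shape[0]} inputs vs {Y.shape[0]} outputs"
        )
    return X, Y
```

For example, `X, Y = load_frame_pairs("Input.txt", "Output.txt")`, where both file names are placeholders for whatever MotionExporter wrote to your export directory.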

The animation demo shows results from a neural network trained on data labelled with the process described above.

- Open the Unity project.

- Open the demo scene at `Demo/Style_Demo_25B`. As with the data labelling demo, if textures and materials are not showing correctly, add the textures to the walls and the ground. Additionally, add the Y Bot joints and body textures.

- Add a new layer called "Ground" for the ground plane (as in the data labelling pipeline above).

-

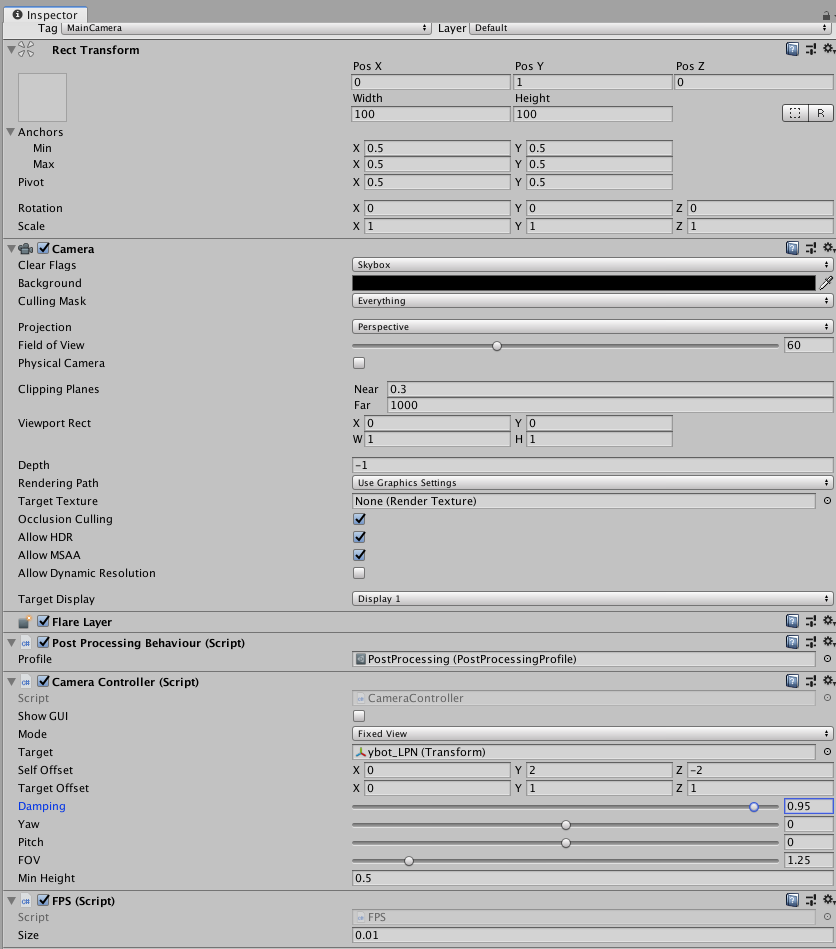

Add any missing scripts to the camera. The scripts on the camera should include

post-processing behvior,camera controllerandfps, configured as shown below.

-

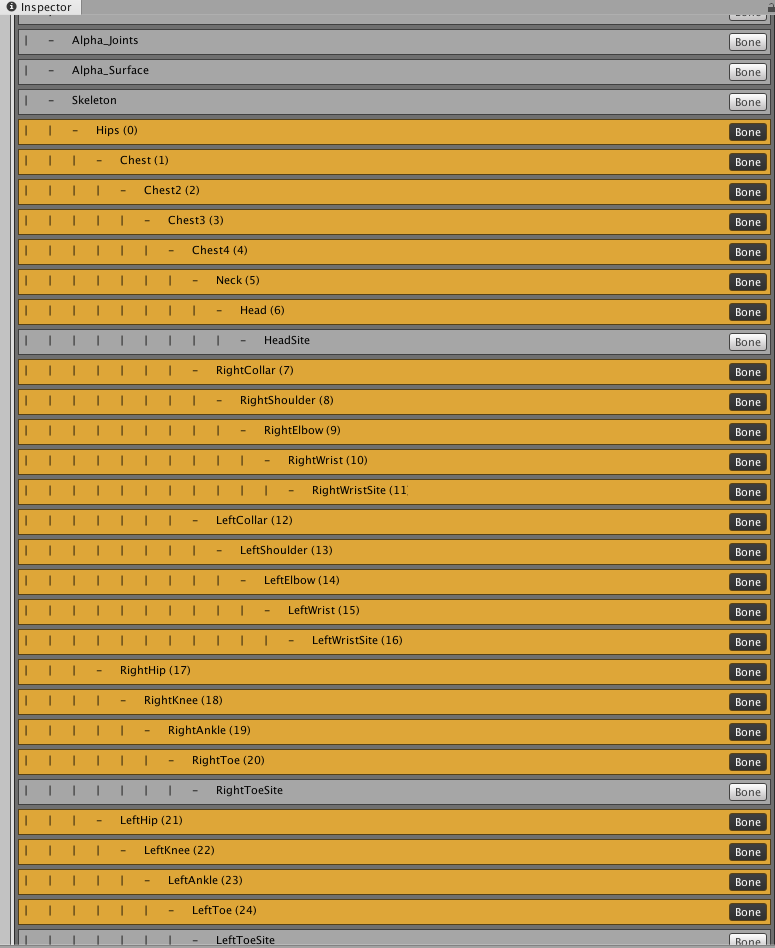

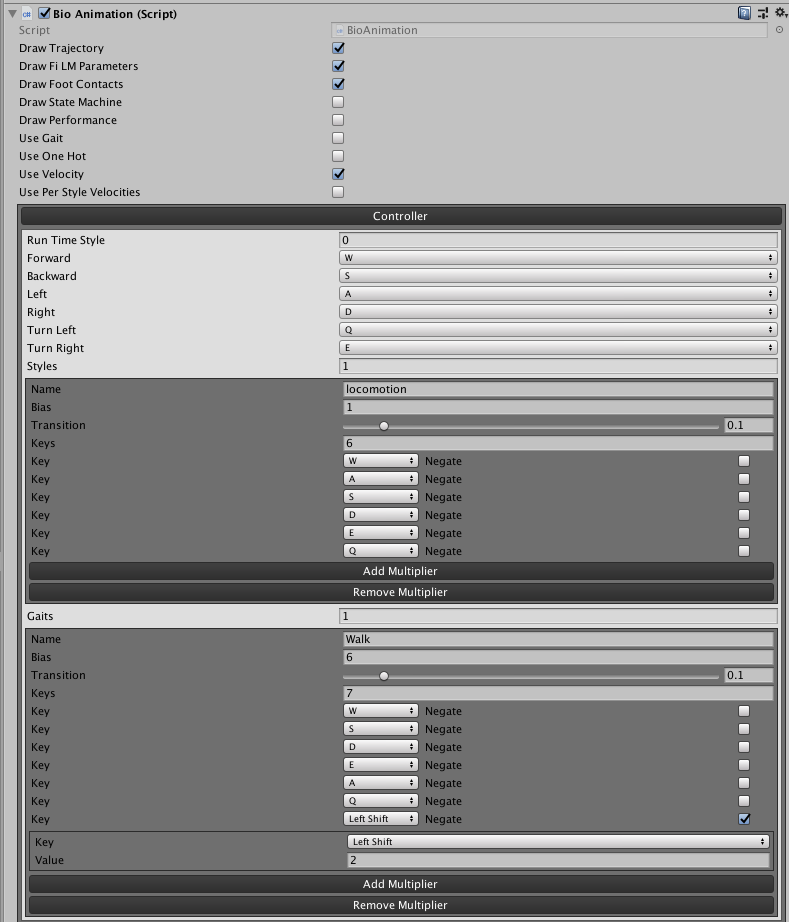

Add any missing scripts to ybot_lpn. The scripts on the character should include

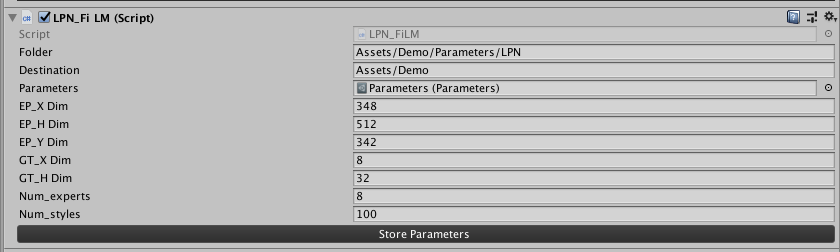

actor,input handler,bio animation, andlpn_film. -

Configure the

lpn_filmscript as shown and click Store Parameters

- Press the play button and the demo should run. If you build a standalone executable (File > Build Settings), the demo will run much faster. You can change the style of motion and interpolate between styles in the bottom left. The character is controlled with the W, A, S, D, E and Q keys and left shift.

- The network parameters were found on an old server; the results mostly seem reasonable, but style 23 seems to have some errors.

- Style 57 is a good example of a motion that would be difficult for methods that extract phases based only on contacts with the ground or objects in the environment.

- Styles 0-94 are used to train both the Gated Experts Network and the FiLM Generator. Styles 95-99 come from finetuning only the FiLM Generator.

- You will likely be able to achieve more robust and more general results with DeepPhase.
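The demo lets you interpolate between styles from the UI in the bottom left, but how it does this internally is not described here. As a purely hypothetical illustration, one simple way to interpolate is to blend the one-hot labels of two styles into a single weight vector; the functions `one_hot` and `blend_styles`, and the idea that the FiLM Generator would consume such a vector, are assumptions rather than the demo's code.

```python
import numpy as np

N_STYLES = 100  # 100STYLE has 100 styles (indices 0-99)


def one_hot(style, n_styles=N_STYLES):
    """One-hot label for a single style index."""
    v = np.zeros(n_styles, dtype=np.float32)
    v[style] = 1.0
    return v


def blend_styles(style_a, style_b, t, n_styles=N_STYLES):
    """Hypothetical: linearly interpolate the one-hot labels of two styles.

    t = 0 gives pure style_a, t = 1 gives pure style_b; the weights always sum to 1.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be in [0, 1]")
    return (1.0 - t) * one_hot(style_a, n_styles) + t * one_hot(style_b, n_styles)
```

Keeping the weights summing to one means the blended label stays on the line segment between the two pure styles, which is the simplest notion of "halfway between" two labels.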

The training code uses TensorFlow 1.x; NumPy and PyYAML are also required. This part of the repo is untested: I have grabbed the files I think are needed but have not tried running the code. If there is a missing import (or a similar trivial error), let me know and I will look for it.

In file names, LPN is short for Local Phase Network and refers to the main system in the paper.
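The LPN is trained as a Gated Experts Network together with a FiLM Generator. To make those two terms concrete, the NumPy sketch below shows a single layer under stated assumptions: a gating output is turned into blending coefficients with a softmax, the experts' weights and biases are blended with those coefficients (a mixture-of-experts scheme in the style of AI4Animation's networks), and the FiLM parameters `gamma` and `beta` for the current style then scale and shift the layer's features before an ELU activation. All names, shapes, the activation and the placement of FiLM are illustrative assumptions, not the repo's implementation.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - z.max())  # subtract max for numerical stability
    return e / e.sum()


def elu(x):
    # np.minimum avoids overflow in exp for large positive inputs
    return np.where(x > 0, x, np.exp(np.minimum(x, 0)) - 1.0)


def gated_film_layer(x, gate_logits, W_experts, b_experts, gamma, beta):
    """One hypothetical layer: blended expert weights followed by FiLM.

    x:           (d_in,)           layer input
    gate_logits: (K,)              gating network output for K experts
    W_experts:   (K, d_out, d_in)  per-expert weight matrices
    b_experts:   (K, d_out)        per-expert biases
    gamma, beta: (d_out,)          FiLM scale and shift for the current style
    """
    alpha = softmax(gate_logits)                # blending coefficients, sum to 1
    W = np.tensordot(alpha, W_experts, axes=1)  # (d_out, d_in) blended weights
    b = alpha @ b_experts                       # (d_out,) blended bias
    h = W @ x + b                               # linear layer with blended params
    h = gamma * h + beta                        # feature-wise linear modulation
    return elu(h)
```

Blending the weights, rather than running every expert and mixing their outputs, leaves a single ordinary layer to evaluate once the coefficients are known, while FiLM adds only a per-feature scale and shift for each style.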

- The training uses the tfrecords file format.

- Labelled data in txts is found here. To convert it to tfrecords, run `frame_tfrecords_noidle.py` and `clip_tfrecords_noidle.py`, which produce tfrecords for frames and clips of motion, respectively.

- Run `Normalize_Data_Subset.py` to get input and output normalization statistics.

- The system is trained by running `LPN_100Style25B_BLL_Train.py`, which uses the config file `LPN_100Style25B_BLL.yml`. You will need to set `data_path` and `stats_path` to point to the extracted tfrecords and normalization statistics directories, and `save_path` to where you want to save the model. If finetuning, also set `ft_model_path`.

- After training, `LPN_Finetuning_Weights.py` saves the gating network and expert weights as binaries for use with Unity.

- `LPN_FiLM_Parameters.py` saves the FiLM parameters as binaries for use with Unity.

- `LPN_get_unity_mean_std.py` saves normalization statistics for use with Unity.

- (Optional) `LPN_test_error.py` calculates the quantitative test error.

- (Optional) `LPN_FiLM_Finetuning.py` finetunes only the FiLM generator on a new style. This uses `LPN_FiLM_Finetuning.yml`, where you will need to set the paths to point to your directories.
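The normalization and Unity export steps in the list above can be sketched as follows. This is a minimal NumPy sketch, not the code of `Normalize_Data_Subset.py` or `LPN_get_unity_mean_std.py`: it computes per-feature mean and standard deviation over a data matrix, replaces near-zero standard deviations with 1 so constant features do not cause a division by zero (an assumption), and writes arrays as raw float32 values with `ndarray.tofile`. The raw float32 layout is also an assumption; check the Unity loading code for the format it actually expects.

```python
import numpy as np


def compute_stats(data, eps=1e-8):
    """Per-feature mean and std over the rows of a (num_samples, num_features) array."""
    mean = data.mean(axis=0)
    std = data.std(axis=0)
    std = np.where(std < eps, 1.0, std)  # constant features: avoid dividing by ~0
    return mean.astype(np.float32), std.astype(np.float32)


def normalize(data, mean, std):
    return (data - mean) / std


def denormalize(data, mean, std):
    return data * std + mean


def save_binary(array, path):
    """Write an array as raw float32 values (assumed format for Unity loading)."""
    np.asarray(array, dtype=np.float32).tofile(path)
```

Inputs are normalized before they reach the network, and network outputs are denormalized with the output statistics, so both sets of statistics need to be available at runtime.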

If you use this code or data in your research, please cite the following:

    @article{mason2022local,
      author = {Mason, Ian and Starke, Sebastian and Komura, Taku},
      title = {Real-Time Style Modelling of Human Locomotion via Feature-Wise Transformations and Local Motion Phases},
      year = {2022},
      publisher = {Association for Computing Machinery},
      address = {New York, NY, USA},
      volume = {5},
      number = {1},
      doi = {10.1145/3522618},
      journal = {Proceedings of the ACM on Computer Graphics and Interactive Techniques},
      month = {may},
      articleno = {6}
    }

The 100STYLE dataset is released under a Creative Commons Attribution 4.0 International License.

Large parts of the code inherit from AI4Animation, which comes with the following restriction: This project is only for research or education purposes, and not freely available for commercial use or redistribution.

The Y Bot character in the demos comes from Adobe Mixamo. At the time of publication, the following applies:

Mixamo is available free for anyone with an Adobe ID and does not require a subscription to Creative Cloud.

The following restrictions apply:

Mixamo is not available for Enterprise and Federated IDs.

Mixamo is not available for users who have a country code from China. The current release of Creative Cloud in China does not include web services.