Paper link: TopFormer: Token Pyramid Transformer for Mobile Semantic Segmentation (CVPR 2022)

by Wenqiang Zhang*, Zilong Huang*, Guozhong Luo, Tao Chen, Xinggang Wang†, Wenyu Liu†, Gang Yu, Chunhua Shen.

(*) equal contribution, (†) corresponding author.

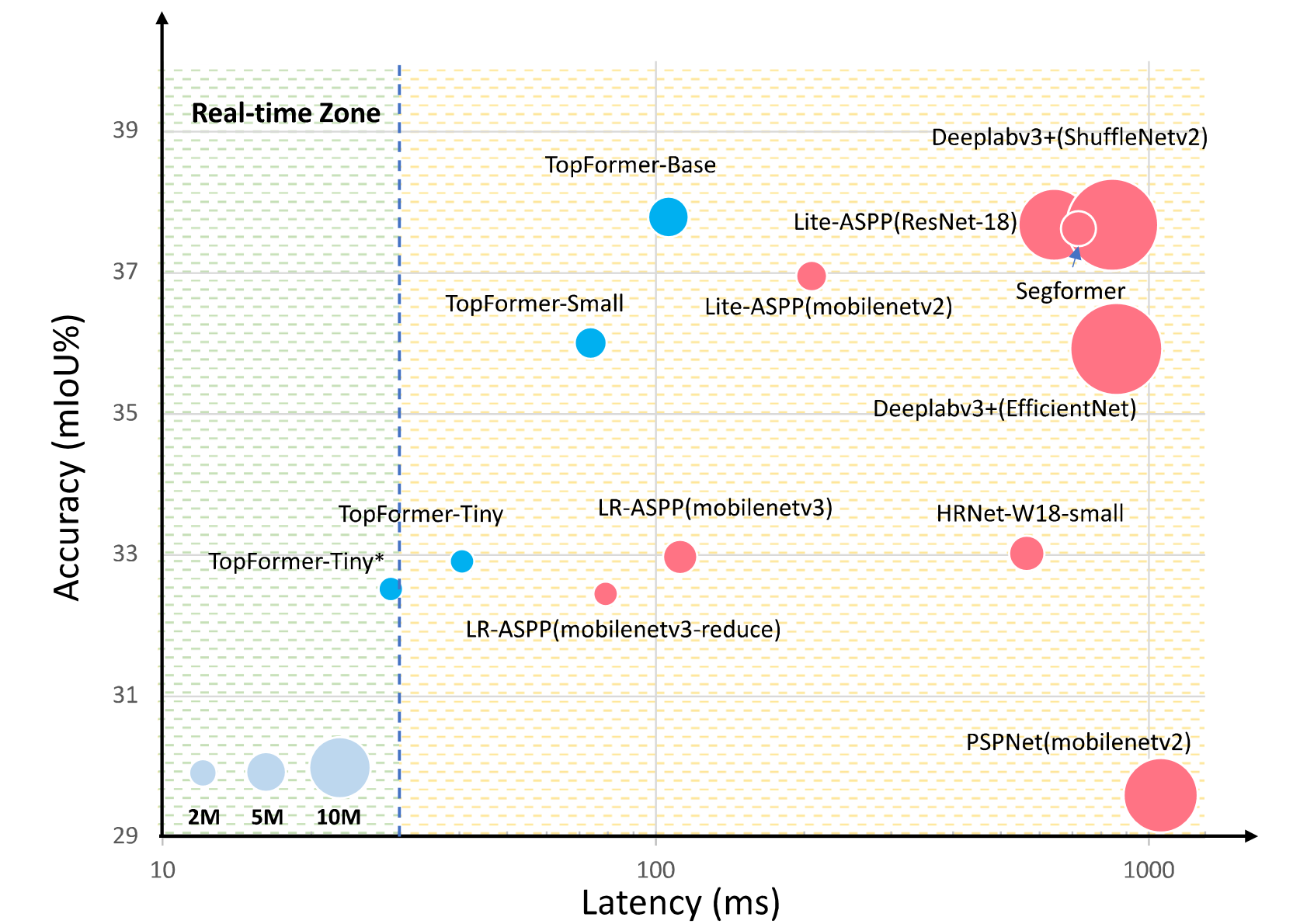

Although vision transformers (ViTs) have achieved great success in computer vision, their heavy computational cost makes them unsuitable for dense prediction tasks such as semantic segmentation on mobile devices. In this paper, we present a mobile-friendly architecture named Token Pyramid Vision TransFormer (TopFormer). TopFormer takes tokens from various scales as input to produce scale-aware semantic features, which are then injected into the corresponding tokens to augment the representation. Experimental results demonstrate that our method significantly outperforms CNN- and ViT-based networks across several semantic segmentation datasets and achieves a good trade-off between accuracy and latency.

Latency is measured on a single Qualcomm Snapdragon 865 with an input size of 512×512×3, using only one ARM CPU core. * indicates an input size of 448×448×3.

Requirements:

- PyTorch 1.5+

- mmcv-full==1.3.14
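As a quick sanity check before training, the sketch below compares version strings against the requirements listed above (PyTorch 1.5 or newer, mmcv-full exactly 1.3.14). It assumes bash and a `sort` that supports `-V` (GNU coreutils); `version_ge` and `check_requirements` are hypothetical helpers, not part of this repository.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helpers): check version strings against the
# README requirements. Assumes bash and a `sort` supporting -V (GNU coreutils).

# Succeeds (returns 0) if version $1 >= version $2 under version ordering.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Usage: check_requirements TORCH_VERSION MMCV_VERSION
# Prints one status line per package; returns 1 if any check fails.
check_requirements() {
  local torch_ver="${1%%+*}"   # drop local build tags such as "+cu102"
  local mmcv_ver="$2"
  local status=0
  if version_ge "$torch_ver" "1.5"; then
    echo "PyTorch $torch_ver: OK"
  else
    echo "PyTorch $torch_ver: need 1.5+"
    status=1
  fi
  if [ "$mmcv_ver" = "1.3.14" ]; then
    echo "mmcv-full $mmcv_ver: OK"
  else
    echo "mmcv-full $mmcv_ver: need exactly 1.3.14"
    status=1
  fi
  return "$status"
}
```

For example, `check_requirements "$(python -c 'import torch; print(torch.__version__)')" "$(python -c 'import mmcv; print(mmcv.__version__)')"` reads the installed versions and exits non-zero if either one does not match.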

Classification models pretrained on ImageNet can be downloaded from Baidu Drive or Google Drive.

ADE20K

| Model | Params (M) | FLOPs (G) | mIoU (ss, %) | Link |
|---|---|---|---|---|
| TopFormer-T_448x448_2x8_160k | 1.4 | 0.5 | 32.5 | Baidu Drive, Google Drive |
| TopFormer-T_448x448_4x8_160k | 1.4 | 0.5 | 33.4 | Baidu Drive, Google Drive |
| TopFormer-T_512x512_2x8_160k | 1.4 | 0.6 | 33.6 | Baidu Drive, Google Drive |
| TopFormer-T_512x512_4x8_160k | 1.4 | 0.6 | 34.6 | Baidu Drive, Google Drive |
| TopFormer-S_512x512_2x8_160k | 3.1 | 1.2 | 36.5 | Baidu Drive, Google Drive |
| TopFormer-S_512x512_4x8_160k | 3.1 | 1.2 | 37.0 | Baidu Drive, Google Drive |
| TopFormer-B_512x512_2x8_160k | 5.1 | 1.8 | 38.3 | Baidu Drive, Google Drive |
| TopFormer-B_512x512_4x8_160k | 5.1 | 1.8 | 39.2 | Baidu Drive, Google Drive |

- ss indicates single-scale.
- The password for Baidu Drive is `topf`.
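The model names in the table pack several training settings into one string. The sketch below splits a name into its fields. It assumes the `GxN` field follows MMSegmentation's config naming convention (G GPUs × N images per GPU), so `2x8` and `4x8` would correspond to total batch sizes of 16 and 32; `decode_model_name` is a hypothetical helper, not part of this repository.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): decode a model name from the ADE20K
# table, e.g. "TopFormer-T_512x512_4x8_160k". ASSUMPTION: the "GxN" field
# means G GPUs x N images per GPU, so the total batch size is G*N.

decode_model_name() {
  local name="$1"
  local variant crop gpu_batch schedule
  # Split on underscores: variant, crop size, GPUs x images/GPU, schedule.
  IFS='_' read -r variant crop gpu_batch schedule <<< "$name"
  local gpus="${gpu_batch%%x*}"      # text before the "x"
  local per_gpu="${gpu_batch##*x}"   # text after the "x"
  echo "variant=${variant#TopFormer-} crop=${crop} gpus=${gpus} imgs_per_gpu=${per_gpu} batch=$((gpus * per_gpu)) iters=${schedule}"
}
```

For example, `decode_model_name TopFormer-T_512x512_4x8_160k` prints `variant=T crop=512x512 gpus=4 imgs_per_gpu=8 batch=32 iters=160k`. Within each `2x8`/`4x8` pair in the table the names differ only in this field, and the `4x8` row reports the higher mIoU in every pair.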

Please see MMSegmentation for dataset preparation.

For training, run:

sh tools/dist_train.sh local_configs/topformer/<config-file> <num-of-gpus-to-use> --work-dir /path/to/save/checkpoint
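If the number of GPUs passed to `dist_train.sh` differs from what a config was designed for, the total batch size changes too, assuming MMSegmentation's naming convention in which a `GxN` token means G GPUs × N images per GPU. The sketch below derives the GPU count from such a token in the config file name and builds the training command. `train_cmd` and `DRY_RUN` are hypothetical, and the sketch assumes the config file name actually contains a token like `_2x8_`, which may not hold for every file in `local_configs/topformer/`.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): build the dist_train.sh command with
# the GPU count G taken from a "_GxN_" token in the config file name.
# ASSUMPTION: G and N have at most two digits, so crop tokens such as
# "_512x512_" are not matched. DRY_RUN=1 (default) only prints the command.

train_cmd() {
  local config="$1" work_dir="$2"
  local gpus
  gpus="$(basename "$config" .py | sed -nE 's/.*_([0-9]{1,2})x[0-9]{1,2}(_|$).*/\1/p')"
  if [ -z "$gpus" ]; then
    echo "error: no _GxN_ token found in '$config'" >&2
    return 1
  fi
  local cmd=(sh tools/dist_train.sh "$config" "$gpus" --work-dir "$work_dir")
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "${cmd[*]}"   # print only
  else
    "${cmd[@]}"        # launch training (run from the repository root)
  fi
}
```

With the default `DRY_RUN=1` the function only prints the command, which makes it easy to review the GPU count before setting `DRY_RUN=0` to launch training.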

To evaluate, run:

sh tools/dist_test.sh local_configs/topformer/<config-file> <checkpoint-path> <num-of-gpus-to-use>

To test inference speed on a mobile device, please refer to tnn_runtime.

The implementation is based on MMSegmentation.

If you find our work helpful in your experiments, please cite:

@inproceedings{zhang2022topformer,
  title     = {TopFormer: Token Pyramid Transformer for Mobile Semantic Segmentation},
  author    = {Zhang, Wenqiang and Huang, Zilong and Luo, Guozhong and Chen, Tao and Wang, Xinggang and Liu, Wenyu and Yu, Gang and Shen, Chunhua},
  booktitle = {Proc. IEEE Conf. Computer Vision and Pattern Recognition (CVPR)},
  year      = {2022}
}