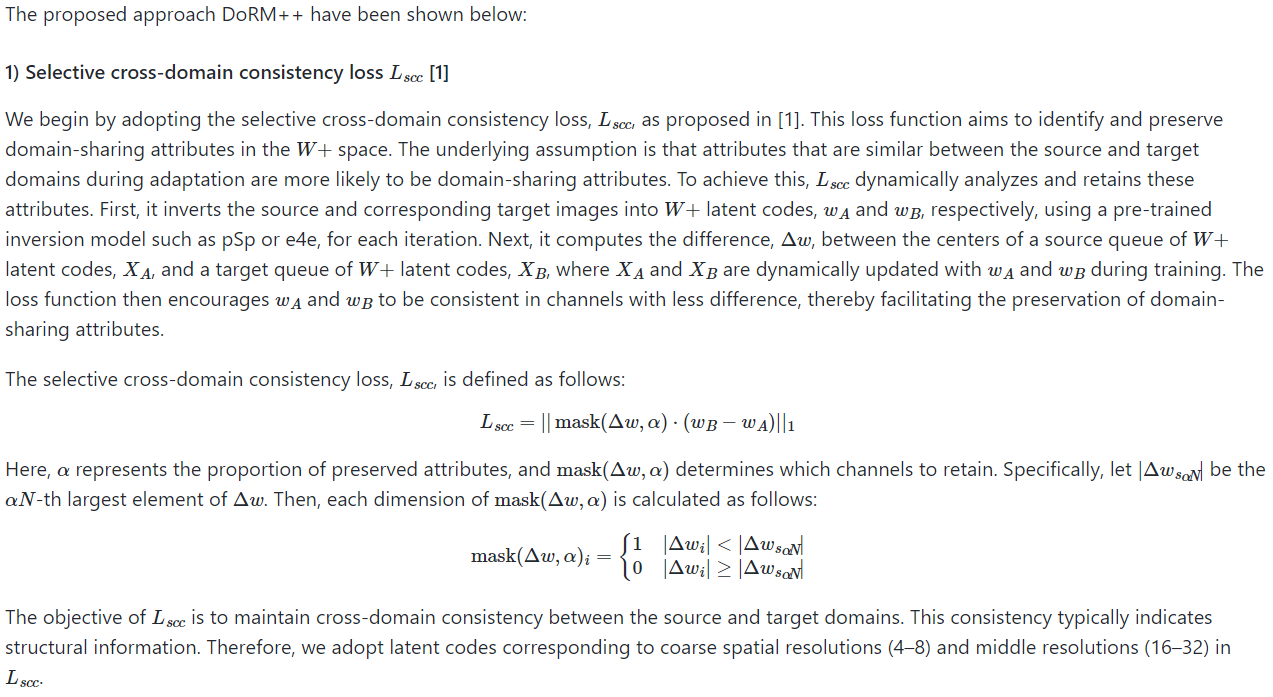

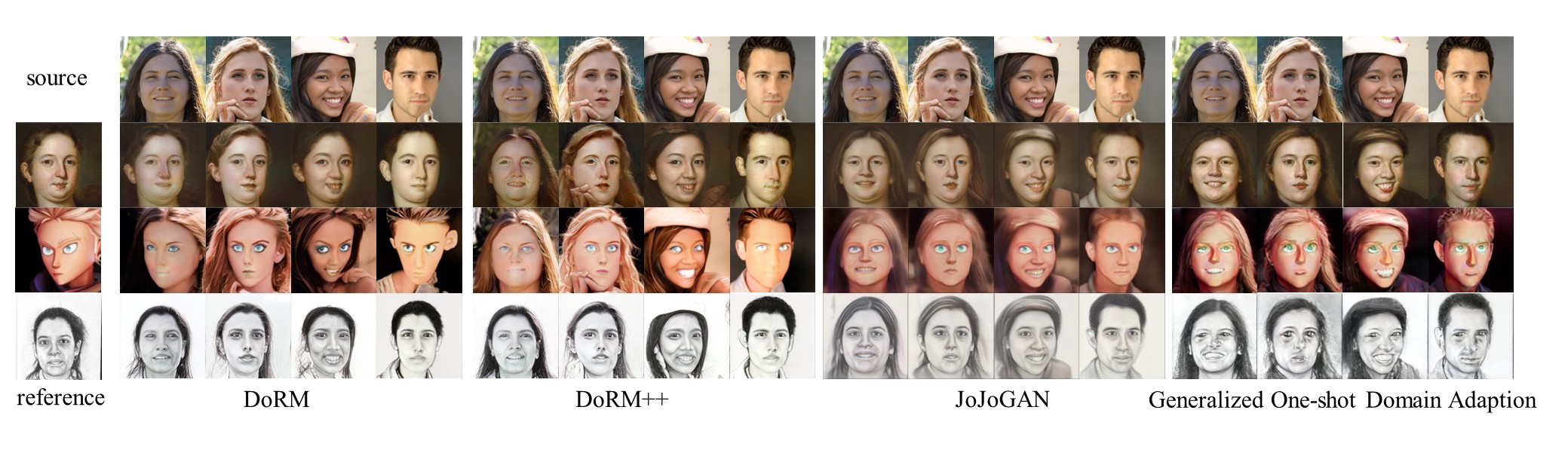

In this section, we present qualitative results of DoRM and DoRM++ on 10-shot GDA. Note that SVG (Scalable Vector Graphics) versions of the images are available in the corresponding PDF file.

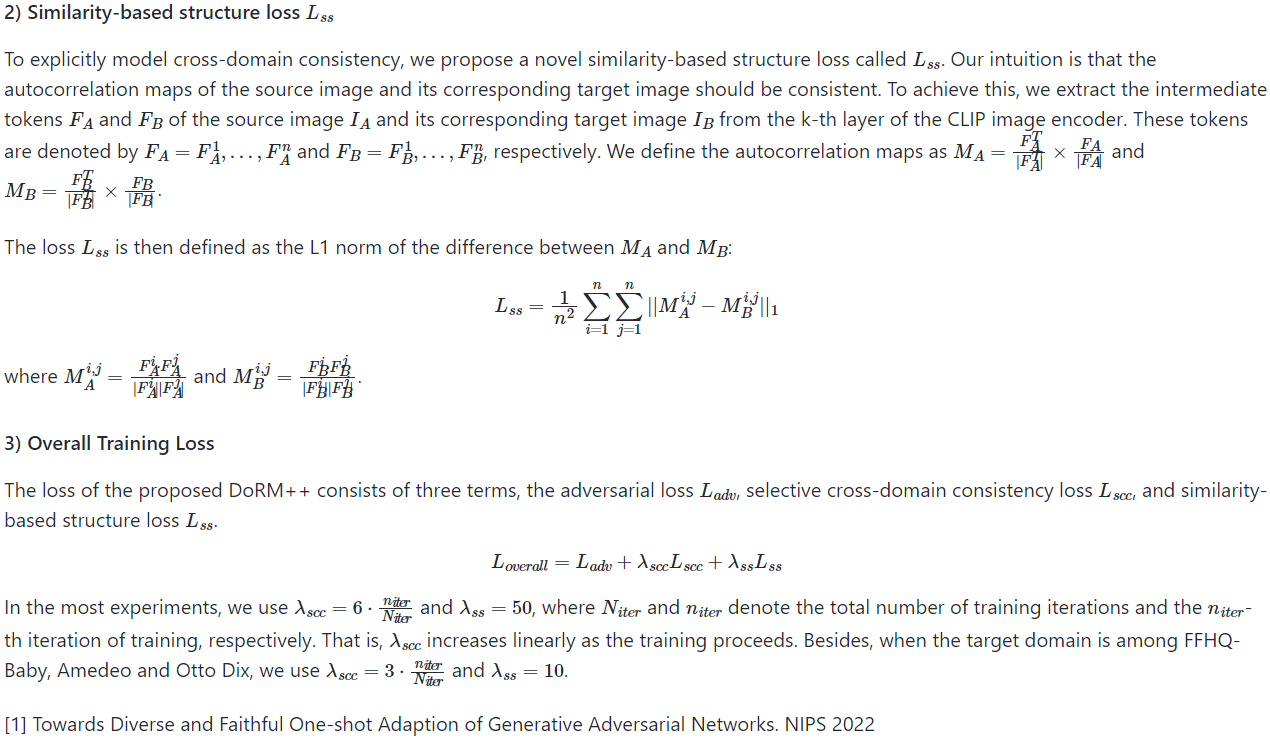

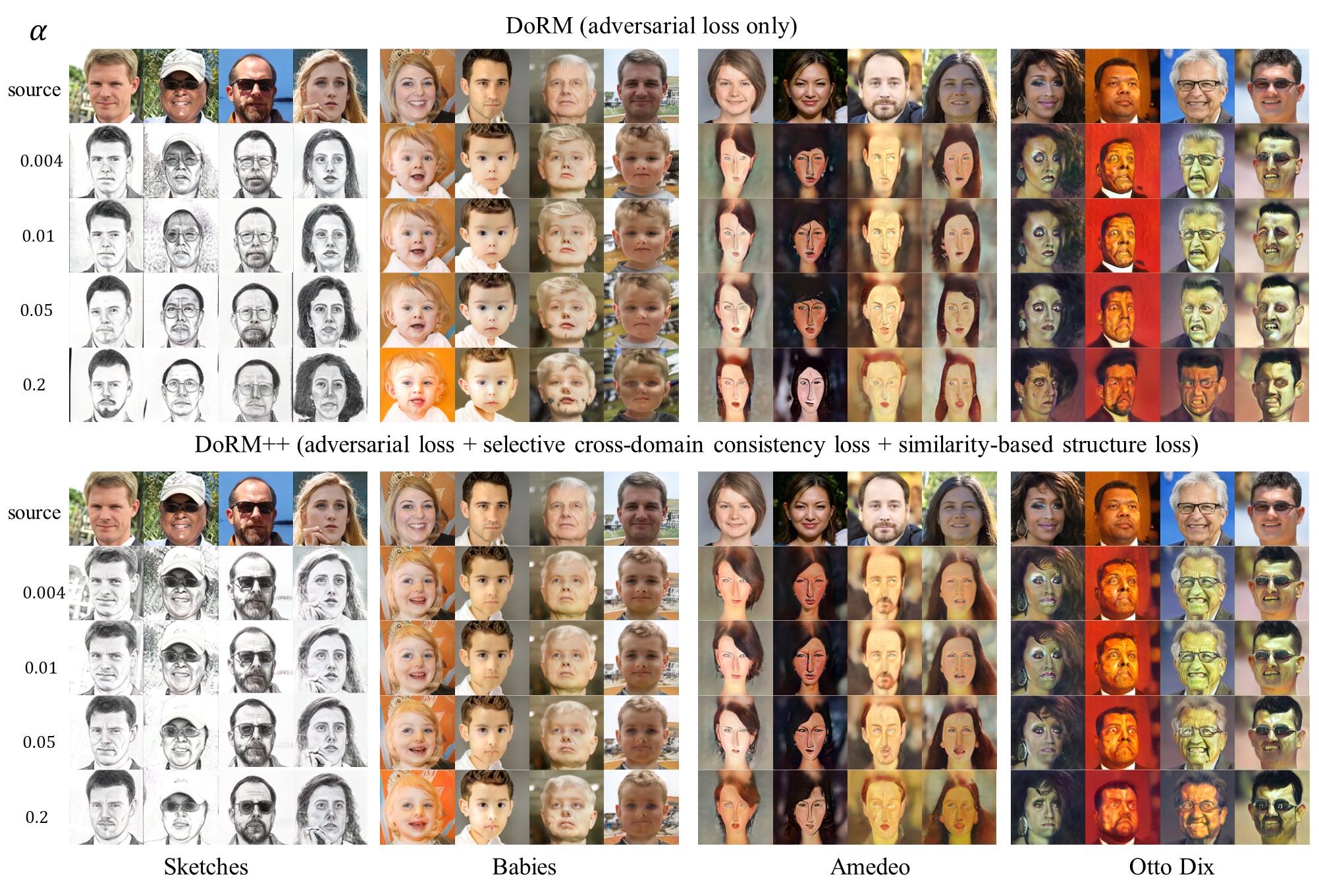

As illustrated in the figures, the following weaknesses of DoRM raised by the reviewers can be addressed by adjusting the hyperparameter:

- Due to its limited adaptation capability, DoRM cannot handle hard tasks, e.g., FFHQ → Amedeo Painting and FFHQ → Sketch.

- The sketches generated by DoRM contain background content, which makes the results look inferior to those of other methods.

- Unnatural blur and artifacts appear in many GDA samples, e.g., FFHQ → Babies.

Additionally, the proposed DoRM++ reduces sensitivity to the hyperparameters.

As illustrated in the figures, we can also address these weaknesses of DoRM raised by the reviewers by adjusting the hyperparameter.

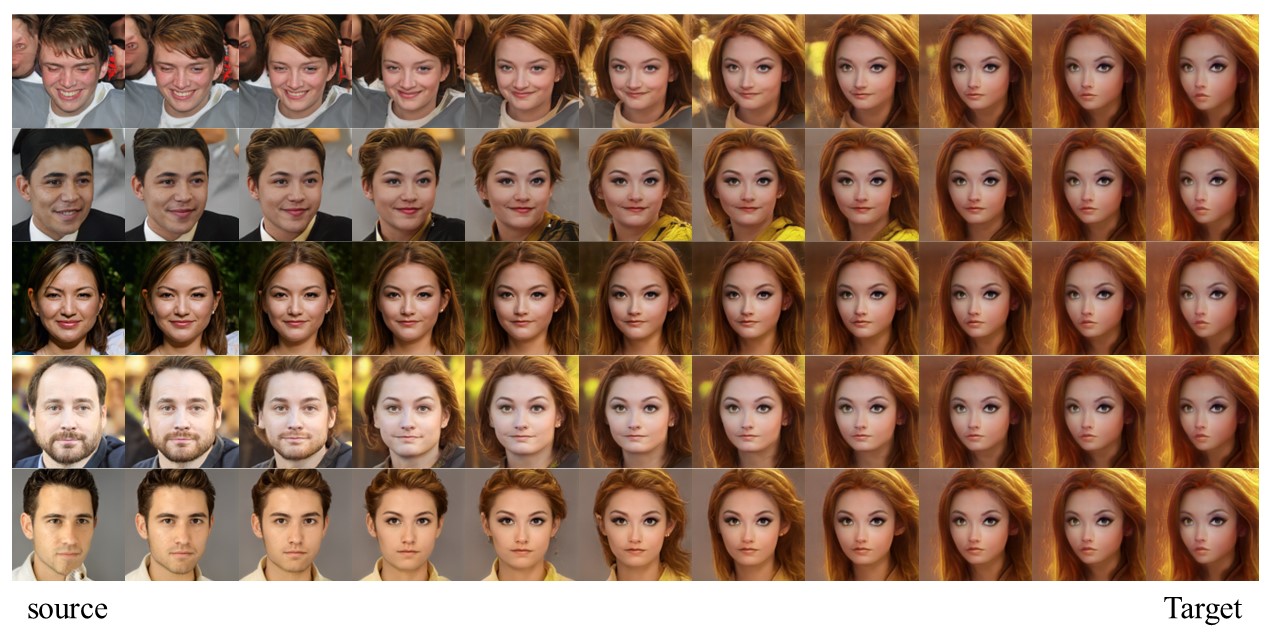

Response to Reviewer ypeq Q4: Qualitative results of the interpolation between source and target domains.

Following Reviewer ypeq's suggestion, we invert the target samples back into the latent space and interpolate the inverted latent codes with those of the source samples. As illustrated in the figure, this interpolation does not produce results similar to those of DoRM, which demonstrates that DoRM does not simply perform latent code interpolation.
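To make the interpolation baseline concrete, a minimal sketch of linear latent-code interpolation is given below. It assumes latent codes are plain fixed-length vectors and that the target code has already been obtained by some GAN inversion method; the helpers `lerp_latents` and `interpolation_path` and the toy vectors are hypothetical and are not part of DoRM's code. Inversion and image synthesis are omitted.

```python
from typing import List, Sequence


def lerp_latents(w_src: Sequence[float], w_tgt: Sequence[float], alpha: float) -> List[float]:
    """Hypothetical helper: linear interpolation (1 - alpha) * w_src + alpha * w_tgt."""
    if len(w_src) != len(w_tgt):
        raise ValueError("latent codes must have the same dimensionality")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return [(1.0 - alpha) * s + alpha * t for s, t in zip(w_src, w_tgt)]


def interpolation_path(w_src: Sequence[float], w_tgt: Sequence[float], steps: int) -> List[List[float]]:
    """Return `steps` evenly spaced codes from w_src (alpha = 0) to w_tgt (alpha = 1)."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [lerp_latents(w_src, w_tgt, i / (steps - 1)) for i in range(steps)]


if __name__ == "__main__":
    # Toy 3-D latent codes; values are illustrative only (assumption).
    w_source = [0.0, 1.0, 2.0]
    w_target = [1.0, 1.0, 0.0]  # stands in for an inverted target sample
    for w in interpolation_path(w_source, w_target, steps=3):
        print(w)
```

In the experiment described above, each interpolated code would then be fed to the generator to synthesize an image; this sketch stops at the latent codes.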