This is a collection of papers and other resources for scalable automated alignment, which corresponds to the survey paper Towards Scalable Automated Alignment of LLMs: A Survey. We will update the survey content and this repo regularly, and we very much welcome suggestions of any kind.

[September 4, 2024] We release the third version of the paper, adding more recent papers. Check it out on arXiv and OSS!

[August 1, 2024] We will present a tutorial on this paper at CCL 2024 and CIPS ATT 2024!

[July 17, 2024] We have uploaded the second version of the paper, which refines the wording for clarity and coherence and incorporates recent literature. Check it out on arXiv and OSS!

[June 5, 2024] Many thanks to elvis@X and DAIR.AI for sharing our work!

[June 4, 2024] The first preprint version of this survey is released. Since many of the topics covered in this paper are rapidly evolving, we anticipate updating this repository and paper at a relatively fast pace.

If you find our survey or repo useful, please consider citing:

@article{AutomatedAlignmentSurvey,
  title={Towards Scalable Automated Alignment of LLMs: A Survey},
  author={Cao, Boxi and Lu, Keming and Lu, Xinyu and Chen, Jiawei and Ren, Mengjie and Xiang, Hao and Liu, Peilin and Lu, Yaojie and He, Ben and Han, Xianpei and Sun, Le and Lin, Hongyu and Yu, Bowen},
  journal={arXiv preprint arXiv:2406.01252},
  url={https://arxiv.org/abs/2406.01252},
  year={2024}
}

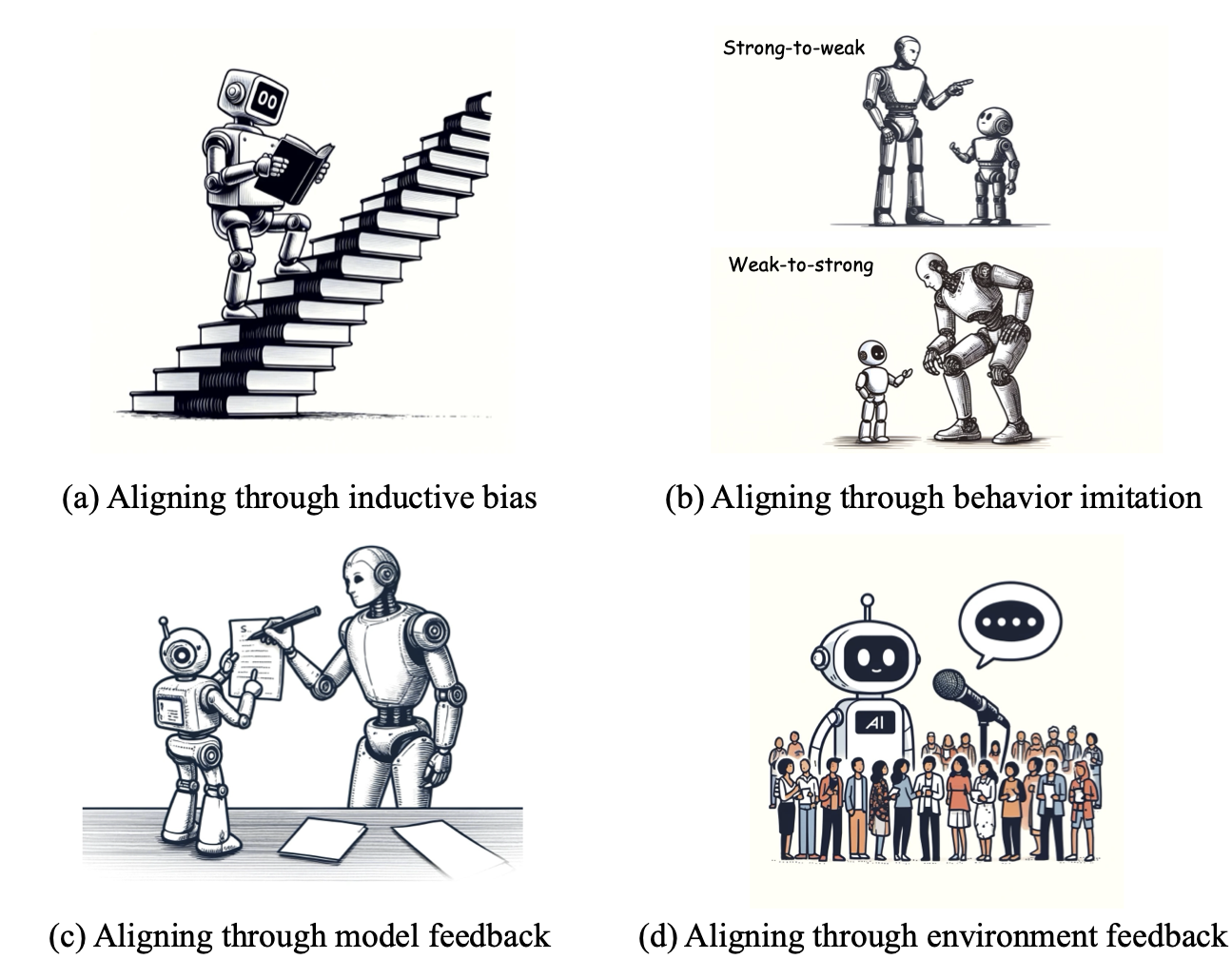

Alignment is the most critical step in building large language models (LLMs) that meet human needs. As LLMs rapidly develop and gradually surpass human capabilities, traditional alignment methods based on human annotation are increasingly unable to meet scalability demands. There is therefore an urgent need to explore new sources of automated alignment signals and new technical approaches. In this paper, we systematically review recently emerging methods of automated alignment and explore how to achieve effective, scalable, automated alignment once the capabilities of LLMs exceed those of humans. Specifically, we group existing automated alignment methods into four major categories based on the source of the alignment signal, and discuss the current status and potential development of each category. We also explore the underlying mechanisms that enable automated alignment and, starting from the fundamental role of alignment, discuss the essential factors that make automated alignment technologies feasible and effective.

- Semi-supervised Learning by Entropy Minimization. Yves Grandvalet et al. NIPS 2004. [paper]
- Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. Lee et al. Workshop on challenges in representation learning, ICML 2013. [paper]
- Revisiting Self-Training for Neural Sequence Generation. Junxian He et al. ICLR 2020. [paper]
- Self-Consistency Improves Chain of Thought Reasoning in Language Models. Wang et al. ICLR 2023. [paper]
- MoT: Memory-of-Thought Enables ChatGPT to Self-Improve. Li et al. EMNLP 2023. [paper]
- Large Language Models Can Self-Improve. Huang et al. EMNLP 2023. [paper]
- West-of-N: Synthetic Preference Generation for Improved Reward Modeling. Pace et al. arXiv 2024. [paper]
- Quiet-STaR: Language models can teach themselves to think before speaking. Zelikman et al. arXiv 2024. [paper]
- Constitutional AI: Harmlessness from AI feedback. Bai et al. arXiv 2022. [paper]
- Tree of Thoughts: Deliberate Problem Solving with Large Language Models. Yao et al. NeurIPS 2023. [paper] [code] [video]
- Large Language Models are reasoners with Self-Verification. Weng et al. Findings of EMNLP 2023. [paper] [code]
- Self-rewarding language models. Yuan et al. arXiv 2024. [paper]
- Direct language model alignment from online AI feedback. Guo et al. arXiv 2024. [paper]
- A general language assistant as a laboratory for alignment. Askell et al. arXiv 2021. [paper]
- Llama 2: Open Foundation and Fine-Tuned Chat Models. Hugo Touvron et al. arXiv 2023. [paper] [model]
- The Llama 3 Herd of Models. Dubey et al. arXiv 2024. [paper]
- Principle-Driven Self-Alignment of Language Models from Scratch with Minimal Human Supervision. Sun et al. NeurIPS 2023. [paper]
- Propagating Knowledge Updates to LMs Through Distillation. Shankar Padmanabhan et al. NeurIPS 2023. [paper]
- RLCD: Reinforcement Learning from Contrastive Distillation for LM Alignment. Kevin Yang et al. ICLR 2024. [paper]
- The Unlocking Spell on Base LLMs: Rethinking Alignment via In-Context Learning. Lin et al. ICLR 2024. [paper]
- Supervising strong learners by amplifying weak experts. Christiano et al. arXiv 2018. [paper]
- Recursively summarizing books with human feedback. Wu et al. arXiv 2021. [paper]
- Least-to-Most Prompting Enables Complex Reasoning in Large Language Models. Denny Zhou et al. ICLR 2023. [paper]
- Chain-of-Thought Reasoning is a Policy Improvement Operator. Hugh Zhang et al. NeurIPS 2023 Workshop on Instruction Tuning and Instruction Following. [paper]
- Plan-and-Solve Prompting: Improving Zero-Shot Chain-of-Thought Reasoning by Large Language Models. Wang et al. ACL 2023. [paper]
- Learning task decomposition to assist humans in competitive programming. Wen et al. ACL 2024. [paper]
- A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play. Silver et al. Science 2018. [paper]
- The Consensus Game: Language Model Generation via Equilibrium Search. Athul Paul Jacob et al. ICLR 2024. [paper]
- Adversarial Preference Optimization: Enhancing Your Alignment via RM-LLM Game. Cheng et al. ACL 2024. [paper]
- Self-play fine-tuning converts weak language models to strong language models. Chen et al. arXiv 2024. [paper]
- AI safety via debate. Irving et al. arXiv 2018. [paper]
- Scalable AI safety via doubly-efficient debate. Brown-Cohen et al. arXiv 2023. [paper]
- Improving language model negotiation with self-play and in-context learning from AI feedback. Fu et al. arXiv 2023. [paper]
- Debating with More Persuasive LLMs Leads to More Truthful Answers. Khan et al. arXiv 2024. [paper]
- Self-playing Adversarial Language Game Enhances LLM Reasoning. Cheng et al. arXiv 2024. [paper]
- Red teaming game: A game-theoretic framework for red teaming language models. Ma et al. arXiv 2023. [paper]
- Toward optimal LLM alignments using two-player games. Zheng et al. arXiv 2024. [paper]
- Prover-Verifier Games improve legibility of language model outputs. Kirchner et al. arXiv 2024. [blog] [paper]

- Unnatural Instructions: Tuning Language Models with (Almost) No Human Labor. Honovich et al. ACL 2023. [paper]
- Stanford Alpaca: An Instruction-following LLaMA model. Rohan Taori et al. GitHub repository 2023. [code]
- Self-Instruct: Aligning Language Models with Self-Generated Instructions. Wang et al. ACL 2023. [paper]
- Dynosaur: A Dynamic Growth Paradigm for Instruction-Tuning Data Curation. Yin et al. EMNLP 2023. [paper]
- Human-Instruction-Free LLM Self-Alignment with Limited Samples. Hongyi Guo et al. arXiv 2024. [paper]
- LLM2LLM: Boosting LLMs with Novel Iterative Data Enhancement. Nicholas Lee et al. arXiv 2024. [paper]
- LaMini-LM: A Diverse Herd of Distilled Models from Large-Scale Instructions. Wu et al. EACL 2024. [paper]
- WizardLM: Empowering Large Pre-Trained Language Models to Follow Complex Instructions. Can Xu et al. ICLR 2024. [paper]
- Teaching LLMs to Teach Themselves Better Instructions via Reinforcement Learning. Shangding Gu et al. 2024. [paper]
- Self-Alignment with Instruction Backtranslation. Xian Li et al. ICLR 2024. [paper]
- LongForm: Effective Instruction Tuning with Reverse Instructions. Abdullatif Köksal et al. arXiv 2024. [paper]
- Instruction Tuning with GPT-4. Baolin Peng et al. arXiv 2023. [paper]
- Enhancing Chat Language Models by Scaling High-quality Instructional Conversations. Ding et al. EMNLP 2023. [paper]
- Parrot: Enhancing Multi-Turn Chat Models by Learning to Ask Questions. Yuchong Sun et al. arXiv 2023. [paper]
- ZeroShotDataAug: Generating and Augmenting Training Data with ChatGPT. Solomon Ubani et al. arXiv 2023. [paper]
- Baize: An Open-Source Chat Model with Parameter-Efficient Tuning on Self-Chat Data. Xu et al. EMNLP 2023. [paper]
- WizardMath: Empowering Mathematical Reasoning for Large Language Models via Reinforced Evol-Instruct. Haipeng Luo et al. arXiv 2023. [paper]
- MathGenie: Generating Synthetic Data with Question Back-translation for Enhancing Mathematical Reasoning of LLMs. Zimu Lu et al. arXiv 2024. [paper]
- MetaMath: Bootstrap Your Own Mathematical Questions for Large Language Models. Longhui Yu et al. ICLR 2024. [paper]
- MAmmoTH: Building Math Generalist Models through Hybrid Instruction Tuning. Xiang Yue et al. ICLR 2024. [paper]
- Explanations from Large Language Models Make Small Reasoners Better. Shiyang Li et al. arXiv 2022. [paper]
- Distilling Reasoning Capabilities into Smaller Language Models. Shridhar et al. Findings of ACL 2023. [paper]
- Teaching Small Language Models to Reason. Magister et al. ACL 2023. [paper]
- Specializing Smaller Language Models towards Multi-Step Reasoning. Yao Fu et al. arXiv 2023. [paper]
- Large Language Models Are Reasoning Teachers. Ho et al. ACL 2023. [paper]
- Distilling Step-by-Step! Outperforming Larger Language Models with Less Training Data and Smaller Model Sizes. Hsieh et al. Findings of ACL 2023. [paper]
- Query and Response Augmentation Cannot Help Out-of-domain Math Reasoning Generalization. Chengpeng Li et al. arXiv 2023. [paper]
- Code Alpaca: An Instruction-following LLaMA model for code generation. Sahil Chaudhary et al. GitHub repository 2023. [code]
- WizardCoder: Empowering Code Large Language Models with Evol-Instruct. Ziyang Luo et al. ICLR 2024. [paper]
- MathCoder: Seamless Code Integration in LLMs for Enhanced Mathematical Reasoning. Ke Wang et al. ICLR 2024. [paper]
- Magicoder: Source Code Is All You Need. Yuxiang Wei et al. arXiv 2023. [paper]
- WaveCoder: Widespread And Versatile Enhanced Instruction Tuning with Refined Data Generation. Zhaojian Yu et al. arXiv 2024. [paper]
- MARIO: MAth Reasoning with code Interpreter Output -- A Reproducible Pipeline. Minpeng Liao et al. arXiv 2024. [paper]
- OpenCodeInterpreter: Integrating Code Generation with Execution and Refinement. Tianyu Zheng et al. arXiv 2024. [paper]
- CodeUltraFeedback: An LLM-as-a-Judge Dataset for Aligning Large Language Models to Coding Preferences. Martin Weyssow et al. arXiv 2024. [paper]
- Gorilla: Large Language Model Connected with Massive APIs. Shishir G. Patil et al. arXiv 2023. [paper]
- GPT4Tools: Teaching Large Language Model to Use Tools via Self-instruction. Yang et al. NeurIPS 2023. [paper]
- ToolAlpaca: Generalized tool learning for language models with 3000 simulated cases. Tang et al. arXiv 2023. [paper]
- ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs. Yujia Qin et al. arXiv 2023. [paper]
- Graph-ToolFormer: To Empower LLMs with Graph Reasoning Ability via Prompt Augmented by ChatGPT. Jiawei Zhang et al. arXiv 2023. [paper]
- FireAct: Toward Language Agent Fine-tuning. Baian Chen et al. arXiv 2023. [paper]
- AgentTuning: Enabling Generalized Agent Abilities for LLMs. Aohan Zeng et al. arXiv 2023. [paper]
- ReST meets ReAct: Self-Improvement for Multi-Step Reasoning LLM Agent. Renat Aksitov et al. arXiv 2023. [paper]
- ReAct Meets ActRe: When Language Agents Enjoy Training Data Autonomy. Zonghan Yang et al. arXiv 2024. [paper]
- Trial and Error: Exploration-Based Trajectory Optimization for LLM Agents. Yifan Song et al. ACL 2024. [paper]
- Super-NaturalInstructions: Generalization via Declarative Instructions on 1600+ NLP Tasks. Wang et al. EMNLP 2022. [paper]

- UltraFeedback: Boosting Language Models with High-quality Feedback. Ganqu Cui et al. ICLR 2024. [paper]
- Preference Ranking Optimization for Human Alignment. Song et al. arXiv 2023. [paper]
- A general theoretical paradigm to understand learning from human preferences. Azar et al. AISTATS 2024. [paper]
- CycleAlign: Iterative Distillation from Black-box LLM to White-box Models for Better Human Alignment. Jixiang Hong et al. arXiv 2023. [paper]
- Zephyr: Direct Distillation of LM Alignment. Lewis Tunstall et al. arXiv 2023. [paper]
- Aligning Large Language Models through Synthetic Feedback. Kim et al. EMNLP 2023. [paper]
- MetaAligner: Conditional Weak-to-Strong Correction for Generalizable Multi-Objective Alignment of Language Models. Kailai Yang et al. arXiv 2024. [paper]
- OpenChat: Advancing Open-source Language Models with Mixed-Quality Data. Guan Wang et al. ICLR 2024. [paper]
- Safer-Instruct: Aligning Language Models with Automated Preference Data. Taiwei Shi et al. arXiv 2024. [paper]
- Aligner: Achieving Efficient Alignment through Weak-to-Strong Correction. Jiaming Ji et al. arXiv 2024. [paper]
- IterAlign: Iterative Constitutional Alignment of Large Language Models. Chen et al. NAACL 2024. [paper]
- Supervising strong learners by amplifying weak experts. Christiano et al. arXiv 2018. [paper]
- Weak-to-Strong Generalization: Eliciting Strong Capabilities With Weak Supervision. Collin Burns et al. arXiv 2023. [paper]
- The Unreasonable Effectiveness of Easy Training Data for Hard Tasks. Peter Hase et al. arXiv 2024. [paper]
- Superfiltering: Weak-to-Strong Data Filtering for Fast Instruction-Tuning. Ming Li et al. arXiv 2024. [paper]
- Co-Supervised Learning: Improving Weak-to-Strong Generalization with Hierarchical Mixture of Experts. Yuejiang Liu et al. arXiv 2024. [paper]
- Easy-to-Hard Generalization: Scalable Alignment Beyond Human Supervision. Zhiqing Sun et al. arXiv 2024. [paper]
- Self-Supervised Alignment with Mutual Information: Learning to Follow Principles without Preference Labels. Jan-Philipp Fränken et al. arXiv 2024. [paper]
- Weak-to-Strong Extrapolation Expedites Alignment. Chujie Zheng et al. arXiv 2024. [paper]
- Quantifying the Gain in Weak-to-Strong Generalization. Moses Charikar et al. arXiv 2024. [paper]
- Theoretical Analysis of Weak-to-Strong Generalization. Hunter Lang et al. arXiv 2024. [paper]
- A statistical framework for weak-to-strong generalization. Seamus Somerstep et al. arXiv 2024. [paper]
- MACPO: Weak-to-Strong Alignment via Multi-Agent Contrastive Preference Optimization. Yougang Lyu et al. [paper]

- Deep Reinforcement Learning from Human Preferences. Paul F. Christiano et al. NeurIPS 2017. [paper]
- Learning to summarize with human feedback. Nisan Stiennon et al. NeurIPS 2020. [paper]
- A general language assistant as a laboratory for alignment. Askell et al. arXiv 2021. [paper]
- Constitutional AI: Harmlessness from AI feedback. Bai et al. arXiv 2022. [paper]
- Training language models to follow instructions with human feedback. Ouyang et al. NeurIPS 2022. [paper]
- Training a helpful and harmless assistant with reinforcement learning from human feedback. Bai et al. arXiv 2022. [paper]
- Quark: Controllable Text Generation with Reinforced Unlearning. Ximing Lu et al. arXiv 2022. [paper]
- Rewarded soups: towards Pareto-optimal alignment by interpolating weights fine-tuned on diverse rewards. Alexandre Ramé et al. NeurIPS 2023. [paper]
- Fine-Grained Human Feedback Gives Better Rewards for Language Model Training. Zeqiu Wu et al. NeurIPS 2023. [paper]
- Scaling Laws for Reward Model Overoptimization. Gao et al. ICML 2023. [paper]
- Principled Reinforcement Learning with Human Feedback from Pairwise or K-wise Comparisons. Zhu et al. ICML 2023. [paper]
- Secrets of RLHF in Large Language Models Part I: PPO. Rui Zheng et al. arXiv 2023. [paper]
- RLAIF: Scaling Reinforcement Learning from Human Feedback with AI Feedback. Harrison Lee et al. arXiv 2023. [paper]
- Zephyr: Direct Distillation of LM Alignment. Lewis Tunstall et al. arXiv 2023. [paper]
- Scaling Relationship on Learning Mathematical Reasoning with Large Language Models. Zheng Yuan et al. arXiv 2023. [paper]
- RAFT: Reward rAnked FineTuning for Generative Foundation Model Alignment. Hanze Dong et al. TMLR 2023. [paper]
- RRHF: Rank Responses to Align Language Models with Human Feedback. Hongyi Yuan et al. NeurIPS 2023. [paper]
- CycleAlign: Iterative Distillation from Black-box LLM to White-box Models for Better Human Alignment. Jixiang Hong et al. arXiv 2023. [paper]
- SALMON: Self-Alignment with Principle-Following Reward Models. Zhiqing Sun et al. ICLR 2024. [paper]
- Aligning Large Language Models through Synthetic Feedback. Kim et al. EMNLP 2023. [paper]
- Reward-Augmented Decoding: Efficient Controlled Text Generation With a Unidirectional Reward Model. Deng et al. EMNLP 2023. [paper]
- Critic-Driven Decoding for Mitigating Hallucinations in Data-to-text Generation. Lango et al. EMNLP 2023. [paper]
- Large Language Models Are Not Fair Evaluators. Wang et al. arXiv 2023. [paper]
- Direct language model alignment from online AI feedback. Guo et al. arXiv 2024. [paper]
- West-of-N: Synthetic Preference Generation for Improved Reward Modeling. Pace et al. arXiv 2024. [paper]
- Self-rewarding language models. Yuan et al. arXiv 2024. [paper]
- Safer-Instruct: Aligning Language Models with Automated Preference Data. Taiwei Shi et al. arXiv 2024. [paper]
- Controlled Decoding from Language Models. Sidharth Mudgal et al. arXiv 2024. [paper]
- Direct Large Language Model Alignment Through Self-Rewarding Contrastive Prompt Distillation. Aiwei Liu et al. arXiv 2024. [paper]
- Decoding-time Realignment of Language Models. Tianlin Liu et al. arXiv 2024. [paper]
- GRATH: Gradual Self-Truthifying for Large Language Models. Weixin Chen et al. arXiv 2024. [paper]
- RLCD: Reinforcement Learning from Contrastive Distillation for LM Alignment. Kevin Yang et al. ICLR 2024. [paper]
- Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena. Zheng et al. NeurIPS 2023. [paper]
- On Diversified Preferences of Large Language Model Alignment. Dun Zeng et al. arXiv 2024. [paper]
- Improving Large Language Models via Fine-grained Reinforcement Learning with Minimum Editing Constraint. Zhipeng Chen et al. arXiv 2024. [paper]
- Aligning Large Language Models via Fine-grained Supervision. Dehong Xu et al. ACL 2024. [paper]
- BOND: Aligning LLMs with Best-of-N Distillation. Pier Giuseppe Sessa et al. arXiv 2024. [paper]

- Training Verifiers to Solve Math Word Problems. Karl Cobbe et al. arXiv 2021. [paper]
- Solving math word problems with process- and outcome-based feedback. Jonathan Uesato et al. arXiv 2022. [paper]
- STaR: Bootstrapping Reasoning With Reasoning. Zelikman et al. NeurIPS 2022. [paper]
- Improving Large Language Model Fine-tuning for Solving Math Problems. Yixin Liu et al. arXiv 2023. [paper]
- Making Language Models Better Reasoners with Step-Aware Verifier. Li et al. ACL 2023. [paper]
- Let's Verify Step by Step. Hunter Lightman et al. arXiv 2023. [paper]
- Solving Math Word Problems via Cooperative Reasoning induced Language Models. Zhu et al. ACL 2023. [paper]
- GRACE: Discriminator-Guided Chain-of-Thought Reasoning. Khalifa et al. Findings of ACL 2023. [paper]
- Let's reward step by step: Step-Level reward model as the Navigators for Reasoning. Qianli Ma et al. arXiv 2023. [paper]
- Beyond Human Data: Scaling Self-Training for Problem-Solving with Language Models. Avi Singh et al. TMLR 2024. [paper]
- V-STaR: Training Verifiers for Self-Taught Reasoners. Arian Hosseini et al. arXiv 2024. [paper]
- GLoRe: When, Where, and How to Improve LLM Reasoning via Global and Local Refinements. Alex Havrilla et al. arXiv 2024. [paper]
- InternLM-Math: Open Math Large Language Models Toward Verifiable Reasoning. Huaiyuan Ying et al. arXiv 2024. [paper]
- OVM, Outcome-supervised Value Models for Planning in Mathematical Reasoning. Fei Yu et al. arXiv 2024. [paper]
- DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models. Zhihong Shao et al. arXiv 2024. [paper]
- Math-Shepherd: Verify and Reinforce LLMs Step-by-step without Human Annotations. Peiyi Wang et al. arXiv 2024. [paper]
- Multi-step Problem Solving Through a Verifier: An Empirical Analysis on Model-induced Process Supervision. Zihan Wang et al. arXiv 2024. [paper]
- Training Language Models with Language Feedback. Jérémy Scheurer et al. arXiv 2022. [paper]
- Self-critiquing models for assisting human evaluators. Saunders et al. arXiv 2022. [paper]
- Shepherd: A Critic for Language Model Generation. Wang et al. arXiv 2023. [paper]
- Critique Ability of Large Language Models. Liangchen Luo et al. arXiv 2023. [paper]
- Learning from Natural Language Feedback. Angelica Chen et al. TMLR 2024. [paper]
- Open Source Language Models Can Provide Feedback: Evaluating LLMs' Ability to Help Students Using GPT-4-As-A-Judge. Charles Koutcheme et al. arXiv 2024. [paper]
- Learning From Mistakes Makes LLM Better Reasoner. Shengnan An et al. arXiv 2024. [paper]
- LLMs are Superior Feedback Providers: Bootstrapping Reasoning for Lie Detection with Self-Generated Feedback. Tanushree Banerjee et al. arXiv 2024. [paper]

- Training Socially Aligned Language Models on Simulated Social Interactions. Liu et al. ICLR 2024. [paper] [code]
- Self-Alignment of Large Language Models via Monopolylogue-based Social Scene Simulation. Pang et al. ICML 2024. [paper] [code]
- MoralDial: A Framework to Train and Evaluate Moral Dialogue Systems via Moral Discussions. Sun et al. ACL 2023. [paper] [code]
- SOTOPIA-$\pi$: Interactive Learning of Socially Intelligent Language Agents. Wang et al. arXiv 2024. [paper] [code]
- Systematic Biases in LLM Simulations of Debates. Taubenfeld et al. arXiv 2024. [paper]
- Bootstrapping LLM-based Task-Oriented Dialogue Agents via Self-Talk. Ulmer et al. arXiv 2024. [paper]
- Social Chemistry 101: Learning to Reason about Social and Moral Norms. Forbes et al. EMNLP 2020. [paper] [code]
- Aligning to Social Norms and Values in Interactive Narratives. Ammanabrolu et al. NAACL 2022. [paper]
- The Moral Integrity Corpus: A Benchmark for Ethical Dialogue Systems. Ziems et al. ACL 2022. [paper] [code]
- Agent Alignment in Evolving Social Norms. Li et al. arXiv 2024. [paper]
- What are human values, and how do we align AI to them? Klingefjord et al. arXiv 2024. [paper]
- Constitutional AI: Harmlessness from AI Feedback. Bai et al. arXiv 2022. [paper] [code]
- Collective Constitutional AI: Aligning a Language Model with Public Input. Huang et al. 2024. [paper] [blog]
- Democratic inputs to AI grant program: lessons learned and implementation plans. OpenAI. 2023. [blog]
- Principle-Driven Self-Alignment of Language Models from Scratch with Minimal Human Supervision. Sun et al. NeurIPS 2023. [paper] [code]
- ConstitutionMaker: Interactively Critiquing Large Language Models by Converting Feedback into Principles. Petridis et al. arXiv 2023. [paper]
- Case Law Grounding: Aligning Judgments of Humans and AI on Socially-Constructed Concepts. Chen et al. arXiv 2023. [paper]
- LeetPrompt: Leveraging Collective Human Intelligence to Study LLMs. Santy et al. arXiv 2024. [paper]
- CultureBank: An Online Community-Driven Knowledge Base Towards Culturally Aware Language Technologies. Shi et al. arXiv 2024. [paper] [code]
- IterAlign: Iterative Constitutional Alignment of Large Language Models. Chen et al. NAACL 2024. [paper]
- Teaching Large Language Models to Self-Debug. Chen et al. ICLR 2024. [paper]
- SelfEvolve: A Code Evolution Framework via Large Language Models. Jiang et al. arXiv 2023. [paper]
- CodeRL: Mastering Code Generation through Pretrained Models and Deep Reinforcement Learning. Le et al. NeurIPS 2022. [paper] [code]
- CRITIC: Large Language Models Can Self-Correct with Tool-Interactive Critiquing. Gou et al. ICLR 2024. [paper] [code]
- AUTOACT: Automatic Agent Learning from Scratch via Self-Planning. Qiao et al. arXiv 2024. [paper] [code]
- LLMs in the Imaginarium: Tool Learning through Simulated Trial and Error. Wang et al. arXiv 2024. [paper] [code]
- Making Language Models Better Tool Learners with Execution Feedback. Qiao et al. NAACL 2024. [paper] [code]
- Grounding Large Language Models in Interactive Environments with Online Reinforcement Learning. Carta et al. arXiv 2023. [paper] [code]
- Language Models Meet World Models: Embodied Experiences Enhance Language Models. Xiang et al. NeurIPS 2023. [paper] [code]
- True Knowledge Comes from Practice: Aligning LLMs with Embodied Environments via Reinforcement Learning. Tan et al. arXiv 2024. [paper] [code]
- Do As I Can, Not As I Say: Grounding Language in Robotic Affordances. Ahn et al. arXiv 2022. [paper] [code]
- Voyager: An Open-Ended Embodied Agent with Large Language Models. Wang et al. arXiv 2023. [paper] [code]
- Trial and Error: Exploration-Based Trajectory Optimization for LLM Agents. Song et al. ACL 2024. [paper] [code]
- Large Language Models as Generalizable Policies for Embodied Tasks. Szot et al. ICLR 2024. [paper]

- LIMA: Less Is More for Alignment. Zhou et al. NeurIPS 2023. [paper]
- AlpaGasus: Training A Better Alpaca with Fewer Data. Chen et al. arXiv 2023. [paper]
- The False Promise of Imitating Proprietary LLMs. Gudibande et al. arXiv 2023. [paper]
- Exploring the Relationship between In-Context Learning and Instruction Tuning. Duan et al. arXiv 2023. [paper]
- Towards Expert-Level Medical Question Answering with Large Language Models. Singhal et al. arXiv 2023. [paper]
- Injecting New Knowledge into Large Language Models via Supervised Fine-Tuning. Mecklenburg et al. arXiv 2024. [paper]
- The Unlocking Spell on Base LLMs: Rethinking Alignment via In-Context Learning. Lin et al. ICLR 2024. [paper]
- From Language Modeling to Instruction Following: Understanding the Behavior Shift in LLMs after Instruction Tuning. Wu et al. NAACL 2024. [paper]
- Does Fine-Tuning LLMs on New Knowledge Encourage Hallucinations? Gekhman et al. arXiv 2024. [paper]
- Learning or Self-Aligning? Rethinking Instruction Fine-Tuning. Ren et al. ACL 2024. [paper]
- Large Language Models Are Not Yet Human-Level Evaluators for Abstractive Summarization. Shen et al. Findings of EMNLP 2023. [paper]
- Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena. Zheng et al. NeurIPS 2023. [paper]
- The Generative AI Paradox: "What It Can Create, It May Not Understand". West et al. arXiv 2023. [paper]
- Large Language Models Cannot Self-Correct Reasoning Yet. Huang et al. ICLR 2024. [paper]
- Large Language Models Are Not Fair Evaluators. Wang et al. arXiv 2023. [paper]
- Style Over Substance: Evaluation Biases for Large Language Models. Wu et al. arXiv 2023. [paper]
- G-Eval: NLG Evaluation Using GPT-4 with Better Human Alignment. Liu et al. arXiv 2023. [paper]
- Benchmarking and Improving Generator-Validator Consistency of Language Models. Li et al. ICLR 2024. [paper]
- Self-Alignment with Instruction Backtranslation. Li et al. ICLR 2024. [paper]
- CriticBench: Benchmarking LLMs for Critique-Correct Reasoning. Lin et al. Findings of ACL 2024. [paper]
- Self-rewarding language models. Yuan et al. arXiv 2024. [paper]
- CriticBench: Evaluating Large Language Models as Critic. Lan et al. arXiv 2024. [paper]
- Constitutional AI: Harmlessness from AI feedback. Bai et al. arXiv 2022. [paper]
- Principle-Driven Self-Alignment of Language Models from Scratch with Minimal Human Supervision. Sun et al. NeurIPS 2023. [paper]
- Weak-to-Strong Generalization: Eliciting Strong Capabilities With Weak Supervision. Burns et al. arXiv 2023. [paper]
- IterAlign: Iterative Constitutional Alignment of Large Language Models. Chen et al. NAACL 2024. [paper]
- Self-Supervised Alignment with Mutual Information: Learning to Follow Principles without Preference Labels. Fränken et al. arXiv 2024. [paper]
- Easy-to-Hard Generalization: Scalable Alignment Beyond Human Supervision. Sun et al. arXiv 2024. [paper]
- The Unreasonable Effectiveness of Easy Training Data for Hard Tasks. Hase et al. arXiv 2024. [paper]
- A statistical framework for weak-to-strong generalization. Somerstep et al. arXiv 2024. [paper]
- Theoretical Analysis of Weak-to-Strong Generalization. Lang et al. arXiv 2024. [paper]
- Quantifying the Gain in Weak-to-Strong Generalization. Charikar et al. arXiv 2024. [paper]

| Workshop | Description |
|---|---|
| NOLA 2023 | Top ML researchers from industry and academia convened in New Orleans, before NeurIPS 2023, to discuss AI alignment and potential risks from advanced AI. |
| Vienna 2024 | The Vienna Alignment Workshop is an exclusive event bringing together 150 hand-selected AI researchers and leaders from various sectors to discuss AI safety and alignment issues. Held in conjunction with ICML, the workshop covers topics like safe AI, interpretability, and governance. |
| AI for Math Workshop @ ICML 2024 | Attendees from various backgrounds discuss autoformalization, automated theorem proving, theorem generation, code augmentation for mathematical reasoning, and formal verification. |
| Models of Human Feedback for AI Alignment @ ICML 2024 | Bringing together different communities to understand human feedback, discuss mathematical and computational models of human feedback, and explore future directions for better AI alignment. |