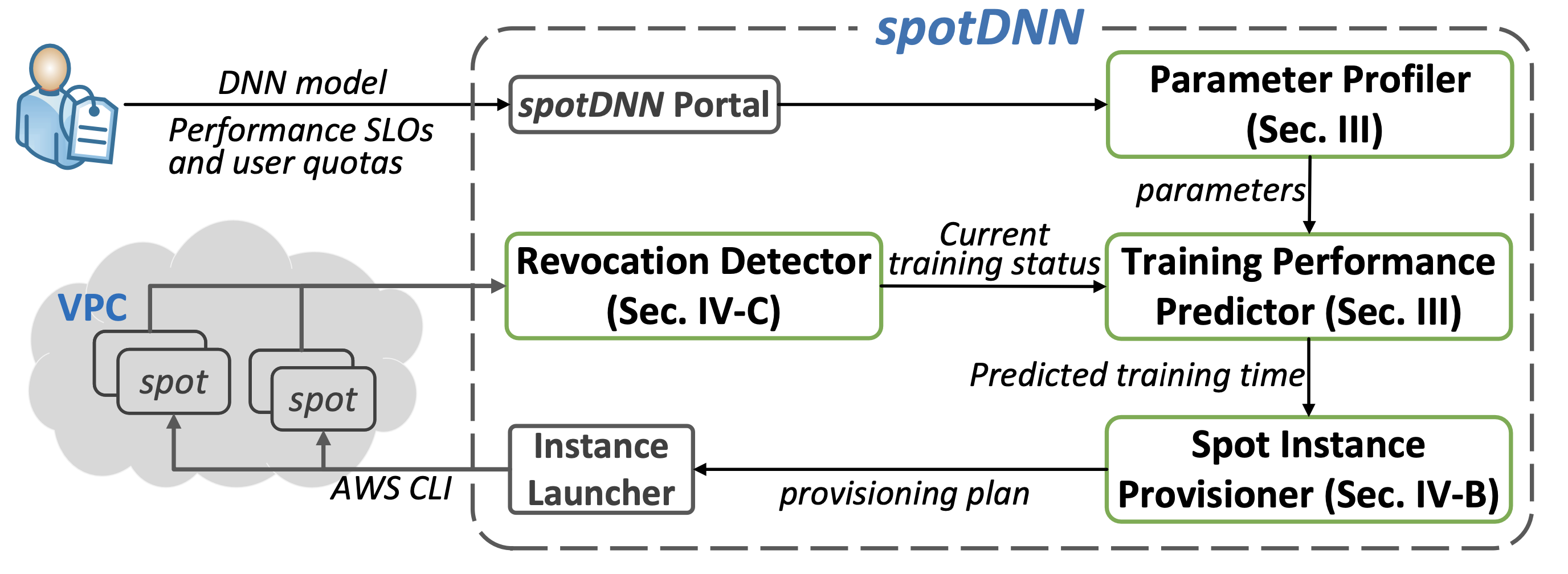

spotDNN is a heterogeneity-aware spot instance provisioning framework to provide predictable performance for DDNN training workloads in the cloud.

spotDNN comprises four modules: a parameter profiler, a training performance predictor, a spot instance provisioner, and a revocation detector. Users first submit a DDNN training workload, the performance SLOs, and the quotas to the spotDNN portal. Once the parameter profiler finishes the profiling jobs, the performance predictor predicts the DDNN training time using our performance model. To guarantee the target DDNN training time and training loss, the spot instance provisioner then identifies a cost-efficient resource provisioning plan using spot instances. Once the plan is determined, the instance launcher requests the corresponding instances using command-line tools (e.g., AWS CLI) and places them in the same VPC.
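The launcher step can be pictured with a minimal sketch. It assumes the provisioning plan is a mapping from instance type to instance count, and it only builds `aws ec2 run-instances` argument lists rather than executing them. The function name, the plan format, and the AMI and subnet IDs are hypothetical placeholders, not spotDNN's actual code.

```python
import json
import shlex
from typing import Dict, List


def build_spot_launch_commands(
    plan: Dict[str, int], image_id: str, subnet_id: str
) -> List[List[str]]:
    """Build one `aws ec2 run-instances` command per instance type in the plan.

    `plan` maps an EC2 instance type to the number of spot instances wanted
    (hypothetical format). All commands use the same `subnet_id`, so every
    instance is placed in the same VPC.
    """
    commands = []
    for instance_type, count in sorted(plan.items()):
        if count <= 0:
            continue  # skip instance types the plan does not use
        commands.append([
            "aws", "ec2", "run-instances",
            "--image-id", image_id,
            "--instance-type", instance_type,
            "--count", str(count),
            "--subnet-id", subnet_id,
            # Request spot capacity instead of on-demand capacity.
            "--instance-market-options", json.dumps({"MarketType": "spot"}),
        ])
    return commands


if __name__ == "__main__":
    # Placeholder IDs; replace them with values from your own account.
    example_plan = {"g4dn.xlarge": 2, "p3.2xlarge": 1}
    for cmd in build_spot_launch_commands(example_plan, "ami-EXAMPLE", "subnet-EXAMPLE"):
        print(" ".join(shlex.quote(arg) for arg in cmd))
```

Building the commands as argument lists keeps them easy to inspect before anything is launched; passing a list to `subprocess.run` would execute it.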

We characterize the DDNN training process in a heterogeneous cluster with

DDNN training loss converges faster as the WA batch size

In a heterogeneous cluster,

Each iteration of DDNN training can be split into two phases: gradient computation and parameter communication, which are generally processed sequentially under the ASP mechanism. The communication phase consists of the gradient aggregation through PCIe and the parameter communication through the network, which can be formulated as

The contention of PS network bandwidth only occurs during part of the communication phase. Accordingly, the available network bandwidth

The objective is to minimize the monetary cost of provisioned spot instances, while guaranteeing the performance of DDNN training workloads. The optimization problem is formally defined as

- TensorFlow 1.15.0

- Python 3.7.13 (including the packages numpy, pandas, scipy, subprocess, json, time, and datetime)

- Amazon AWS CLI
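Before going further, it can help to confirm what is present on the machine. The following is a minimal sketch, not part of spotDNN: the function name is hypothetical, and it only reports what it finds instead of enforcing the versions listed above.

```python
import importlib.util
import platform
import shutil
from typing import Dict


def report_prerequisites() -> Dict[str, object]:
    """Report the Python version and whether TensorFlow and the AWS CLI are found."""
    return {
        # Version of the interpreter running this script, e.g. "3.7.13".
        "python_version": platform.python_version(),
        # True if a `tensorflow` package can be located (it is not imported).
        "tensorflow_installed": importlib.util.find_spec("tensorflow") is not None,
        # True if an `aws` executable is on the PATH.
        "aws_cli_on_path": shutil.which("aws") is not None,
    }


if __name__ == "__main__":
    for name, value in report_prerequisites().items():
        print(f"{name}: {value}")
```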

spotDNN is integrated with Amazon AWS. To use spotDNN, an AWS account is required. The following sections break down the steps to set up the required AWS components.

First, set up an AWS Access Key for your AWS account. More details about setting up an access key can be found at AWS Security Blog: Where's My Secret Access Key. Please note the values of the AWS Access Key ID and the AWS Secret Access Key. These values are needed to configure the Amazon AWS CLI.

- Download and install the Amazon AWS CLI. More details can be found at Installing or updating the latest version of the AWS CLI.

- Configure the Amazon AWS CLI by running the command `aws configure`, if it is not yet configured.

- Enter the following:

  - AWS Access Key ID

  - AWS Secret Access Key

  - Default region name

  - Default output format
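The same four values can also be set without the interactive prompts through `aws configure set`. The sketch below only builds the commands; the helper name and the sample values are hypothetical, and real credentials should never be committed to a repository.

```python
from typing import List


def build_configure_commands(
    access_key_id: str,
    secret_access_key: str,
    region: str,
    output_format: str = "json",
) -> List[List[str]]:
    """Build `aws configure set` commands for the four values listed above."""
    settings = [
        ("aws_access_key_id", access_key_id),
        ("aws_secret_access_key", secret_access_key),
        ("region", region),
        ("output", output_format),
    ]
    return [["aws", "configure", "set", key, value] for key, value in settings]


if __name__ == "__main__":
    # Placeholder values for illustration only.
    for cmd in build_configure_commands("AKIAEXAMPLE", "SECRETEXAMPLE", "us-east-1"):
        print(" ".join(cmd))
```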

- Create a VPC by specifying the IPv4 CIDR block and remember the value of the `VPC ID`.

- Create a subnet by specifying the `VPC ID` and remember the values of the `Route table ID` and `Subnet Id` returned.

- Create an Internet gateway and attach it to the VPC created before. Remember the value of the `Internet gateway ID`.

- Edit the route table. Enter `0.0.0.0/0` as the `Destination` and the `Internet gateway ID` as the `Target`.
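The VPC steps above can also be carried out with the AWS CLI. The following sketch lists the corresponding `aws ec2` commands in order. It assumes you read the VPC, route table, and Internet gateway IDs from the output of the earlier commands and pass them in; the function name and all sample IDs and CIDR blocks are hypothetical placeholders.

```python
from typing import List


def build_vpc_setup_commands(
    vpc_cidr: str,
    subnet_cidr: str,
    vpc_id: str,
    route_table_id: str,
    internet_gateway_id: str,
) -> List[List[str]]:
    """Return the `aws ec2` commands for the four VPC setup steps, in order."""
    return [
        # Step 1: create the VPC from an IPv4 CIDR block.
        ["aws", "ec2", "create-vpc", "--cidr-block", vpc_cidr],
        # Step 2: create a subnet inside that VPC.
        ["aws", "ec2", "create-subnet", "--vpc-id", vpc_id,
         "--cidr-block", subnet_cidr],
        # Step 3: create an Internet gateway and attach it to the VPC.
        ["aws", "ec2", "create-internet-gateway"],
        ["aws", "ec2", "attach-internet-gateway",
         "--internet-gateway-id", internet_gateway_id, "--vpc-id", vpc_id],
        # Step 4: route all outbound traffic (0.0.0.0/0) through the gateway.
        ["aws", "ec2", "create-route", "--route-table-id", route_table_id,
         "--destination-cidr-block", "0.0.0.0/0",
         "--gateway-id", internet_gateway_id],
    ]


if __name__ == "__main__":
    # Placeholder IDs; the real ones come from the output of each command.
    for cmd in build_vpc_setup_commands(
        "10.0.0.0/16", "10.0.1.0/24", "vpc-EXAMPLE", "rtb-EXAMPLE", "igw-EXAMPLE"
    ):
        print(" ".join(cmd))
```

Because each later command depends on an ID returned by an earlier one, the commands are meant to be run one at a time rather than as a single batch.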

- Extend spotDNN to Google Cloud Platform

  - Configure Google Cloud CLI.

  - Configure Google Cloud VPC.

  - Substitute `aws ec2` commands with `gcloud compute` commands.

- Extend spotDNN to Azure

  - Configure Azure CLI.

  - Configure Azure Vnet.

  - Substitute `aws ec2` commands with `az vm` commands.

$ git clone https://github.com/spotDNN/spotDNN.git

$ cd spotDNN

$ python3 -m pip install --upgrade pip

$ pip install -r requirements.txt

- First, provide the path of the model and the part 1 and part 2 parameters used for the DDNN training in `profiler/instanceinfo.py`.

- Then, profile the workload-specific parameters:

$ cd spotDNN/profiler

$ python3 profiler.py

*We have provided the workload-specific parameters of the ResNet-110 model in `profiler/instanceinfo.py`. You can test the prototype system without this step.

- Finally, define the objective loss `objloss` and the objective training time `objtime` in `portal.py` and launch the prototype system:

$ cd spotDNN

$ python3 portal.py

After you run the script, you will get the training cluster information in `spotDNN/launcher/instancesInfo`, which is a .txt file, and the training results in `spotDNN/launcher/result`, which contains files copied from the different instances.

Ruitao Shang, Fei Xu*, Zhuoyan Bai, Li Chen, Zhi Zhou, Fangming Liu, “spotDNN: Provisioning Spot Instances for Predictable Distributed DNN Training in the Cloud,” in: Proc. of IEEE/ACM IWQoS 2023, June 19-21, 2023.

@inproceedings{shang2023spotdnn,

title={spotDNN: Provisioning Spot Instances for Predictable Distributed DNN Training in the Cloud},

author={Shang, Ruitao and Xu, Fei and Bai, Zhuoyan and Chen, Li and Zhou, Zhi and Liu, Fangming},

booktitle={2023 IEEE/ACM 31st International Symposium on Quality of Service (IWQoS)},

pages={1--10},

year={2023},

organization={IEEE}

}