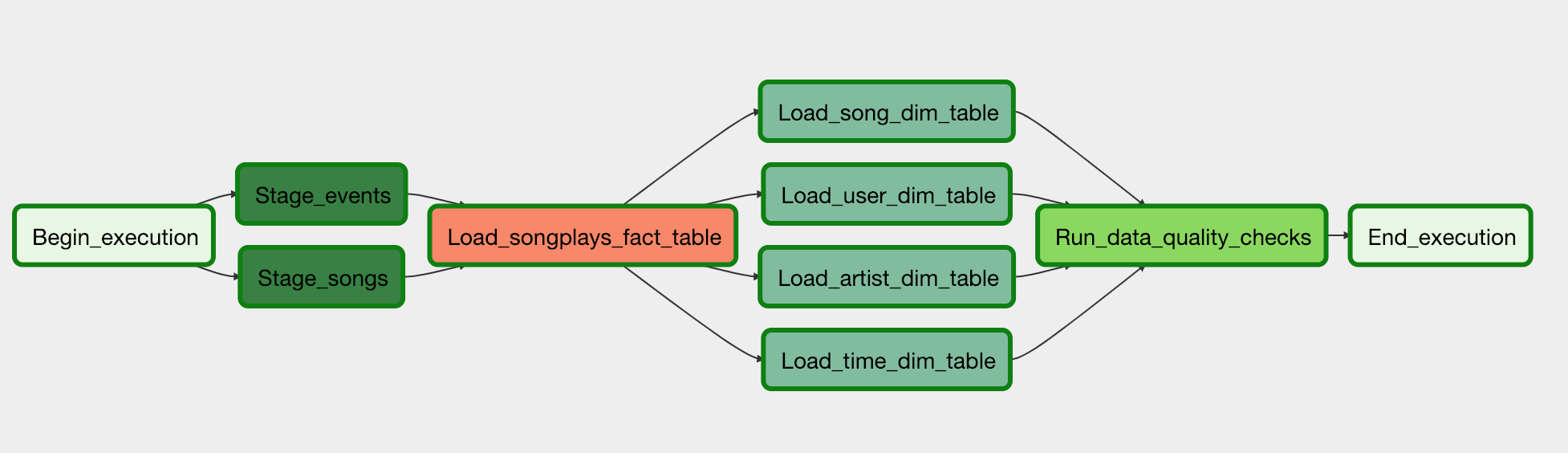

This project shows how to design and schedule a series of jobs (steps) with Apache Airflow for the following purposes:

- Backfill data

- Build a dimensional data model using Python

- Load data from an AWS S3 bucket into an AWS Redshift data warehouse

- Run quality checks on the data

- Use or create custom operators and available hooks to create reusable code
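As an illustration of the quality-check and reusable-code goals above, here is a minimal sketch of a row-count check written in plain Python. It is not this project's operator: the function name `check_tables_not_empty`, the `run_query` parameter, and the table names are hypothetical. The assumption is that `run_query` takes a SQL string and returns a list of rows, which is the shape a database hook's `get_records` method typically provides, so a custom operator could pass such a method in.

```python
from typing import Any, Callable, Iterable, List, Sequence

# Hypothetical type: a callable that runs SQL and returns rows.
# In an Airflow operator this could be a database hook's get_records method.
RunQuery = Callable[[str], Sequence[Sequence[Any]]]


def check_tables_not_empty(run_query: RunQuery, tables: Iterable[str]) -> List[str]:
    """Return the names of tables that are empty or returned no result."""
    failures = []
    for table in tables:
        rows = run_query(f"SELECT COUNT(*) FROM {table}")
        # A missing result and a zero count are both treated as failures.
        if not rows or not rows[0] or rows[0][0] < 1:
            failures.append(table)
    return failures


if __name__ == "__main__":
    # Stand-in for a real database: hypothetical tables and row counts.
    fake_counts = {"fact_table": 320, "dim_table": 0}

    def fake_run_query(sql: str):
        table = sql.rsplit(" ", 1)[-1]
        return [(fake_counts[table],)]

    print(check_tables_not_empty(fake_run_query, list(fake_counts)))
```

Keeping the query runner as a parameter means the same check can be exercised against a fake in tests and against Redshift inside a DAG, and an operator wrapping it only needs to raise an error when the returned list is not empty.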

You can run the DAG on your own machine using docker-compose. To use docker-compose, you must first install Docker. Once Docker is installed:

- Open a terminal in the same directory as `docker-compose.yml`

- Run `docker-compose up`

- Wait 30-60 seconds

- Open `http://localhost:8080` in Google Chrome (other browsers occasionally have issues rendering the Airflow UI)

- Make sure you have configured the `aws_credentials` and `redshift` connections in the Airflow UI
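If you would rather not guess at the 30-60 second wait, a small polling script can tell you when the UI is reachable. This is a sketch under assumptions, not part of the project: the helper names `url_responds` and `wait_until_ready` are made up for illustration, and the retry count and delay are arbitrary values chosen to cover roughly one minute.

```python
import http.client
import time
import urllib.error
import urllib.request
from typing import Callable


def url_responds(url: str, timeout: float = 5.0) -> bool:
    """Return True if an HTTP GET to url succeeds without an error status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, reset, timed out, or an HTTP error status.
        return False


def wait_until_ready(
    probe: Callable[[], bool],
    attempts: int = 12,
    delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call probe up to `attempts` times, sleeping `delay` seconds between tries."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            sleep(delay)
    return False
```

After `docker-compose up`, calling `wait_until_ready(lambda: url_responds("http://localhost:8080"))` from a second terminal returns `True` once the web server answers, or `False` if it has not answered after all attempts.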

When you are ready to quit Airflow, press Ctrl+C in the terminal where docker-compose is running. Then run `docker-compose down`.