This is a PyTorch implementation of "Contextual Convolutional Neural Networks".

The models trained on ImageNet can be found here.

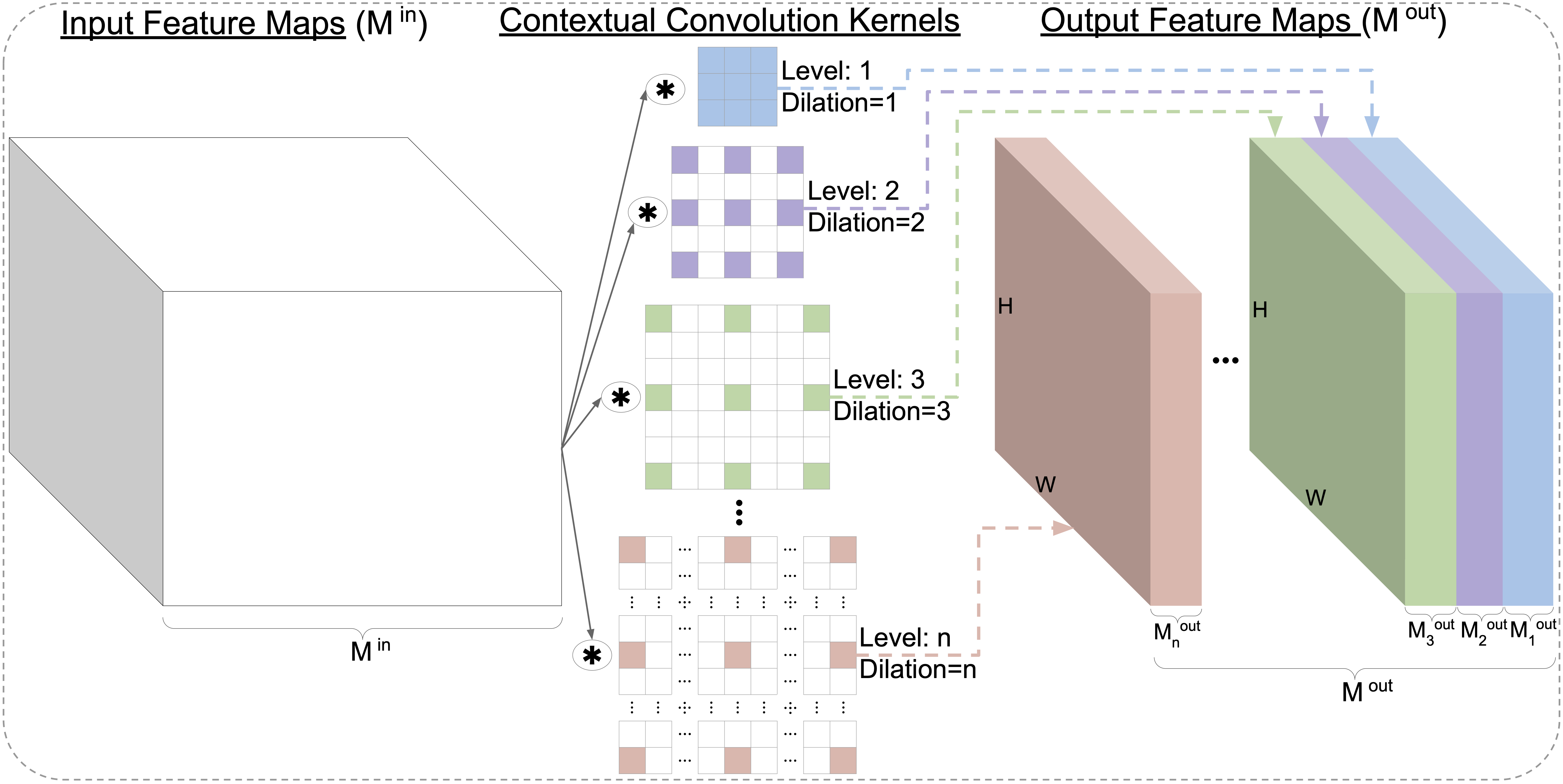

Contextual Convolution (CoConv) improves recognition accuracy over the standard-convolution baseline (see the paper for details).
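A minimal PyTorch sketch of the idea follows. It assumes, as we understand the paper, that a CoConv layer splits its output channels across parallel 3x3 convolutions with increasing dilation rates (1, 2, 3, 4 by default here) and concatenates their outputs. `CoConvSketch` and its parameters are hypothetical names, not this repository's API. The actual layer may allocate channels or use grouping differently.

```python
import torch
import torch.nn as nn


class CoConvSketch(nn.Module):
    """Hypothetical sketch of a contextual convolution layer.

    Assumption: the output channels are split across parallel 3x3
    convolutions that read the same input but use different dilation
    rates; their outputs are concatenated along the channel axis.
    """

    def __init__(self, in_channels, out_channels, dilations=(1, 2, 3, 4), stride=1):
        super().__init__()
        n = len(dilations)
        # Distribute output channels as evenly as possible across levels.
        splits = [out_channels // n] * n
        for i in range(out_channels % n):
            splits[i] += 1
        # One dilated 3x3 convolution per context level.
        self.levels = nn.ModuleList(
            nn.Conv2d(in_channels, c, kernel_size=3, stride=stride,
                      padding=d, dilation=d, bias=False)
            for c, d in zip(splits, dilations)
        )

    def forward(self, x):
        # padding == dilation gives every level the same spatial size,
        # so the outputs can be concatenated channel-wise.
        return torch.cat([conv(x) for conv in self.levels], dim=1)


layer = CoConvSketch(64, 64)
y = layer(torch.randn(2, 64, 56, 56))
print(y.shape)  # torch.Size([2, 64, 56, 56])
```

Setting `padding` equal to `dilation` keeps the receptive field centred and the output size identical across levels, which is what makes the channel-wise concatenation valid.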

The accuracy on ImageNet (using the default training settings):

| Network | 50 layers | 101 layers | 152 layers |
|---|---|---|---|
| ResNet | 76.12% (model) | 78.00% (model) | 78.45% (model) |
| CoResNet | 77.27% (model) | 78.71% (model) | 79.03% (model) |
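To make the comparison explicit, the short Python snippet below computes the absolute accuracy gain of CoResNet over ResNet at each depth, using only the numbers reported in the table.

```python
# ImageNet accuracies (%) as reported in the table.
resnet = {50: 76.12, 101: 78.00, 152: 78.45}
coresnet = {50: 77.27, 101: 78.71, 152: 79.03}

# Absolute gain of CoResNet over ResNet at each depth, in percentage points.
gains = {depth: round(coresnet[depth] - resnet[depth], 2) for depth in resnet}
print(gains)  # {50: 1.15, 101: 0.71, 152: 0.58}
```

The gain is largest at 50 layers, and CoResNet-101 (78.71%) already exceeds ResNet-152 (78.45%).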

Install PyTorch and prepare the ImageNet dataset following the official PyTorch ImageNet training code.
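Before training, it can help to sanity-check the dataset directory. The sketch below assumes that `main.py` expects the same ImageFolder-style layout as the official PyTorch ImageNet example (`<data>/train/<class>/...` and `<data>/val/<class>/...`, one folder per class). `check_imagenet_layout` is a hypothetical helper, not part of this repository.

```python
from pathlib import Path


def check_imagenet_layout(root, expected_classes=1000):
    """Hypothetical helper: check for root/train/<class>/ and root/val/<class>/.

    Returns a list of human-readable problems; an empty list means the
    layout matches the assumed ImageFolder structure.
    """
    root = Path(root)
    problems = []
    for split in ("train", "val"):
        split_dir = root / split
        if not split_dir.is_dir():
            problems.append(f"missing directory: {split_dir}")
            continue
        # Each class is expected to be a sub-directory of the split folder.
        classes = [p for p in split_dir.iterdir() if p.is_dir()]
        if len(classes) != expected_classes:
            problems.append(
                f"{split_dir}: found {len(classes)} class folders, "
                f"expected {expected_classes}"
            )
    return problems
```

For example, `print(check_imagenet_layout("/your/path/to/ImageNet/dataset/") or "layout looks OK")` reports any missing split or unexpected class count before a long training run starts.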

A fast alternative (which avoids installing PyTorch and other deep learning libraries) is to use NVIDIA-Docker; we used this container image.

To train a model (for instance, CoResNet with 50 layers) using DataParallel, run main.py. You also need to provide --result_path (the directory where the results and logs are saved) and --data (the path to the ImageNet dataset):

```bash
result_path=/your/path/to/save/results/and/logs/
mkdir -p ${result_path}
python main.py \
    --data /your/path/to/ImageNet/dataset/ \
    --result_path ${result_path} \
    --arch coresnet \
    --model_depth 50
```

To train using Multi-processing Distributed Data Parallel Training, follow the instructions in the official PyTorch ImageNet training code.

If you find our work useful, please consider citing:

```bibtex
@article{duta2021contextual,
  author  = {Ionut Cosmin Duta and Mariana Iuliana Georgescu and Radu Tudor Ionescu},
  title   = {Contextual Convolutional Neural Networks},
  journal = {arXiv preprint arXiv:2108.07387},
  year    = {2021},
}
```