SMAL4V (SMAL for Videos)

This repository contains research done as part of the diploma thesis, which will be available for download at https://dspace.cvut.cz/ after the defence. Installation instructions and example results follow.

All the code in this repository is free to use provided the original source is cited. Note that it has some dependencies protected by different licences, including SMAL (A Skinned Multi-Animal Linear Model of 3D Animal Shape). Consult https://smal.is.tue.mpg.de/license.html for information on SMAL licensing.

This research owes a lot to the following works:

- [model|paper] 3D Menagerie: Modeling the 3D Shape and Pose of Animals

- [code|paper] Who Left the Dogs Out? 3D Animal Reconstruction with Expectation Maximization in the Loop

- [code|paper] Three-D Safari: Learning to Estimate Zebra Pose, Shape, and Texture from Images "In the Wild"

Description of the Approach

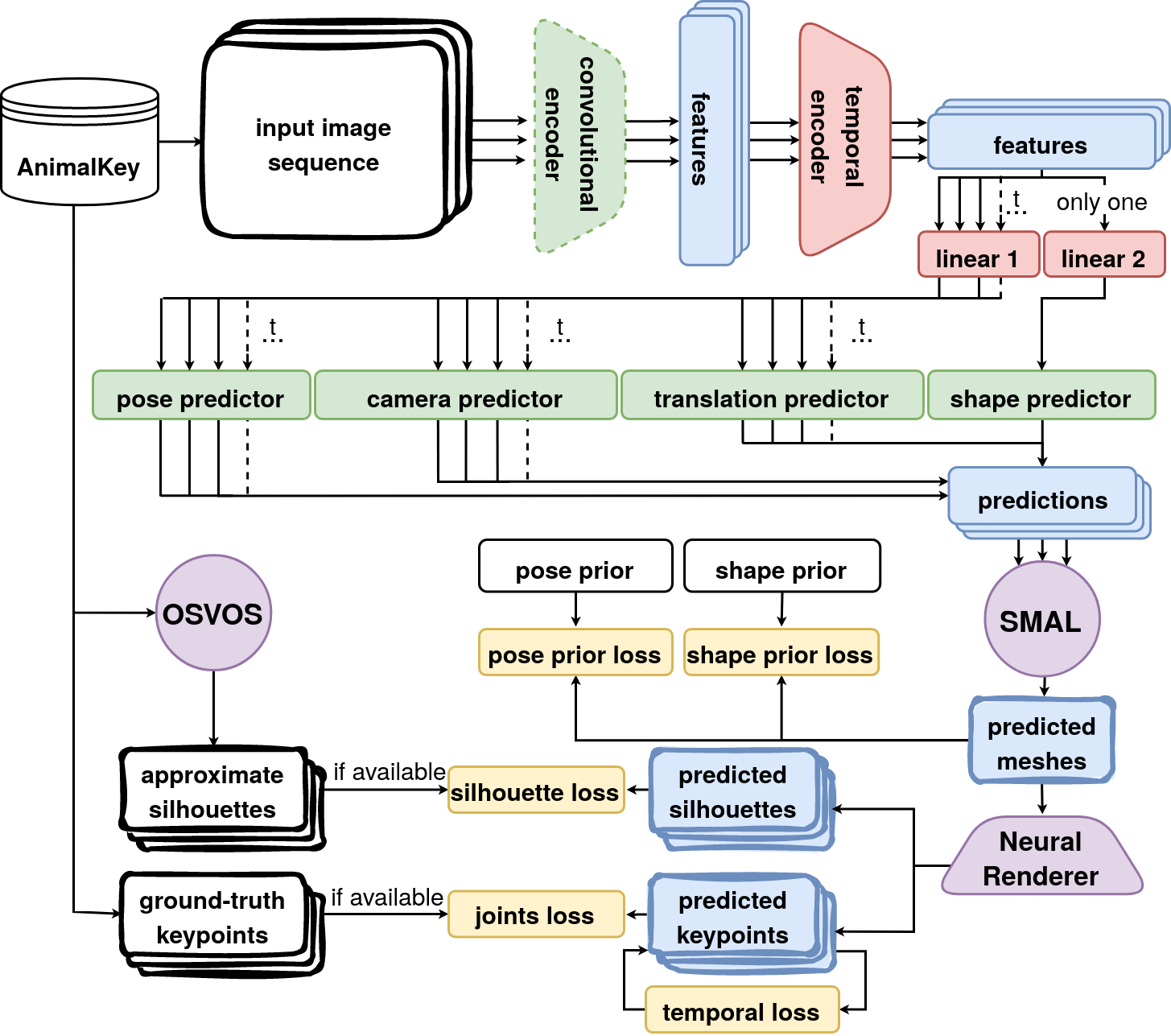

Architecture

The architecture of the neural network used for online learning is shown in the image below. More information will be available once the diploma thesis is officially released by the university, and the link will then be added to this description.

Results

Below are some first results achieved on the AnimalKey dataset (video sequences 295, 301 and 306 were chosen at random for demonstration purposes).

- Results of the base network, i.e. the WLDO pipeline trained on StanfordExtra with no fine-tuning on AnimalKey.

- Results of the parent network, i.e. the WLDO pipeline fine-tuned on the annotated frames from AnimalKey without incorporating any temporal information.

- Results of the test network, i.e. the SMAL4V pipeline trained on a single video with sparse annotations.

Note that the performance of the non-temporal pipeline fine-tuned on the same data is even less stable (TO BE ADDED FOR DEMONSTRATION).

Still, the current results suffer considerably from the insufficient training data in AnimalKey, which makes the network's predictions non-smooth despite training with temporal losses. Most likely, a more powerful parent network is required, one that pre-trains the RNN encoder on more temporal sequences.
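The non-smoothness mentioned above can be quantified in several ways. As an illustration only, and not necessarily the loss or metric used in SMAL4V, the sketch below computes a first-order temporal smoothness penalty: the mean squared difference between the parameter vectors predicted for consecutive frames. The function name `temporal_smoothness` and the input format (one flat list of floats per frame, e.g. SMAL pose parameters) are assumptions made for this example.

```python
from typing import Sequence


def temporal_smoothness(frames: Sequence[Sequence[float]]) -> float:
    """Mean squared difference between consecutive per-frame parameter vectors.

    Hypothetical helper: `frames` holds one flat parameter vector per video frame.
    Lower values mean smoother predictions over time.
    """
    if len(frames) < 2:
        return 0.0  # a single frame has no temporal neighbours
    total = 0.0
    count = 0
    for prev, curr in zip(frames, frames[1:]):
        if len(prev) != len(curr):
            raise ValueError("all frames must have the same number of parameters")
        for a, b in zip(prev, curr):
            total += (b - a) ** 2
            count += 1
    return total / count


# The jittery sequence yields the larger value.
smooth = [[0.0, 0.0], [0.1, 0.1], [0.2, 0.2]]
jittery = [[0.0, 0.0], [0.5, -0.5], [0.0, 0.0]]
print(temporal_smoothness(smooth))
print(temporal_smoothness(jittery))
```

Used as a training term, a penalty of this kind discourages jitter between neighbouring frames; used as an evaluation metric, it gives a single number for how smooth a predicted sequence is.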

Want to help and collaborate on AnimalKey dataset? Let me know!

Installation Instructions

NOTE THAT SMALL UPDATES ARE BEING MADE TO THE REPO -> WAIT BEFORE INSTALLING

To install SMAL4V, follow the steps described below. If you find any of them not reproducible, please open an issue on GitHub or contact me at iegorval@gmail.com. Note that all the instructions below have been tested only on Ubuntu, and you will probably need some Linux distribution to run the project.

- Make sure you have correctly installed CUDA as described at https://docs.nvidia.com/cuda/cuda-installation-guide-linux/index.html.

- Install the conda environment from `environment.yml` and activate it: `conda env create -f environment.yml`, then `conda activate smal4v`.

- Install PyTorch with CUDA support. Note that the command below corresponds to CUDA v11.1. If you have a different CUDA version, consult https://pytorch.org/get-started/locally/.

  `pip3 install torch==1.8.1+cu111 torchvision==0.9.1+cu111 torchaudio==0.8.1 -f https://download.pytorch.org/whl/lts/1.8/torch_lts.html`

- Install a PyTorch version of Neural 3D Mesh Renderer. Note that (as of the time of writing) `pip` installation fails for this package. Clone the repository with `cd external`, `git clone https://github.com/daniilidis-group/neural_renderer` and `cd neural_renderer`. Before installing, change all `AT_CHECK` to `AT_ASSERT` in the `.cpp` files in the `neural_renderer/cuda/` folder; otherwise the installation fails. Then run `python setup.py install`.

- Download the SMPL model and move its `smpl_webuser` directory to the SMAL4V project root.

- Download the `smpl_models` directory from another SMAL-related project (e.g. SMALST) and place it in the SMAL4V project root.

- Download the data you want to use with the SMALST/WLDO/SMAL4V pipeline:

  - StanfordExtra or GrevyZebra if you want to test mesh predictors on single-frame data with ground-truth silhouettes and 2D keypoints.
  - AnimalKey if you want videos with sparse silhouette and keypoint supervision.
  - TigDog or BADJA if you want videos with dense keypoint supervision.

- Download the pre-trained models:

- Download the OSVOS model to be able to densify the silhouette annotations if needed for training.

- Set the `PYTHONPATH` environment variable to include the project root (or configure it in your IDE), and set `LD_LIBRARY_PATH` so that it gives access to CUDA:

  `export PYTHONPATH=[YOUR_PATH_TO_SMAL4V]`

  `export LD_LIBRARY_PATH=/usr/local/cuda/compat/lib:/usr/local/nvidia/lib:/usr/local/nvidia/lib64:/.singularity.d/libs:/home/*USERNAME*/miniconda3/envs/smal4v/lib`

- Now you are ready to use the code base.

  - If you want to test single-frame SMALST/WLDO mesh predictors, use `src/smal_eval.py` with the corresponding configuration files.
  - If you want to fine-tune a single-frame mesh predictor on another dataset, use `src/smal_train.py` (currently supports only weak/2D supervision).
  - If you want to train the SMAL4V online/test network on a video sequence with sparse supervision, densify the silhouette annotations with OSVOS, and then use `src/smal_train_online.py`.
  - If you want to evaluate a single-frame or multi-frame mesh predictor on a video sequence, use `src/smal_eval_online.py`.
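The manual `AT_CHECK` to `AT_ASSERT` edit required before building Neural 3D Mesh Renderer can be scripted. The sketch below is not part of the SMAL4V repository, and the helper name `patch_at_check` is hypothetical. It assumes the renderer was cloned into `external/neural_renderer` as in the clone commands of the installation steps, and that the `.cpp` files sit directly in its `neural_renderer/cuda/` folder. Run it after cloning and before `python setup.py install`.

```python
import pathlib
import re
from typing import List

# Matches the AT_CHECK macro as a whole word (so e.g. AT_CHECK_FOO is left alone).
AT_CHECK_PATTERN = re.compile(r"\bAT_CHECK\b")


def patch_at_check(cuda_dir: str) -> List[pathlib.Path]:
    """Replace AT_CHECK with AT_ASSERT in every .cpp file directly inside cuda_dir.

    Returns the paths of the files that were modified.
    """
    changed: List[pathlib.Path] = []
    for path in sorted(pathlib.Path(cuda_dir).glob("*.cpp")):
        source = path.read_text()
        patched, count = AT_CHECK_PATTERN.subn("AT_ASSERT", source)
        if count:
            path.write_text(patched)
            changed.append(path)
    return changed


if __name__ == "__main__":
    # Assumed location of the clone made inside external/ during installation.
    for patched_file in patch_at_check("external/neural_renderer/neural_renderer/cuda"):
        print("patched", patched_file)
```

Running it a second time is harmless: once no `AT_CHECK` remains, no file is rewritten.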

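Before running any of the scripts, it may help to sanity-check the installation. The following helper is a hypothetical sketch, not part of SMAL4V. It checks that `smpl_webuser` and `smpl_models` are in the project root, that `PYTHONPATH` includes the root, that `LD_LIBRARY_PATH` is set, that `nvcc` is on `PATH`, and that PyTorch is installed and can see CUDA. The `nvcc` test is only a heuristic for a working CUDA toolkit, and the `LD_LIBRARY_PATH` test does not verify the individual library paths.

```python
import os
import pathlib
import shutil
from typing import List


def check_setup(project_root: str) -> List[str]:
    """Return a list of likely setup problems; an empty list means none were detected."""
    root = pathlib.Path(project_root).resolve()
    problems: List[str] = []

    # The SMPL/SMAL model directories are expected directly in the project root.
    for name in ("smpl_webuser", "smpl_models"):
        if not (root / name).is_dir():
            problems.append(f"missing directory: {root / name}")

    # PYTHONPATH must include the project root.
    raw = os.environ.get("PYTHONPATH", "")
    entries = [pathlib.Path(p).resolve() for p in raw.split(os.pathsep) if p]
    if root not in entries:
        problems.append("PYTHONPATH does not include the project root")

    # Only checks that LD_LIBRARY_PATH is set, not that it points at the right libraries.
    if not os.environ.get("LD_LIBRARY_PATH"):
        problems.append("LD_LIBRARY_PATH is not set")

    # Heuristic: a working CUDA toolkit installation usually puts nvcc on PATH.
    if shutil.which("nvcc") is None:
        problems.append("nvcc not found on PATH")

    # PyTorch must be importable and able to see a CUDA device.
    try:
        import torch
    except ImportError:
        problems.append("PyTorch is not installed")
    else:
        if not torch.cuda.is_available():
            problems.append("PyTorch is installed but CUDA is not available")

    return problems


if __name__ == "__main__":
    # Run from the SMAL4V project root.
    issues = check_setup(os.getcwd())
    for issue in issues:
        print("WARNING:", issue)
    if not issues:
        print("No setup problems detected.")
```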