This repository can be used to reproduce the results of the CVPR 2023 SAIAD (Safe Artificial Intelligence for All Domains) Workshop publication:

A Novel Benchmark for Refinement of Noisy Localization Labels in Autolabeled Datasets for Object Detection

Andreas Bär, Jonas Uhrig, Jeethesh Pai Umesh, Marius Cordts, Tim Fingscheidt.

The goal is to train an LLRN (localization label refinement network) that is capable of refining potentially poorly localized 2D object bounding box labels (e.g., labels produced by autolabeling approaches). To this end, we provide the means to reproduce all three main contributions: generating artificially corrupted datasets, training and evaluating our proposed LLRN, and training and evaluating a state-of-the-art 2D object detector.

For more details, please refer to the paper.

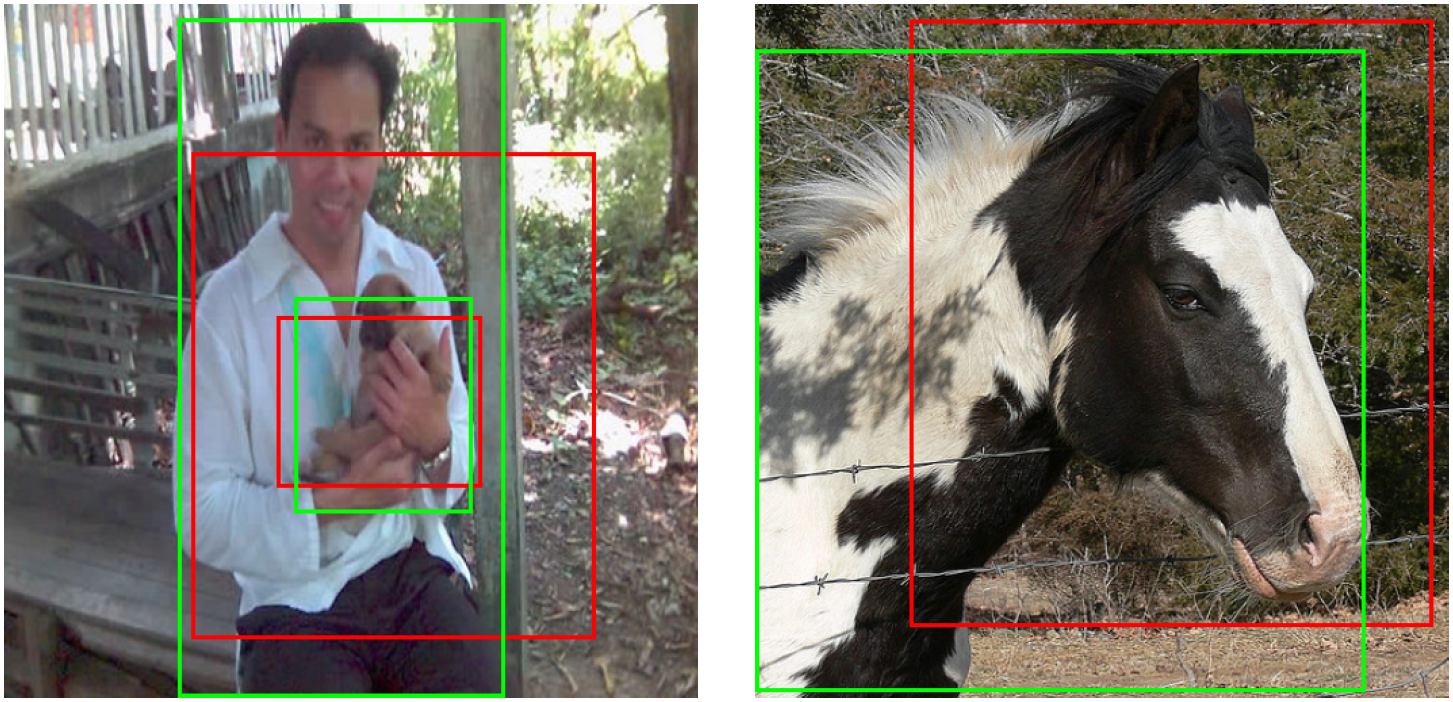

Examples of our localization label refinement. Our proposed framework takes a noisy localization label (red box) as input and outputs a refined localization label (green box).


To set up LocalizationLabelNoise, follow these installation steps:

1. Initialize the git submodules.

   git submodule update --init --recursive

2. Make sure conda is installed.

   conda --version

3. Install mmdetection by following the mmdetection installation instructions:

   - Create a conda virtual environment and activate it.

     conda create -n open-mmlab python=3.10 -y

     conda activate open-mmlab

   - Install PyTorch and torchvision following the official instructions, and make sure that the CUDA version used for compilation matches the CUDA runtime version. We pinned PyTorch and CUDA to the following versions:

     conda install pytorch==1.12.1 torchvision==0.13.1 torchaudio==0.12.1 cudatoolkit=11.3 -c pytorch

   - Install the mmdetection dependencies.

     cd mmdetection/

     pip install -r requirements/build.txt

     pip install "git+https://github.com/cocodataset/cocoapi.git#subdirectory=PythonAPI"

   - Install mmdetection.

     pip install -v -e .

   - Switch back to the root folder.

     cd ..

4. Download the PascalVOC devkit.

   - Download and extract the data file.

     wget http://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCtrainval_11-May-2012.tar

     tar -xf VOCtrainval_11-May-2012.tar

   - Create an environment variable pointing to the data folder.

     export PASCAL_VOC_ROOT=$(pwd)/VOCdevkit/VOC2012

5. Install the LocalizationLabelNoise dependencies.

   pip install -r requirements.txt

   Note: on some machines, the mmcv installation failed but worked smoothly with the following command:

   pip install "mmcv>=2.0.0" -f https://download.openmmlab.com/mmcv/dist/cu116/torch1.12.0/index.html

6. Download the weights of the ConvNeXt model, which serves as the backbone in our experiments.

   mkdir model

   wget -O model/convnext_tiny_22k_224.pth https://dl.fbaipublicfiles.com/convnext/convnext_tiny_22k_224.pth

Note: All scripts expect the environment variable PASCAL_VOC_ROOT to be set as described in the installation steps. Alternatively, you may provide the root path of the dataset via the command-line parameter pascal-voc-root.
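Here is a minimal sketch of how a script could resolve the dataset root from either source. It assumes two things not confirmed by the repository: the parameter is spelled --pascal-voc-root, and the command line takes precedence over PASCAL_VOC_ROOT. The helper name is hypothetical, and the repository's scripts may implement this differently.

```python
import argparse
import os
from pathlib import Path
from typing import Mapping, Optional, Sequence


def resolve_voc_root(argv: Optional[Sequence[str]] = None,
                     environ: Optional[Mapping[str, str]] = None) -> Path:
    """Return the PascalVOC root directory (hypothetical helper).

    Assumption: the CLI flag is spelled --pascal-voc-root and takes
    precedence over the PASCAL_VOC_ROOT environment variable.
    """
    environ = os.environ if environ is None else environ
    parser = argparse.ArgumentParser(add_help=False)
    parser.add_argument("--pascal-voc-root", default=None)
    # Ignore all other script arguments; only the dataset root matters here.
    args, _ = parser.parse_known_args(argv)

    root = args.pascal_voc_root or environ.get("PASCAL_VOC_ROOT")
    if not root:
        raise SystemExit(
            "PASCAL_VOC_ROOT is not set and --pascal-voc-root was not given"
        )
    return Path(root)
```

Called without arguments inside a script, resolve_voc_root() reads the flag from sys.argv and otherwise falls back to the exported variable.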

To generate the LLRN training dataset, run generate_noisy_dataset.py and provide the desired noise percentage of the modified bounding boxes as an integer, e.g., 30.
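The precise noise model is defined by generate_noisy_dataset.py and the paper. Purely to illustrate what a percentage-based box error could mean, the sketch below shifts each box edge by a uniformly sampled offset. The offset is at most the given percentage of the box width (for x coordinates) or height (for y coordinates). This noise model and the function names are assumptions, not necessarily what the repository does.

```python
import random
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax) in pixels


def _clip(value: float, upper: float) -> float:
    """Clamp a coordinate to the image range [0, upper]."""
    return min(max(value, 0.0), float(upper))


def corrupt_box(box: Box, percentage: int, img_w: int, img_h: int,
                rng: random.Random) -> Box:
    """Shift every edge by up to `percentage` % of the box width/height.

    Hypothetical noise model for illustration only.
    """
    xmin, ymin, xmax, ymax = box
    max_dx = (xmax - xmin) * percentage / 100.0
    max_dy = (ymax - ymin) * percentage / 100.0

    x0 = _clip(xmin + rng.uniform(-max_dx, max_dx), img_w)
    x1 = _clip(xmax + rng.uniform(-max_dx, max_dx), img_w)
    y0 = _clip(ymin + rng.uniform(-max_dy, max_dy), img_h)
    y1 = _clip(ymax + rng.uniform(-max_dy, max_dy), img_h)

    # Large offsets can swap opposite edges; sort to keep min <= max.
    x0, x1 = sorted((x0, x1))
    y0, y1 = sorted((y0, y1))
    return (x0, y0, x1, y1)


def corrupt_boxes(boxes: List[Box], percentage: int, img_w: int, img_h: int,
                  seed: int = 0) -> List[Box]:
    """Corrupt all boxes of one image reproducibly (fixed seed)."""
    rng = random.Random(seed)
    return [corrupt_box(b, percentage, img_w, img_h, rng) for b in boxes]
```

Seeding the random generator, as corrupt_boxes does, keeps a corrupted dataset reproducible across runs.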

Example:

python generate_noisy_dataset.py 30

To analyze the average intersection-over-union (IoU) of the noisy dataset with respect to the ground truth, use analyze_noisy_dataset_iou.py. The script offers two ways to refer to the noisy dataset:

- Use the command-line option --box-error-percentage and provide the noise percentage. The script then constructs the path to the noisy labels, assuming that the above command has been used to generate the noisy data.
- Use the command-line option --noisy-annotation-dir and specify the directory of noisy annotations explicitly.
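The average IoU compares each noisy box with its ground-truth counterpart. The following minimal sketch computes it for one pair of PascalVOC XML files and relies on three assumptions:

- Noisy and ground-truth files list their objects in the same order.
- Boxes are treated as continuous coordinates.
- --box-error-percentage 30 maps to an annotation subdirectory Annotations_box_corruption_30 under the dataset root. This naming is inferred from the detector section of this README, not verified against the script.

The helper names are hypothetical.

```python
import xml.etree.ElementTree as ET
from pathlib import Path
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def resolve_noisy_dir(voc_root: Path, box_error_percentage: Optional[int] = None,
                      explicit_dir: Optional[Path] = None) -> Path:
    """Mimic the two CLI options: an explicit directory wins over the percentage.

    The Annotations_box_corruption_<p> naming is an assumption.
    """
    if explicit_dir is not None:
        return Path(explicit_dir)
    if box_error_percentage is None:
        raise ValueError("pass --box-error-percentage or --noisy-annotation-dir")
    return Path(voc_root) / f"Annotations_box_corruption_{box_error_percentage}"


def read_voc_boxes(xml_text: str) -> List[Box]:
    """Read the bounding box of every <object> in a PascalVOC annotation file."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.findall("object"):
        bndbox = obj.find("bndbox")  # object box only, not nested <part> boxes
        boxes.append(tuple(float(bndbox.findtext(k))
                           for k in ("xmin", "ymin", "xmax", "ymax")))
    return boxes


def iou(a: Box, b: Box) -> float:
    """Intersection over union in continuous coordinates (no +1 pixel convention)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def mean_iou(gt: List[Box], noisy: List[Box]) -> float:
    """Average IoU over corresponding boxes (objects matched by position)."""
    if len(gt) != len(noisy) or not gt:
        raise ValueError("need the same, non-zero number of ground-truth and noisy boxes")
    return sum(iou(g, n) for g, n in zip(gt, noisy)) / len(gt)
```

For a whole dataset, one would iterate over the files in Annotations and the matching files in the resolved noisy directory. The sketch leaves open whether the script averages over all boxes or over per-image means.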

Example:

python analyze_noisy_dataset_iou.py --box-error-percentage 30

Training the LLRN requires the weights of the ConvNeXt backbone, which are passed as a file via the --weights argument.

Our experiments are based on the ConvNeXt tiny model, whose weights were downloaded during the last installation step (model/convnext_tiny_22k_224.pth).

If a different backbone such as ConvNeXt base is to be used, you may need to adapt the depths and dims of the FasterRCNN model accordingly.
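For reference, the standard ConvNeXt variants differ only in two settings. depths is the number of blocks per stage, and dims is the number of channels per stage. The values below are those published with ConvNeXt. The helper function is hypothetical, since this README does not show where the repository sets depths and dims. The weights passed via --weights must match the chosen variant.

```python
# Standard ConvNeXt stage configurations:
# depths = blocks per stage, dims = channels per stage.
CONVNEXT_VARIANTS = {
    "tiny":   {"depths": [3, 3, 9, 3],  "dims": [96, 192, 384, 768]},
    "small":  {"depths": [3, 3, 27, 3], "dims": [96, 192, 384, 768]},
    "base":   {"depths": [3, 3, 27, 3], "dims": [128, 256, 512, 1024]},
    "large":  {"depths": [3, 3, 27, 3], "dims": [192, 384, 768, 1536]},
    "xlarge": {"depths": [3, 3, 27, 3], "dims": [256, 512, 1024, 2048]},
}


def backbone_kwargs(variant: str) -> dict:
    """Return depths/dims for a ConvNeXt variant (hypothetical helper)."""
    try:
        cfg = CONVNEXT_VARIANTS[variant]
    except KeyError:
        raise ValueError(
            f"unknown ConvNeXt variant {variant!r}; choose from {sorted(CONVNEXT_VARIANTS)}"
        ) from None
    # Return copies so callers cannot mutate the shared table.
    return {"depths": list(cfg["depths"]), "dims": list(cfg["dims"])}
```

dims[-1] also sets the channel count of the last backbone stage (768 for tiny, 1024 for base). Layers consuming backbone features would therefore likely need the same adjustment.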

There are three model types available:

- single-pass: --model-type single
- multi-pass: --model-type multi
- cascade-pass: --model-type cascade
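The following sketch illustrates one plausible reading of the three model types; it is not taken from the repository's code. A plain function stands in for one LLRN forward pass:

- single-pass applies one network once.
- multi-pass reapplies the same network --num-stages times, each time starting from the previous output.
- cascade-pass chains separate stage networks, by analogy to cascaded detectors.

```python
from typing import Callable, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)
RefineFn = Callable[[Box], Box]  # stand-in for one LLRN forward pass


def single_pass(refine: RefineFn, box: Box) -> Box:
    """--model-type single: one network, applied once."""
    return refine(box)


def multi_pass(refine: RefineFn, box: Box, num_stages: int) -> Box:
    """--model-type multi: the same network (shared weights) applied num_stages times."""
    for _ in range(num_stages):
        box = refine(box)  # each pass starts from the previous refinement
    return box


def cascade_pass(stages: Sequence[RefineFn], box: Box) -> Box:
    """--model-type cascade: distinct stage networks applied one after another."""
    for refine in stages:
        box = refine(box)
    return box
```

Consider a toy refine function that moves a box halfway toward the true box. It shows why repeated passes can help: three multi-pass iterations leave one eighth of the initial error.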

Run python train_LLRN.py --help to obtain the full set of available CLI parameters.

Example (single-pass):

python train_LLRN.py --model-type single --box-error-percentage 30

Example (multi-pass):

python train_LLRN.py --model-type multi --num-stages 3 --box-error-percentage 30

Running the LLRN evaluation requires:

- generating the noisy dataset as described above

- training the LLRN model as described above

The evaluation script expects the same command line arguments as the training script.

There is an additional option, --visualize-every-kth-batch, which visualizes one output of every k-th batch.

To visualize the predictions for the entire validation set, use --batch-size 1 together with --visualize-every-kth-batch 1.
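Checkpoint file names follow the pattern <epoch>_val_loss_<val_loss>.pth (see the example below). Picking the checkpoint with the lowest validation loss and assembling the matching evaluation call can therefore be scripted. The following is a minimal sketch under that assumption, with hypothetical helper names. Any further training arguments (e.g., --num-stages for the multi-pass model) have to be passed via extra_args, since the evaluation script expects the same arguments as training.

```python
import re
from pathlib import Path
from typing import List, Optional, Sequence

# Matches names such as "12_val_loss_0.0345.pth" (<epoch>_val_loss_<val_loss>.pth).
CKPT_RE = re.compile(r"^(\d+)_val_loss_([0-9.eE+-]+)\.pth$")


def best_checkpoint(names: Sequence[str]) -> Optional[str]:
    """Return the checkpoint name with the lowest validation loss, or None."""
    best_name, best_loss = None, float("inf")
    for name in names:
        match = CKPT_RE.match(name)
        if match is None:
            continue  # not a checkpoint file
        try:
            loss = float(match.group(2))
        except ValueError:
            continue  # malformed loss value
        if loss < best_loss:
            best_name, best_loss = name, loss
    return best_name


def eval_command(checkpoint: Path, model_type: str, box_error_percentage: int,
                 visualize_every_kth_batch: Optional[int] = None,
                 extra_args: Sequence[str] = ()) -> List[str]:
    """Assemble an eval_LLRN.py call that mirrors the training arguments."""
    cmd = ["python", "eval_LLRN.py", str(checkpoint),
           "--model-type", model_type,
           "--box-error-percentage", str(box_error_percentage),
           *extra_args]
    if visualize_every_kth_batch is not None:
        cmd += ["--visualize-every-kth-batch", str(visualize_every_kth_batch)]
    return cmd
```

The candidate names could come from [p.name for p in run_dir.glob("*.pth")] for a hypothetical run directory run_dir. The resulting list can be handed to subprocess.run(..., check=True).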

Example (single-pass):

python eval_LLRN.py output/single<settings>/<epoch>_val_loss_<val_loss>.pth --model-type single --box-error-percentage 30 --visualize-every-kth-batch 1

Once the LLRN has been trained, it can be used to create the refined dataset.

For detailed information on the required command-line arguments, please refer to the section on evaluation above.

Use the --description <some-description> command-line option to distinguish between different refined datasets.
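Conceptually, a refined dataset keeps the PascalVOC annotation files and only replaces the box coordinates. The following sketch shows one way to write refined boxes back into a VOC annotation, under two assumptions:

- There is one refined box per <object>, in document order.
- Coordinates are rounded to integer pixels, as in the original VOC files.

The function name is hypothetical. This README does not say whether generate_refined_dataset.py rounds coordinates or how it names its output directory.

```python
import xml.etree.ElementTree as ET
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def write_refined_boxes(xml_text: str, refined: Sequence[Box]) -> str:
    """Return a copy of a PascalVOC annotation with every object's box replaced.

    Assumes one refined box per <object>, in document order. Coordinates are
    rounded to integer pixels (an assumption about the storage format).
    """
    root = ET.fromstring(xml_text)
    objects = root.findall("object")
    if len(objects) != len(refined):
        raise ValueError(f"expected {len(objects)} boxes, got {len(refined)}")
    for obj, box in zip(objects, refined):
        bndbox = obj.find("bndbox")
        for tag, value in zip(("xmin", "ymin", "xmax", "ymax"), box):
            bndbox.find(tag).text = str(int(round(value)))
    # Class names and all other fields are left untouched.
    return ET.tostring(root, encoding="unicode")
```

Applying this to every file in Annotations and writing the results to a new subdirectory yields a dataset that mmdetection can load via the ann_subdir option described below.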

Example (single-pass):

python generate_refined_dataset.py <path_to_model_checkpoint>.pth --model-type single --box-error-percentage 30 --description some-refined-dataset

To visualize the ground-truth, noisy, or refined annotations, use the following script:

python visualize_dataset.py [--visualize-gt] [--visualize-noisy-labels] [--visualize-training-data] [--visualize-refined-labels --refined-labels-dir Refined_box_corruption_30_single1_default]

To train the state-of-the-art object detector based on ConvNeXt and Faster R-CNN in mmdetection v3.0.0, use the following command. Via '--cfg-options train_dataloader.dataset.ann_subdir', you can specify which annotation subdirectory is used. Options are the default 'Annotations', a corrupted version such as 'Annotations_box_corruption_30', or an LLRN-refined version such as 'Refined_box_corruption_30_single1_default'. If your machine has multiple GPUs, you can select the GPU via 'export CUDA_VISIBLE_DEVICES=' followed by the desired GPU ID. For the publication, we trained on a single GPU with a batch size of 4 using mmdetection v2.25.0 (a batch size of 2 as well as mmdetection v3.0.0 worked similarly well).

python mmdetection/tools/train.py configs/faster_rcnn/faster_rcnn_ConvNext_CustomNeck_VOC.py --work-dir /<desired_output_dir>/ --cfg-options train_dataloader.dataset.ann_subdir=<desired_annotations_dir, e.g. Annotations_box_corruption_30>

Besides the regular evaluation during training (typically one evaluation per epoch, computing the mAP for IoU thresholds of 0.5, 0.6, 0.7, 0.8, and 0.9), you can also trigger a dedicated evaluation of a specific checkpoint with the following command:

python mmdetection/tools/test.py configs/faster_rcnn/faster_rcnn_ConvNext_CustomNeck_VOC.py <path_to_above_work_dir>/epoch_<xx>.pth

This project is licensed under the MIT license. Please see the LICENSE file for more information.

SPDX-License-Identifier: MIT

@InProceedings{Bar_2023_CVPR,

author = {B\"ar, Andreas and Uhrig, Jonas and Umesh, Jeethesh Pai and Cordts, Marius and Fingscheidt, Tim},

title = {{A Novel Benchmark for Refinement of Noisy Localization Labels in Autolabeled Datasets for Object Detection}},

booktitle = {Proc. of CVPR - Workshops},

month = {June},

year = {2023},

address = {Vancouver, BC, Canada},

pages = {3850-3859}

}