⚡️ DeepLX API npm package.

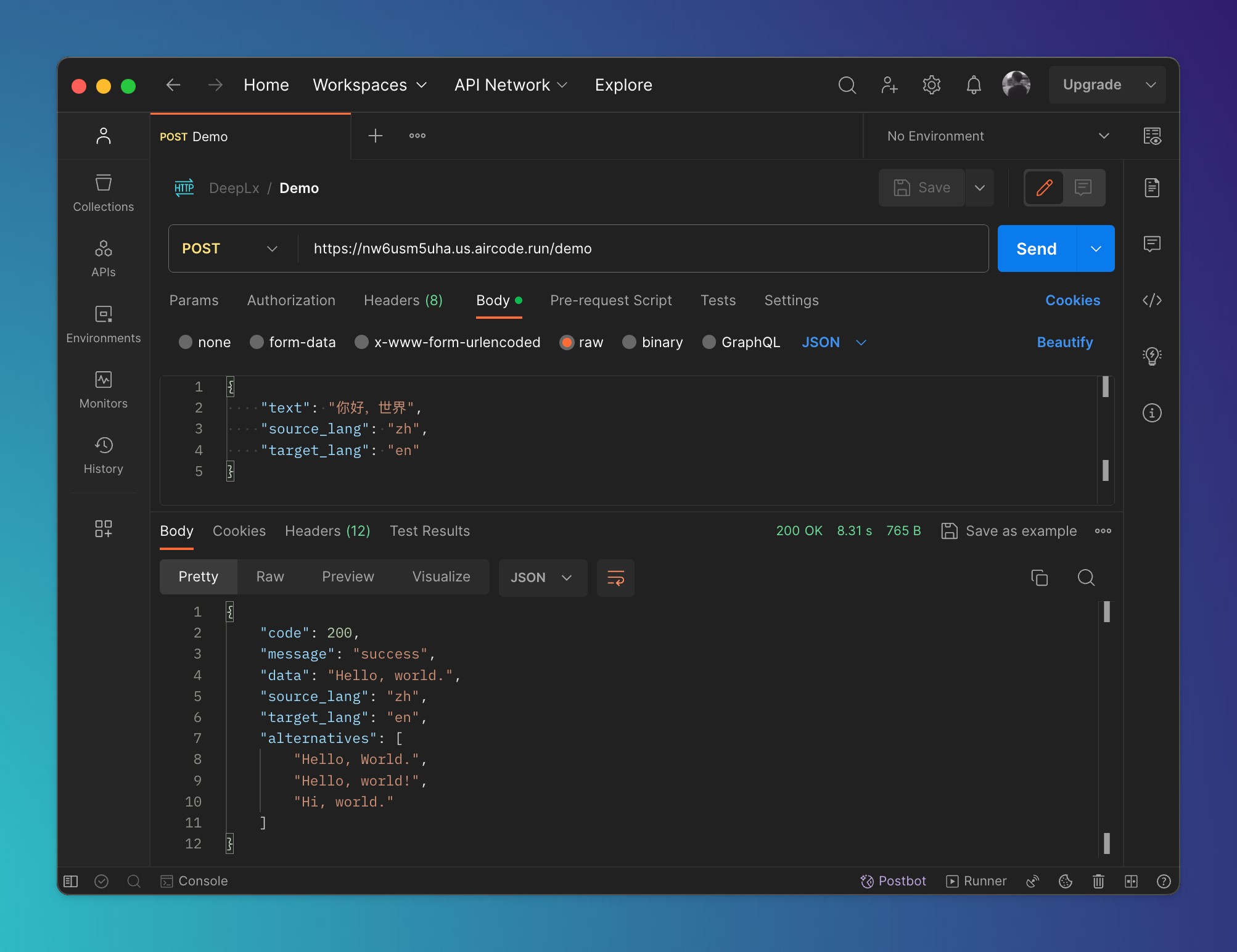

```bash
npm i @ifyour/deeplx
```

Install and use this package in your backend service; any backend framework will work. Here's a demo of my deployment on AirCode; you can click here to deploy one of your own.

```js
import { query } from '@ifyour/deeplx';

export default async function (params, context) {
  return await query(params);
}
```

```bash
curl --location 'https://nw6usm5uha.us.aircode.run/demo' \
--header 'Content-Type: application/json' \
--data '{
    "text": "你好,世界",
    "source_lang": "zh",
    "target_lang": "en"
}'
```
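The same request can also be sent from JavaScript. The following is a minimal sketch, assuming a runtime with a global `fetch` (Node.js 18+ or a browser). The helper names `buildTranslateRequest` and `translate` are hypothetical and not part of `@ifyour/deeplx`; only the demo URL and the field names `text`, `source_lang`, and `target_lang` come from the curl example.

```javascript
// Hypothetical helpers for illustration; not part of @ifyour/deeplx.
// The URL is the AirCode demo endpoint used in the curl example.
const DEMO_ENDPOINT = 'https://nw6usm5uha.us.aircode.run/demo';

// Build the options object passed to fetch() for a translation request.
function buildTranslateRequest(text, sourceLang, targetLang) {
  return {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({
      text,
      source_lang: sourceLang,
      target_lang: targetLang,
    }),
  };
}

// Send the request and return the parsed JSON body.
async function translate(text, sourceLang, targetLang, endpoint = DEMO_ENDPOINT) {
  const response = await fetch(
    endpoint,
    buildTranslateRequest(text, sourceLang, targetLang)
  );
  if (!response.ok) {
    throw new Error(`Translation request failed with HTTP ${response.status}`);
  }
  return response.json();
}
```

Calling `translate('你好,世界', 'zh', 'en')` returns a promise for the parsed response object. Keeping the request construction in a separate function makes it easy to check the payload without touching the network.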

```json
{
  "code": 200,
  "message": "success",
  "data": "Hello, world.",
  "source_lang": "zh",
  "target_lang": "en",
  "alternatives": ["Hello, World.", "Hello, world!", "Hi, world."]
}
```

For local development:

```bash
yarn install

# You need to install bun, please refer to https://bun.sh
yarn run dev
yarn run test
yarn run lint --fix
```

Based on current testing, Cloudflare and Cloudflare-based edge function runtimes (Vercel) cannot correctly request the DeepL server and fail with a 525 error. A detailed description of the issue can be found here.

In this case, the problem can be solved by using a DeepL proxy server; please refer to the code example.
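One possible way to wire in such a workaround is to try the direct request first and switch to the proxy only when a 525 is reported. This is a sketch under stated assumptions, not the package's own mechanism: `withProxyFallback`, `direct`, and `viaProxy` are hypothetical names, and the caller is assumed to supply two async functions that throw an error carrying a numeric `status` property when the HTTP call fails.

```javascript
// Hypothetical sketch; none of these names are part of @ifyour/deeplx.
// `direct` and `viaProxy` are caller-supplied async functions that perform
// the translation request and throw an error with a numeric `status`
// property when the HTTP call fails.
function withProxyFallback(direct, viaProxy) {
  return async function translate(params) {
    try {
      return await direct(params);
    } catch (error) {
      // 525 is the SSL handshake failure seen on Cloudflare-based runtimes.
      if (error && error.status === 525) {
        return viaProxy(params);
      }
      // Unrelated failures are rethrown so they are not masked.
      throw error;
    }
  };
}
```

The wrapper leaves every other error untouched, so the proxy is used only for the specific failure described above.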

DeepLX is available under the MIT license.