Marxan Cloud platform

Welcome to the Marxan Cloud platform. We aim to bring to the planet the finest workflows for conservation planning.

Quick start

This repository is a monorepo which includes all the microservices of the Marxan Cloud platform. Each microservice lives in a top-level folder.

Services are packaged as Docker images.

Microservices are set up to be run via Docker Compose for local development.

In CI, testing, staging and production environments, microservices are orchestrated via Kubernetes (forthcoming).

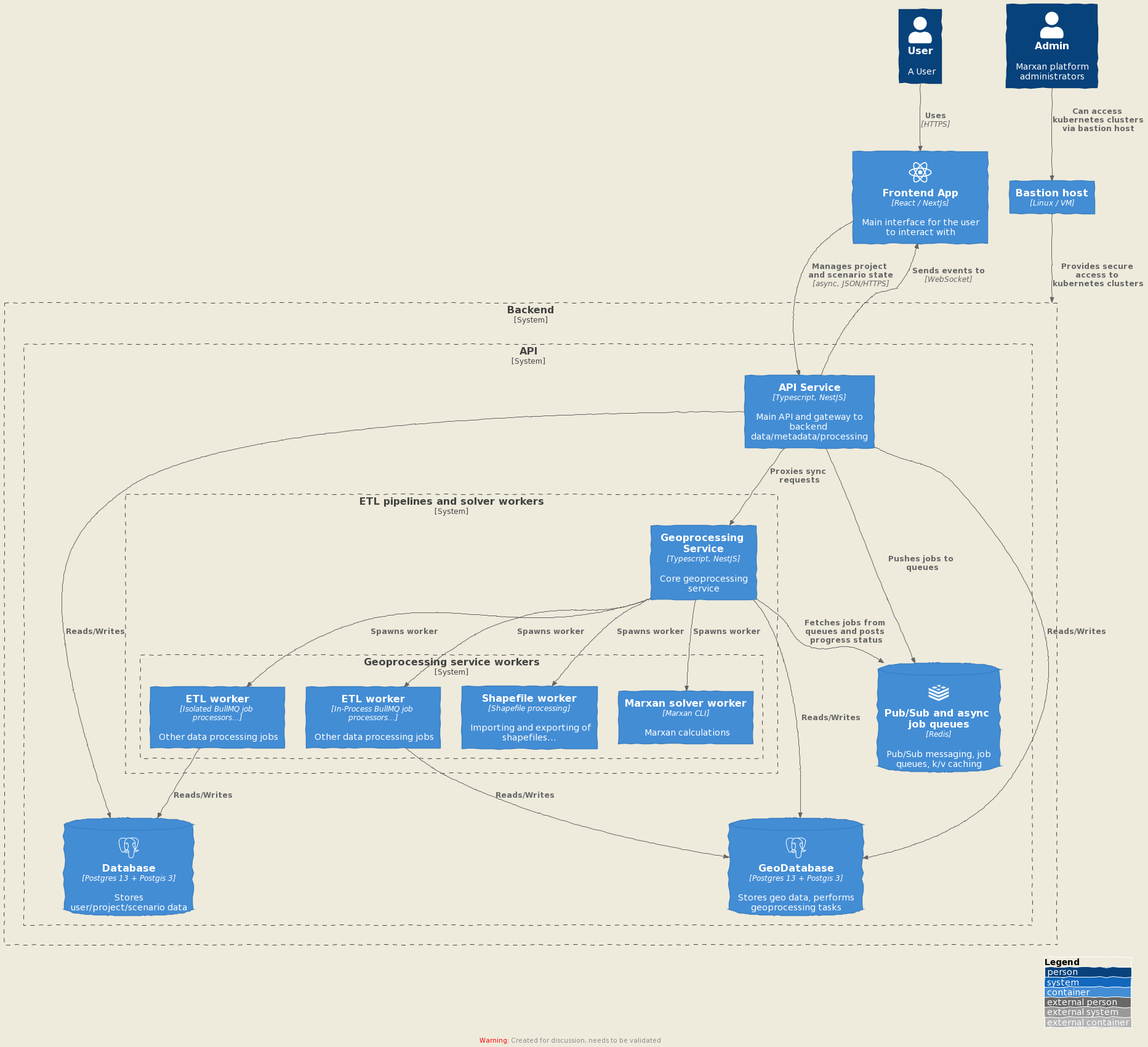

Platform architecture

See ARCHITECTURE_infrastructure.md for details.

Prerequisites

- Install Docker (19.03+)

- Install Docker Compose

- Create an .env file at the root of the repository, defining all the required environment variables listed below. In most cases, for variables other than secrets, the defaults in env.default may just work (YMMV).

  - API_AUTH_JWT_SECRET (string, required): a base64-encoded secret for the signing of API JWT tokens; can be generated via a command such as dd if=/dev/urandom bs=1024 count=1 | base64 -w0
  - API_AUTH_X_API_KEY (string, required): a secret used as API key for requests from the Geoprocessing service to the API; can be generated similarly to API_AUTH_JWT_SECRET
  - API_SERVICE_PORT (number, required): the port exposed by Docker for the API service; when running an instance under Docker Compose, NestJS will always be listening on port 3000 internally, and this is mapped to API_SERVICE_PORT when exposed outside of the container
  - API_SERVICE_URL (URL, optional, default is http://api:3000): the internal (docker-compose or k8s cluster) address where the API service can be reached by other services running in the cluster
  - API_RUN_MIGRATIONS_ON_STARTUP (true|false, optional, default is true): set this to false if migrations for the API service should not run automatically on startup
  - API_LOGGING_MUTE_ALL (boolean, optional, default is false): can be used to mute all logging (for example, in CI pipelines) irrespective of Node environment and other settings that would normally affect the logging verbosity of the API
  - API_SHARED_FILE_STORAGE_LOCAL_PATH (string, optional, default is /tmp/storage): set this to a filesystem path to override the default temporary storage location where shared volumes for files shared from the API to the Geoprocessing service are mounted; the mount point for shared storage (via Docker volumes in development environments and via Persistent Volumes in Kubernetes environments) should be configured accordingly
  - APP_SERVICE_PORT (number, required): the port on which the App service should listen on the local machine
  - POSTGRES_API_SERVICE_PORT (number, required): the port on which the PostgreSQL service should listen on the local machine
  - API_POSTGRES_USER (string, required): username to be used for the PostgreSQL connection (API)
  - API_POSTGRES_PASSWORD (string, required): password to be used for the PostgreSQL connection (API)
  - API_POSTGRES_DB (string, required): name of the database to be used for the PostgreSQL connection (API)
  - GEOPROCESSING_SERVICE_PORT (number, required): the port exposed by Docker for the Geoprocessing service; when running an instance under Docker Compose, NestJS will always be listening on port 3000 internally, and this is mapped to GEOPROCESSING_SERVICE_PORT when exposed outside of the container
  - POSTGRES_GEO_SERVICE_PORT (number, required): the port on which the geoprocessing PostgreSQL service should listen on the local machine
  - GEOPROCESSING_RUN_MIGRATIONS_ON_STARTUP (true|false, optional, default is true): set this to false if migrations for the Geoprocessing service should not run automatically on startup
  - GEO_POSTGRES_USER (string, required): username to be used for the geoprocessing PostgreSQL connection (API)
  - GEO_POSTGRES_PASSWORD (string, required): password to be used for the geoprocessing PostgreSQL connection (API)
  - GEO_POSTGRES_DB (string, required): name of the database to be used for the geoprocessing PostgreSQL connection (API)
  - POSTGRES_AIRFLOW_SERVICE_PORT (number, required): the port on which the PostgreSQL for Airflow service should listen on the local machine
  - AIRFLOW_PORT (number, required): the port on which the Airflow service should listen on the local machine
  - REDIS_API_SERVICE_PORT (number, required): the port on which the Redis service should listen on the local machine
  - REDIS_COMMANDER_PORT (number, required): the port on which the Redis Commander service should listen on the local machine
  - SPARKPOST_APIKEY (string, required): an API key to be used for SparkPost, an email service
  - SPARKPOST_ORIGIN (string, required): the URL of a SparkPost API service: this would normally be either https://api.sparkpost.com or https://api.eu.sparkpost.com (note: no trailing / character, or the SparkPost API client library will not work correctly); please check SparkPost's documentation and the client library's own documentation for details
  - APPLICATION_BASE_URL (string, required): the public URL of the frontend application on the running instance (without trailing slash). This URL will be used to compose links sent via email for some flows of the platform, such as password recovery or sign-up confirmation (see also PASSWORD_RESET_TOKEN_PREFIX and SIGNUP_CONFIRMATION_TOKEN_PREFIX)
  - PASSWORD_RESET_TOKEN_PREFIX (string, required): the path that should be appended after the application base URL (APPLICATION_BASE_URL), corresponding to the frontend route where users are redirected from password reset emails to complete the process of resetting their password; the reset token is appended at the end of this URL to compose the actual link that is included in password reset emails
  - PASSWORD_RESET_EXPIRATION (string, optional, default is 1800000 milliseconds: 30 minutes): the time (in milliseconds) for which a password reset token is valid
  - SIGNUP_CONFIRMATION_TOKEN_PREFIX (string, required): the path that should be appended after the application base URL (APPLICATION_BASE_URL), corresponding to the frontend route where users are redirected from sign-up confirmation emails to complete the process of validating their account; the validation token is appended at the end of this URL to compose the actual link that is included in sign-up confirmation emails
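To illustrate how APPLICATION_BASE_URL, the token prefixes and PASSWORD_RESET_EXPIRATION fit together, here is a minimal Python sketch. It is not the API's actual implementation (the services are NestJS applications); the function names and example values are hypothetical, and it assumes the prefix starts with a / and that the token is appended by plain concatenation, as the descriptions above suggest.

```python
from datetime import datetime, timedelta, timezone

# Default validity of a password reset token, per PASSWORD_RESET_EXPIRATION.
DEFAULT_PASSWORD_RESET_EXPIRATION_MS = 1_800_000  # 30 minutes


def compose_email_link(base_url: str, token_prefix: str, token: str) -> str:
    """Concatenate base URL, token prefix and token, in that order.

    Assumes base_url has no trailing slash and token_prefix starts with "/".
    """
    return f"{base_url}{token_prefix}{token}"


def token_expiry(
    issued_at: datetime,
    expiration_ms: int = DEFAULT_PASSWORD_RESET_EXPIRATION_MS,
) -> datetime:
    """Return the instant at which a token issued at issued_at stops being valid."""
    return issued_at + timedelta(milliseconds=expiration_ms)


# Hypothetical example values, for illustration only.
link = compose_email_link(
    "https://marxan.example.org",   # APPLICATION_BASE_URL (no trailing slash)
    "/auth/reset-password?token=",  # PASSWORD_RESET_TOKEN_PREFIX
    "abc123",                       # reset token
)
print(link)  # https://marxan.example.org/auth/reset-password?token=abc123

issued = datetime(2021, 1, 1, 12, 0, tzinfo=timezone.utc)
print(token_expiry(issued).isoformat())  # 2021-01-01T12:30:00+00:00
```

A trailing slash on the base URL would produce a double slash in the composed link under this scheme, which is one reason to keep APPLICATION_BASE_URL free of it.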

The PostgreSQL credentials are used to create a database user when the PostgreSQL container is started for the first time. PostgreSQL data is persisted via a Docker volume.
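Before starting the services, it can help to check that the .env file defines every required variable from this section. The following is a minimal Python sketch and not part of the repository: the helper names (parse_env, missing_required, generate_secret) are hypothetical, the parser only understands simple KEY=value lines, and generate_secret is a rough Python counterpart of the dd command suggested above.

```python
import base64
import os

# Variables marked "required" in the list above.
REQUIRED = [
    "API_AUTH_JWT_SECRET", "API_AUTH_X_API_KEY", "API_SERVICE_PORT",
    "APP_SERVICE_PORT", "POSTGRES_API_SERVICE_PORT", "API_POSTGRES_USER",
    "API_POSTGRES_PASSWORD", "API_POSTGRES_DB", "GEOPROCESSING_SERVICE_PORT",
    "POSTGRES_GEO_SERVICE_PORT", "GEO_POSTGRES_USER", "GEO_POSTGRES_PASSWORD",
    "GEO_POSTGRES_DB", "POSTGRES_AIRFLOW_SERVICE_PORT", "AIRFLOW_PORT",
    "REDIS_API_SERVICE_PORT", "REDIS_COMMANDER_PORT", "SPARKPOST_APIKEY",
    "SPARKPOST_ORIGIN", "APPLICATION_BASE_URL", "PASSWORD_RESET_TOKEN_PREFIX",
    "SIGNUP_CONFIRMATION_TOKEN_PREFIX",
]


def parse_env(text: str) -> dict:
    """Parse simple KEY=value lines; blank lines and # comments are skipped."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def missing_required(values: dict) -> list:
    """Return the required variable names that are absent or empty."""
    return [name for name in REQUIRED if not values.get(name)]


def generate_secret(num_bytes: int = 1024) -> str:
    """Base64-encode random bytes, similar to dd ... | base64 -w0."""
    return base64.b64encode(os.urandom(num_bytes)).decode("ascii")


if __name__ == "__main__":
    if os.path.exists(".env"):
        with open(".env", encoding="utf-8") as handle:
            missing = missing_required(parse_env(handle.read()))
        print("Missing variables:", ", ".join(missing) if missing else "none")
    else:
        print("No .env file found in the current directory")
```

The sketch deliberately ignores quoting and variable interpolation, so a value that relies on those features would need a fuller parser.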

Running API and Geoprocessing services natively

When running the API and Geoprocessing services without relying on Docker Compose for container orchestration, the following two environment variables can be used to set on which port the NestJS/Express daemon should be listening, instead of the hardcoded port 3000 which is used in Docker setups.

- API_DAEMON_LISTEN_PORT (number, optional, default is 3000): port on which the Express daemon of the API service will listen
- GEOPROCESSING_DAEMON_LISTEN_PORT (number, optional, default is 3000): port on which the Express daemon of the Geoprocessing service will listen
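The fallback behaviour described above (use the variable if set, otherwise port 3000) can be sketched as follows. This is a Python illustration of the logic only, since the services themselves are NestJS applications; resolve_listen_port is a hypothetical helper, and the range check is an added assumption rather than documented behaviour.

```python
import os

DEFAULT_DAEMON_LISTEN_PORT = 3000


def resolve_listen_port(var_name, environ=os.environ,
                        default=DEFAULT_DAEMON_LISTEN_PORT):
    """Return the port set in var_name, or the default if unset or empty."""
    raw = environ.get(var_name)
    if raw is None or raw.strip() == "":
        return default
    port = int(raw)  # raises ValueError for non-numeric values
    if not 0 < port < 65536:
        raise ValueError(f"{var_name} must be between 1 and 65535, got {port}")
    return port


# With the variable unset, the default applies.
print(resolve_listen_port("API_DAEMON_LISTEN_PORT", environ={}))  # 3000

# With the variable set, its value is used instead.
print(resolve_listen_port(
    "GEOPROCESSING_DAEMON_LISTEN_PORT",
    environ={"GEOPROCESSING_DAEMON_LISTEN_PORT": "3040"},
))  # 3040
```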

Running the Marxan Cloud platform

Run make start to start all the services.

Run make start-api to start the API services.

Running the notebooks

Run make notebooks to start the jupyterlab service.

Seed data

To seed the geodb database from a clean state, run:

    make seed-geodb-data

This will populate the metadata DB and trigger the geo-pipelines to seed the geoDB.

Note: a full database setup requires at least 16GB of RAM and 40GB of disk space to complete some of these tasks (the GADM and WDPA data import pipelines). The number of CPU cores also affects the time needed to seed a new instance with the complete GADM and WDPA datasets.
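The resource requirements in the note can be checked up front with a short script. This is a hedged Python sketch, not something shipped with the repository: the function names are hypothetical, the RAM lookup relies on os.sysconf and is skipped where that is unavailable, and sizes are measured in decimal gigabytes so that a nominal 16GB machine is not flagged because of memory reserved by the system.

```python
import os
import shutil

MIN_RAM_GB = 16   # from the note above
MIN_DISK_GB = 40  # from the note above
GB = 10 ** 9      # decimal gigabyte


def free_disk_gb(path="."):
    """Free space, in GB, on the filesystem containing path."""
    return shutil.disk_usage(path).free / GB


def total_ram_gb():
    """Total physical memory in GB, or None if it cannot be determined."""
    try:
        return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / GB
    except (AttributeError, ValueError, OSError):
        return None


def check_resources(ram_gb, disk_gb):
    """Return a list of human-readable problems; empty if requirements are met."""
    problems = []
    if ram_gb is not None and ram_gb < MIN_RAM_GB:
        problems.append(f"RAM: {ram_gb:.1f}GB available, {MIN_RAM_GB}GB needed")
    if disk_gb < MIN_DISK_GB:
        problems.append(f"Disk: {disk_gb:.1f}GB free, {MIN_DISK_GB}GB needed")
    return problems


if __name__ == "__main__":
    for problem in check_resources(total_ram_gb(), free_disk_gb()):
        print("WARNING:", problem)
```

The values reported are those of the machine running the script; where Docker runs inside a virtual machine with its own limits, the resources available to containers may be lower.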

Alternatively, if you only want to populate a fresh instance with a small subset of test data:

    make seed-dbs

We also provide a way to reset the database instances to a clean state (we recommend doing this regularly):

    make clean-slate

Finally, we provide a set of commands to create new database dumps, upload them to an Azure instance and restore both databases, which is faster than triggering the geodb pipelines:

    make generate-content-dumps && make upload-dump-data
    make restore-dumps

Development workflow (TBD)

We use a lightweight git flow workflow: develop, main, feature/bug fix branches, release branches (release/vX.Y.Z-etc).

Please use per component+task feature branches: <feature type>/<component>/NNNN-brief-description. For example: feature/api/12345-helm-setup.

PRs should be rebased on develop.

As feature types:

- feature
- bugfix (regular bug fix)
- hotfix (urgent bug fixes fast-tracked to main)
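The branch naming convention above can be checked mechanically, for example in a local git hook. The following Python sketch is only an illustration: is_valid_branch_name is a hypothetical helper, and the exact character rules (lowercase component names, a numeric task id of any length, a lowercase hyphen-separated description) are assumptions inferred from the single example given, not rules stated by the project.

```python
import re

# <feature type>/<component>/NNNN-brief-description
BRANCH_PATTERN = re.compile(
    r"(feature|bugfix|hotfix)"      # feature type
    r"/[a-z0-9][a-z0-9_-]*"         # component (assumed lowercase)
    r"/\d+"                         # task number (NNNN, assumed any length)
    r"-[a-z0-9]+(?:-[a-z0-9]+)*"    # brief description, hyphen-separated
)


def is_valid_branch_name(name: str) -> bool:
    """Return True if name follows the per component+task convention."""
    return BRANCH_PATTERN.fullmatch(name) is not None


print(is_valid_branch_name("feature/api/12345-helm-setup"))  # True
print(is_valid_branch_name("feature/12345-helm-setup"))      # False (no component)
print(is_valid_branch_name("chore/api/12345-helm-setup"))    # False (unknown type)
```

Long-lived branches such as develop, main and release/vX.Y.Z are intentionally outside the scope of this pattern.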

Bugs

Please use the Marxan Cloud issue tracker to report bugs.

License

(C) Copyright 2020-2021 Vizzuality.

This program is free software: you can redistribute it and/or modify it under the terms of the MIT License as included in this repository.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the MIT License for more details.

You should have received a copy of the MIT License along with this program. If not, see https://spdx.org/licenses/MIT.html.