This repository provides sample plugin Lambda functions for use with the QnABot on AWS solution.

The directions below explain how to build and deploy the plugins. For more information on the QnABot solution itself, see the QnABot on AWS Solution Implementation Guide.

Plugins to extend QnABot LLM integration

- Amazon Bedrock Embeddings and LLM: Uses Amazon Bedrock service API

- AI21 LLM: Uses AI21's Jurassic model API - requires an AI21 account with an API Key

- Anthropic LLM: Uses Anthropic's Claude model API - requires an Anthropic account with an API Key

- Llama 2 13b Chat LLM: Uses Llama 2 13b Chat model - requires Llama-2-chat model to be deployed via SageMaker JumpStart. To deploy the Llama-2-chat model in SageMaker JumpStart, see "Llama 2 foundation models from Meta are now available in Amazon SageMaker JumpStart".

- Mistral 7b Instruct LLM: Uses Mistral 7b Instruct model - requires Mistral 7b Instruct model to be deployed via SageMaker JumpStart. To deploy the Mistral 7B Instruct model in SageMaker JumpStart, see "Mistral 7B foundation models from Mistral AI are now available in Amazon SageMaker JumpStart".

- Amazon Q, your business expert: Integrates Amazon Q, your business expert (preview) with QnABot as a fallback answer source, using QnABot's Lambda hooks with CustomNoMatches/no_hits. For more information, see QnABot LambdaHook for Amazon Q, your business expert (preview).

Note: Perform this step only if you want to create deployment artifacts in your own account. Otherwise, we have hosted a CloudFormation template for 1-click deployment in the deploy section.

Pre-requisite: You must already have the AWS CLI installed and configured. You can use an AWS Cloud9 environment.

Copy the plugins GitHub repo to your local machine.

Either:

- use the git command: git clone https://github.com/aws-samples/qnabot-on-aws-plugin-samples.git

- OR, download and expand the ZIP file from the GitHub page: https://github.com/aws-samples/qnabot-on-aws-plugin-samples/archive/refs/heads/main.zip

Then use the publish.sh bash script in the project root directory to build the project and deploy CloudFormation templates to your own deployment bucket.

Run the script with up to 3 parameters:

./publish.sh <cfn_bucket> <cfn_prefix> [public]

- <cfn_bucket>: name of S3 bucket to deploy CloudFormation templates and code artifacts. If bucket does not exist, it will be created.

- <cfn_prefix>: artifacts will be copied to the path specified by this prefix (path/to/artifacts/)

- public: (optional) Adding the argument "public" will set public-read acl on all published artifacts, for sharing with any account.

To deploy to a non-default region, set environment variable AWS_DEFAULT_REGION to a region supported by QnABot. See: Supported AWS Regions

E.g. to deploy in Ireland run export AWS_DEFAULT_REGION=eu-west-1 before running the publish script.

The script downloads package dependencies, builds code zip files, and copies the templates and zip files to the cfn_bucket. When it completes, it displays the S3 URLs of the CloudFormation templates and 1-click URLs for launching stack creation in the CloudFormation console, e.g.:

------------------------------------------------------------------------------

Outputs

------------------------------------------------------------------------------

QNABOTPLUGIN-AI21-LLM

==============

- Template URL: https://s3.us-east-1.amazonaws.com/xxxxx-cfn-bucket/qnabot-plugins/ai21-llm.yaml

- Deploy URL: https://us-east-1.console.aws.amazon.com/cloudformation/home?region=us-east-1#/stacks/create/review?templateURL=https://s3.us-east-1.amazonaws.com/xxxxx-cfn-bucket/qnabot-plugins/ai21-llm.yaml&stackName=QNABOTPLUGIN-AI21-LLM

QNABOTPLUGIN-ANTHROPIC-LLM

==============

- Template URL: https://s3.us-east-1.amazonaws.com/xxxxx-cfn-bucket/qnabot-plugins/anthropic-llm.yaml

- Deploy URL: https://us-east-1.console.aws.amazon.com/cloudformation/home?region=us-east-1#/stacks/create/review?templateURL=https://s3.us-east-1.amazonaws.com/xxxxx-cfn-bucket/qnabot-plugins/anthropic-llm.yaml&stackName=QNABOTPLUGIN-ANTHROPIC-LLM

QNABOTPLUGIN-BEDROCK-EMBEDDINGS-LLM

==============

- Template URL: https://s3.us-east-1.amazonaws.com/xxxxx-cfn-bucket/qnabot-plugins/bedrock-embeddings-llm.yaml

- Deploy URL: https://us-east-1.console.aws.amazon.com/cloudformation/home?region=us-east-1#/stacks/create/review?templateURL=https://s3.us-east-1.amazonaws.com/xxxxx-cfn-bucket/qnabot-plugins/bedrock-embeddings-llm.yaml&stackName=QNABOTPLUGIN-BEDROCK-EMBEDDINGS-LLM

QNABOTPLUGIN-LLAMA-2-13B-CHAT-LLM

==============

- Template URL: https://s3.us-east-1.amazonaws.com/xxxxx-cfn-bucket/qnabot-plugins/llama-2-13b-chat-llm.yaml

- Deploy URL: https://us-east-1.console.aws.amazon.com/cloudformation/home?region=us-east-1#/stacks/create/review?templateURL=https://s3.us-east-1.amazonaws.com/xxxxx-cfn-bucket/qnabot-plugins/llama-2-13b-chat-llm.yaml&stackName=QNABOTPLUGIN-LLAMA-2-13B-CHAT-LLM

QNABOTPLUGIN-MISTRAL-7B-INSTRUCT-CHAT-LLM

==============

- Template URL: https://s3.us-east-1.amazonaws.com/xxxxx-cfn-bucket/qnabot-plugins/mistral-7b-instruct-chat-llm.yaml

- Deploy URL: https://us-east-1.console.aws.amazon.com/cloudformation/home?region=us-east-1#/stacks/create/review?templateURL=https://s3.us-east-1.amazonaws.com/xxxxx-cfn-bucket/qnabot-plugins/mistral-7b-instruct-chat-llm.yaml&stackName=QNABOTPLUGIN-MISTRAL-7B-INSTRUCT-CHAT-LLM
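The URLs in the sample output above follow a regular pattern, which can be useful when scripting around the publish step. The following is a minimal sketch that reproduces that pattern from the bucket, prefix, template file name, and region. The function names are hypothetical, and the sketch only mirrors the format shown in the sample output; it is not taken from publish.sh itself.

```python
def template_url(bucket: str, prefix: str, template: str, region: str) -> str:
    """Build the regional S3 URL for a published template.

    Mirrors the 'Template URL' lines in the sample output; the helper
    name and argument order are assumptions made for illustration.
    """
    return f"https://s3.{region}.amazonaws.com/{bucket}/{prefix.strip('/')}/{template}"


def deploy_url(tpl_url: str, stack_name: str, region: str) -> str:
    """Build the 1-click CloudFormation console URL for a template URL.

    Mirrors the 'Deploy URL' lines in the sample output.
    """
    return (
        f"https://{region}.console.aws.amazon.com/cloudformation/home"
        f"?region={region}#/stacks/create/review"
        f"?templateURL={tpl_url}&stackName={stack_name}"
    )


if __name__ == "__main__":
    # Placeholder bucket and prefix, as used in the sample output.
    url = template_url("xxxxx-cfn-bucket", "qnabot-plugins", "ai21-llm.yaml", "us-east-1")
    print(url)
    print(deploy_url(url, "QNABOTPLUGIN-AI21-LLM", "us-east-1"))
```

Passing the region explicitly keeps the sketch independent of how AWS_DEFAULT_REGION is resolved in your environment.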

Use AWS CloudFormation to deploy one or more of the sample plugin Lambdas in your own AWS account (if you do not have an AWS account, please see How do I create and activate a new Amazon Web Services account?):

- Log into the AWS console if you are not already logged in. Note: Ensure that your IAM Role/User has permissions to create and manage the necessary resources and components for this application.

- Choose one of the Launch Stack buttons below for your desired LLM and AWS region to open the AWS CloudFormation console and create a new stack.

- On the CloudFormation Create Stack page, click Next

- Enter the following parameters:

  - Stack Name: Name your stack, e.g. QNABOTPLUGIN-LLM-AI21.
  - APIKey: Your Third-Party vendor account API Key, if applicable. The API Key is securely stored in AWS Secrets Manager.
  - LLMModelId and EmbeddingsModelId (for Bedrock), LLMModel (for Anthropic), LLMModelType (for AI21): Choose one of the available models to be used depending on the model provider.

Region: us-east-1

| Plugin | Launch Stack | Template URL |
|---|---|---|
| QNABOT-BEDROCK-EMBEDDINGS-AND-LLM |  | https://s3.us-east-1.amazonaws.com/aws-ml-blog/artifacts/qnabot-on-aws-plugin-samples/bedrock-embeddings-and-llm.yaml |
| QNABOT-AI21-LLM |  | https://s3.us-east-1.amazonaws.com/aws-ml-blog/artifacts/qnabot-on-aws-plugin-samples/ai21-llm.yaml |
| QNABOT-ANTHROPIC-LLM |  | https://s3.us-east-1.amazonaws.com/aws-ml-blog/artifacts/qnabot-on-aws-plugin-samples/anthropic-llm.yaml |
| QNABOTPLUGIN-LLAMA-2-13B-CHAT-LLM |  | https://s3.us-east-1.amazonaws.com/aws-ml-blog/artifacts/qnabot-on-aws-plugin-samples/llama-2-13b-chat-llm.yaml |
| QNABOTPLUGIN-MISTRAL-7B-INSTRUCT-CHAT-LLM |  | https://s3.us-east-1.amazonaws.com/aws-ml-blog/artifacts/qnabot-on-aws-plugin-samples/mistral-7b-instruct-chat-llm.yaml |
| QNABOTPLUGIN-QNA-BOT-QBUSINESS-LAMBDAHOOK |  | https://s3.us-east-1.amazonaws.com/aws-ml-blog/artifacts/qnabot-on-aws-plugin-samples/qna_bot_qbusiness_lambdahook.yaml |

Region: us-west-2

| Plugin | Launch Stack | Template URL |
|---|---|---|
| QNABOT-BEDROCK-EMBEDDINGS-AND-LLM |  | https://s3.us-west-2.amazonaws.com/aws-ml-blog-us-west-2/artifacts/qnabot-on-aws-plugin-samples/bedrock-embeddings-and-llm.yaml |
| QNABOT-AI21-LLM |  | https://s3.us-west-2.amazonaws.com/aws-ml-blog-us-west-2/artifacts/qnabot-on-aws-plugin-samples/ai21-llm.yaml |
| QNABOT-ANTHROPIC-LLM |  | https://s3.us-west-2.amazonaws.com/aws-ml-blog-us-west-2/artifacts/qnabot-on-aws-plugin-samples/anthropic-llm.yaml |
| QNABOTPLUGIN-LLAMA-2-13B-CHAT-LLM |  | https://s3.us-west-2.amazonaws.com/aws-ml-blog-us-west-2/artifacts/qnabot-on-aws-plugin-samples/llama-2-13b-chat-llm.yaml |
| QNABOTPLUGIN-MISTRAL-7B-INSTRUCT-CHAT-LLM |  | https://s3.us-west-2.amazonaws.com/aws-ml-blog-us-west-2/artifacts/qnabot-on-aws-plugin-samples/mistral-7b-instruct-chat-llm.yaml |
| QNABOTPLUGIN-QNA-BOT-QBUSINESS-LAMBDAHOOK |  | https://s3.us-west-2.amazonaws.com/aws-ml-blog-us-west-2/artifacts/qnabot-on-aws-plugin-samples/qna_bot_qbusiness_lambdahook.yaml |

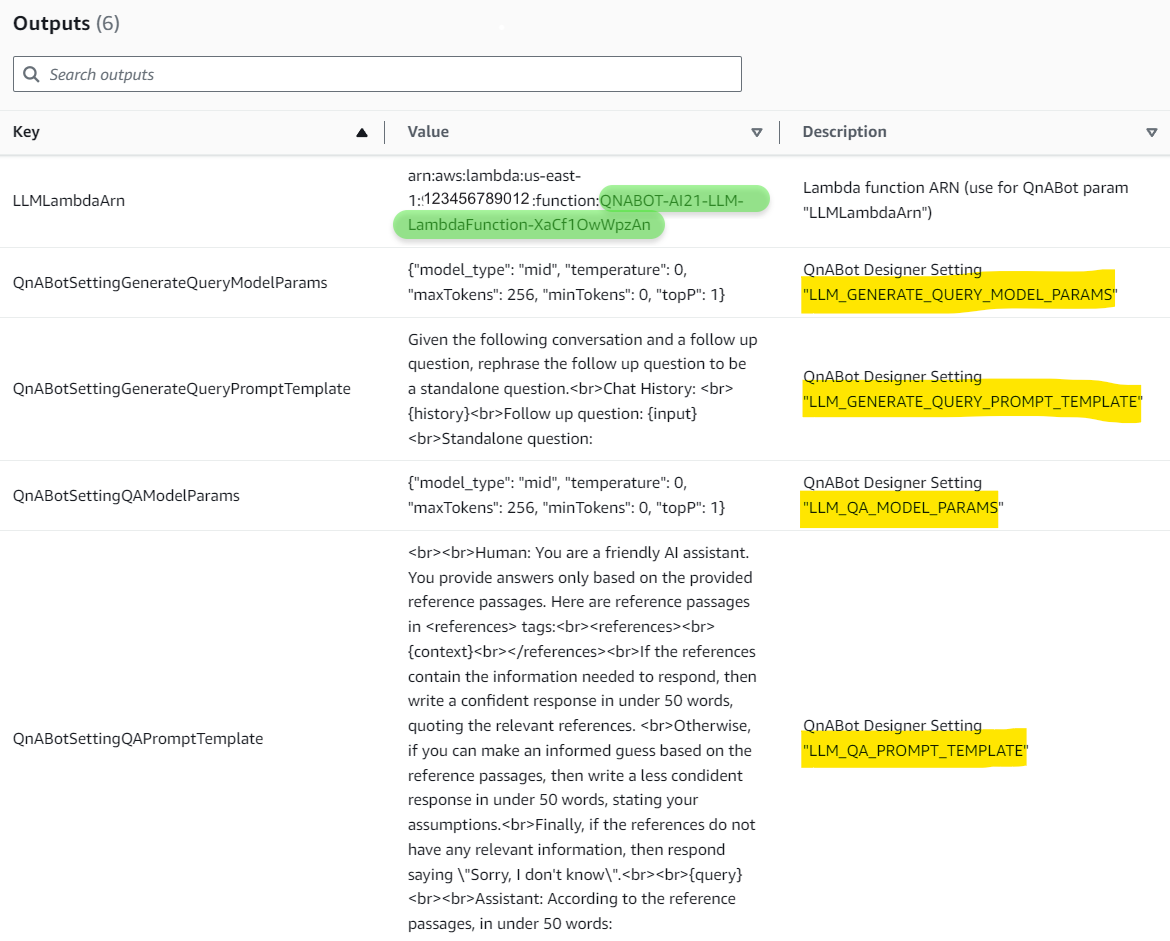

When your CloudFormation stack status is CREATE_COMPLETE, choose the Outputs tab.

- Copy the value for LLMLambdaArn

- Deploy a new QnABot Stack (instructions) or Update an existing QnABot stack (instructions), selecting LLMApi parameter as LAMBDA, and for LLMLambdaArn parameter enter the Lambda Arn copied above.

For more information, see QnABot LLM README - Lambda Function
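If you script the QnABot stack deployment rather than using the console, the two parameters named above can be passed as a CloudFormation parameter list. The following is a minimal sketch that builds that list in the ParameterKey/ParameterValue shape accepted by the CloudFormation API. The function name and the ARN sanity check are hypothetical additions for illustration, and the ARN shown is a placeholder.

```python
from typing import Dict, List


def llm_lambda_parameters(llm_lambda_arn: str) -> List[Dict[str, str]]:
    """Return CloudFormation parameters that point QnABot at an LLM plugin Lambda.

    LLMApi and LLMLambdaArn are the parameter names given in the steps above.
    The structural check on the ARN is an illustrative assumption.
    """
    parts = llm_lambda_arn.split(":")
    # Lambda function ARN shape: arn:<partition>:lambda:<region>:<account>:function:<name>
    if len(parts) < 7 or parts[0] != "arn" or parts[2] != "lambda" or parts[5] != "function":
        raise ValueError(f"Not a Lambda function ARN: {llm_lambda_arn!r}")
    return [
        {"ParameterKey": "LLMApi", "ParameterValue": "LAMBDA"},
        {"ParameterKey": "LLMLambdaArn", "ParameterValue": llm_lambda_arn},
    ]


if __name__ == "__main__":
    # Placeholder ARN; use the LLMLambdaArn value from your plugin stack Outputs tab.
    arn = "arn:aws:lambda:us-east-1:123456789012:function:example-llm-plugin"
    for param in llm_lambda_parameters(arn):
        print(param)
```

When updating an existing stack this way, the remaining stack parameters generally also have to be supplied, for example by marking them with UsePreviousValue.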

When the QnABot CloudFormation stack status is CREATE_COMPLETE or UPDATE_COMPLETE:

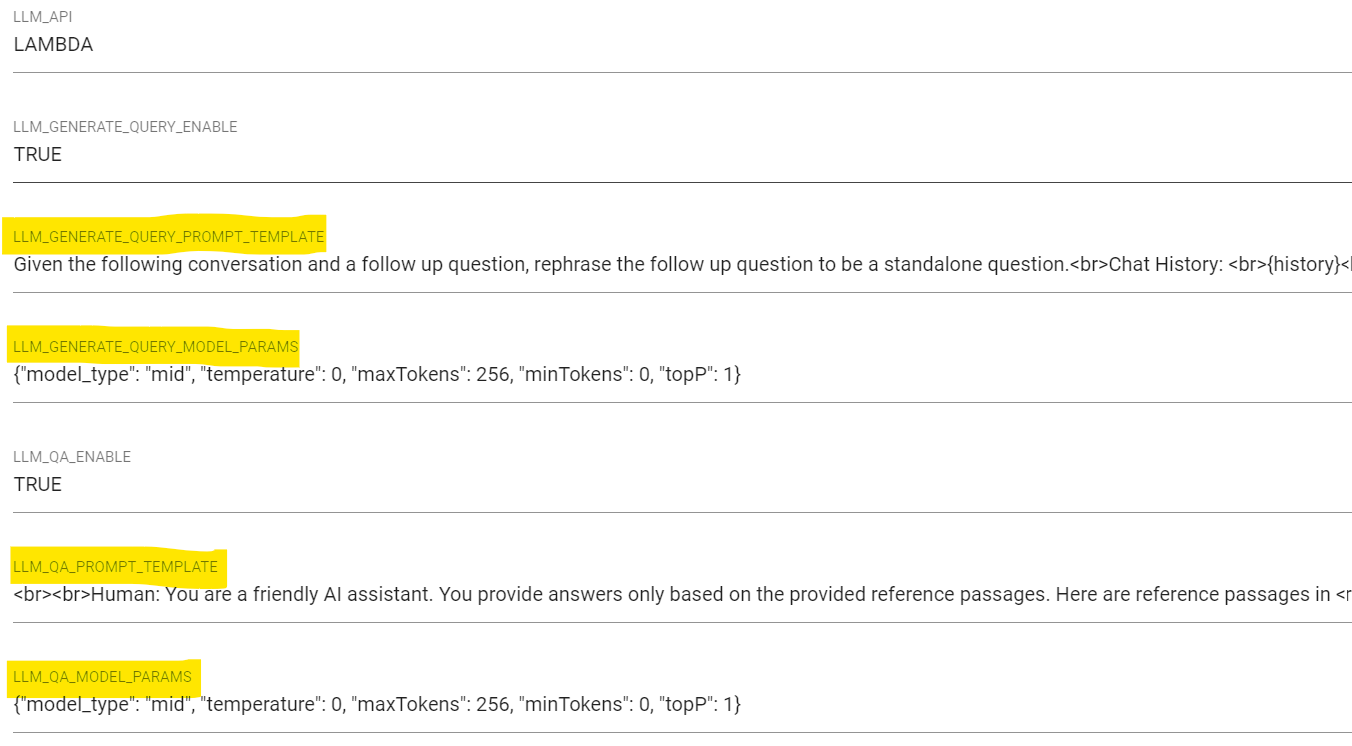

- Keep your QnABot plugins CloudFormation stack Outputs tab open

- In a new browser window, log into QnABot Content Designer (You can find the URL in the Outputs tab of your QnABot CloudFormation stack

ContentDesignerURL). You will need to set your password for the first login. - From the Content Designer tools (☰) menu, choose Settings

- From your QnABot plugins CloudFormation stack Outputs tab, copy setting values from each of the outputs named

QnABotSetting... - In a new browser window, access the QnABot Client URL (You can find the URL in the Outputs tab of your QnABot CloudFormation stack

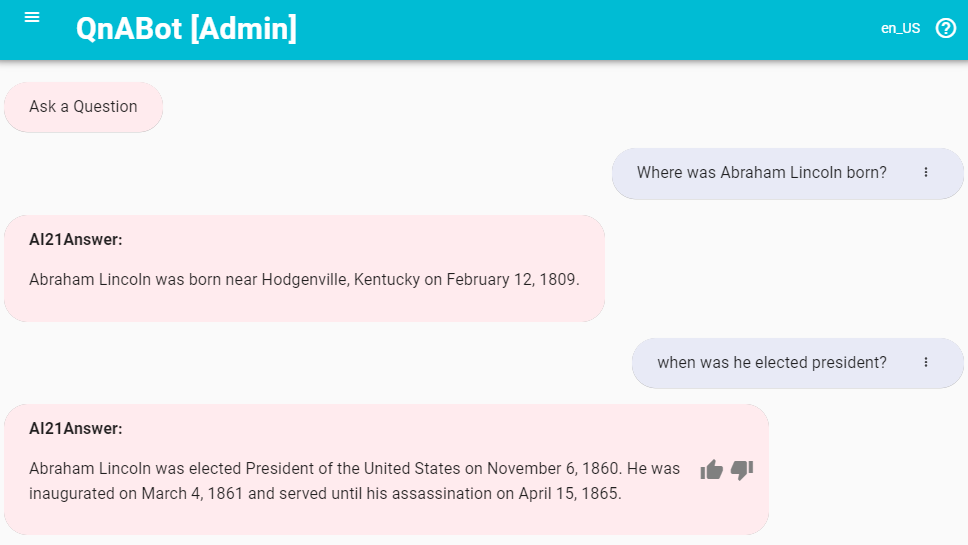

ClientURL), and start interacting with the QnA bot!

(Optional) Configure QnABot to use your new Embeddings function (currently only available for Bedrock)

When your CloudFormation stack status is CREATE_COMPLETE, choose the Outputs tab.

- Copy the values for EmbeddingsLambdaArn and EmbeddingsLambdaDimensions

- Deploy a new QnABot Stack (instructions) or Update an existing QnABot stack (instructions), selecting EmbeddingsApi as LAMBDA, and for EmbeddingsLambdaArn and EmbeddingsLambdaDimensions enter the Lambda Arn and embedding dimension values copied above.

For more information, see QnABot Embeddings README - Lambda Function

The default AWS region and endpoint URL are set based on the CloudFormation deployed region and the default third-party LLM provider/Bedrock endpoint URL. To override the endpoint URL:

- Once your CloudFormation stack status shows CREATE_COMPLETE, go to the Outputs tab and copy the Lambda Function Name [refer to green highlighted field above].

- In Lambda Functions, search for the Function Name.

- Go to the Configuration tab, edit Environment Variables, add ENDPOINT_URL to override the endpoint URL.
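To illustrate what the override does, here is a minimal sketch of how a Lambda function could prefer ENDPOINT_URL over a built-in default. Only the ENDPOINT_URL variable name comes from the steps above; the function name, the fallback logic, and the example URLs are assumptions and may differ from what the plugins actually do.

```python
import os
from typing import Mapping


def resolve_endpoint_url(default_url: str, env: Mapping[str, str] = os.environ) -> str:
    """Return the ENDPOINT_URL override if it is set and non-empty, else the default.

    Hypothetical helper: the real plugin code may resolve its endpoint differently.
    """
    override = env.get("ENDPOINT_URL", "").strip()
    return override or default_url


if __name__ == "__main__":
    # Placeholder URLs used purely for illustration.
    default = "https://default.example.com"
    print(resolve_endpoint_url(default, {}))
    print(resolve_endpoint_url(default, {"ENDPOINT_URL": "https://override.example.com"}))
```

Treating an empty value as "not set" means clearing the variable in the console restores the default endpoint in this sketch.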

When your CloudFormation stack status is CREATE_COMPLETE, choose the Outputs tab. Use the link for APIKeySecret to open AWS Secrets Manager to inspect or edit your API Key in Secret value.

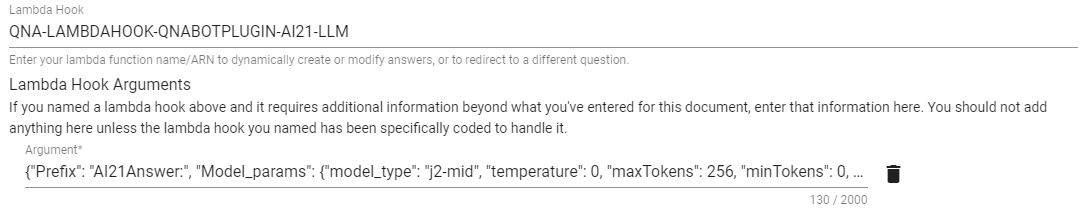

Optionally configure QnABot to prompt the LLM directly by configuring the LLM Plugin LambdaHook function QnAItemLambdaHookFunctionName as a Lambda Hook for the QnABot CustomNoMatches no_hits item. When QnABot cannot answer a question by any other means, it reverts to the no_hits item, which, when configured with this Lambda Hook function, will relay the question to the LLM.

When your Plugin CloudFormation stack status is CREATE_COMPLETE, choose the Outputs tab. Look for the outputs QnAItemLambdaHookFunctionName and QnAItemLambdaHookArgs. Use these values in the LambdaHook section of your no_hits item. You can change the value of "Prefix", or use "None" if you don't want to prefix the LLM answer.

The default behavior is to relay the user's query to the LLM as the prompt. If LLM_QUERY_GENERATION is enabled, the generated (disambiguated) query is used; otherwise the user's utterance is used. You can override this behavior by supplying an explicit "Prompt" key in the QnAItemLambdaHookArgs value. For example, setting QnAItemLambdaHookArgs to {"Prefix": "LLM Answer:", "Model_params": {"modelId": "anthropic.claude-instant-v1", "temperature": 0}, "Prompt":"Why is the sky blue?"} will ignore the user's input and simply use the configured prompt instead. Prompts supplied in this manner do not (yet) support variable substitution (e.g. to substitute user attributes, session attributes, etc. into the prompt). If you feel that would be a useful feature, please create a feature request issue in the repo, or, better yet, implement it, and submit a Pull Request!
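The prompt selection and prefix behavior described above can be sketched as follows. This is an illustration of the documented rules only, not the plugins' actual code: the function names are hypothetical, and both the treatment of a missing "Prefix" key as "no prefix" and the single space used to join prefix and answer are assumptions.

```python
import json
from typing import Optional


def select_prompt(hook_args_json: str, user_utterance: str,
                  generated_query: Optional[str] = None) -> str:
    """Pick the prompt to send to the LLM.

    An explicit "Prompt" key wins; otherwise the generated (disambiguated)
    query is used when available, falling back to the user's utterance.
    """
    args = json.loads(hook_args_json) if hook_args_json else {}
    if args.get("Prompt"):
        return args["Prompt"]
    return generated_query or user_utterance


def apply_prefix(hook_args_json: str, llm_answer: str) -> str:
    """Prepend the configured "Prefix" unless it is absent or set to "None".

    Joining with a single space is an assumption made for this sketch.
    """
    args = json.loads(hook_args_json) if hook_args_json else {}
    prefix = args.get("Prefix", "None")
    return llm_answer if prefix == "None" else f"{prefix} {llm_answer}"


if __name__ == "__main__":
    hook_args = ('{"Prefix": "LLM Answer:", "Model_params": {"modelId": '
                 '"anthropic.claude-instant-v1", "temperature": 0}, '
                 '"Prompt":"Why is the sky blue?"}')
    print(select_prompt(hook_args, "What is QnABot?"))
    print(apply_prefix(hook_args, "Sunlight is scattered by the atmosphere."))
```

With the example arguments, the configured prompt is used regardless of what the user typed, which matches the override behavior described above.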

Currently the Lambda hook option has been implemented only in the Bedrock, AI21, and (new!) AmazonQ (Business) plugins.

For more information on the Amazon Q plugin, see QnABot LambdaHook for Amazon Q, your business expert (preview).

See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.