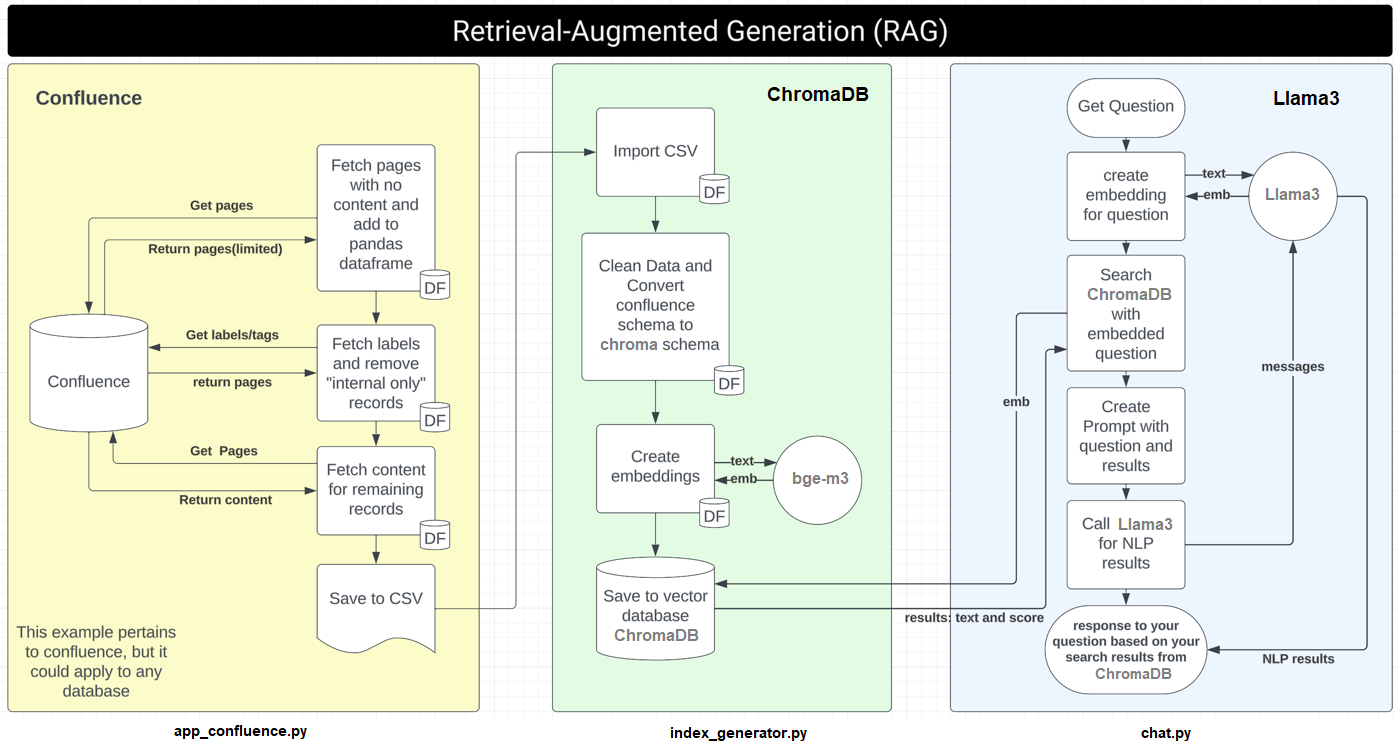

This RAG (retrieval-augmented generation) system exports content from your private Confluence to a CSV file, generates embeddings from it and stores them in ChromaDB, and then answers questions through a Streamlit app or a Slack bot, using Llama 3 to interpret the retrieved results.

RAG flow chart: see `rag_flowchart.png` in the repository for a visual representation of the system workflow.

- Python 3.8+
- Python packages listed in `requirements.txt`
- A `.env` file with the following variables:
  - `CONFLUENCE_DOMAIN`
  - `CONFLUENCE_TOKEN`
  - `CONFLUENCE_SPACE_KEY`
  - `CONFLUENCE_TEAM_KEY`
  - `SLACK_BOT_TOKEN`
  - `SLACK_APP_TOKEN`
  - `SLACK_SIGNING_SECRET`
  - `GROQ_API_KEY` (optional, for using Groq)
- For a fully local installation, Llama 3 (a good GPU is recommended)
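Before running anything, it can help to confirm that the `.env` file defines the variables listed above. The sketch below is a minimal, standard-library-only checker; it is a hypothetical helper, not part of the repository, and its parser only handles simple `KEY=value` lines (the `python-dotenv` package's `dotenv_values` covers more edge cases).

```python
from __future__ import annotations

from pathlib import Path

# Hypothetical helper, not part of the repository.
# Required variables from the list above; GROQ_API_KEY is optional.
REQUIRED = [
    "CONFLUENCE_DOMAIN",
    "CONFLUENCE_TOKEN",
    "CONFLUENCE_SPACE_KEY",
    "CONFLUENCE_TEAM_KEY",
    "SLACK_BOT_TOKEN",
    "SLACK_APP_TOKEN",
    "SLACK_SIGNING_SECRET",
]


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=value lines, skipping blanks, comments and malformed lines."""
    env: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        # Drop one pair of matching surrounding quotes, e.g. "x" or 'x'.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]
        env[key.strip()] = value
    return env


def missing_required(env: dict[str, str]) -> list[str]:
    """Return the required variables that are absent or empty."""
    return [name for name in REQUIRED if not env.get(name)]


if __name__ == "__main__":
    path = Path(".env")
    env = parse_env(path.read_text(encoding="utf-8")) if path.exists() else {}
    missing = missing_required(env)
    print("Missing:", ", ".join(missing) if missing else "none")
```

Run from the repository root, it prints the names of any required variables that are missing or still empty.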

Clone the repository:

```bash
git clone git@github.com:ikarius6/baymax-rag-system.git
```

Create your local environment:

```bash
python -m venv venv
source venv/bin/activate
```

Install the necessary packages:

```bash
pip install -r requirements.txt
```

Create a `.env` file in the root directory with the required environment variables:

```
# Confluence
CONFLUENCE_DOMAIN="https://yourconfluence.com"
CONFLUENCE_TOKEN=""
CONFLUENCE_SPACE_KEY="SPACE_KEY"
CONFLUENCE_TEAM_KEY="TEAM"

# Groq
GROQ_API_KEY=""

# Slack
SLACK_BOT_TOKEN=''
SLACK_APP_TOKEN=''
SLACK_SIGNING_SECRET=''
```

Install Ollama on your system (https://github.com/ollama/ollama); on Linux you can use the install script:

```bash
curl -fsSL https://ollama.com/install.sh | sh
```

Download Llama 3 and start the Ollama server:

```bash
ollama pull llama3
ollama serve
```

To use a remote version of Llama 3 instead, enable the Groq API by getting your own `GROQ_API_KEY`:

- Go to https://console.groq.com/keys
- Generate a new API key
- Add it to `GROQ_API_KEY` in your `.env`
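Both backends can be reached over HTTP. The sketch below shows one way a script might choose between them, assuming (hypothetically) that a non-empty `GROQ_API_KEY` means "use Groq" and that otherwise a local Ollama server is used. It targets Ollama's `/api/generate` endpoint on the default port 11434 and Groq's OpenAI-compatible chat completions endpoint; the Groq model name is an assumption, so check the Groq console for the names currently offered.

```python
from __future__ import annotations

import json
import os
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # default address of `ollama serve`
GROQ_URL = "https://api.groq.com/openai/v1/chat/completions"
GROQ_MODEL = "llama3-8b-8192"  # assumption: verify current model names in the Groq console


def build_llm_request(prompt: str, groq_api_key: str | None = None) -> urllib.request.Request:
    """Build a POST request for Groq if a key is given, otherwise for local Ollama."""
    if groq_api_key:
        url = GROQ_URL
        body = {"model": GROQ_MODEL, "messages": [{"role": "user", "content": prompt}]}
        headers = {"Authorization": f"Bearer {groq_api_key}", "Content-Type": "application/json"}
    else:
        url = OLLAMA_URL
        # stream=False asks Ollama for one JSON object instead of a stream of chunks.
        body = {"model": "llama3", "prompt": prompt, "stream": False}
        headers = {"Content-Type": "application/json"}
    data = json.dumps(body).encode("utf-8")
    return urllib.request.Request(url, data=data, headers=headers, method="POST")


def extract_answer(payload: dict, used_groq: bool) -> str:
    """Pull the generated text out of either API's JSON response."""
    if used_groq:
        return payload["choices"][0]["message"]["content"]
    return payload["response"]


def ask(prompt: str) -> str:
    """Send the prompt to whichever backend is configured and return the answer text."""
    key = os.environ.get("GROQ_API_KEY") or None
    req = build_llm_request(prompt, key)
    with urllib.request.urlopen(req, timeout=120) as resp:
        return extract_answer(json.loads(resp.read()), used_groq=bool(key))
```

Keeping request construction separate from the network call makes it easy to inspect what would be sent without a running server or a valid key.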

To get your own `CONFLUENCE_TOKEN`:

- Go to https://yourconfluence.com/plugins/personalaccesstokens/usertokens.action (replace `yourconfluence.com` with your Confluence domain)
- Generate a new token
- Add it to `CONFLUENCE_TOKEN` in your `.env`

Import `slack_manifest.yml` into your Slack app, then copy the app's access tokens into your `.env` file:

- `SLACK_SIGNING_SECRET`: Basic Information > App Credentials > Signing Secret
- `SLACK_APP_TOKEN`: Basic Information > App-Level Tokens > Generate Token
- `SLACK_BOT_TOKEN`: OAuth & Permissions > OAuth Tokens > Bot User OAuth Token
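A common setup mistake is pasting a token into the wrong variable. Slack bot tokens start with `xoxb-` and app-level tokens start with `xapp-`, so a quick prefix check catches swapped values. This is a hypothetical helper, not part of the repository; it takes a plain dictionary, such as `dict(os.environ)` after the `.env` file has been loaded.

```python
from __future__ import annotations

# Hypothetical helper, not part of the repository.
# Slack token prefixes: bot tokens start with "xoxb-", app-level tokens with "xapp-".
EXPECTED_PREFIXES = {
    "SLACK_BOT_TOKEN": "xoxb-",
    "SLACK_APP_TOKEN": "xapp-",
}


def check_slack_tokens(env: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means the values look plausible."""
    problems = []
    for name, prefix in EXPECTED_PREFIXES.items():
        value = env.get(name, "")
        if not value:
            problems.append(f"{name} is empty")
        elif not value.startswith(prefix):
            problems.append(f"{name} should start with {prefix!r}")
    # The signing secret has no fixed prefix, so only check that it is set.
    if not env.get("SLACK_SIGNING_SECRET"):
        problems.append("SLACK_SIGNING_SECRET is empty")
    return problems
```

A passing check only means the values have the right shape; Slack itself still decides whether they are valid.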

Make sure you have a `cookie.txt` file containing your Confluence session cookie to avoid SSO issues. You can copy the cookie from any request the Confluence page makes, for example in your browser's developer tools.
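As a sketch of how the token and the cookie might be combined on each Confluence request: Confluence Server and Data Center accept personal access tokens in an `Authorization: Bearer` header, and the session cookie can be sent alongside it in a `Cookie` header. The function names, and the assumption that `cookie.txt` holds the raw `Cookie` header value, are illustrative rather than taken from `app_confluence.py`.

```python
from __future__ import annotations

from pathlib import Path


def read_cookie(path: str = "cookie.txt") -> str | None:
    """Return the cookie string stored in `path`, or None if the file is missing or empty."""
    p = Path(path)
    if not p.is_file():
        return None
    # Assumption: the file holds the raw Cookie header value, e.g. "JSESSIONID=...; other=...".
    value = p.read_text(encoding="utf-8").strip()
    return value or None


def confluence_headers(token: str, cookie: str | None = None) -> dict[str, str]:
    """Build headers for Confluence REST calls (hypothetical helper)."""
    headers = {
        # Confluence Server/Data Center personal access tokens use the Bearer scheme.
        "Authorization": f"Bearer {token}",
        "Accept": "application/json",
    }
    if cookie:
        # Assumption: sending the browser session cookie too is what avoids SSO redirects.
        headers["Cookie"] = cookie
    return headers
```

For example: `headers = confluence_headers(os.environ["CONFLUENCE_TOKEN"], read_cookie())`.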

Run `app_confluence.py` to fetch data from Confluence and save it as a CSV file. This can take a few minutes:

```bash
python app_confluence.py
```

This creates a `data/kb.csv` file with all the data needed for the next step.
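The export step can be pictured as paging through the Confluence REST API and flattening each page to plain text. The sketch below assumes the Server/Data Center `GET /rest/api/content` endpoint with `spaceKey`, `expand=body.storage`, `start` and `limit` parameters; the `id,title,text` CSV columns and all function names are hypothetical, since this README does not describe the actual layout of `data/kb.csv`. The HTTP call is injectable so the paging logic can be exercised without a live server.

```python
from __future__ import annotations

import csv
import json
import urllib.parse
import urllib.request
from html.parser import HTMLParser
from pathlib import Path
from typing import Callable, Iterable, Iterator


class _TextExtractor(HTMLParser):
    """Collects the text nodes of Confluence storage-format (XHTML) markup."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []

    def handle_data(self, data: str) -> None:
        if data.strip():
            self.parts.append(data.strip())


def html_to_text(html: str) -> str:
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def http_get_json(url: str, headers: dict[str, str]) -> dict:
    req = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read())


def iter_pages(
    domain: str,
    space_key: str,
    headers: dict[str, str],
    get_json: Callable[[str, dict], dict] = http_get_json,
    limit: int = 50,
) -> Iterator[dict]:
    """Yield page objects from one space until the API returns an empty batch."""
    start = 0
    while True:
        query = urllib.parse.urlencode({
            "spaceKey": space_key,
            "type": "page",
            "expand": "body.storage",
            "start": start,
            "limit": limit,
        })
        results = get_json(f"{domain}/rest/api/content?{query}", headers).get("results", [])
        if not results:
            break
        yield from results
        # Advance by what was actually returned, since the server may cap `limit`.
        start += len(results)


def write_kb_csv(pages: Iterable[dict], path: str = "data/kb.csv") -> int:
    """Write one id/title/text row per page (hypothetical schema); return the row count."""
    Path(path).parent.mkdir(parents=True, exist_ok=True)
    count = 0
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["id", "title", "text"])
        for page in pages:
            html = page.get("body", {}).get("storage", {}).get("value", "")
            writer.writerow([page["id"], page["title"], html_to_text(html)])
            count += 1
    return count
```

For example, `write_kb_csv(iter_pages(domain, space_key, headers))`, with `headers` carrying the token and session cookie.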

Run `index_generator.py` to generate embeddings and save them in ChromaDB. This step downloads an embedding model from Hugging Face, so it may take several minutes:

```bash
python index_generator.py
```

Once your vector database is populated, you can use the chatbot through Streamlit or as a Slack app.
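One detail of indexing worth illustrating is chunking: long pages are usually split into overlapping pieces before embedding, so that each vector covers a focused passage. Whether `index_generator.py` chunks this way is not stated here; the sketch below is a generic word-window chunker that assumes `kb.csv` has `id`, `title` and `text` columns (hypothetical). Each yielded `(id, text, metadata)` tuple maps onto the `ids`, `documents` and `metadatas` arguments of a ChromaDB collection's `add` method.

```python
from __future__ import annotations

import csv
from typing import Iterator


def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        # Stop once a window reaches the end, so no chunk is a pure subset of the last one.
        if start + size >= len(words):
            break
    return chunks


def iter_chunks(csv_path: str = "data/kb.csv") -> Iterator[tuple[str, str, dict]]:
    """Yield (chunk_id, chunk_text, metadata) for every chunk of every CSV row."""
    with open(csv_path, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            for i, chunk in enumerate(chunk_words(row["text"])):
                yield f"{row['id']}-{i}", chunk, {"title": row["title"]}
```

A small overlap keeps a sentence that straddles a boundary retrievable from either neighboring chunk, at the cost of some duplicated storage.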

Run `streamlit.py` to start the Streamlit application:

```bash
streamlit run streamlit.py
```

To use the Slack bot, first set up your application in Slack and get the necessary tokens by following the Slack setup steps above.

Run `slack.py` to start the Slack bot:

```bash
python slack.py
```

The repository contains the following components:

- `app_confluence.py`: code to fetch data from Confluence and save it as a CSV file.
- `index_generator.py`: code to generate embeddings and save them in ChromaDB.
- Code for the query logic using the stored embeddings.
- `streamlit.py`: code for the Streamlit user interface.
- `slack.py`: code for the Slack integration.
- Helper methods to simplify common operations.
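The query logic follows the usual RAG pattern: embed the question, retrieve the most similar stored chunks, and pass them to Llama 3 as context. The sketch below shows that flow end to end with a toy bag-of-words embedding and in-memory cosine similarity standing in for the real embedding model and ChromaDB query; the prompt wording and function names are hypothetical.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for the real embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the ids of the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(docs, key=lambda doc_id: cosine(q, embed(docs[doc_id])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, docs: dict[str, str], k: int = 2) -> str:
    """Assemble a prompt that asks the model to answer only from retrieved context."""
    context = "\n\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in retrieve(question, docs, k))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )
```

In the real system, `retrieve` would presumably correspond to a ChromaDB similarity query over the stored embeddings, and the string from `build_prompt` would be sent to Llama 3 through Ollama or Groq.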