Hyundo Lee*, Inwoo Hwang, Hyunsung Go, Won-Seok Choi, Kibeom Kim, and Byoung-Tak Zhang

(AI Institute, Seoul National University)

This repository contains the PyTorch code for reproducing our paper "Learning Geometry-aware Representations by Sketching".

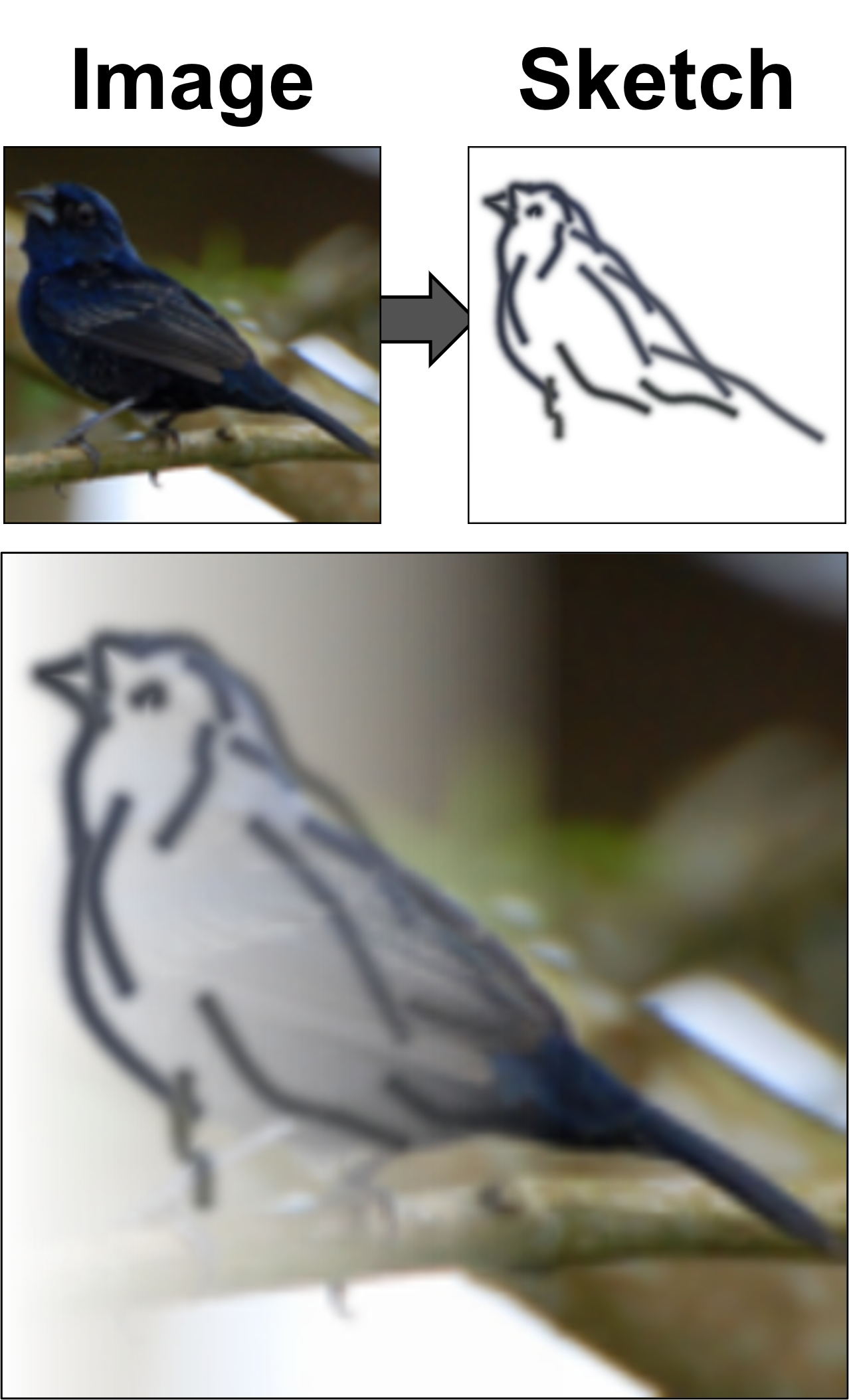

At a high level, our model learns to abstract an image into a stroke-based color sketch that accurately reflects the geometric information (e.g., position, shape, size). Our sketch consists of a set of strokes represented by a parameterized vector that specifies their curvature, color, and thickness. We use these parameterized vectors directly as a compact representation of an image.
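To make the idea of a stroke as a parameter vector concrete, here is a minimal sketch. The `Stroke` class, its field names, and the choice of a cubic Bezier curve with four control points are illustrative assumptions, not the repository's actual data format.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Stroke:
    """One stroke (hypothetical layout, not the repository's actual format)."""
    control_points: List[Point]        # assumed: 4 control points of a cubic Bezier
    color: Tuple[float, float, float]  # RGB values in [0, 1]
    thickness: float                   # stroke width

    def to_vector(self) -> List[float]:
        """Flatten the stroke into a single parameter vector."""
        flat = [coord for point in self.control_points for coord in point]
        return flat + list(self.color) + [self.thickness]

    def point_at(self, t: float) -> Point:
        """Evaluate the cubic Bezier curve at t in [0, 1]."""
        weights = ((1 - t) ** 3, 3 * (1 - t) ** 2 * t, 3 * (1 - t) * t ** 2, t ** 3)
        x = sum(w * p[0] for w, p in zip(weights, self.control_points))
        y = sum(w * p[1] for w, p in zip(weights, self.control_points))
        return (x, y)


def sketch_to_representation(strokes: List[Stroke]) -> List[float]:
    """Concatenate all stroke vectors into one flat image representation."""
    return [value for stroke in strokes for value in stroke.to_vector()]


stroke = Stroke([(0.0, 0.0), (0.0, 1.0), (1.0, 1.0), (1.0, 0.0)], (1.0, 0.0, 0.0), 0.05)
print(len(stroke.to_vector()))  # 8 coordinates + 3 color values + 1 thickness = 12
```

Under these assumptions, a sketch with N strokes becomes a vector of 12 * N numbers, and the curvature of each stroke is carried entirely by its control points.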

An overview of LBS (Learning by Sketching), which includes a CNN-based encoder, a Transformer-based Stroke Generator, a Stroke Embedding Network, and a Differentiable Rasterizer.

For training, we use a CLIP-based perceptual loss and guidance strokes from optimization-based generation (CLIPasso).

You can optionally train with an additional loss function specified by the --embed_loss argument (choices=['ce', 'simclr', 'supcon']).
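One plausible reading of how these terms combine, assuming the overall objective is a plain weighted sum. The symbols $\lambda_g, \lambda_p, \lambda_e$ are notation introduced here for the values passed through --lbd_g, --lbd_p, and --lbd_e; the exact form used in the code may differ.

$$
\mathcal{L} = \lambda_g \mathcal{L}_{guide} + \lambda_p \mathcal{L}_{percept} + \lambda_e \mathcal{L}_{embed}
$$

Under this reading, training without an additional embedding loss would correspond to dropping the last term.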

Currently, the following environment has been confirmed to run the code:

- python >= 3.7

- pytorch >= 1.10.0
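A quick way to check an existing environment against these minimums is sketched below. The helper names `version_tuple` and `meets_minimum` are hypothetical; the point is that version strings such as `1.10.0+cu111` should be compared numerically, since a plain string comparison would rank `1.9` above `1.10`.

```python
import re
import sys


def version_tuple(version: str) -> tuple:
    """Turn '1.10.0+cu111' into (1, 10, 0), dropping the local build suffix."""
    core = version.split("+", 1)[0]
    numbers = []
    for part in core.split("."):
        match = re.match(r"\d+", part)  # keep leading digits only ('0rc1' -> 0)
        if match is None:
            break
        numbers.append(int(match.group()))
    return tuple(numbers)


def meets_minimum(installed: str, minimum: str) -> bool:
    """Numerically compare two version strings, padding the shorter with zeros."""
    a, b = version_tuple(installed), version_tuple(minimum)
    width = max(len(a), len(b))
    a = a + (0,) * (width - len(a))
    b = b + (0,) * (width - len(b))
    return a >= b


print("python ok:", sys.version_info >= (3, 7))
try:
    import torch
    print("pytorch ok:", meets_minimum(torch.__version__, "1.10.0"))
except ImportError:
    print("pytorch is not installed")
```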

# Clone the repo:

git clone https://github.com/illhyhl1111/LearningBySketching.git LBS

cd LBS

# Install dependencies:

pip install -r requirements.txt

## If pytorch not installed:

pip install torch==1.10.0+cu111 torchvision==0.11.0+cu111 -f https://download.pytorch.org/whl/torch_stable.html

# TODO

git clone https://github.com/BachiLi/diffvg

cd diffvg

git submodule update --init --recursive

python setup.py install

By default, the code assumes that all the datasets are located under ./data/. You can change this path by specifying --data_root.

- STL-10, Rotated MNIST: will be automatically downloaded.

- CLEVR dataset: download CLEVR_v1.0.zip.
  - extract the contents (images, scenes folder) under --data_root/clevr.

- Geoclidean dataset: download our realized samples of Geoclidean dataset based on Geoclidean repo.
  - extract the contents (constraints, elements folder) under --data_root/geoclidean.

The structure should be as follows:

--data_root
├── clevr
│   ├── questions
│   ├── scenes
│   ├── images
│   │   ├── val
│   │   ├── train
│   │   │   ├── CLEVR_train_xxxxxx.png
│   │   │   └── ...
│   │   └── test
│   └── README.txt
└── geoclidean
    ├── constraints
    └── elements
        ├── train
        │   ├── triangle
        │   │   ├── in_xxx_fin.png
        │   │   └── ...
        │   └── ...
        └── test
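Before starting a long run, it can help to confirm that the extracted datasets match this layout. The following is a small sketch: the function name `missing_dirs` is hypothetical, the directory list is a selection copied from the tree, and `./data` is the default data root mentioned earlier.

```python
from pathlib import Path
from typing import List

# Sub-directories taken from the tree, relative to the data root.
EXPECTED_DIRS = [
    "clevr/scenes",
    "clevr/images/train",
    "clevr/images/val",
    "clevr/images/test",
    "geoclidean/constraints",
    "geoclidean/elements/train",
    "geoclidean/elements/test",
]


def missing_dirs(data_root: str) -> List[str]:
    """Return the expected sub-directories that do not exist under data_root."""
    root = Path(data_root)
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]


# Report anything missing under the default data root.
for name in missing_dirs("./data"):
    print("missing:", name)
```

If you only use some of the datasets, trim `EXPECTED_DIRS` accordingly; STL-10 and Rotated MNIST are downloaded automatically and are not checked here.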

To train our model with the CLEVR and STL-10 datasets, you must first obtain guidance strokes, in one of two ways:

- Downloading pre-generated strokes from:
  - put the downloaded files into gt_sketches/

- Generating the guidance strokes with:
  - install pydiffvg

sudo apt install python3-dev cmake

git clone https://github.com/BachiLi/diffvg

cd diffvg

git submodule update --init --recursive

python setup.py install

cd ../

- STL10 (train+unlabeled)

python generate_data.py --config_path config/stl10.yaml --output_dir ./gt_sketches/stl10_train+unlabeled/ --dataset stl10_train+unlabeled --data_root /your/path/to/dir --visualize --device cuda

python merge_data.py --output_file ./gt_sketches/path_stl10.pkl --data_files ./gt_sketches/stl10_train+unlabeled/data_* --maskarea_files ./gt_sketches/stl10_train+unlabeled/maskareas_*

- CLEVR (train)

python generate_data.py --config_path config/clevr.yaml --output_dir ./gt_sketches/clevr_train --dataset clevr_train --data_root /your/path/to/dir --num_generation 10000 --visualize --device cuda

python merge_data.py --output_file ./gt_sketches/path_clevr.pkl --data_files ./gt_sketches/clevr_train/data_* --maskarea_files ./gt_sketches/clevr_train/maskareas_*

The execution of generate_data.py can be split into multiple chunks with the --chunk (num_chunk) (chunk_idx) option:

python generate_data.py ... --chunk 2 0

python generate_data.py ... --chunk 2 1
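The sketch below illustrates one way a dataset could be divided so that each (num_chunk, chunk_idx) pair covers a disjoint slice. How generate_data.py actually partitions the data is not stated here, so the contiguous split and the function name `chunk_indices` are assumptions for illustration only.

```python
from typing import List


def chunk_indices(num_items: int, num_chunk: int, chunk_idx: int) -> List[int]:
    """Indices handled by chunk `chunk_idx` out of `num_chunk` contiguous chunks."""
    if not 0 <= chunk_idx < num_chunk:
        raise ValueError("chunk_idx must satisfy 0 <= chunk_idx < num_chunk")
    base, extra = divmod(num_items, num_chunk)
    # The first `extra` chunks take one additional item each.
    start = chunk_idx * base + min(chunk_idx, extra)
    stop = start + base + (1 if chunk_idx < extra else 0)
    return list(range(start, stop))


print(chunk_indices(10, 2, 0))  # [0, 1, 2, 3, 4]
print(chunk_indices(10, 2, 1))  # [5, 6, 7, 8, 9]
```

Whatever the real partitioning is, every chunk_idx from 0 to num_chunk - 1 needs to be run before merging the results with merge_data.py.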

# Rotated MNIST

python main.py --data_root /your/path/to/dir --config_path config/rotmnist.yaml

# Geoclidean-Elements

python main.py --data_root /your/path/to/dir --config_path config/geoclidean_elements.yaml

# Geoclidean-Constraints

python main.py --data_root /your/path/to/dir --config_path config/geoclidean_constraints.yaml

# CLEVR

python main.py --data_root /your/path/to/dir --config_path config/clevr.yaml

# STL-10

python main.py --data_root /your/path/to/dir --config_path config/stl10.yaml

Note: More than 30 GB of GPU memory is required to run the default configurations for CLEVR and STL-10. Multi-GPU training with DDP is not currently supported.

If you run out of memory, we recommend changing --clip_model_name to RN50, reducing --num_aug_clip (which lowers the memory used by the CLIP model), or reducing the batch size. Any of these changes may degrade performance.

Optional arguments:

- --embed_loss: type of $\mathcal{L}_{embed}$, choices: ['none', 'ce', 'simclr', 'supcon']

- --lbd_g, --lbd_p, --lbd_e: weights of the losses $\mathcal{L}_{guide}, \mathcal{L}_{percept}, \mathcal{L}_{embed}$, respectively

- --num_strokes: number of total strokes
  - Changing the number of strokes requires the generation of a corresponding number of guidance strokes.

- --num_background: number of strokes assigned to background

- --enable_color: enables learning color-coded strokes (generates black strokes if disabled)
  - --disable_color: sets enable_color to False

- --rep_type: the form of the final representation. Options:
  - LBS+: $(z_e, z_p, z_h)$
  - LBS: $(z_e, z_p)$
  - combinations of 'e', 'p', 'h': vector concatenating $z_e, z_p, z_h$, respectively. e.g., 'ph' -> $(z_p, z_h)$
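The --rep_type options can be read as a recipe for concatenating the component vectors. The sketch below mirrors that reading; the function `build_representation`, the `PRESETS` table, and the toy vectors are hypothetical and do not reflect how the repository implements the option.

```python
from typing import Dict, List, Sequence

# Named presets from the option list; any other value is read letter by letter.
PRESETS = {"LBS+": "eph", "LBS": "ep"}


def build_representation(rep_type: str, parts: Dict[str, Sequence[float]]) -> List[float]:
    """Concatenate the components selected by rep_type, in the order given."""
    keys = PRESETS.get(rep_type, rep_type)
    unknown = [key for key in keys if key not in parts]
    if unknown:
        raise ValueError(f"unknown representation component(s): {unknown}")
    representation: List[float] = []
    for key in keys:
        representation.extend(parts[key])
    return representation


# Toy stand-ins for z_e, z_p, z_h.
parts = {"e": [0.1, 0.2], "p": [1.0, 2.0, 3.0], "h": [9.0]}
print(build_representation("LBS", parts))  # [0.1, 0.2, 1.0, 2.0, 3.0]
print(build_representation("ph", parts))   # [1.0, 2.0, 3.0, 9.0]
```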

python evaluate.py logs/{dataset}/{target_folder}

If you make use of our work, please cite our paper:

@inproceedings{lee2023learning,

title={Learning Geometry-aware Representations by Sketching},

author={Lee, Hyundo and Hwang, Inwoo and Go, Hyunsung and Choi, Won-Seok and Kim, Kibeom and Zhang, Byoung-Tak},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={23315--23326},

year={2023}

}