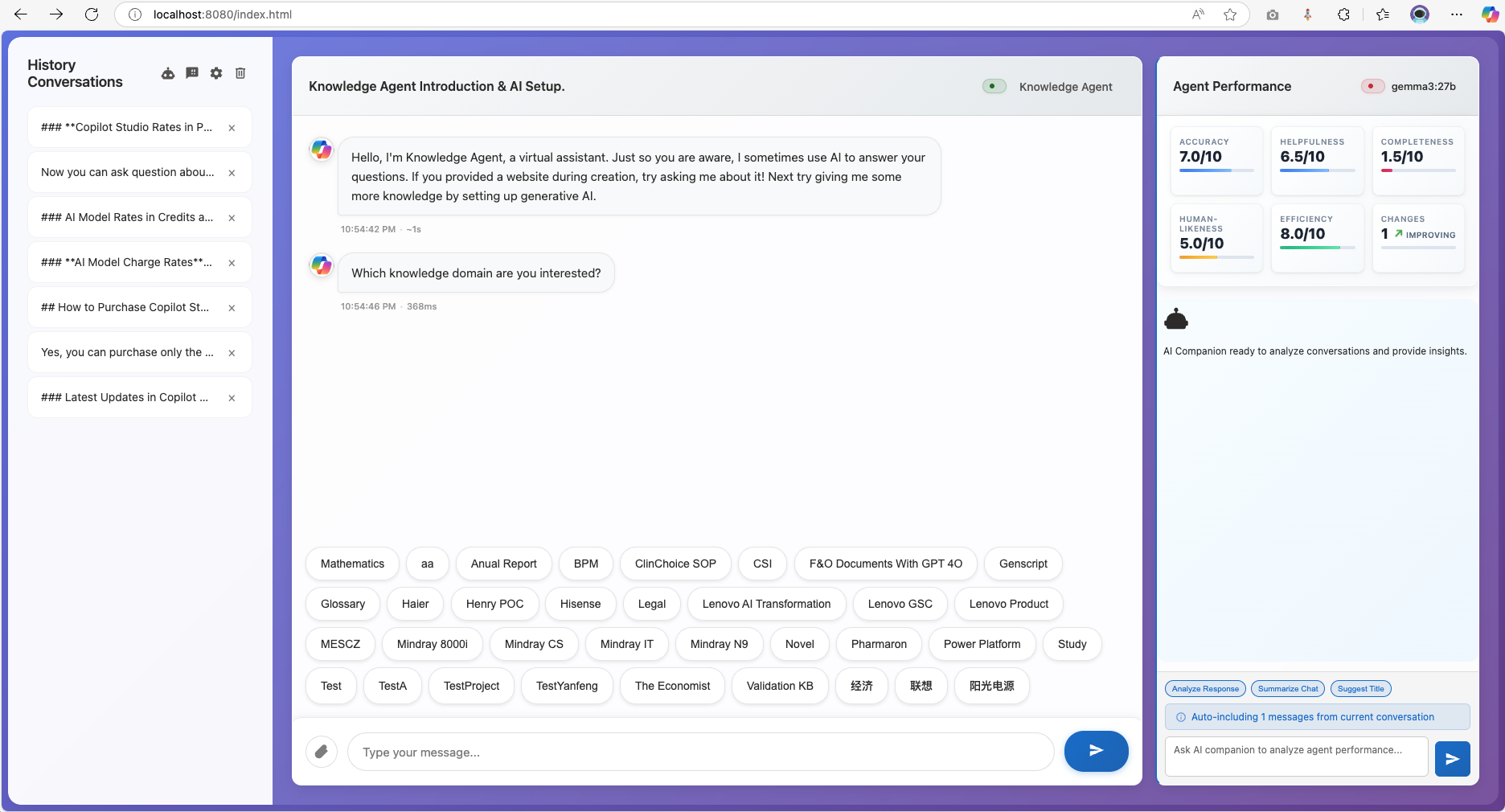

A sophisticated chatbot user interface that demonstrates how to create a customized chat experience with multiple AI backends, featuring Microsoft Copilot Studio integration via DirectLine API, AI companion analysis, and local Ollama model support with real-time streaming capabilities.

Ready to get started? Follow our quick setup guide:

- Get a DirectLine secret from Microsoft Copilot Studio

- Launch `index.html` in your browser

- Configure your agent in the settings panel

- Start chatting with your AI assistant
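The DirectLine secret from the first step is a long-lived credential. The Direct Line 3.0 REST API can exchange it for a short-lived token: you POST to `/v3/directline/tokens/generate` and pass the secret as a bearer token. The sketch below shows that exchange. `buildTokenRequest` and `generateToken` are hypothetical helpers, not functions from the MCSChat source, and this README does not say whether MCSChat performs this exchange.

```javascript
// Sketch: exchange a DirectLine secret for a short-lived token (Direct Line 3.0 REST API).
// Assumes Node.js 18+ (global fetch) or a browser; the helper names are hypothetical.
const DIRECTLINE_BASE = "https://directline.botframework.com/v3/directline";

// Build the request without sending it, so it can be inspected or tested offline.
function buildTokenRequest(secret) {
  if (typeof secret !== "string" || secret.trim() === "") {
    throw new Error("A DirectLine secret is required");
  }
  return {
    url: `${DIRECTLINE_BASE}/tokens/generate`,
    init: {
      method: "POST",
      headers: { Authorization: `Bearer ${secret.trim()}` },
    },
  };
}

// Send the request and return the JSON payload ({ conversationId, token, expires_in }).
async function generateToken(secret) {
  const { url, init } = buildTokenRequest(secret);
  const response = await fetch(url, init);
  if (!response.ok) {
    throw new Error(`Token request failed with HTTP ${response.status}`);
  }
  return response.json();
}
```

Request construction is kept separate from `fetch`, so the secret handling can be tested without network access.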

- Quick Start Guide - Get up and running in 5 minutes

- Installation - Development environment setup

- Configuration - Detailed configuration options

- AI Companion Setup - Enable AI-powered analysis

- System Architecture - High-level system design

- Module Structure - Code organization and components

- API Integration - Backend integrations guide

- Security - Encryption and security features

- Core Features - Multi-agent management, streaming, file uploads

- Mobile Responsive - Touch-optimized mobile interface and navigation

- AI Companion - Performance analytics and insights

- User Interface - Customization and appearance options

- Advanced Features - Professional mode, citations, and more

- Development Setup - Local development environment

- Production Deployment - Hosting and scaling options

- Docker Deployment - Containerized deployment

- Security Checklist - Production security guide

- Contributing Guide - How to contribute to the project

- Migration Guides - Legacy system migration documentation

- Performance Optimization - Performance tuning and optimization

- Development Utilities - Debugging tools, test files, and maintenance scripts

- DirectLine Issues - Complete DirectLine connection troubleshooting

- Connection Problems - Connection debugging and fixes

- Performance Issues - Retry loops and stability problems

- Mobile Issues - Mobile platform-specific problems

- All Troubleshooting - Complete troubleshooting index

- Configure multiple chatbot agents with individual settings

- Real-time connection monitoring and status indicators

- Secure credential storage with AES-256 encryption
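Below is a minimal sketch of per-agent configuration with an active-agent switch and a per-agent connection status. `AgentRegistry`, its fields, and the status strings are hypothetical. The real `AgentManager` (`src/managers/agentManager.js`) also stores credentials encrypted, which this in-memory sketch leaves out.

```javascript
// Hypothetical sketch of multi-agent configuration: each agent keeps its own settings,
// and exactly one agent is active at a time. Names and fields are illustrative only.
class AgentRegistry {
  constructor() {
    this.agents = new Map(); // id -> { id, name, secret, streaming, status }
    this.activeId = null;
  }

  addAgent({ id, name, secret, streaming = true }) {
    if (this.agents.has(id)) throw new Error(`Agent "${id}" already exists`);
    this.agents.set(id, { id, name, secret, streaming, status: "disconnected" });
    if (this.activeId === null) this.activeId = id; // the first agent becomes active
    return this.agents.get(id);
  }

  switchTo(id) {
    if (!this.agents.has(id)) throw new Error(`Unknown agent "${id}"`);
    this.activeId = id;
    return this.agents.get(id);
  }

  // Record connection state for a status indicator, e.g. "connecting", "online", "error".
  setStatus(id, status) {
    const agent = this.agents.get(id);
    if (agent) agent.status = status;
  }

  get active() {
    return this.activeId === null ? null : this.agents.get(this.activeId);
  }
}
```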

- Real-time conversation analysis with performance metrics

- Support for OpenAI GPT, Anthropic Claude, Azure OpenAI, and local Ollama

- Interactive KPI tracking (Accuracy, Helpfulness, Completeness)
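One way to turn per-response KPI scores into a conversation-level summary is to average each metric separately. The sketch assumes a 0-10 scale and a `{ accuracy, helpfulness, completeness }` score object. Both are illustrative assumptions, not MCSChat's actual data format.

```javascript
// Hypothetical sketch: average per-response KPI scores across a conversation.
// The 0-10 scale and the score object shape are assumptions.
const KPI_NAMES = ["accuracy", "helpfulness", "completeness"];

function summarizeKpis(scoredResponses) {
  const totals = Object.fromEntries(KPI_NAMES.map((k) => [k, 0]));
  const counts = Object.fromEntries(KPI_NAMES.map((k) => [k, 0]));

  for (const scores of scoredResponses) {
    for (const kpi of KPI_NAMES) {
      const value = scores[kpi];
      // Skip missing or out-of-range values instead of letting them skew the average.
      if (typeof value === "number" && value >= 0 && value <= 10) {
        totals[kpi] += value;
        counts[kpi] += 1;
      }
    }
  }

  // Average per KPI, or null when no valid score was recorded for it.
  return Object.fromEntries(
    KPI_NAMES.map((k) => [k, counts[k] ? totals[k] / counts[k] : null])
  );
}
```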

- Streaming response display with typing indicators

- Adaptive card rendering for rich bot responses

- File upload support with drag-and-drop functionality

- Professional full-width mode for document-like interface
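Some bots return a complete reply at once. For those, streaming can be simulated by splitting the reply into small chunks and rendering the growing text step by step. The sketch assumes word-based chunks, and `streamChunks` and `renderProgressively` are hypothetical names. A real UI would insert a short delay, and show a typing indicator, between updates.

```javascript
// Hypothetical sketch of simulated streaming: split a complete reply into word-based
// chunks so the UI can render it progressively. The chunk size is an assumption.
function* streamChunks(text, wordsPerChunk = 3) {
  // Keep surrounding whitespace attached to each word so joining the chunks
  // reproduces the original text exactly.
  const tokens = text.match(/\s*\S+\s*/g) || [];
  for (let i = 0; i < tokens.length; i += wordsPerChunk) {
    yield tokens.slice(i, i + wordsPerChunk).join("");
  }
}

// Call `onUpdate` with the text "received" so far after each chunk.
// Delays between chunks are omitted to keep the sketch synchronous.
function renderProgressively(text, onUpdate, wordsPerChunk) {
  let shown = "";
  for (const chunk of streamChunks(text, wordsPerChunk)) {
    shown += chunk;
    onUpdate(shown);
  }
  return shown;
}
```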

- Mobile-responsive design with touch-optimized interface

- Collapsible sidebar with swipe gestures for mobile navigation

- Mobile AI companion access via floating action button
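Swipe navigation usually comes down to comparing where and when a touch started and ended. This sketch classifies a gesture as a left or right swipe. The 50px and 500ms thresholds and the `{ x, y, time }` point shape are assumptions.

```javascript
// Hypothetical sketch: classify a touch gesture as a horizontal swipe, e.g. to open or
// close a collapsible sidebar. Distance and time thresholds are assumptions.
function detectSwipe(start, end, { minDistance = 50, maxDuration = 500 } = {}) {
  const dx = end.x - start.x;
  const dy = end.y - start.y;
  const duration = end.time - start.time;

  // Ignore slow drags, short movements, and mostly vertical (scrolling) gestures.
  if (duration > maxDuration) return null;
  if (Math.abs(dx) < minDistance) return null;
  if (Math.abs(dx) <= Math.abs(dy)) return null;

  return dx > 0 ? "right" : "left";
}
```

In a browser, `start` would come from a `touchstart` event (`event.touches[0].clientX`/`clientY` and `event.timeStamp`). `end` would come from `event.changedTouches[0]` in the matching `touchend` event.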

- Client-side AES-256-GCM encryption for sensitive data

- Secure key derivation and management

- CORS-compliant local model access
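The encryption and key-derivation items can be illustrated with AES-256-GCM and a PBKDF2-derived key. This sketch uses Node's built-in `crypto` module so it runs outside the browser. The in-browser `src/utils/encryption.js` would more likely use Web Crypto (`crypto.subtle`). The iteration count, salt size, and JSON envelope here are assumptions rather than MCSChat's actual parameters.

```javascript
// Sketch of AES-256-GCM encryption with a PBKDF2-derived key (Node.js built-in crypto).
// Assumptions: 100,000 iterations, a 16-byte salt, and a JSON envelope format.
const crypto = require("node:crypto");

const ITERATIONS = 100000; // PBKDF2 work factor (assumed)
const KEY_BYTES = 32;      // 256-bit key for AES-256
const IV_BYTES = 12;       // 96-bit nonce, the recommended size for GCM

function deriveKey(passphrase, salt) {
  return crypto.pbkdf2Sync(passphrase, salt, ITERATIONS, KEY_BYTES, "sha256");
}

function encrypt(plaintext, passphrase) {
  const salt = crypto.randomBytes(16);
  const iv = crypto.randomBytes(IV_BYTES); // fresh IV per message; never reuse with a key
  const cipher = crypto.createCipheriv("aes-256-gcm", deriveKey(passphrase, salt), iv);
  const ciphertext = Buffer.concat([cipher.update(plaintext, "utf8"), cipher.final()]);
  // Store everything needed for decryption; only the passphrase stays secret.
  return JSON.stringify({
    salt: salt.toString("base64"),
    iv: iv.toString("base64"),
    tag: cipher.getAuthTag().toString("base64"),
    data: ciphertext.toString("base64"),
  });
}

function decrypt(envelope, passphrase) {
  const { salt, iv, tag, data } = JSON.parse(envelope);
  const key = deriveKey(passphrase, Buffer.from(salt, "base64"));
  const decipher = crypto.createDecipheriv("aes-256-gcm", key, Buffer.from(iv, "base64"));
  decipher.setAuthTag(Buffer.from(tag, "base64")); // final() throws if authentication fails
  const plaintext = Buffer.concat([decipher.update(Buffer.from(data, "base64")), decipher.final()]);
  return plaintext.toString("utf8");
}
```

GCM authenticates as well as encrypts. A wrong passphrase or a modified envelope makes `decrypt` throw instead of returning garbage.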

- SVG-based icon management with fallback support

- Consistent iconography across all UI components

- Optimized loading with async icon collection

- KPI and performance analytics icons
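An icon system with fallback support can be reduced to a name-to-markup registry that always returns usable markup. `IconRegistry`, `FALLBACK_SVG`, and `loadCollection` are hypothetical names used for illustration. They do not describe the actual `svg-icon-manager` API.

```javascript
// Hypothetical sketch of an SVG icon registry with a fallback icon and async bulk loading.
const FALLBACK_SVG =
  '<svg viewBox="0 0 24 24" aria-hidden="true"><circle cx="12" cy="12" r="10"/></svg>';

class IconRegistry {
  constructor() {
    this.icons = new Map(); // icon name -> SVG markup
  }

  register(name, svgMarkup) {
    this.icons.set(name, svgMarkup);
  }

  // Load a whole collection at once; `loader` resolves to { name: svgMarkup, ... }.
  async loadCollection(loader) {
    const collection = await loader();
    for (const [name, svg] of Object.entries(collection)) this.register(name, svg);
    return this.icons.size;
  }

  // Never return undefined: unknown names fall back to a generic icon.
  get(name) {
    return this.icons.get(name) ?? FALLBACK_SVG;
  }
}
```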

- GitHub Repository - Source code and issues

- Release Notes - Latest updates and features

- Project Tasks - Current development priorities

- 🧠 Conversation-Aware Thinking: A thinking system that draws on recent conversation context (the last 3-5 turns) to produce deeper, more insightful AI thinking responses

- ⚡ Enhanced Thinking Performance: Immediate LLM invocation with 1.5-second display delay and intelligent timeout feedback for optimal user experience

- 🔄 Progressive Thinking Types: Four distinct thinking phases (Analysis, Context-Aware, Practical, Synthesis) that build upon conversation history

- 🌐 Contextual Continuity: Thinking responses now reference conversation patterns, follow-up questions, and thematic connections across discussion history

- 📁 Project Structure Cleanup: Organized development utilities into the `utils/` directory and consolidated the documentation structure

- ✨ Documentation Organization: Consolidated and organized all documentation into logical categories

- ✨ Performance Documentation: Comprehensive performance optimization guides

- ✨ Troubleshooting Guides: Complete troubleshooting documentation with DirectLine fixes

- ✨ Feature Documentation: Organized feature-specific documentation and guides

- ✨ Clean Project Structure: Eliminated root directory clutter for better maintainability
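Conversation-aware thinking depends on selecting which recent turns to send along with the thinking request. The sketch treats a turn as one user message plus the replies that follow it, and keeps the last four turns by default. The turn definition, the default, and the prompt wording are assumptions, not the project's actual prompt.

```javascript
// Hypothetical sketch: build a thinking prompt from the most recent conversation turns.
// A "turn" = one user message plus the assistant replies that follow it (assumption).
function recentTurns(messages, maxTurns = 4) {
  const turns = [];
  for (const msg of messages) {
    // Start a new turn at each user message (or for a leading assistant greeting).
    if (msg.role === "user" || turns.length === 0) turns.push([]);
    turns[turns.length - 1].push(msg);
  }
  return turns.slice(-maxTurns);
}

function buildThinkingPrompt(messages, question, maxTurns) {
  const context = recentTurns(messages, maxTurns)
    .flat()
    .map((m) => `${m.role}: ${m.content}`)
    .join("\n");
  return `Conversation so far:\n${context}\n\nThink step by step about: ${question}`;
}
```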

- Issues: GitHub Issues

- Discussions: GitHub Discussions

- Documentation: Browse the `/docs` folder for detailed guides

This project is licensed under the MIT License - see the LICENSE file for details.

Last Updated: August 22, 2025

Version: 3.6.0

Maintained by: MCSChat Contributors

```bash
# Start the chat application server
node chat-server.js
# Access at http://localhost:8080

# Start the Ollama CORS proxy (if using local models)
node ollama-proxy.js
# Proxy runs at http://localhost:3001
```

- Chat Interface: Real-time messaging with streaming support and dual-panel layout

- Agent Manager: Multi-agent configuration and switching with status monitoring

- AI Companion Panel: Performance analytics, conversation analysis, and KPI tracking

- Enhanced Settings Panel: Organized configuration with navigation (Agent Management, AI Companion, Appearance)

- File Handler: Drag-and-drop uploads with preview

- Stream Manager: Progressive response rendering system

- Security Layer: Client-side encryption for sensitive data

- Font Customization: User-configurable font sizes with real-time updates for optimal readability

- Message Renderer: Advanced chronological ordering with timestamp validation and citation handling

- Window Context Manager: Dynamic targeting for Agent Chat vs AI Companion Chat windows

- Citation System: Enhanced reference display with inline styling and proper positioning

- Debug Console: Comprehensive logging system for troubleshooting message ordering and rendering

- Professional System Mode: Full-width document-like interface for data analysis workflows

- Unified CSS Architecture: Custom property system with minimal redundancy and optimized performance

- DirectLine Client: Microsoft Bot Framework connectivity

- Ollama Interface: Local model API integration

- Proxy Server: CORS-compliant local model access

- Storage Engine: Encrypted localStorage with key management

- AES-256 Encryption: All credentials encrypted at rest

- Key Derivation: Secure key generation and management

- Session Security: Temporary credential handling

- CORS Protection: Secure cross-origin request handling
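Ollama streams generated text as newline-delimited JSON. Each line carries a fragment in `response` (for `/api/generate`) or in `message.content` (for `/api/chat`), and the last line has `"done": true`. The sketch below parses such a stream. The function names are hypothetical, and malformed lines are not handled.

```javascript
// Sketch of parsing Ollama's streaming output (newline-delimited JSON).
// Network chunks can end mid-line, so the incomplete tail is buffered until the next chunk.
function createNdjsonParser(onToken, onDone) {
  let buffer = "";
  return function push(chunk) {
    buffer += chunk;
    const lines = buffer.split("\n");
    buffer = lines.pop(); // the last element is an incomplete line (or "")
    for (const line of lines) {
      if (!line.trim()) continue;
      const data = JSON.parse(line);
      const token = data.response ?? data.message?.content ?? "";
      if (token) onToken(token);
      if (data.done) onDone(data); // the final object also carries timing/count stats
    }
  };
}

// Feed a fetch() response body into the parser (browser or Node.js 18+).
async function consumeStream(response, push) {
  const reader = response.body.getReader();
  const decoder = new TextDecoder();
  for (;;) {
    const { value, done } = await reader.read();
    if (done) break;
    push(decoder.decode(value, { stream: true }));
  }
  push(decoder.decode() + "\n"); // flush a final line that lacks a trailing newline
}
```

With the proxy running, the response could come from `fetch("http://localhost:3001/api/generate", { method: "POST", body: JSON.stringify({ model, prompt }) })`. This assumes the proxy forwards paths unchanged.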

```text
MCSChat/
├── index.html                  # Main application interface
├── ollama-proxy.js             # CORS proxy server for local Ollama access
├── chat-server.js              # Development HTTP server
├── src/                        # Modular source code architecture
│   ├── main.js                 # Application entry point
│   ├── core/
│   │   └── application.js      # Main application controller
│   ├── managers/
│   │   ├── agentManager.js     # Multi-agent configuration
│   │   └── sessionManager.js   # Chat session management
│   ├── services/
│   │   └── [DirectLine managers moved to components/directline/]
│   ├── components/
│   │   ├── svg-icon-manager/   # Unified icon management system
│   │   └── directline/         # DirectLine API integration (3 implementations)
│   ├── ui/
│   │   └── messageRenderer.js  # Message display and rendering
│   ├── ai/
│   │   └── aiCompanion.js      # AI companion features
│   └── utils/
│       ├── encryption.js       # AES-256-GCM encryption utilities
│       ├── secureStorage.js    # Encrypted localStorage wrapper
│       ├── domUtils.js         # DOM manipulation helpers
│       └── helpers.js          # General utility functions
├── docs/                       # Comprehensive documentation
│   ├── migration/              # Migration guides and documentation
│   ├── performance/            # Performance optimization guides
│   ├── development/            # Development and contribution guides
│   ├── features/               # Feature documentation
│   └── troubleshooting/        # Troubleshooting guides
├── legacy/                     # Legacy code for reference
├── lib/                        # Third-party libraries
└── images/                     # UI assets and screenshots
```
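`ollama-proxy.js` exists because a page served from another origin cannot call Ollama directly unless CORS headers allow it. A minimal sketch of such a proxy using only Node's `http` module follows. It assumes Ollama on its default port 11434 and a permissive `*` origin, and it does not reproduce the real `ollama-proxy.js`.

```javascript
// Minimal sketch of a CORS proxy in front of a local Ollama server (Node.js http only).
// Assumptions: Ollama at 127.0.0.1:11434 and a permissive "*" allowed origin.
const http = require("node:http");

const OLLAMA = { host: "127.0.0.1", port: 11434 };

// Lower-case names so they override any same-named upstream headers when spread.
const CORS_HEADERS = {
  "access-control-allow-origin": "*",
  "access-control-allow-methods": "GET, POST, OPTIONS",
  "access-control-allow-headers": "Content-Type",
};

function createProxyServer(target = OLLAMA) {
  return http.createServer((req, res) => {
    // Answer CORS preflight requests directly, without contacting Ollama.
    if (req.method === "OPTIONS") {
      res.writeHead(204, CORS_HEADERS);
      res.end();
      return;
    }
    const headers = { ...req.headers, host: `${target.host}:${target.port}` };
    delete headers.origin; // the proxy, not Ollama, now decides which origins are allowed
    const upstream = http.request(
      { host: target.host, port: target.port, path: req.url, method: req.method, headers },
      (upstreamRes) => {
        // Pipe the body straight through so token streaming is preserved.
        res.writeHead(upstreamRes.statusCode, { ...upstreamRes.headers, ...CORS_HEADERS });
        upstreamRes.pipe(res);
      }
    );
    upstream.on("error", (err) => {
      if (res.headersSent) return res.destroy(err);
      res.writeHead(502, { "content-type": "text/plain", ...CORS_HEADERS });
      res.end(`Ollama request failed: ${err.message}`);
    });
    req.pipe(upstream);
  });
}
```

`createProxyServer().listen(3001)` would start it on the port used in the quick-start commands. For anything beyond local development, restrict the allowed origin to the app's own origin.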

- Application Controller (`src/core/application.js`): Central orchestrator managing all modules

- Main Entry Point (`src/main.js`): Application initialization and DOM-ready management

- AgentManager: Multiple bot configurations and credential management

- SessionManager: Chat sessions, message history, and conversation state

- DirectLineManager: Microsoft Bot Framework DirectLine API integration

- MessageRenderer: Message display, adaptive cards, streaming text, citations

- SVG Icon Manager: Unified icon system with async loading and fallbacks

- AICompanion: Ollama model integration, conversation analysis, KPI tracking

- Encryption: AES-256-GCM encryption for secure storage

- SecureStorage: Encrypted localStorage wrapper

- DOMUtils: Safe DOM manipulation with error handling

- Helpers: Common utilities and formatting functions
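The MessageRenderer's chronological ordering with timestamp validation could work along these lines: parse each timestamp, sort the valid ones, and use arrival order as a tiebreaker. Placing messages with invalid timestamps at the end, and the `timestamp` field name, are assumptions.

```javascript
// Hypothetical sketch: order messages chronologically after validating timestamps.
// Messages with missing or unparseable timestamps keep their arrival order and are
// placed after all valid ones (an illustrative policy).
function orderMessages(messages) {
  const withIndex = messages.map((msg, index) => {
    const time = Date.parse(msg.timestamp); // NaN for undefined or malformed values
    return { msg, index, time: Number.isNaN(time) ? null : time };
  });

  withIndex.sort((a, b) => {
    if (a.time === null && b.time === null) return a.index - b.index;
    if (a.time === null) return 1;
    if (b.time === null) return -1;
    // Equal timestamps fall back to arrival order.
    return a.time - b.time || a.index - b.index;
  });

  return withIndex.map((entry) => entry.msg);
}
```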

- ES6 Modules: Clean import/export system

- Singleton Pattern: Shared instances for managers and services

- Event-Driven Architecture: Modular communication through custom events

- Separation of Concerns: Single responsibility per module
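The singleton and event-driven patterns combine naturally in a shared event bus. This is a hypothetical sketch: the `EventBus` class and the event names are illustrative, and the real modules may use DOM `CustomEvent`s instead.

```javascript
// Hypothetical sketch: one shared event bus that lets modules communicate
// without importing each other.
class EventBus {
  constructor() {
    this.listeners = new Map(); // event name -> Set of handlers
  }

  // Subscribe; returns a function that removes this handler again.
  on(event, handler) {
    if (!this.listeners.has(event)) this.listeners.set(event, new Set());
    this.listeners.get(event).add(handler);
    return () => this.listeners.get(event).delete(handler);
  }

  emit(event, detail) {
    for (const handler of this.listeners.get(event) ?? []) handler(detail);
  }
}

// Singleton instance. In an ES module this line would be `export const eventBus = ...`;
// because a module is evaluated only once, every importer would share this instance.
const eventBus = new EventBus();
```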

- Agent Name: Friendly identifier for the bot

- DirectLine Secret: Azure Bot Service authentication key

- Connection Status: Real-time connectivity monitoring

- Streaming Options: Enable/disable streaming response simulation

- Provider Selection: OpenAI GPT, Anthropic Claude, Azure OpenAI, or Local Ollama

- API Configuration: Secure storage of API keys and credentials

- Model Selection: Automatic discovery and selection of available models

- Connection Testing: Built-in connectivity verification

- Agent Chat Font Size: Customizable font size (10-20px) with real-time updates

- AI Companion Font Size: Separate font size control (8-16px) with instant preview

- Message Display Mode: Choose between bubble chat or full-width professional mode

- Professional Interface: Document-like professional display

- Compact Layout: Space-efficient spacing for maximum information density

- Icon System: Unified SVG icons with consistent styling
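The font-size settings can be enforced by clamping the chosen value to its documented range (10-20px for Agent Chat, 8-16px for AI Companion). The result is then published as a CSS custom property that stylesheets can reference. The custom property names, and the fallback to the minimum for non-numeric input, are assumptions.

```javascript
// Hypothetical sketch: clamp font sizes to the documented ranges and apply them as
// CSS custom properties. The property names are illustrative assumptions.
const FONT_RANGES = {
  agentChat: { min: 10, max: 20, cssVar: "--agent-chat-font-size" },
  aiCompanion: { min: 8, max: 16, cssVar: "--ai-companion-font-size" },
};

function clampFontSize(area, value) {
  const { min, max } = FONT_RANGES[area];
  const size = Math.round(Number(value));
  if (!Number.isFinite(size)) return min; // non-numeric input falls back to the minimum
  return Math.min(max, Math.max(min, size));
}

// `style` is any object with setProperty, e.g. document.documentElement.style in a browser.
function applyFontSize(style, area, value) {
  const size = clampFontSize(area, value);
  style.setProperty(FONT_RANGES[area].cssVar, `${size}px`);
  return size;
}
```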

```bash
# Clone the repository
git clone https://github.com/illusion615/MCSChat.git
cd MCSChat

# Start development server
python -m http.server 8000

# Access application
# Navigate to http://localhost:8000
```

Please read our Contributing Guide for details on our code of conduct and the process for submitting pull requests.


Last Updated: August 14, 2025

Version: 3.5.0

Maintained by: MCSChat Contributors