This framework is designed to automate the validation of these reports by applying a set of rules to check for data integrity and highlight any regressions.

My assumption was that the production data are the "source of truth".

- Goal

- Project Overview

- Dependencies

- Getting Started

- Running the Tests

- Validation Rules

- Test Quality Improvements

The goal of this framework is to automate regression testing for Focal's reports in both CSV and PDF formats. The source of truth is production data. The tests will identify any discrepancies to ensure data integrity and consistency before deploying changes.

The framework leverages the following technologies and tools:

- Java 17: Main programming language.

- TestNG: Test orchestration and execution.

- AssertJ: Fluent assertions for data verification.

- Allure: Test reporting.

- PDFCompare: PDF comparison.

- OpenCSV: CSV parsing and handling.

The project is configured with Maven and uses the following dependencies for specific functionalities:

- TestNG: Testing framework for running and organizing tests.

- AssertJ: For fluent assertion statements.

- Lombok: Reduces boilerplate code.

- OpenCSV: Reads and writes CSV data for validation.

- PDFCompare: Compares PDF content for detecting changes.

- Allure: Generates test reports.

- Java 17 or higher: Ensure JDK 17 or higher is installed.

- Maven 3.9.9: Required to build and manage dependencies.

- GNU Make 4.3: Command aggregator for fast and easy use.

- Linux (Ubuntu 24.04 LTS): Main execution platform.

- Install Java, Maven, and Make on any Linux distribution, preferably Ubuntu.

- Clone the repository: `git clone git@github.com:ilusi0npl/focal-test.git`, then `cd focal-report-regression`.

- Run all tests: `make build-test-project`

• Runs all tests in the project, clearing any previous build artifacts before executing the test suite.

• Command:

make run-all-tests• Runs only the CSV comparison tests.

• Uses ProductRecordComparisonTest to compare the production and staging CSV files by passing file paths as properties.

• Command:

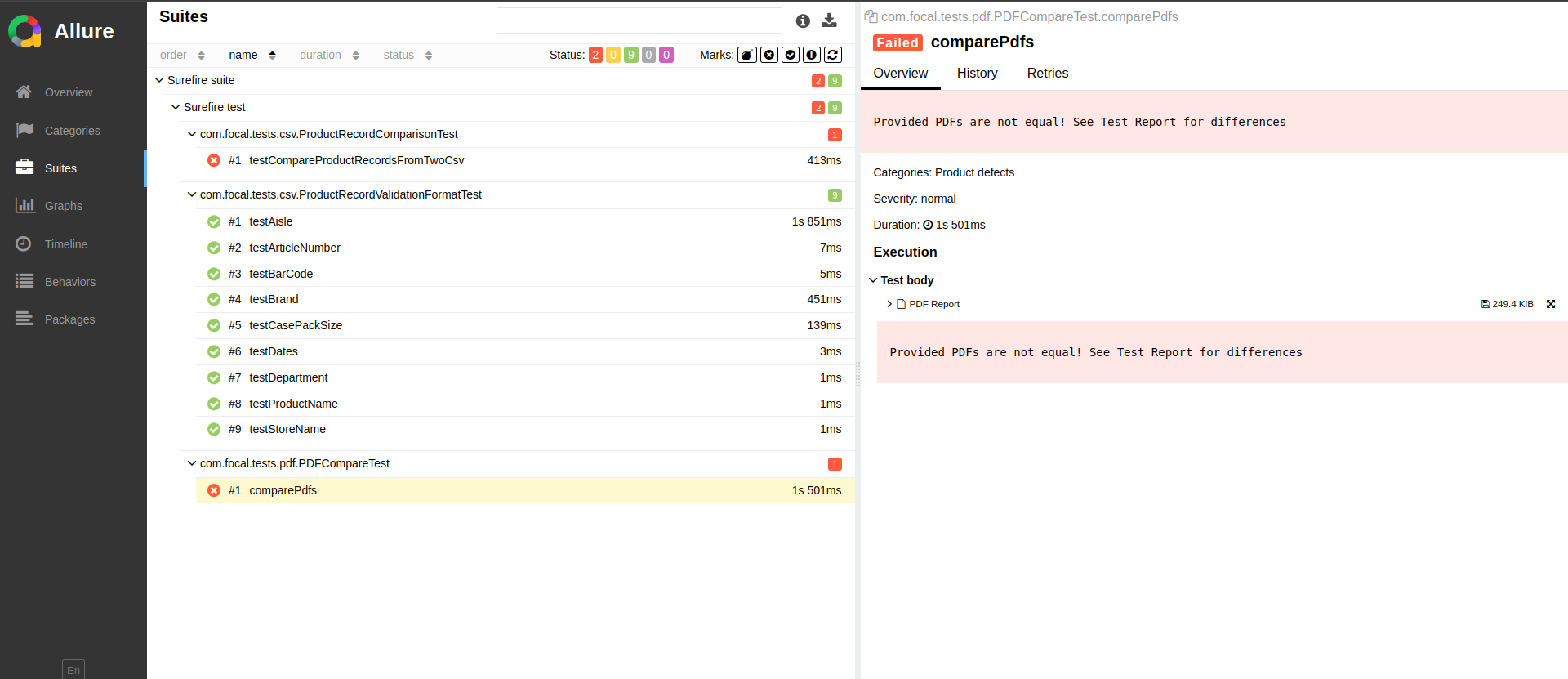

make run-csv-tests-files• Executes only the PDF comparison tests.

• Runs the PDFCompareTest class to verify that production and staging PDFs match.

• Command:

make run-pdf-comparison-tests• Runs the ProductRecordValidationFormatTest on the production CSV file to validate format compliance.

• The production file path is passed as a property.

• Command:

make run-validation-format-tests-production• Runs the ProductRecordValidationFormatTest on the staging CSV file to validate format compliance.

• The staging file path is passed as a property.

• Command:

make run-validation-format-tests-stagingTo generate test report execute:

make generate-test-reportReport should automatically pop up in your default browser.
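Several of the targets pass file paths to the tests as properties. The following is a minimal sketch of how a test could read such a path from a JVM system property and fail fast when it is missing. The class name `ReportPathResolver` and the property name `production.csv.path` are hypothetical; the names actually used by the project's Makefile and tests may differ.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

/** Sketch: resolves a report path from a JVM system property such as -Dproduction.csv.path=... */
class ReportPathResolver {

    // Hypothetical property name; the project may use a different one.
    static final String PRODUCTION_CSV_PROPERTY = "production.csv.path";

    /** Returns the path stored in the given property, or fails with a clear message. */
    static Path resolve(String propertyName) {
        String value = System.getProperty(propertyName);
        if (value == null || value.isBlank()) {
            throw new IllegalStateException(
                    "Missing required property: -D" + propertyName + "=<file>");
        }
        return Paths.get(value);
    }

    public static void main(String[] args) {
        // Simulates what a build tool would do with -Dproduction.csv.path=...
        System.setProperty(PRODUCTION_CSV_PROPERTY, "reports/production.csv");
        System.out.println(resolve(PRODUCTION_CSV_PROPERTY));
    }
}
```

Failing fast on a missing property keeps a misconfigured run from being reported as a data discrepancy.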

- There are discrepancies in both the CSV and the PDF reports.

For CSV:

- Staging data does not follow the same validation rules as production data. Run `make run-validation-format-tests-staging` to see details.

- The one-to-one comparison between staging and production also fails: the results do not match. Run `make run-all-tests` and see the `testCompareProductRecordsFromTwoCsv` test results.

For PDF:

- From a structural point of view, these are two different files.

- From a content point of view, the data is shifted, probably due to ... See pdf-result.pdf.

- General Field Requirements
  - Fields such as `Store Name`, `Product Name`, and `Department` should be non-null and non-empty.

- Unique Identifier Format
  - Unique identifiers, such as `Barcode`, should:
    - Be non-null and non-empty.
    - Follow a specific pattern (e.g., `Barcode` might start with a character and contain a set number of digits).

- Number Formatting
  - Numeric fields like `Case Pack Size` or `Stock Levels` should:
    - Be non-null and positive.
    - Meet specific constraints, such as being even or falling within a logical range.

- Text Format
  - Text fields, such as `Aisle` or `Article Number`, should:
    - Follow a specific format (e.g., two letters followed by two digits for `Aisle`).
    - Have a consistent length if specified.

- Date and Time Format
  - Date fields, like `Marked At` or `Last Received Date`, should:
    - Be in a standardized format (e.g., `yyyy-MM-dd HH:mm:ss`).
    - Be logically consistent when multiple dates are related (e.g., `Last Received Date` should not be after `Marked At`).
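The format rules above can be sketched as plain Java checks over a single CSV row. This is an illustration under stated assumptions, not the project's actual implementation: the class name `ProductRecordFormatRules`, the barcode pattern (one uppercase letter plus 12 digits), and the "positive and even" constraint on `Case Pack Size` are all hypothetical choices made for the example.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeParseException;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.regex.Pattern;

/** Sketch of per-record format checks; patterns and limits are assumptions. */
class ProductRecordFormatRules {

    // Assumed formats: one uppercase letter + 12 digits; two letters + two digits.
    static final Pattern BARCODE = Pattern.compile("[A-Z]\\d{12}");
    static final Pattern AISLE = Pattern.compile("[A-Z]{2}\\d{2}");
    static final DateTimeFormatter TIMESTAMP =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    static boolean isNonBlank(String value) {
        return value != null && !value.isBlank();
    }

    static boolean matches(Pattern pattern, String value) {
        return value != null && pattern.matcher(value).matches();
    }

    /** True for positive, even integers such as "12"; false for null, "0", "7" or "abc". */
    static boolean isPositiveEven(String value) {
        if (value == null) {
            return false;
        }
        try {
            int number = Integer.parseInt(value);
            return number > 0 && number % 2 == 0;
        } catch (NumberFormatException e) {
            return false;
        }
    }

    /** Parses a timestamp in the expected format, or returns empty when it is missing or malformed. */
    static Optional<LocalDateTime> parseTimestamp(String value) {
        if (value == null) {
            return Optional.empty();
        }
        try {
            return Optional.of(LocalDateTime.parse(value, TIMESTAMP));
        } catch (DateTimeParseException e) {
            return Optional.empty();
        }
    }

    /** Returns one message per violated rule; an empty list means the row passed. */
    static List<String> validate(Map<String, String> row) {
        List<String> errors = new ArrayList<>();
        for (String field : List.of("Store Name", "Product Name", "Department")) {
            if (!isNonBlank(row.get(field))) {
                errors.add(field + " must be non-null and non-empty");
            }
        }
        if (!matches(BARCODE, row.get("Barcode"))) {
            errors.add("Barcode does not match the expected pattern");
        }
        if (!isPositiveEven(row.get("Case Pack Size"))) {
            errors.add("Case Pack Size must be a positive, even integer");
        }
        if (!matches(AISLE, row.get("Aisle"))) {
            errors.add("Aisle must be two letters followed by two digits");
        }
        Optional<LocalDateTime> received = parseTimestamp(row.get("Last Received Date"));
        Optional<LocalDateTime> marked = parseTimestamp(row.get("Marked At"));
        if (received.isEmpty() || marked.isEmpty()) {
            errors.add("Dates must use the format yyyy-MM-dd HH:mm:ss");
        } else if (received.get().isAfter(marked.get())) {
            errors.add("Last Received Date must not be after Marked At");
        }
        return errors;
    }
}
```

Collecting every violation in a list, instead of stopping at the first one, lets a single test run report all problems found in a row.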

- Record Count Check
  - Ensure that both production and staging data files contain the same number of records.

- Field-by-Field Record Comparison
  - Each field in staging records should match the corresponding field in production records to detect any inconsistencies.

- Value Range Constraints
  - Fields like `Stock Ratio` or `Case Pack Size` should stay within a specified range based on logical or business rules.

- Predefined Value Sets
  - Fields like `Status` or `Availability` should only contain predefined, expected values (e.g., "In Stock", "Out of Stock").

- Data Cleanliness
  - Text fields such as `Product Name`, `Brand`, and `Store Name` should not have leading/trailing spaces or special characters unless allowed.

- Cross-Field Consistency
  - Ensure logical consistency between fields, such as alignment between `Department` and `Product Category`.

- Error Handling Scenarios
  - Test handling of missing, null, or malformed values in essential fields to confirm the system manages errors gracefully.
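A few of the rules above (record count, field-by-field comparison, predefined value sets, cleanliness, and range checks) can be sketched as follows. This is a simplified illustration, not the project's code: the class name `CsvRegressionChecks` is hypothetical, rows are modelled as column-to-value maps, and records are assumed to be matched by position, which may not hold if the two files are ordered differently.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Objects;
import java.util.Set;
import java.util.TreeSet;

/** Sketch of production-vs-staging checks; rows are matched by position (an assumption). */
class CsvRegressionChecks {

    // Assumed value set, taken from the example values in the rule description.
    static final Set<String> ALLOWED_STATUS = Set.of("In Stock", "Out of Stock");

    /** Returns one message per discrepancy; an empty list means the files match. */
    static List<String> compare(List<Map<String, String>> production,
                                List<Map<String, String>> staging) {
        List<String> diffs = new ArrayList<>();
        if (production.size() != staging.size()) {
            diffs.add("Record count differs: production=" + production.size()
                    + ", staging=" + staging.size());
        }
        int common = Math.min(production.size(), staging.size());
        for (int i = 0; i < common; i++) {
            Map<String, String> expected = production.get(i);
            Map<String, String> actual = staging.get(i);
            // Union of column names, sorted, so missing columns on either side are reported.
            Set<String> columns = new TreeSet<>(expected.keySet());
            columns.addAll(actual.keySet());
            for (String column : columns) {
                // Objects.equals treats a missing (null) value as a mismatch instead of throwing.
                if (!Objects.equals(expected.get(column), actual.get(column))) {
                    diffs.add("Row " + (i + 1) + ", " + column + ": production='"
                            + expected.get(column) + "', staging='" + actual.get(column) + "'");
                }
            }
        }
        return diffs;
    }

    static boolean isAllowedStatus(String value) {
        return value != null && ALLOWED_STATUS.contains(value);
    }

    /** True when the value has no leading or trailing whitespace. */
    static boolean isClean(String value) {
        return value != null && value.equals(value.strip());
    }

    static boolean inRange(double value, double min, double max) {
        return value >= min && value <= max;
    }

    public static void main(String[] args) {
        List<Map<String, String>> production =
                List.of(Map.of("Barcode", "A1", "Status", "In Stock"));
        List<Map<String, String>> staging =
                List.of(Map.of("Barcode", "A1", "Status", "Sold"));
        compare(production, staging).forEach(System.out::println);
    }
}
```

The sketch does not cover cross-field consistency or special-character checks, since those depend on business rules that are not specified here.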

More generally, we also need to implement stricter validation of data types, such as integer or decimal fields, to catch subtle format changes or type mismatches.
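One possible way to tighten type validation is to accept only the exact textual form expected for each field before parsing it. The sketch below is an assumption about how this could be done, not existing project code; the class name `StrictNumberParsing` and the fixed-scale rule for decimals are hypothetical.

```java
import java.math.BigDecimal;
import java.util.Optional;

/** Sketch of strict numeric parsing that rejects lenient or ambiguous formats. */
class StrictNumberParsing {

    /** Accepts plain integers such as "12" or "-3"; rejects "12.0", "1e3", " 12" and null. */
    static Optional<Integer> parseStrictInt(String raw) {
        if (raw == null || !raw.matches("-?\\d+")) {
            return Optional.empty();
        }
        try {
            return Optional.of(Integer.parseInt(raw));
        } catch (NumberFormatException e) {
            // Digits only, but outside the int range.
            return Optional.empty();
        }
    }

    /** Accepts decimals with exactly the given number of fraction digits, e.g. "0.75" for scale 2. */
    static Optional<BigDecimal> parseStrictDecimal(String raw, int scale) {
        if (raw == null || !raw.matches("-?\\d+\\.\\d+")) {
            return Optional.empty();
        }
        BigDecimal value = new BigDecimal(raw);
        return value.scale() == scale ? Optional.of(value) : Optional.empty();
    }
}
```

With checks like these, a staging value of `12.0` in a field that production writes as `12` is flagged as a format change even though both parse to the same number.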

The `PDFCompareTest` class aims to ensure that production and staging PDFs are identical in content and structure.

Because Java has few free libraries for detailed PDF validation, the current approach only checks the report pixel by pixel.

This approach is not enough.
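To illustrate why a pixel-based check is limited, here is a minimal sketch of the principle applied to two already-rendered page images. It uses only the JDK's `BufferedImage` and is not how PDFCompare works internally; the class name `PixelDiff` is hypothetical.

```java
import java.awt.image.BufferedImage;

/** Sketch: counts differing pixels between two rendered pages of equal size. */
class PixelDiff {

    /** Returns the number of differing pixels, or -1 when the image dimensions differ. */
    static long countDifferingPixels(BufferedImage expected, BufferedImage actual) {
        if (expected.getWidth() != actual.getWidth()
                || expected.getHeight() != actual.getHeight()) {
            return -1;
        }
        long differing = 0;
        for (int y = 0; y < expected.getHeight(); y++) {
            for (int x = 0; x < expected.getWidth(); x++) {
                if (expected.getRGB(x, y) != actual.getRGB(x, y)) {
                    differing++;
                }
            }
        }
        return differing;
    }

    public static void main(String[] args) {
        BufferedImage production = new BufferedImage(4, 4, BufferedImage.TYPE_INT_RGB);
        BufferedImage staging = new BufferedImage(4, 4, BufferedImage.TYPE_INT_RGB);
        staging.setRGB(1, 2, 0xFFFFFF); // change a single pixel to white
        System.out.println(countDifferingPixels(production, staging)); // prints 1
    }
}
```

A result like this says that something changed and where, but not which value, field, or font is responsible, which is why the structural checks listed next are needed.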

- Structural and Content Equality
  - Match in Page Count: Ensure both PDFs have the same number of pages.
  - Text and Element Positioning: Validate that text elements and graphical elements are positioned identically on both PDFs.
  - Font and Style Consistency: Confirm that fonts, styles, and other text formatting are consistent across both files.

Java has limited support for robust, free PDF comparison tools, which restricts thorough PDF content validation. Current libraries may not reliably detect graphical discrepancies or slight textual variations, particularly in complex or image-heavy PDFs. Much better solutions exist on the market, but they are paid.

To improve accuracy and consistency in PDF validation, consider the following enhancements:

- OCR Integration
  - Text Extraction for Image-Based PDFs: Integrate an Optical Character Recognition (OCR) tool to read and validate text from graphical content, ensuring even image-based text is verified.
  - Enhanced Text Comparison: OCR can help recognize and validate text content that traditional PDF comparison tools might miss, which is particularly beneficial for PDFs that include images of text or complex layouts.

- Adoption of CSV Validation Rules for PDFs
  - Apply Similar Rules: Where possible, implement the same validation rules as those for CSV files, ensuring that fields such as product names, identifiers, and other essential details are consistent in content and format across production and staging.
  - Structured Data Consistency: Ensure that key structured data (e.g., headers, table contents) matches in both content and positioning across PDFs.