This project explores the intersection of Natural Language Processing (NLP) and Computer Vision through text-based image editing. It combines three models (Grounding DINO, Segment Anything Model (SAM), and Stable Diffusion) into an intuitive system that lets users modify images using textual instructions.

- Grounding DINO: Detects and localizes objects in an image from a text prompt, so an edit can target a specific object precisely.
- Segment Anything Model (SAM): Provides robust segmentation that isolates the targeted objects or regions for editing.
- Stable Diffusion: Generates and enhances image content, enabling creative transformations driven by text instructions.
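Taken together, the three models form a pipeline: detect the object named in the prompt, segment it, then regenerate the masked region. The sketch below is a minimal, framework-free illustration of that data flow under stated assumptions, not this project's code. `detect`, `segment`, and `inpaint` are hypothetical callables standing in for Grounding DINO, SAM, and Stable Diffusion inpainting; images are plain nested lists, boxes are assumed to be pixel `(x0, y0, x1, y1)` tuples, and the 0.35 score threshold and the mask dilation step are illustrative choices.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Assumed representations (illustrative, not this project's types):
Image = List[List[int]]                   # H x W grid of pixel values
Mask = List[List[bool]]                   # H x W binary mask, True = edit here
Box = Tuple[float, float, float, float]   # (x0, y0, x1, y1) in pixels


@dataclass
class Detection:
    box: Box
    score: float   # detector confidence for this box
    phrase: str    # text phrase the box was matched to


# Hypothetical stand-ins for the three models.
Detector = Callable[[Image, str], List[Detection]]   # Grounding DINO role
Segmenter = Callable[[Image, Box], Mask]             # SAM role (box prompt)
Inpainter = Callable[[Image, Mask, str], Image]      # Stable Diffusion role


def union_masks(masks: List[Mask]) -> Mask:
    """Merge per-object masks into one mask covering every detected object."""
    h, w = len(masks[0]), len(masks[0][0])
    return [[any(m[y][x] for m in masks) for x in range(w)] for y in range(h)]


def dilate(mask: Mask, radius: int) -> Mask:
    """Grow the mask by `radius` pixels in every direction (square neighborhood)."""
    h, w = len(mask), len(mask[0])
    out = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mask[y][x]:
                for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                    for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                        out[yy][xx] = True
    return out


def edit_image(
    image: Image,
    target_prompt: str,
    replacement_prompt: str,
    detect: Detector,
    segment: Segmenter,
    inpaint: Inpainter,
    box_threshold: float = 0.35,   # illustrative value, not taken from this project
    dilation_radius: int = 2,      # illustrative value, not taken from this project
) -> Image:
    """Detect -> segment -> inpaint, e.g. target "lamb", replacement "wolf"."""
    # 1. Text-conditioned detection; keep only confident boxes.
    detections = [d for d in detect(image, target_prompt) if d.score >= box_threshold]
    if not detections:
        return image  # nothing matched the prompt; leave the image unchanged
    # 2. Segment each detected box and merge the results into one mask.
    mask = union_masks([segment(image, d.box) for d in detections])
    # 3. Slightly enlarge the mask so the generated content blends at the edges.
    mask = dilate(mask, dilation_radius)
    # 4. Regenerate only the masked region from the replacement prompt.
    return inpaint(image, mask, replacement_prompt)
```

For the lamb-to-wolf example, a call would look like `edit_image(img, "lamb", "wolf", detect, segment, inpaint)`. Dilating the mask is a common way to give an inpainting model some context around an object's edges; whether this project does so is an assumption.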

Before you begin, ensure you have the following installed on your system:

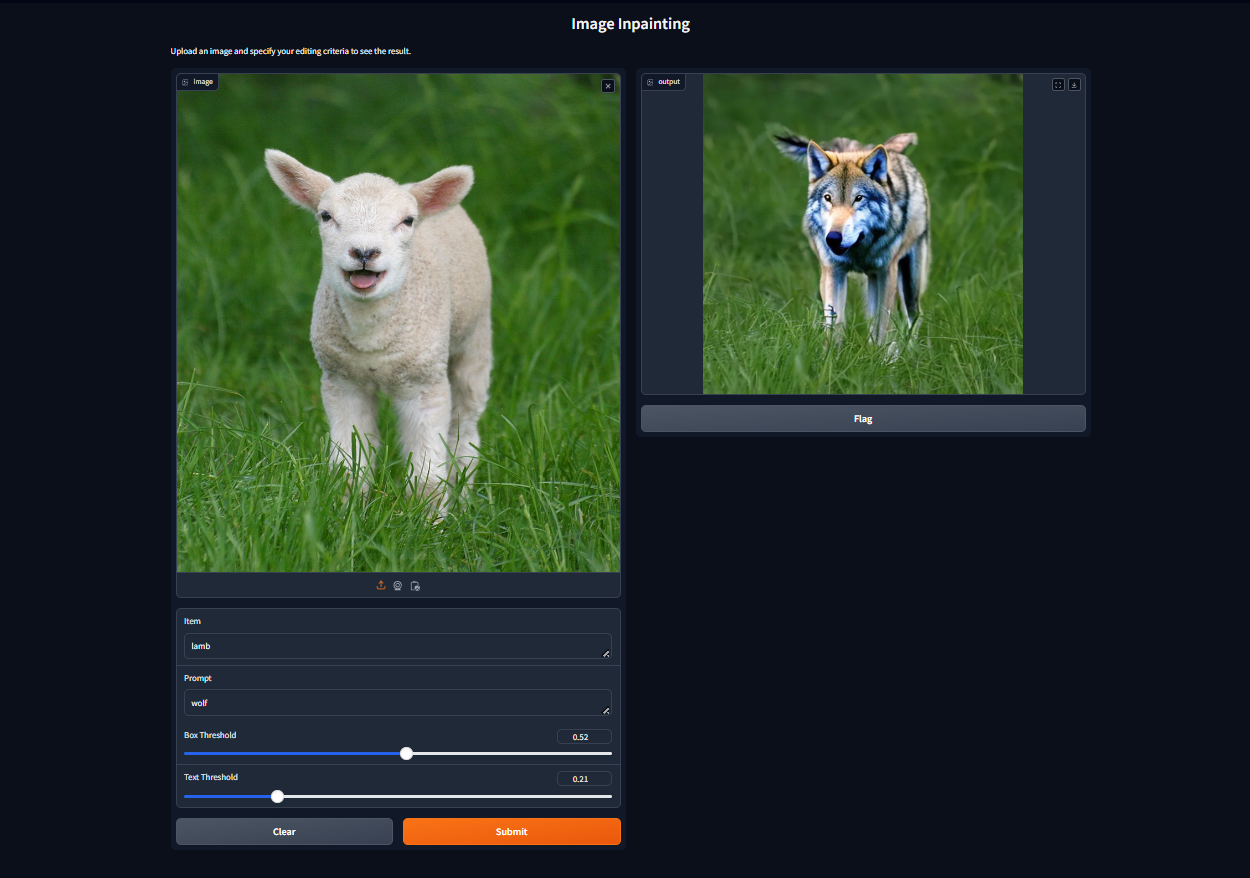

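Build the Docker image, then run it with GPU access, exposing port 7860:

```bash
docker build -t image_editing .
docker run --gpus all -p 7860:7860 image_editing
```

Example:

- Replacing a lamb with a wolf.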