This repository contains supplementary code for the Imitrob dataset, available at http://imitrob.ciirc.cvut.cz/imitrobdataset.php. The code and the dataset are intended for training and evaluating (benchmarking) 6D pose estimators.

Simply clone this repo, install the required Python packages (listed below) and download the necessary data.

- Python packages required to run the code are listed in the requirements.txt file. All the required packages can be installed by:

$ pip install -r requirements.txt

- Training a 6D pose estimator requires the Imitrob Train dataset (a "light" version is also available) and a dataset of background images (for augmentation), e.g., ImageNet or a subset of it (you can use mini-ImageNet to generate the subset).

- Evaluation requires the Imitrob Test dataset.

- The scripts were tested on Ubuntu 18.04 with Python 3.6. Nonetheless, they should run on most platforms where PyTorch can be installed and with Python version 3.6 or later.

The base class for working with the Imitrob dataset is imitrob_dataset in imitrob_dataset.py and it is based on the PyTorch Dataset class. As such, it can be easily used to train any PyTorch model via the DataLoader class.

The Imitrob dataset specific parameters are described in the imitrob_dataset constructor. For general PyTorch dataset parameters and usage, please see the torch.utils.data.Dataset class documentation.
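As a rough illustration of the Dataset/DataLoader pattern mentioned above, the following minimal sketch uses a hypothetical stand-in class (`StandInPoseDataset`) rather than the real `imitrob_dataset`, whose constructor arguments are not reproduced here. The sample layout (an image tensor plus a 7-element pose vector) is an assumption made only for this example.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class StandInPoseDataset(Dataset):
    """Hypothetical stand-in for imitrob_dataset; yields random images and fixed poses."""

    def __init__(self, num_samples=8, image_size=(3, 64, 64)):
        self.num_samples = num_samples
        self.image_size = image_size

    def __len__(self):
        return self.num_samples

    def __getitem__(self, index):
        # A real dataset would load an image and its 6D pose annotation here.
        image = torch.rand(self.image_size)
        pose = torch.zeros(7)  # assumed layout: 3D translation + quaternion
        pose[3] = 1.0          # identity rotation in the assumed layout
        return {"image": image, "pose": pose}


dataset = StandInPoseDataset()
# DataLoader handles batching and shuffling for any Dataset subclass.
loader = DataLoader(dataset, batch_size=4, shuffle=True)

for batch in loader:
    images, poses = batch["image"], batch["pose"]
    print(images.shape, poses.shape)
```

With the real dataset, only the construction of `dataset` should need to change; the DataLoader loop stays the same.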

The trainer.py file contains an example of using the dataset with the DOPE pose estimator. The evaluation.py file is used to evaluate an already trained network. Use the training and evaluation scripts as a template to train and test your estimator. In case of a PyTorch model, you will typically only need to assign an instance of your model to the net variable, i.e., changing this line of the trainer.py file:

net = dope_net(lr, gpu_device) # switch dope_net for your own network

A similar change has to be made in the evaluation.py script.

The data acquisition process is described in this readme file.

The trainer.py file performs training (and evaluation after training, though this can be skipped). The script accepts command line arguments that specify which parts of the dataset should be used. Run it with the -h option to see all available options and their descriptions:

$ python trainer.py -h

Here is an example of executing the trainer.py script with a condensed summary of the options:

$ python trainer.py --traindata "path/to/train/data/directory" \
    --testdata "path/to/test/data/directory" \
    --bg_path "path/to/background/data/directory" \
    --exp_name experiment_1 \
    --randomizer_mode overlay \
    --gpu_device 0 \
    --dataset_type roller \
    --subject [S1,S2,S3] \
    --camera [C1,C2] \
    --hand [LH,RH] \
    --subject_test [S4] \
    --camera_test [C1,C2] \
    --hand_test [LH,RH] \
    --task_test [clutter,round,sweep,press,frame,sparsewave,densewave]

The list notation (multiple items in square brackets, e.g., [XX,YY,ZZ]) is used to specify multiple options for a given argument. For example, --camera [C1,C2] tells the script to use images from both the C1 and C2 cameras.
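To make the list notation concrete, here is a minimal sketch of how a value such as `[C1,C2]` could be converted to and from a Python list. The helpers `parse_list_arg` and `format_list_arg` and the stand-in parser are hypothetical and written only for this example; the actual parsing in trainer.py may differ.

```python
import argparse


def parse_list_arg(value):
    """Turn '[C1,C2]' (or a bare 'C1') into ['C1', 'C2'] (or ['C1'])."""
    text = value.strip()
    if text.startswith("[") and text.endswith("]"):
        text = text[1:-1]
    # Split on commas and drop empty items such as those from '[]'.
    return [item.strip() for item in text.split(",") if item.strip()]


def format_list_arg(items):
    """Turn ['C1', 'C2'] back into the '[C1,C2]' notation."""
    return "[" + ",".join(items) + "]"


# Hypothetical stand-in parser, not the one defined in trainer.py.
parser = argparse.ArgumentParser(description="List-notation example")
parser.add_argument("--camera", type=parse_list_arg, default=["C1"])
parser.add_argument("--subject", type=parse_list_arg, default=["S1"])

args = parser.parse_args(["--camera", "[C1,C2]", "--subject", "[S1,S2,S3]"])
print(args.camera)
print(format_list_arg(args.subject))
```

In shells that treat square brackets as glob patterns (zsh, for instance), the bracketed values may need to be quoted, e.g. `--camera "[C1,C2]"`.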

The evaluation.py file performs the estimator evaluation. Typically, the trainer.py script is used to both train and evaluate the estimator. However, the evaluation can also be performed separately. As with trainer.py, dataset and network settings are passed to the script via the command line. The first positional argument is the path to the trained weights of the network (typically directory/something.pth.tar). Run the script with the -h argument to see the list and description of possible arguments:

$ python evaluation.py -h

We provide example weights for DOPE here. The weights are trained for the glue gun tool only, using the following configuration:

$ python trainer.py --traindata "Imitrob\Train" --testdata "Imitrob\Test" --bg_path "mini_imagenet_dataset\images" --epochs 5 --exp_name experiment_5 --randomizer_mode overlay --gpu_device 0 --dataset_type gluegun --subject [S1,S2,S3,S4] --camera [C1,C2] --hand [LH,RH] --subject_test [S1,S2,S3,S4] --camera_test [C1,C2] --hand_test [LH,RH] --task_test [clutter,round,sweep,press,frame,sparsewave,densewave]

These weights can be used to run the evaluation.py script.

If you develop and evaluate your own model on our dataset, we would appreciate it if you sent us your results. We will include them in the leaderboard below.

| Method | Training configuration | Testing configuration | Metric | Results |
|---|---|---|---|---|
| DOPE (original) | full ImitrobTrain | full ImitrobTest | ADD5 | |

Data acquisition tutorial (codes, docker, sample data)

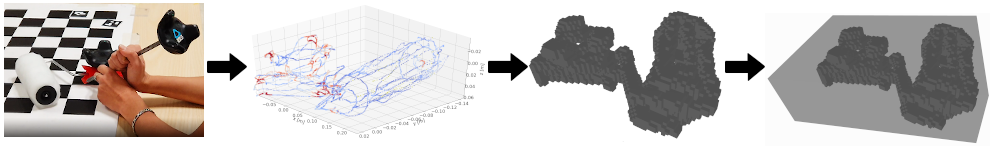

We provide methods for the acquisition of training data for a new tool.

First, mount a tracker on the tool and prepare a tracing tool with a mounted tracker.

Afterwards, follow the steps to calibrate the data acquisition setup and the bounding box for the tool.

Now you can record data for the object while manipulating it in front of the green background. 6DoF positions are extracted and object segmentation masks are created.

data_recording_train_seg.mp4

The individual steps, with the corresponding code and sample data, are described in the Data acquisition tutorial.

This code is published under the CC BY-NC-SA 4.0 license.

If you use this code in your research, please give us attribution using the following citation:

@Misc{imitrobdataset,
  author = {{CIIRC CTU in Prague}},
  title = {{I}mitrob dataset version 2.0},
  howpublished = {\url{http://imitrob.ciirc.cvut.cz/imitrobdataset.php}},
  year = 2022
}

Part of this work is based on the code of NVidia Deep Object Pose (paper). We also used the mini-ImageNet library (proposed in the paper Matching Networks for One Shot Learning) to generate the background images in our benchmarks.

Manager of the dataset: karla.stepanova@cvut.cz.