Agile and DevOps principles to improve CyberSecurity Operations. https://github.com/infchg/SecOps

Latest:

- Proposed modern (YAML) handling of keys for authentication and authorization against GCP, Keycloak, and Okta, at github.com/infchg/SecOps (2022): https://github.com/infchg/SecOps/blob/master/KeyCloack-yaml.md

(expanded and moved from the former https://github.com/infchg/palo-alto-watch)

Applying Modern Agile to improve CERT, SOC, and CSIRT operations: https://github.com/infchg/SecOps

| Steps to | 🌡📈Collect | 💡✋Alert | 🥝🍀Serve | 🌐 Guideline |
|---|---|---|---|---|
| ❌Gap | Unrealistic manual checks | Hard & slow manual verification | Need summaries (BI) to add value | Teams in silos, duplicated efforts❌ |
| ✋Acted | Automated collection | Automated alerts | Prepared Python notebooks | Seeded a transparent guide💡 |
| ✅Value | Teams spot differences easily | Teams query and build upon alerts | Notebooks as calculation aids | Space for team cooperation🍀 |
| 🌏Share safely | Data shared online | Alerts shared online | Share key findings | Edit gradually🌐, gain consensus |
| ✋Action, for teams | Internal use, keep adding | Broader teams add feedback | Got external feedback | Leads to expanded goals & teams |

✋💡alert (Symmetry & Icons by InfChg CC-BY)

/ \

🌡📈📌collect value✅🍀 (inspired by Modern Agile, clockwise)

\ /

🌐🌏guide

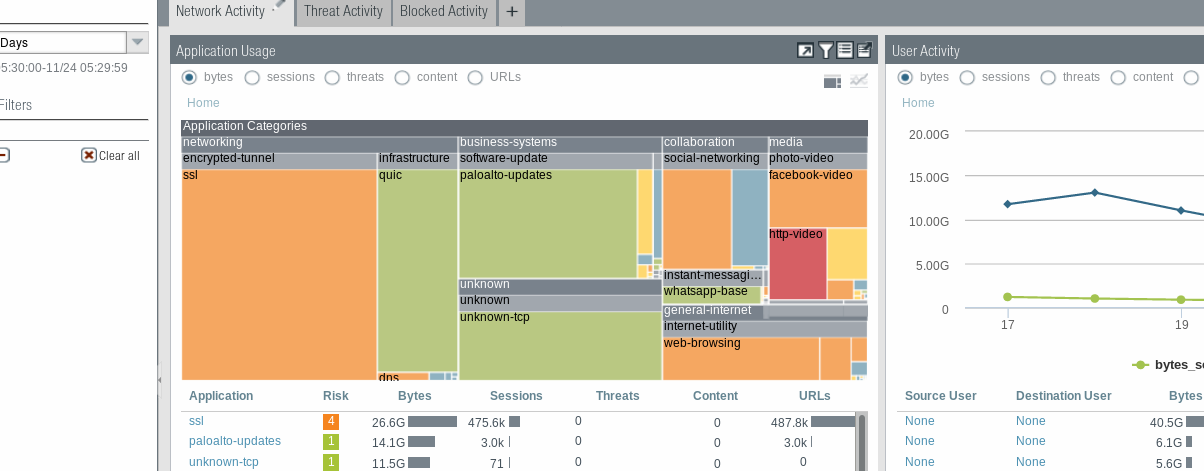

FW Watch collects MIBs and scripts to analyze firewalls for forensic investigations in Splunk or Elastic. This information adds new detail to the standard Palo Alto tools and charts.

During forensics, we need to investigate the specific times when the counters kept by the firewalls deviate. Palo Alto offers parameters, charts, logs, and tools, but it is ideal to research in our own Elastic or Splunk.

There is also a place to add and discuss the latest SecOps improvements: https://github.com/infchg/palo-alto-watch/discussions/1

Since the old PAN-OS v7, a few firewall counters can be accessed through the Palo Alto Networks private MIBs. A handful of those SNMP OIDs are very valuable, but they are only briefly documented in the Palo Alto MIBs.

The simple script below lets you track key firewall counters live.

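    # $PAS = SNMP v2c community, $FW = firewall address, $bas = output directory (set these first)
    # Keep the space in "( (": a leading "((" can be read by bash as an arithmetic command.
    ( (date +%s.%2N && snmpbulkget -mALL -Os -c "$PAS" -v 2c -Cr1 -Oqvt "$FW" \
        enterprises.25461.2.1.2.1.19.8.10 enterprises.25461.2.1.2.1.19.8.14 \
        enterprises.25461.2.1.2.1.19.8.18 enterprises.25461.2.1.2.1.19.8.30 \
        enterprises.25461.2.1.2.1.19.8.31 enterprises.25461.2.1.2.1.19.11.7 \
        1.3.6.1.4.1.25461.2.1.2.3.4 sysUpTime && date +%s.%2N ) | perl -pe 'chop; s/$/ /g' && echo ) >> "$bas/pa-$FW.txt"
    # perl joins the output lines into one space-separated record; echo terminates the record.
    #tim sessdeny icmp udp synmaxthre activred nonsynunmatch activeTCP upti time

This demo saves the parameters to a data file, one line per poll. The structure is: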

`timestamp sessdeny icmp udp synmaxthre activred nonsynunmatch activeTCP FWuptime timestamp`

(The leading and trailing timestamps and the FW uptime act as a couple of symmetry checks for site reliability.)
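The symmetry checks can be run mechanically over the data file. A minimal sketch, assuming the 10-column layout above: `check_line` and `uptime_resets` are hypothetical helper names, and reading an uptime drop as a firewall reboot or SNMP agent restart is our interpretation of sysUpTime, not something the firewall reports explicitly.

```bash
#!/usr/bin/env bash
# Hypothetical helpers, assuming each line of pa-$FW.txt has 10 columns:
#   ts_start sessdeny icmp udp synmaxthre activred nonsynunmatch activeTCP FWuptime ts_end

# check_line LINE: prints "ok <seconds spent polling>" or "bad <reason>";
# returns non-zero for a bad line.
check_line() {
  awk -v line="$1" 'BEGIN {
    n = split(line, f, " ")
    if (n != 10) { print "bad fields=" n; exit 1 }      # partial or garbled poll
    if (f[10] + 0 < f[1] + 0) { print "bad clock"; exit 1 }  # end before start
    printf "ok %.2f\n", f[10] - f[1]                    # SNMP round-trip time
  }'
}

# uptime_resets FILE: prints the line numbers where FWuptime (column 9) went
# backwards, i.e. a likely reboot or SNMP agent restart; counter deltas across
# those lines are not meaningful.
uptime_resets() {
  awk 'NF == 10 { if (seen && $9 + 0 < prev) print NR; prev = $9 + 0; seen = 1 }' "$1"
}
```

For example, `check_line "$(tail -n 1 "$bas/pa-$FW.txt")"` validates the latest poll.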

You can run the script from a small Linux shell on any box able to reach the FW management interface (between points 1 and 2 of the architecture in Fig. 0).

Verified with bash on CentOS; it should also run on Ubuntu, RHEL, Debian, and even Git Bash on Windows, as long as the Net-SNMP tools and perl are installed.

Calling the script from crontab (once per minute, the finest standard cron resolution) or from a loop (every few seconds) lets us pull firewall data often enough to find the precise time of issues or attacks.
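Since standard cron fires at most once per minute, sub-minute polling needs a loop. A minimal sketch, assuming the collection command has been saved as a script; `poll_every` and the path `/usr/local/bin/pa-watch.sh` are hypothetical:

```bash
#!/usr/bin/env bash
# poll_every INTERVAL COUNT CMD [ARGS...]
# Runs CMD COUNT times (COUNT 0 = forever), sleeping INTERVAL seconds in between.
poll_every() {
  local interval=$1 count=$2 i=0
  shift 2
  while (( count == 0 || i < count )); do
    "$@"                         # e.g. the snmpbulkget collection script
    i=$(( i + 1 ))
    if (( count == 0 || i < count )); then
      sleep "$interval"          # no sleep after the final run
    fi
  done
}
```

For per-minute polling, a crontab entry such as `* * * * * /usr/local/bin/pa-watch.sh` is enough; for 20-second polling, `poll_every 20 0 /usr/local/bin/pa-watch.sh` keeps running. The sleep does not subtract the time spent polling, so samples drift slightly; rates should be computed from the recorded timestamps rather than the nominal interval.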

We identified 10 Palo Alto counters (rainbow colors in Figure 1) useful for investigating attacks. Some of them are also available in the Palo Alto CLI, but SNMP lets us monitor the FW every few seconds and keep the 10 key values in logs for later analysis (Splunk, Elastic, Matlab, etc.):

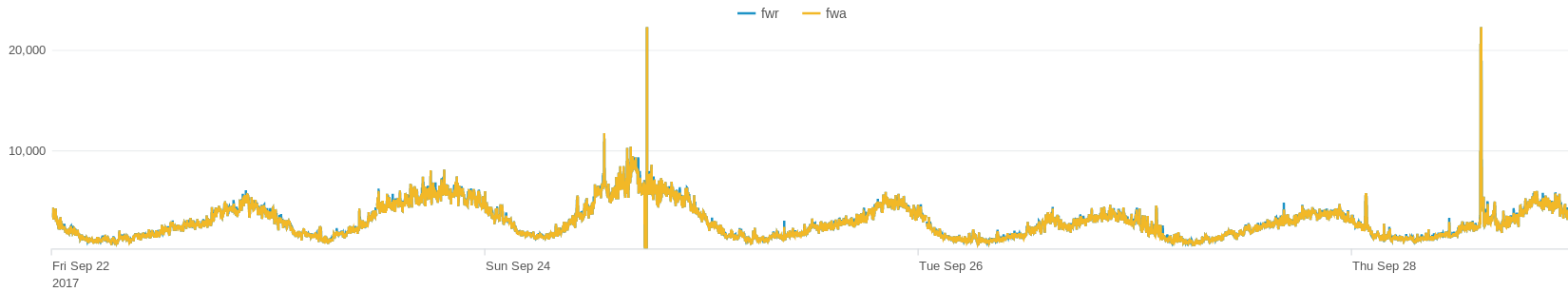

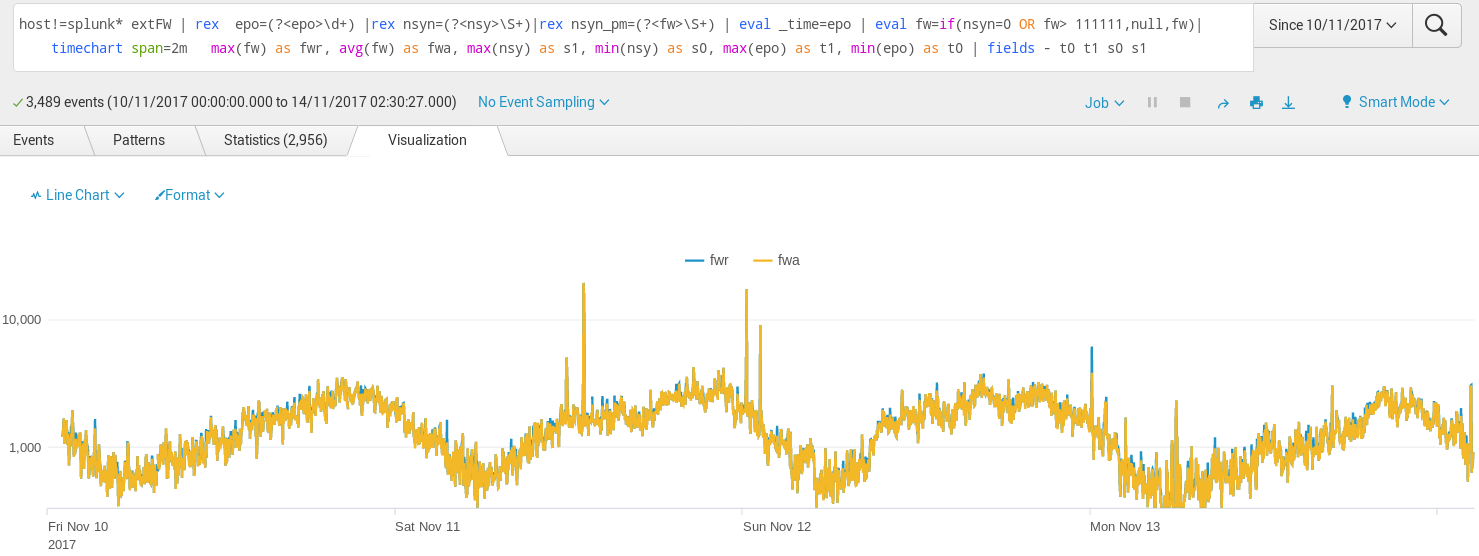

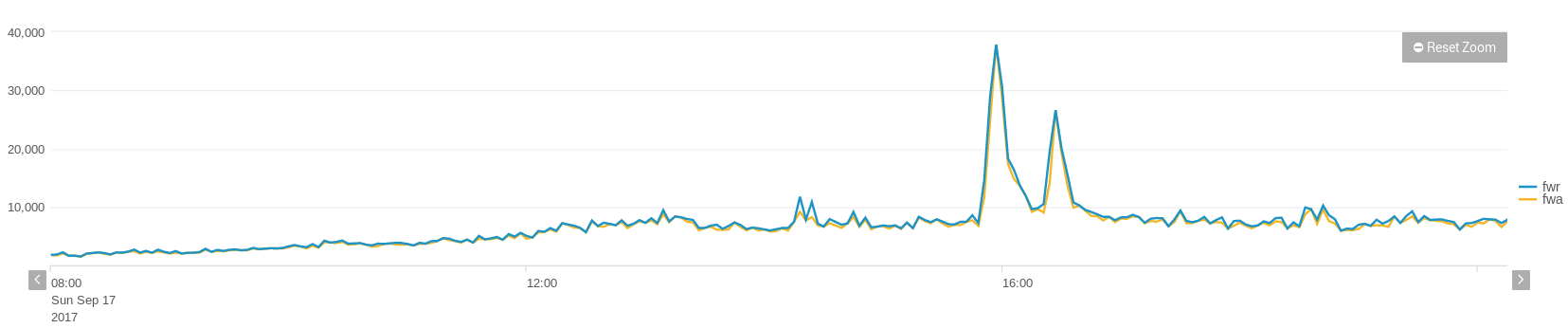

The number of TCP drops due to non-SYN/non-session packets is especially important. This Splunk example filters the SNMP-gathered data for just one pair of HA firewalls. The plot lets us identify peaks visually; at those moments our infrastructure or providers suffered short glitches (plus eBGP delays).
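Outside Splunk, the same peaks can be found with awk. A minimal sketch, not the Splunk search used here: it assumes nonsynunmatch is column 7 of the data file and that the counter is cumulative, so it turns consecutive readings into drops per minute using the recorded end timestamps. `nonsyn_rate` is a hypothetical name, and any peak threshold is site-specific.

```bash
#!/usr/bin/env bash
# nonsyn_rate FILE [THRESHOLD]
# Prints "<ts_end> <non-SYN drops per minute>" per interval, with " PEAK"
# appended when THRESHOLD > 0 and the rate exceeds it.
nonsyn_rate() {
  awk -v thr="${2:-0}" '
    NF != 10 { next }                       # skip partial or garbled polls
    {
      ts = $10 + 0; c = $7 + 0              # end timestamp, nonsynunmatch
      if (seen && ts > pts && c >= pc) {    # c < pc means a counter reset: skip
        rate = (c - pc) * 60 / (ts - pts)
        flag = (thr > 0 && rate > thr) ? " PEAK" : ""
        printf "%.2f %d%s\n", ts, rate, flag
      }
      pts = ts; pc = c; seen = 1
    }' "$1"
}
```

For example, `nonsyn_rate "$bas/pa-$FW.txt" 20000 | grep PEAK` lists only the intervals above 20000 drops per minute.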


Fig. 2. Usually the Palo Alto non-SYN non-session drops are a small percentage (~2%) of regular traffic, and they follow the typical daily usage curve. These regular non-SYN drops come from glitches randomly distributed among users, caused by individual user browsers, their devices, or local user ISP disconnections.


The FW monitoring scales up well: simple bash scripts collect data from many FWs and feed logs to Elastic or Splunk for plotting. We were able to follow 10 firewalls across America, Asia, and Europe, usually tracking them every minute (every 20 seconds during high-business periods). These examples had millions of hits to many brands and apps, hence the high counter variance. The same method for tracking PA firewalls can also help with the normal traffic of a few websites.
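One way to feed these files to Elastic or Splunk is to emit one JSON object per poll. A sketch, assuming an ingestion path that parses JSON lines (for example a log shipper reading NDJSON); the `to_ndjson` helper, the added `fw` field, and the `ts_start`/`ts_end` key names are our choices, not a fixed schema:

```bash
#!/usr/bin/env bash
# to_ndjson FW FILE: prints one JSON object per valid 10-column line of FILE.
# Field names follow the data-file header; "fw" lets one index hold many firewalls.
to_ndjson() {
  local fw=$1 file=$2
  awk -v fw="$fw" '
    BEGIN { split("ts_start sessdeny icmp udp synmaxthre activred nonsynunmatch activeTCP FWuptime ts_end", k, " ") }
    NF == 10 {
      out = "{\"fw\":\"" fw "\""
      for (i = 1; i <= 10; i++) {
        if ($i !~ /^[0-9]+(\.[0-9]+)?$/) next   # non-numeric value: drop the line
        out = out ",\"" k[i] "\":" $i           # keep the original digits as-is
      }
      print out "}"
    }' "$file"
}
```

The fields are copied as text rather than converted by awk, so fractional epoch timestamps are not rounded into scientific notation.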

fws=( 10.41.17.5 10.41.17.6 10.12.61.5 10.12.61.6 10.4.61.5 10.4.61.6 10.6.61.5 10.6.61.6 pwallx-1.domain pwallx-2.domain )

#remote pull by FW cluster just need to loop $FW among the values in the $fws list

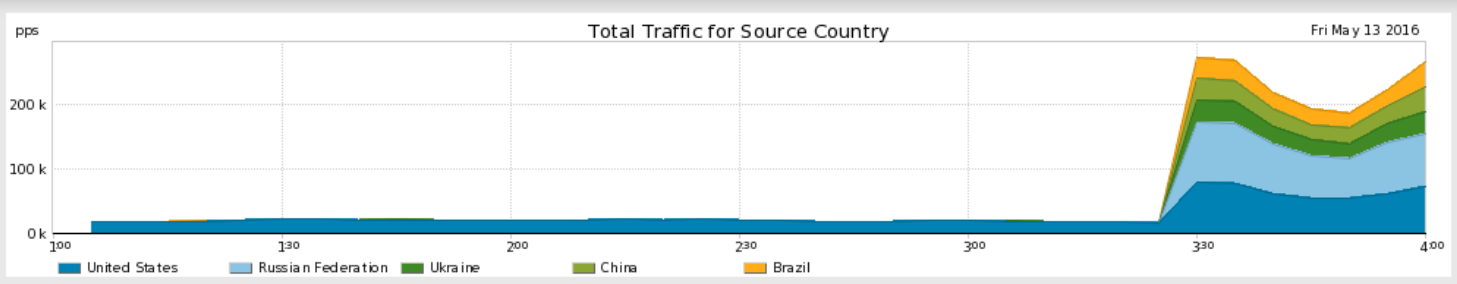

for FW in $fws;Let us see an attack example in Fig3. These are peaks that are not jumping by 6000 connections in a minute but rather jump abruptly over 20000 non-syn tcps is short time. Figure 3, of close monitoring of Palo Alto counters. The same figure let us detect precisely other moments of short disruptions (smaller peaks) due to own or near-own infrastructures (for instance caused by eBGP renegotiation after another link failed).
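Polling the firewalls in the `fws` list one after another lets a slow or unreachable FW delay the rest. A sketch of a parallel variant, assuming a hypothetical `collect_one` function that runs the collection command for the firewall passed as `$1`:

```bash
#!/usr/bin/env bash
# poll_all FW...: starts one background poll per firewall and waits for all.
# collect_one is hypothetical: define it to run the snmpbulkget collection
# command for the firewall given as $1.
poll_all() {
  local fw
  for fw in "$@"; do
    collect_one "$fw" &      # one background job per firewall
  done
  wait                       # return only after every poll has finished
}
```

Usage: `poll_all "${fws[@]}"`. Each job appends to its own `pa-$FW.txt`, so records from different firewalls never interleave in one file.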

We cross-checked these monitored FW peaks against the providers' reports on DoS attacks, which confirmed them.

In short, these SNMP counters and monitoring scripts complement the existing Palo Alto tools and summaries well, because they add useful details to explore later in Elastic, Splunk, etc. They track attacks, issues, and large consumer peaks in real time with better precision than the built-in PA tools.

This monitoring works independently to minimize risk: a nearby server pulls 10 SNMP values via the PA management interface.

Zooming out the time and values during the peaks and attacks.

This work was developed in 2015-19 while closely tracking large Palo Alto firewalls; it can feed logs to Elastic, Splunk, etc. It led us to evolve from large operations into Site Reliability Engineering (service-oriented, automated, scaled).

Standard: standard PA image.

Since PAN-OS v7.0.0, the following counters can also be accessed through the Palo Alto Networks private MIBs:

- panGlobalCountersDOSCounters

- panGlobalCountersDropCounters

- panGlobalCountersIPFragmentationCounters

- panGlobalCountersTCPState

The CLI command to extract flow counters with a DoS aspect is:

    show counter global filter category flow aspect dos

- service level oriented

- automated

- scalable

Previous graphical flows were coded in the Beluga graphical language. We gave a few examples in Beluga querying the infchg site on Google App Engine.

Later, the application was renamed in the Google Compute preview app. The Google Cloud URLs are no longer reachable, since the Beluga service was moved to other cloud servers.

- Backlog: update the URLs to point to the correct server apps on GC & AWS.

