Repo to benchmark inference optimizations for the WizardCoder / StarCoder models.

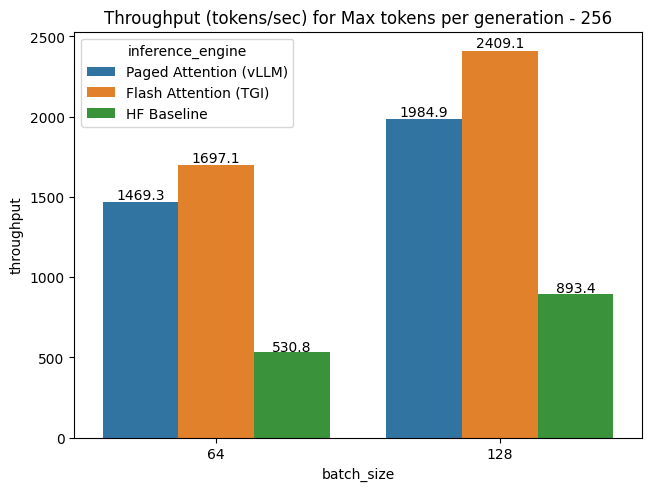

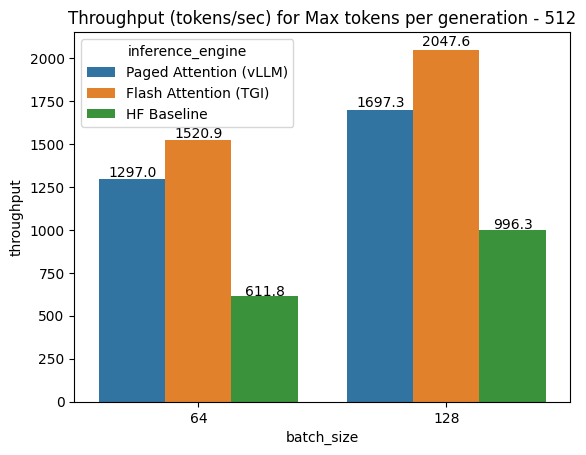

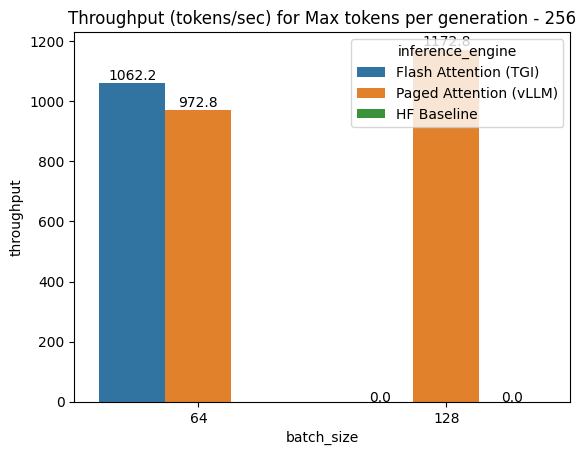

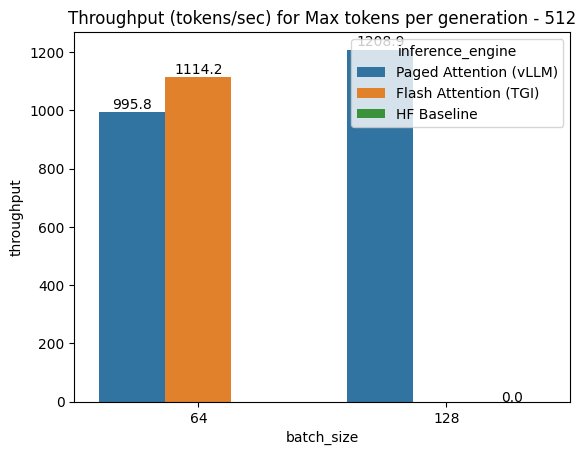

Note: All reported results were run on an A100 40GB instance with WizardLM/WizardCoder-15B-V1.0.

Install the required packages:

```bash
git clone https://github.com/infinitylogesh/Wizcoder_benchmark.git
cd Wizcoder_benchmark/scripts
make install-vllm && make install-tgi
make install-flash-attn
```

Download the model weights:

```bash
text-generation-server download-weights WizardLM/WizardCoder-15B-V1.0
```

To run the benchmark for a specific inference engine:

```bash
python3 main.py --batch_size <BATCH-SIZE> --num_tokens <NUM-TOKENS-TO-GENERATE> --inference_engine <INFERENCE-ENGINE>
```

Values of `INFERENCE-ENGINE` can be:

- `hf`: vanilla HF inference
- `tgi`: Flash Attention using HF's Text-generation-inference
- `vllm`: Paged Attention using vLLM
- `hf_pipeling`: inference using the Hugging Face pipeline
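Every engine is driven with the same `--batch_size` and `--num_tokens`, so a natural common metric is generated tokens per second. The sketch below shows one way to time a batched generation call. It assumes each engine is wrapped in a callable that takes a batch of prompts and a token budget and returns the number of new tokens produced per prompt; `measure_throughput` and `generate_fn` are hypothetical names and are not taken from `main.py`.

```python
import time
from typing import Callable, List, Sequence


def measure_throughput(
    generate_fn: Callable[[Sequence[str], int], List[int]],
    prompts: Sequence[str],
    num_tokens: int,
    warmup_runs: int = 1,
    timed_runs: int = 3,
) -> float:
    """Return generated tokens per second over `timed_runs` batched calls.

    `generate_fn(prompts, num_tokens)` is a hypothetical engine wrapper that
    runs one batched generation and returns the new-token count per prompt.
    """
    if timed_runs < 1:
        raise ValueError("timed_runs must be at least 1")

    # Warm-up runs absorb one-off costs such as CUDA context setup.
    for _ in range(warmup_runs):
        generate_fn(prompts, num_tokens)

    total_tokens = 0
    total_seconds = 0.0
    for _ in range(timed_runs):
        start = time.perf_counter()
        counts = generate_fn(prompts, num_tokens)
        total_seconds += time.perf_counter() - start
        total_tokens += sum(counts)
    return total_tokens / total_seconds


if __name__ == "__main__":
    # Stand-in engine for a dry run: pretends every prompt yields num_tokens tokens.
    def dummy_engine(prompts, num_tokens):
        time.sleep(0.05)
        return [num_tokens] * len(prompts)

    print(f"{measure_throughput(dummy_engine, ['def f():'] * 8, 64):.0f} tokens/s")
```

If a wrapped engine launches GPU work asynchronously, the wrapper should block until generation has finished (for PyTorch, for example with `torch.cuda.synchronize()`) so the timer measures the actual work rather than the kernel launch.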

To run the complete benchmark:

```bash
sh scripts/run_benchmark.sh
```
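The contents of `run_benchmark.sh` are not shown here. As a rough illustration of what a complete sweep involves, the Python sketch below builds one `main.py` invocation per combination of engine, batch size and token count, using only the three flags documented above. `build_commands` and `run_sweep` are hypothetical helpers, and the batch sizes and token counts you pass in are your choice, not necessarily the values the script uses.

```python
import itertools
import subprocess
from typing import Iterable, List

# Engine names exactly as accepted by --inference_engine above.
ENGINES = ["hf", "tgi", "vllm", "hf_pipeling"]


def build_commands(
    batch_sizes: Iterable[int],
    num_tokens_list: Iterable[int],
    engines: Iterable[str] = ENGINES,
) -> List[List[str]]:
    """One `python3 main.py ...` argv per (engine, batch size, token count)."""
    commands = []
    for engine, bs, nt in itertools.product(engines, batch_sizes, num_tokens_list):
        commands.append([
            "python3", "main.py",
            "--batch_size", str(bs),
            "--num_tokens", str(nt),
            "--inference_engine", engine,
        ])
    return commands


def run_sweep(commands: List[List[str]]) -> List[int]:
    """Run each configuration in its own process and collect exit codes,
    so one failing run (e.g. an OOM) does not abort the rest of the sweep."""
    return [subprocess.run(cmd).returncode for cmd in commands]


if __name__ == "__main__":
    # Hypothetical sweep; run from the repository root.
    print(run_sweep(build_commands([1, 16, 64], [256])))
```

Running each configuration in a separate process also means GPU memory is released between runs, which keeps one engine's allocations from affecting the next measurement.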

- Flash Attention (implementation taken from Text-generation-inference): performs best in several of the benchmark settings. However, with long sequences (especially long input sequences), it appears to run out of memory (OOM).
- Paged Attention (via vLLM): performs second best in our benchmark runs and handles long sequences better; in settings where Flash Attention fails with OOM, vLLM completes the generation.
- HF Generate (baseline): Hugging Face's vanilla `AutoModelForCausalLM`, taken as the baseline.
- HF Pipeline: Hugging Face's text-generation pipeline performed the worst of all (results are to be added).
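Flash Attention's memory savings come from never materializing the full attention score matrix: keys and values are processed in tiles and the softmax is computed incrementally (an "online softmax"). The NumPy sketch below illustrates only that numerical idea on a single attention head and checks it against the naive formula. It is not TGI's CUDA kernel (which also tiles queries and keeps tiles in on-chip memory) and says nothing about speed; the function names are illustrative.

```python
import numpy as np


def naive_attention(q, k, v):
    """Reference: softmax(q k^T / sqrt(d)) v with the full score matrix in memory."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


def tiled_attention(q, k, v, block_size=64):
    """Same result, but only a (num_queries x block_size) slice of scores
    exists at any time, thanks to a running max and running denominator."""
    n_q, d = q.shape
    scale = 1.0 / np.sqrt(d)
    out = np.zeros((n_q, v.shape[-1]))
    row_max = np.full((n_q, 1), -np.inf)  # running max score per query
    row_sum = np.zeros((n_q, 1))          # running softmax denominator
    for start in range(0, k.shape[0], block_size):
        k_blk = k[start:start + block_size]
        v_blk = v[start:start + block_size]
        s = q @ k_blk.T * scale
        new_max = np.maximum(row_max, s.max(axis=-1, keepdims=True))
        # Rescale what was accumulated so far to the new running max
        # (exp(-inf) == 0 on the first block, so nothing stale survives).
        correction = np.exp(row_max - new_max)
        p = np.exp(s - new_max)
        row_sum = row_sum * correction + p.sum(axis=-1, keepdims=True)
        out = out * correction + p @ v_blk
        row_max = new_max
    return out / row_sum


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    q, k, v = (rng.standard_normal((n, 32)) for n in (4, 500, 500))
    print(np.allclose(tiled_attention(q, k, v), naive_attention(q, k, v)))
```

Note that tiling removes the quadratic score matrix but not the KV cache itself, which still grows with batch size and sequence length.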

- With a batch size of 64, the HF baseline ran out of memory (OOM); Flash Attention performed better than Paged Attention.
- With a batch size of 128, both HF and Flash Attention ran out of memory; Paged Attention completed the generations.
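One plausible factor in Paged Attention surviving larger batches is how the KV cache is allocated. vLLM stores each sequence's keys and values in fixed-size blocks that are allocated as the sequence grows, whereas a simpler scheme reserves a contiguous slot sized for the maximum length of every sequence. The toy accounting below compares the two; it is a sketch, not vLLM's allocator, and these results do not establish that allocation is what caused the OOMs above. The block size of 16 tokens and the model shape in the demo are assumptions to check against vLLM's settings and the model's `config.json`.

```python
import math
from typing import List


def kv_bytes_per_token(n_layers: int, n_kv_heads: int, head_dim: int,
                       dtype_bytes: int = 2) -> int:
    """Bytes of keys + values cached for one token across all layers."""
    return 2 * n_layers * n_kv_heads * head_dim * dtype_bytes


def contiguous_reservation(seq_lens: List[int], max_len: int, per_token: int) -> int:
    """Bytes reserved if every sequence gets a max_len slot up front."""
    return len(seq_lens) * max_len * per_token


def paged_reservation(seq_lens: List[int], block_size: int, per_token: int) -> int:
    """Bytes reserved if each sequence holds only ceil(len / block_size) blocks."""
    blocks = sum(math.ceil(n / block_size) for n in seq_lens)
    return blocks * block_size * per_token


if __name__ == "__main__":
    # Assumed WizardCoder-15B / StarCoder shape: 40 layers, multi-query
    # attention (1 KV head), head_dim 128, fp16 cache.
    per_token = kv_bytes_per_token(n_layers=40, n_kv_heads=1, head_dim=128)
    lens = [300, 1200, 2048, 90] * 32  # hypothetical lengths, batch of 128
    gib = 1024 ** 3
    print("contiguous:", contiguous_reservation(lens, 2048, per_token) / gib, "GiB")
    print("paged:     ", paged_reservation(lens, 16, per_token) / gib, "GiB")
```

Under these assumed values each cached token costs 20 KiB (2 x 40 x 1 x 128 x 2 bytes), so reserving 2,048-token slots for a batch of 128 takes 5 GiB, while the paged scheme pays only for tokens actually held, rounded up to the block size.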

CSV results of the benchmark are available in `results/results.csv`.
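The column layout of `results/results.csv` is not described here, so the sketch below takes the relevant column names as parameters and pivots one value column into a table keyed by two others (for example batch size by engine). The default names `batch_size`, `inference_engine` and `throughput` are hypothetical; replace them with the actual header of the file.

```python
import csv
from collections import defaultdict
from typing import Dict, Iterable


def pivot_results(
    lines: Iterable[str],
    row_key: str = "batch_size",        # hypothetical column name
    col_key: str = "inference_engine",  # hypothetical column name
    value_key: str = "throughput",      # hypothetical column name
) -> Dict[str, Dict[str, str]]:
    """Map row_key value -> {col_key value: value_key value}.

    Values are kept as strings so non-numeric entries (such as an OOM
    marker, if the file uses one) pass through unchanged.
    """
    table: Dict[str, Dict[str, str]] = defaultdict(dict)
    for row in csv.DictReader(lines):
        table[row[row_key]][row[col_key]] = row[value_key]
    return dict(table)


if __name__ == "__main__":
    with open("results/results.csv", newline="") as fh:
        for key, by_engine in pivot_results(fh).items():
            print(key, by_engine)
```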

Possible next steps for further improving throughput:

- Performance comparison with a quantized model (GPTQ)
- Flash Attention + Paged Attention (using the latest Text-generation-inference)
- Flash Attention v2
- Continuous batching
- Other optimizations listed here.
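Continuous batching admits a waiting request into the running batch as soon as another request finishes, instead of holding every slot until the longest request in a static batch completes. The toy simulation below counts decode steps under both policies, assuming one token per running request per step and ignoring prefill cost; it illustrates the scheduling idea only and is not how TGI or vLLM actually schedule requests.

```python
from collections import deque
from typing import List


def _check(lengths: List[int], batch_size: int) -> None:
    if batch_size < 1 or any(n < 1 for n in lengths):
        raise ValueError("batch_size and every length must be >= 1")


def static_batching_steps(lengths: List[int], batch_size: int) -> int:
    """Each fixed batch runs until its longest request finishes."""
    _check(lengths, batch_size)
    steps = 0
    for i in range(0, len(lengths), batch_size):
        steps += max(lengths[i:i + batch_size])
    return steps


def continuous_batching_steps(lengths: List[int], batch_size: int) -> int:
    """A finished request's slot is refilled from the queue before the next step."""
    _check(lengths, batch_size)
    queue = deque(lengths)
    running: List[int] = []  # tokens still to generate for each active request
    steps = 0
    while queue or running:
        # Fill any free slots with waiting requests.
        while queue and len(running) < batch_size:
            running.append(queue.popleft())
        # One decode step: every running request emits one token;
        # requests with one token left finish and free their slot.
        steps += 1
        running = [r - 1 for r in running if r > 1]
    return steps


if __name__ == "__main__":
    # Hypothetical mix of one long request and several short ones.
    lens = [10, 2, 2, 2, 2]
    print(static_batching_steps(lens, 2), continuous_batching_steps(lens, 2))
```

In the static case the short requests paired with the long one sit idle in the batch; in the continuous case their freed slots are reused immediately, which is where the step savings come from.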

- The Flash Attention implementation was taken from Text-generation-inference; the TGI wrapper was adapted from BigCode's bigcode-inference-benchmark.

- Paged Attention from vLLM