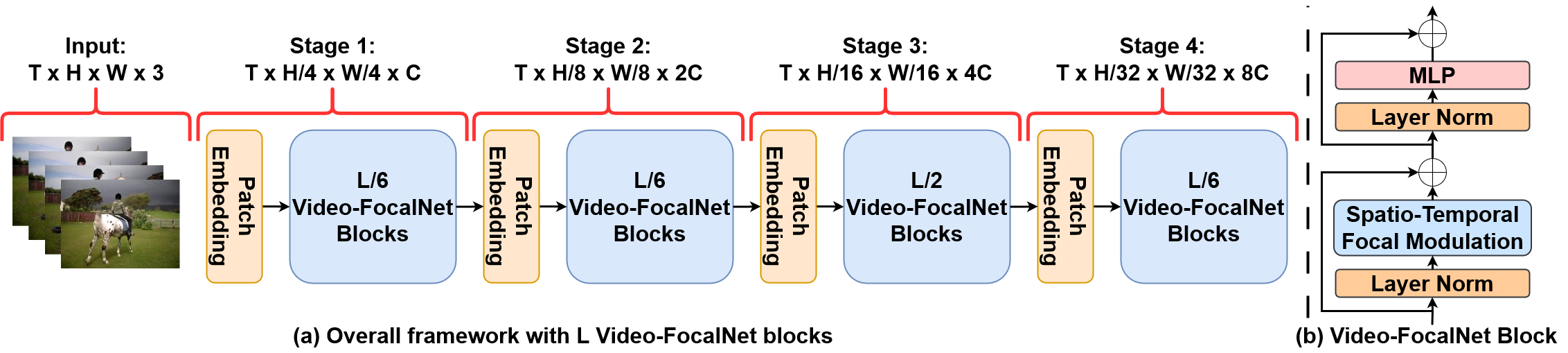

Video-FocalNet is an efficient video recognition architecture that models both local and global context. It uses spatio-temporal focal modulation, in which the steps of self-attention are made more efficient by implementing them with inexpensive convolution and element-wise multiplication. After extensive exploration, parallel spatial and temporal encoding was found to be the best design choice.
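To make the idea concrete, here is a toy NumPy sketch of parallel spatio-temporal focal modulation. It is not the layer used in this repository: the weights are random placeholders, a moving average stands in for the learned depthwise convolutions, and the function names, kernel sizes, and number of focal levels are all assumptions chosen for illustration. It only shows the data flow: context is aggregated per frame (spatial branch) and per location across frames (temporal branch), and both modulators are combined with the query by element-wise multiplication.

```python
import numpy as np


def gelu(x):
    # tanh approximation of the GELU activation
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def box_filter(x, axes, k):
    # Stand-in for a learned depthwise convolution (assumption): a k-wide
    # moving average along each axis in `axes`, with edge padding.
    out = x
    for ax in axes:
        pad = [(0, 0)] * out.ndim
        pad[ax] = (k // 2, k // 2)
        padded = np.pad(out, pad, mode="edge")
        n = out.shape[ax]
        windows = [np.take(padded, np.arange(s, s + n), axis=ax) for s in range(k)]
        out = np.mean(windows, axis=0)
    return out


def modulator(ctx, gates, axes, kernels=(3, 5)):
    # Hierarchical contextualization followed by gated aggregation.
    # `gates` has one channel per focal level plus one for the global level.
    out = np.zeros_like(ctx)
    for level, k in enumerate(kernels):
        ctx = gelu(box_filter(ctx, axes, k))
        out += ctx * gates[..., level:level + 1]
    global_ctx = gelu(ctx.mean(axis=axes, keepdims=True))
    out += global_ctx * gates[..., -1:]
    return out


def focal_modulation_block(x, seed=0):
    # x: one clip of shape (T, H, W, C); all weights are random placeholders.
    rng = np.random.default_rng(seed)
    C = x.shape[-1]
    levels = 2  # must match the number of kernels used in `modulator`

    def linear(n_out):
        return rng.normal(scale=C ** -0.5, size=(C, n_out))

    q = x @ linear(C)  # query projection
    # Spatial branch: aggregate context over (H, W) within each frame.
    m_s = modulator(x @ linear(C), x @ linear(levels + 1), axes=(1, 2))
    # Temporal branch: aggregate context over T at each spatial location.
    m_t = modulator(x @ linear(C), x @ linear(levels + 1), axes=(0,))
    # Interaction: element-wise product of the query with both modulators.
    return q * (m_s @ linear(C)) * (m_t @ linear(C))


clip = np.random.default_rng(1).normal(size=(8, 14, 14, 16))
out = focal_modulation_block(clip)
print(out.shape)  # (8, 14, 14, 16)
```

The output keeps the input shape, so a block like this can be stacked in the same way as an attention layer, but without building a token-to-token attention matrix.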

This is an unofficial Keras implementation of Video-FocalNet. The official PyTorch implementation is here.

- [02-11-2023]: Added gradio web app, huggingface space.

- [31-10-2023]: Fine-tuning on GPU(s) and TPU-VM is supported, colab.

- [31-10-2023]: Video-FocalNet checkpoints for Driving-48 are available, link.

- [30-10-2023]: Video-FocalNet checkpoints for ActivityNet are available, link.

- [29-10-2023]: Video-FocalNet checkpoints for SSV2 are available, link.

- [28-10-2023]: Video-FocalNet checkpoints for Kinetics-600 are available, link.

- [27-10-2023]: Video-FocalNet checkpoints for Kinetics-400 are available, link.

- [27-10-2023]: Video-FocalNet code in Keras is available.

git clone https://github.com/innat/Video-FocalNets.git

cd Video-FocalNets

pip install -e .

The Video-FocalNet checkpoints are available in both SavedModel and H5 formats. The model comes in three variants: tiny, small, and base. See the release and model zoo pages for details. Some highlights follow.

Inference

>>> import numpy as np

>>> import tensorflow as tf

>>> from videofocalnet import VideoFocalNetT

>>> model = VideoFocalNetT(name='FocalNetT_K400')

>>> _ = model(np.ones(shape=(1, 8, 224, 224, 3)))

>>> model.load_weights('TFVideoFocalNetT_K400_8x224.h5')

>>> container = read_video('sample.mp4')

>>> frames = frame_sampling(container, num_frames=8)

>>> y = model(frames)

>>> y.shape

TensorShape([1, 400])

>>> probabilities = tf.nn.softmax(y)

>>> probabilities = probabilities.numpy().squeeze(0)

>>> confidences = {
...     label_map_inv[i]: float(probabilities[i])
...     for i in np.argsort(probabilities)[::-1]
... }

>>> confidences

A classification result on a sample from Kinetics-400.
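The inference example calls `frame_sampling(container, num_frames=8)` without showing how frames are chosen. As a rough illustration only, the sketch below picks evenly spaced frame indices from a clip. The helper name `uniform_frame_indices` is hypothetical and is not part of this repository, and the repository's own sampler may use a different strategy.

```python
def uniform_frame_indices(total_frames, num_frames=8):
    """Pick `num_frames` evenly spaced frame indices from a clip.

    Hypothetical helper for illustration; not the repository's sampler.
    """
    if total_frames <= 0 or num_frames <= 0:
        raise ValueError("total_frames and num_frames must be positive")
    segment = total_frames / num_frames
    # Take the centre of each segment; clamp so short clips repeat frames.
    return [
        min(int((i + 0.5) * segment), total_frames - 1)
        for i in range(num_frames)
    ]


print(uniform_frame_indices(80, 8))  # [5, 15, 25, 35, 45, 55, 65, 75]
print(uniform_frame_indices(4, 8))   # [0, 0, 1, 1, 2, 2, 3, 3]
```

The selected frames would still need to be resized to 224x224 and given a leading batch axis to match the `(1, 8, 224, 224, 3)` input used above.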

Fine Tune

Each Video-FocalNet checkpoint returns logits, so a custom classifier can be added on top of it. For example:

from tensorflow import keras
from tensorflow.keras import layers

# import pretrained model, i.e.
video_focalnet = keras.models.load_model(
    'TFVideoFocalNetB_K400_8x224', compile=False
)
video_focalnet.trainable = False  # freeze the backbone

# downstream model
# `class_folders` is assumed to hold one entry per target class
model = keras.Sequential([
    video_focalnet,
    layers.Dense(
        len(class_folders), dtype='float32', activation=None
    )
])

model.compile(...)

model.fit(...)

model.predict(...)

Spatio-Temporal Modulator [GradCAM]

Here are some visual demonstrations of the first-layer and last-layer Spatio-Temporal Modulator of Video-FocalNet. For more details, see visual-gradcam.ipynb.

video_sample1.mp4

The 3D video-focalnet checkpoints are listed in MODEL_ZOO.md.

- Custom fine-tuning code.

- Support Keras V3 to support multi-framework backend.

- Publish on TF-Hub.

If you use this video-focalnet implementation in your research, please cite it using the metadata from our CITATION.cff file.

@InProceedings{Wasim_2023_ICCV,

author = {Wasim, Syed Talal and Khattak, Muhammad Uzair and Naseer, Muzammal and Khan, Salman and Shah, Mubarak and Khan, Fahad Shahbaz},

title = {Video-FocalNets: Spatio-Temporal Focal Modulation for Video Action Recognition},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

year = {2023},

}