This repository contains supplementary materials for our paper "Fair Evaluation in Concept Normalization: a Large-scale Comparative Analysis for BERT-based Models". In biomedical research and healthcare, the entity linking problem is known as medical concept normalization (MCN). In this work, we perform a comparative evaluation of various benchmarks and study the efficiency of BERT-based models for linking three entity types across three domains: research abstracts, drug labels, and user-generated texts on drug therapy in English.

Given the predefined train/test splits of six biomedical datasets, we found that approximately 78% of entity mentions in the test set are textual duplicates of other entities in the test set or of entities present in the train+dev sets. To obtain more realistic results, we present refined test sets without duplicates or exact overlaps (see Table 1). Please refer to our paper for details.

Table 1 presents summary statistics of the corpora used in the study.

| | NCBI Disease | BC5CDR Disease | BC5CDR Chem | BC2GN Gene | TAC 2017 ADR | SMM4H 2017 ADR |
|---|---|---|---|---|---|---|
| domain | abstracts | abstracts | abstracts | abstracts | drug labels | tweets |
| entity type | disease | disease | chemicals | genes | ADRs | ADRs |
| terminology | MEDIC | MEDIC | CTD Chem | Entrez Gene | MedDRA | MedDRA |
| number of pre-processed entity mentions | | | | | | |
| full corpus | 6881 | 12850 | 15935 | 5712 | 13381 | 9150 |
| avg. len in chars | 20.37 | 14.88 | 11.27 | 8.35 | 17.28 | 11.69 |
| % have numerals | 5.74% | 0.11% | 7.32% | 62.46% | 1.62% | 2.52% |
| train set | 5134 | 4182 | 5203 | 2725 | 7038 | 6650 |
| dev set | 787 | 4244 | 5347 | - | - | - |
| test set | 960 | 4424 | 5385 | 2987 | 6343 | 2500 |
| refined test | 204 (21.2%) | 657 (14.9%) | 425 (7.9%) | 985 (32.9%) | 1544 (24.3%) | 831 (33.3%) |
| number of concepts | | | | | | |
| train set, \|T_1\| | 668 | 968 | 922 | 556 | 1517 | 472 |
| test set, \|T_2\| | 203 | 669 | 617 | 670 | 1323 | 254 |
| refined test, \|T_3\| | 140 | 438 | 351 | 642 | 857 | 201 |
| \|T_1 & T_2\| | 136 | 457 | 368 | 55 | 867 | 218 |
| \|T_1 & T_3\| | 76 | 226 | 102 | 27 | 401 | 165 |

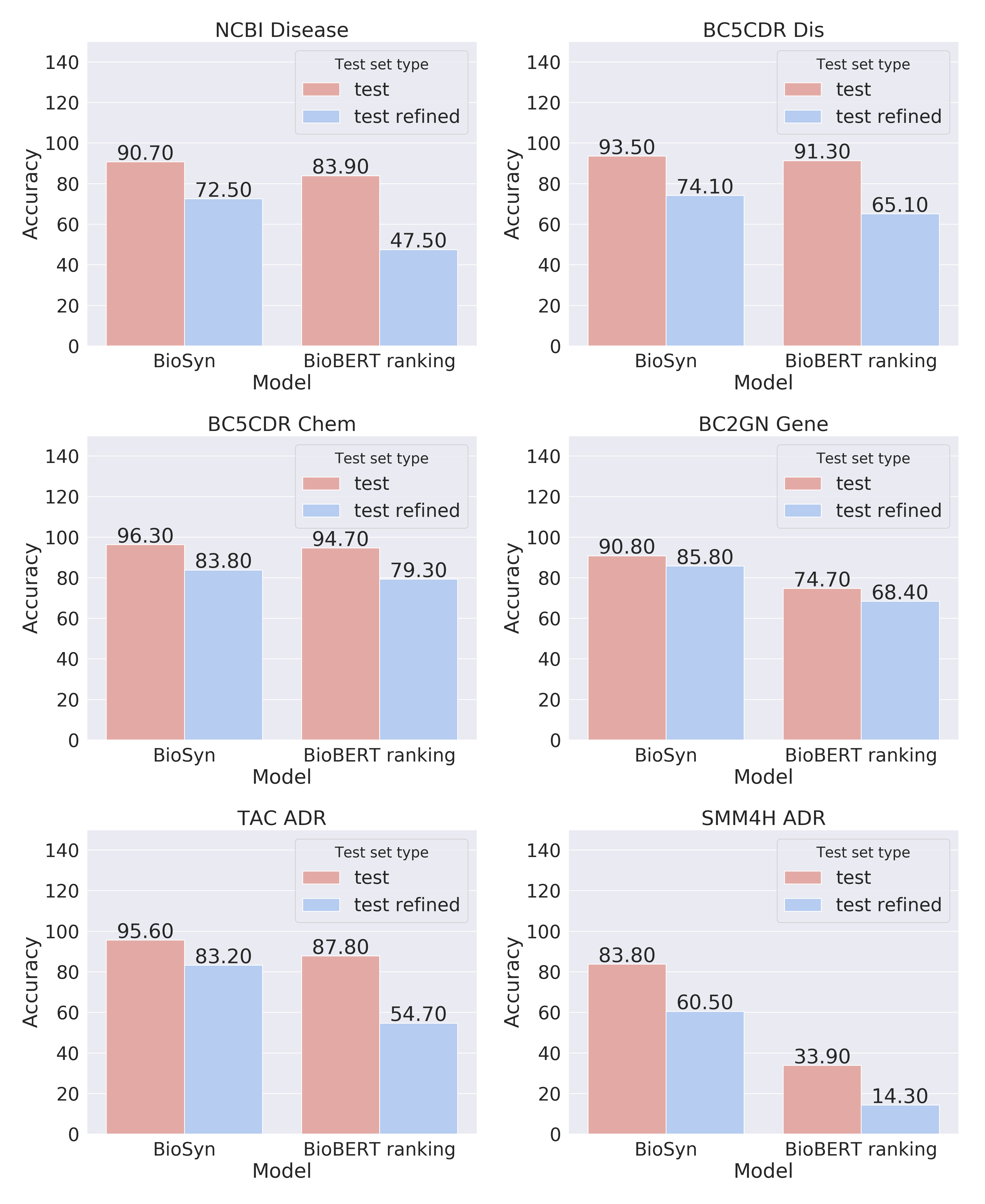

This figure shows how the evaluation metrics of the BioSyn and BERT ranking approaches differ between the refined and the full test sets.

Tables 2 and 3 report metrics for the cross-terminology evaluation mode. Table 2 shows the performance of BioSyn on the refined test sets in terms of accuracy@1; the numbers in bold mark the best performance in each row.

| Test set (rows) / Train set (columns) | NCBI Dis | BC5CDR Dis | BC5CDR Chem | TAC ADR | BC2GN Gene | SMM4H ADR |
|---|---|---|---|---|---|---|
| NCBI | **72.5** | 67.6 | 64.7 | 67.6 | 67.2 | 48.5 |
| CDR Dis | 74.7 | 74.1 | 73.4 | **74.9** | 73.1 | 58.3 |
| CDR Chem | 82.4 | **84.2** | 83.8 | 82.4 | 82.6 | 73.9 |
| TAC ADR | 74.3 | 77.5 | 70.1 | **83.2** | 69.9 | 51.5 |
| BC2GN | 83.1 | 81.7 | 83.7 | 82.6 | **85.8** | 73.2 |
| SMM4H ADR | 27.3 | 35.6 | 24.8 | 30.1 | 21.9 | **60.5** |

Table 3 shows, row by row, the difference in accuracy@1 between a model trained on another corpus and the in-domain model; the diagonal cells give the accuracy of the in-domain model itself.

| Test set (rows) / Train set (columns) | NCBI Dis | BC5CDR Dis | BC5CDR Chem | BC2GN Gene | TAC ADR | SMM4H ADR |
|---|---|---|---|---|---|---|
| NCBI Disease | 72.5 | -4.9 | -7.8 | -5.4 | -4.9 | -24.0 |
| BC5CDR Dis | +0.6 | 74.1 | -0.8 | -1.1 | +0.8 | -15.8 |
| BC5CDR Chem | -1.4 | +0.5 | 83.8 | -1.2 | -1.4 | -9.9 |
| BC2GN Gene | -2.6 | -4.1 | -2.1 | 85.8 | -3.1 | -12.6 |
| TAC ADR | -8.9 | -5.7 | -13.0 | -13.3 | 83.2 | -31.7 |
| SMM4H ADR | -33.2 | -24.9 | -35.7 | -38.6 | -30.4 | 60.5 |

We have presented the first comparative evaluation of medical concept normalization (MCN) datasets, studying the NCBI Disease, BC5CDR Disease & Chemical, BC2GN Gene, TAC 2017 ADR, and SMM4H 2017 ADR corpora. We performed an extensive evaluation of two BERT-based models on six datasets in two setups: with the official train/test splits and with the proposed test sets, which are refined samples of entity mentions. Our evaluation shows a large divergence in performance between these two kinds of test sets, with an average accuracy difference of 15% for the state-of-the-art model BioSyn. We also performed a quantitative evaluation of BioSyn in the cross-terminology MCN task, where models were trained and evaluated on entity mentions of various types with concepts from different terminologies. Knowledge transfer can be effective between diseases, chemicals, and genes, with an average accuracy drop of 2.53% on the NCBI, BC5CDR, and BC2GN sets. For the TAC and SMM4H sets, which contain ADRs from drug labels and social media, BioSyn models trained on the four other corpora show a substantial decrease in performance (-10.2% and -33.1% accuracy, respectively) compared to in-domain trained models. To our surprise, these models still outperformed the straightforward ranking baseline on BioBERT representations. We believe that refined datasets with cross-terminology evaluation can serve as a step toward reliable and large-scale evaluation of biomedical IE models.
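
The average drops quoted above can be checked against the differences in Table 3. The sketch below is only an illustration: the choice of cells that enter each average (all off-diagonal cells among the four abstract corpora, and the four abstract-trained models for the TAC and SMM4H rows) is our reading of the text, and the helper `mean_delta` is a hypothetical name, not part of this repository.

```python
from statistics import mean

# Accuracy differences copied from Table 3 (rows: test set, columns: train set).
# None marks the in-domain diagonal cell, which holds an accuracy, not a difference.
corpora = ["NCBI", "CDR Dis", "CDR Chem", "BC2GN", "TAC", "SMM4H"]
deltas = {
    "NCBI":     [None, -4.9, -7.8, -5.4, -4.9, -24.0],
    "CDR Dis":  [+0.6, None, -0.8, -1.1, +0.8, -15.8],
    "CDR Chem": [-1.4, +0.5, None, -1.2, -1.4, -9.9],
    "BC2GN":    [-2.6, -4.1, -2.1, None, -3.1, -12.6],
    "TAC":      [-8.9, -5.7, -13.0, -13.3, None, -31.7],
    "SMM4H":    [-33.2, -24.9, -35.7, -38.6, -30.4, None],
}

# Assumption: the "four other corpora" are the four abstract-based ones.
ABSTRACT = corpora[:4]


def mean_delta(test_sets, train_sets):
    """Average the Table 3 differences over the given test/train pairs,
    skipping in-domain pairs (test corpus == train corpus)."""
    values = [
        deltas[test][corpora.index(train)]
        for test in test_sets
        for train in train_sets
        if train != test
    ]
    return mean(values)


abstract_transfer = mean_delta(ABSTRACT, ABSTRACT)   # diseases, chemicals, genes
tac_transfer = mean_delta(["TAC"], ABSTRACT)         # drug labels
smm4h_transfer = mean_delta(["SMM4H"], ABSTRACT)     # tweets

print(f"abstracts: {abstract_transfer:.3f}")
print(f"TAC:       {tac_transfer:.3f}")
print(f"SMM4H:     {smm4h_transfer:.3f}")
```

Under these assumptions the three averages come out at roughly -2.5, -10.2 and -33.1 accuracy points, in line with the figures in the paragraph above.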

    $ pip install -r requirements.txt

We use the Hugging Face version of BioBERT v1.1 so that the pretrained model can be run with the PyTorch framework.

We use the same datasets and preprocessing procedures as BioSyn. In addition, we use the SMM4H 2017 dataset. All datasets are available except TAC 2017 ADR, which cannot be shared because of licensing restrictions; however, the preprocessing scripts are available.

To get a refined test set from the test set simply run:

    $ python process_data.py --train_data_folder /data/ncbi/processed_train \
        --test_data_folder /data/ncbi/processed_test \
        --save_to /data/ncbi/processed_test_refined
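
For intuition, the filtering behind a refined test set can be sketched as follows. This is a minimal illustration of the idea described above (drop test mentions that exactly repeat a train/dev mention or an earlier test mention), not the code of `process_data.py`. The function name `refine_test_set`, the `(mention, concept_id)` tuple layout, the case-insensitive comparison, the choice to keep the first occurrence of a repeated test mention, and the concept IDs `C1`..`C3` are all assumptions made for the example.

```python
def refine_test_set(train_mentions, test_mentions):
    """Keep only test mentions whose text is new.

    A test mention is dropped if its (lowercased) text already occurs in the
    train/dev mentions or in an earlier test mention.
    Both arguments are lists of (mention_text, concept_id) tuples.
    """
    seen = {text.lower() for text, _ in train_mentions}
    refined = []
    for text, concept_id in test_mentions:
        key = text.lower()
        if key in seen:
            continue  # exact overlap with train/dev or duplicate within test
        seen.add(key)
        refined.append((text, concept_id))
    return refined


# Toy data with placeholder concept IDs.
train = [("breast cancer", "C1")]
test = [
    ("Breast cancer", "C1"),      # overlaps with train -> dropped
    ("colorectal cancer", "C2"),  # new -> kept
    ("colorectal cancer", "C2"),  # duplicate within test -> dropped
    ("ataxia", "C3"),             # new -> kept
]
refined = refine_test_set(train, test)
print(refined)
```

On the toy data only the second and fourth test mentions survive, which mirrors why the refined test sets in Table 1 are much smaller than the official ones.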

To train the BioSyn models, follow the instructions. BERT ranking does not require any training procedure.

To evaluate trained BioSyn models, follow the instructions. To evaluate BERT ranking, run the command:

    $ python eval_bert_ranking.py --model_dir /data/pretrained_models/biobert_v1.1_pubmed_pytorch/ \
        --data_folder /data/ncbi/processed_test \
        --vocab /data/ncbi/test_dictionary.txt
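
As a rough picture of what a training-free ranking baseline and accuracy@1 involve, the sketch below ranks dictionary concepts by similarity to a mention vector and counts how often the top-ranked concept is the gold one. It is not the code of `eval_bert_ranking.py`: the use of cosine similarity, the function names, and the two-dimensional toy vectors (standing in for BioBERT representations) are assumptions made for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_concepts(mention_vec, dictionary):
    """Return concept IDs sorted by decreasing similarity to the mention.

    `dictionary` is a list of (concept_id, vector) pairs.
    """
    scored = [(cosine(mention_vec, vec), cid) for cid, vec in dictionary]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [cid for _, cid in scored]


def accuracy_at_1(examples, dictionary):
    """Share of (mention_vector, gold_id) examples whose top-ranked concept is gold."""
    hits = sum(
        1 for vec, gold in examples if rank_concepts(vec, dictionary)[0] == gold
    )
    return hits / len(examples)


# Toy vectors and placeholder concept IDs.
dictionary = [("C1", [1.0, 0.0]), ("C2", [0.0, 1.0])]
examples = [
    ([0.9, 0.1], "C1"),  # closest to C1 -> hit
    ([0.2, 0.8], "C2"),  # closest to C2 -> hit
    ([0.7, 0.3], "C2"),  # closest to C1 -> miss
]
acc = accuracy_at_1(examples, dictionary)
print(f"accuracy@1 = {acc:.3f}")
```

In a real run the vectors would come from the pretrained encoder and the dictionary from the terminology file passed via `--vocab`; no parameters are updated, which is why this baseline needs no training step.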

Tutubalina E., Kadurin A., Miftahutdinov Z. Fair Evaluation in Concept Normalization: a Large-scale Comparative Analysis for BERT-based Models // Proceedings of the 28th International Conference on Computational Linguistics. – 2020. – pp. 6710-6716.

BibTeX

    @inproceedings{tutubalina-etal-2020-fair,
        title = "Fair Evaluation in Concept Normalization: a Large-scale Comparative Analysis for {BERT}-based Models",
        author = "Tutubalina, Elena and
          Kadurin, Artur and
          Miftahutdinov, Zulfat",
        booktitle = "Proceedings of the 28th International Conference on Computational Linguistics",
        month = dec,
        year = "2020",
        address = "Barcelona, Spain (Online)",
        publisher = "International Committee on Computational Linguistics",
        url = "https://www.aclweb.org/anthology/2020.coling-main.588",
        pages = "6710--6716",
    }