By Chong Xiang, Saeed Mahloujifar, Prateek Mittal

Code for "PatchCleanser: Certifiably Robust Defense against Adversarial Patches for Any Image Classifier" in USENIX Security Symposium 2022.

Update 04/2023: (1) Check out this 35-min presentation and this short survey paper for a quick introduction to certifiable patch defenses. (2) Added support for MAE.

Update 08/2022: added notes on "there is no attack code in this repo" here.

Update 04/2022: We earned all badges (available, functional, reproduced) in USENIX artifact evaluation! The camera-ready version is available here. We released the extended technical report here.

Update 03/2022: released the leaderboard for certifiably robust image classification against adversarial patches.

Update 02/2022: made some minor changes to the model loading script and updated the pretrained weights.

Takeaways:

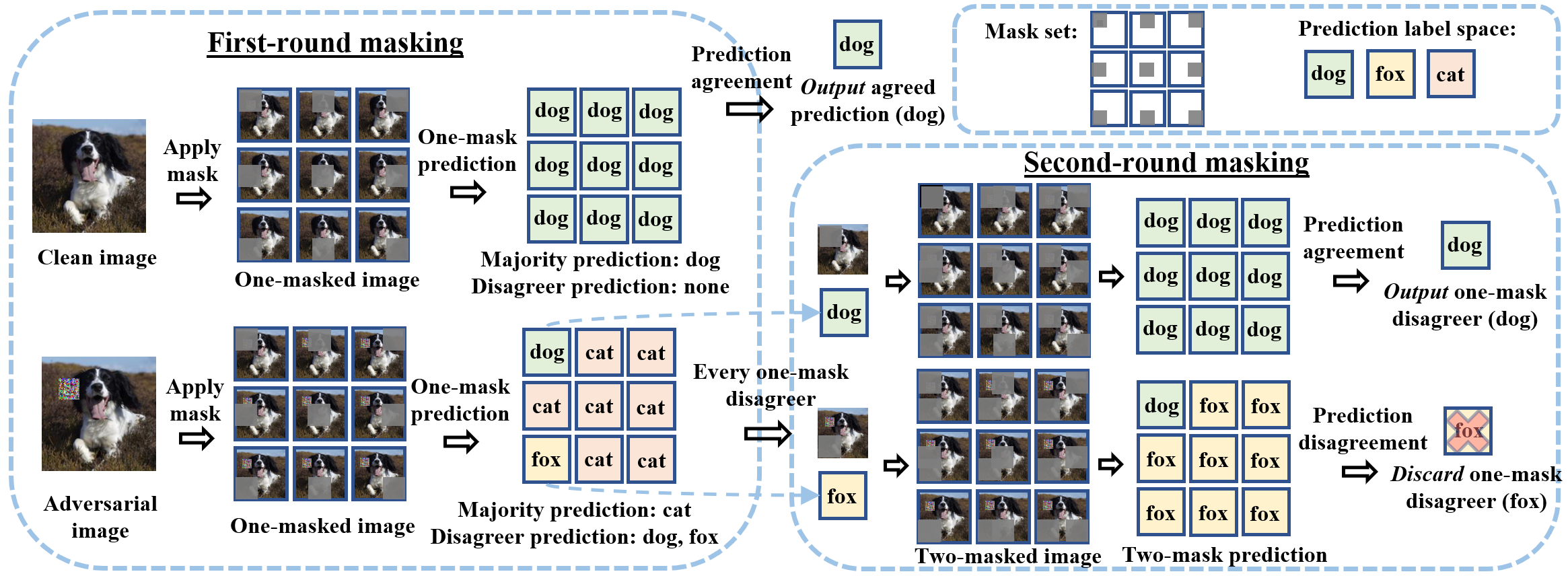

- We design a certifiably robust defense against adversarial patches that is compatible with any state-of-the-art image classifier.

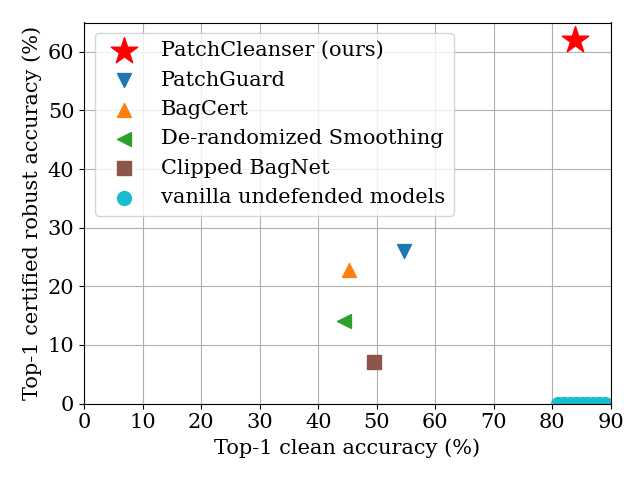

- We achieve clean accuracy comparable to that of state-of-the-art image classifiers and improve certified robust accuracy by a large margin.

- We visualize our defense's performance on 1000-class ImageNet below!
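
PatchCleanser only ever calls the classifier as a black box on masked images, which is why it works with any model. The NumPy sketch below illustrates that idea as we read it from the paper: a mask set that fully covers any patch location, two rounds of masked inference ("double masking"), and the two-mask correctness check that `pc_certification.py` is described as using. It is not the code in `utils/defense.py`; the function names, the mask-size formula, zero-filling masked pixels, and the toy classifier are assumptions made for illustration.

```python
import math
from collections import Counter
from typing import Callable, List, Tuple

import numpy as np

# Hypothetical types for this sketch: a mask is (row0, col0, row1, col1), end-exclusive,
# and a classifier is any black-box function from an image array to a class index.
Mask = Tuple[int, int, int, int]
Classifier = Callable[[np.ndarray], int]


def build_masks(img_size: int, patch_size: int, k: int) -> List[Mask]:
    """k*k square masks such that every patch_size x patch_size patch lies inside one of them."""
    stride = math.ceil((img_size - patch_size + 1) / k)
    size = patch_size + stride - 1  # assumption: mask size = patch size + stride - 1
    starts = [min(i * stride, img_size - size) for i in range(k)]  # clip the last mask to the border
    return [(r, c, r + size, c + size) for r in starts for c in starts]


def apply_masks(img: np.ndarray, *masks: Mask) -> np.ndarray:
    """Return a copy of img with every masked region set to zero (assumed fill value)."""
    out = img.copy()
    for r0, c0, r1, c1 in masks:
        out[r0:r1, c0:c1] = 0
    return out


def double_masking(classify: Classifier, img: np.ndarray, masks: List[Mask]) -> int:
    """Two rounds of masked inference; the classifier is used only as a black box."""
    first = [classify(apply_masks(img, m)) for m in masks]
    majority = Counter(first).most_common(1)[0][0]
    if all(p == majority for p in first):
        return majority  # all one-mask predictions agree
    for m1, p1 in zip(masks, first):
        if p1 == majority:
            continue
        # Second round: keep the disagreeing mask and add every mask in turn.
        if all(classify(apply_masks(img, m1, m2)) == p1 for m2 in masks):
            return p1  # the disagreeing label survives every second mask
    return majority  # otherwise fall back to the first-round majority


def certify(classify: Classifier, img: np.ndarray, label: int, masks: List[Mask]) -> bool:
    """Two-mask correctness: every mask pair (including a mask with itself) keeps the true label."""
    return all(
        classify(apply_masks(img, masks[i], masks[j])) == label
        for i in range(len(masks))
        for j in range(i, len(masks))
    )


if __name__ == "__main__":
    img = np.ones((32, 32), dtype=np.float32)
    masks = build_masks(img_size=32, patch_size=8, k=3)

    def robust_toy(x: np.ndarray) -> int:
        return int(x.mean() > 0.1)  # toy stand-in for a real model

    print(len(masks), masks[-1])  # 9 (16, 16, 32, 32)
    print(double_masking(robust_toy, img, masks), certify(robust_toy, img, 1, masks))  # 1 True
```

With a 32×32 image, an 8×8 patch and k=3, the sketch builds nine 16×16 masks. The toy classifier only looks at mean intensity, so no two masks can remove enough pixels to flip it and the check passes. Because certification evaluates every mask pair, its cost grows quadratically with the number of masks.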

Check out our paper list for adversarial patch research and our leaderboard for certifiably robust image classification for fun!

Experiments were done with PyTorch 1.7.0 and timm 0.4.12. The complete list of required packages is available in `requirement.txt` and can be installed with `pip install -r requirement.txt`. The code should be compatible with newer versions of these packages. Update 04/2023: tested with `torch==1.13.1` and `timm==0.6.13`; the code should work fine.

Files:

├── README.md #this file

├── requirement.txt #required package

├── example_cmd.sh #example command to run the code

|

├── pc_certification.py #PatchCleanser: certify robustness via two-mask correctness

├── pc_clean_acc.py #PatchCleanser: evaluate clean accuracy and per-example inference time

|

├── vanilla_clean_acc.py #undefended vanilla models: evaluate clean accuracy and per-example inference time

├── train_model.py #train undefended vanilla models for different datasets

|

├── utils

| ├── setup.py #utils for constructing models and data loaders

| ├── defense.py #utils for PatchCleanser defenses

| └── cutout.py #utils for masked model training

|

├── misc

| ├── reproducibility.md #detailed instructions for reproducing paper results

| ├── pc_mr.py #script for minority report (Figure 9)

| └── pc_multiple.py #script for multiple patch shapes and multiple patches (Table 4)

|

├── data

| ├── imagenet #data directory for imagenet

| ├── imagenette #data directory for imagenette

| ├── cifar #data directory for cifar-10

| ├── cifar100 #data directory for cifar-100

| ├── flower102 #data directory for flower102

| └── svhn #data directory for svhn

|

└── checkpoints #directory for checkpoints

├── README.md #details of checkpoints

└── ... #model checkpoints

Datasets:

- ImageNet (ILSVRC2012)

- ImageNette (Full size)

- CIFAR-10/CIFAR-100

- Oxford Flower-102

- SVHN

- See Files for details of each file.

- Download data in Datasets to `data/`.

- (optional) Download checkpoints from the Google Drive link and move them to `checkpoints`.

- (optional) Download pre-computed two-mask predictions for ImageNet from the Google Drive link and move them to `dump`. Computing two-mask predictions for other datasets shouldn't take too long.

- See `example_cmd.sh` for example commands for running the code.

- See `misc/reproducibility.md` for instructions to reproduce all results in the main body of the paper.
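
The note that two-mask predictions are cheap to compute for the smaller datasets can be sanity-checked by counting classifier calls. This is a rough sketch that assumes a k×k mask set and one forward pass per unordered mask pair (a mask paired with itself is the one-mask case); the helper name, k=6, and the choice of evaluation sets are illustrative inputs, not settings read from this repo.

```python
def two_mask_forward_passes(k: int, num_images: int) -> int:
    """Classifier calls needed to evaluate every two-mask prediction for a k x k mask set."""
    num_masks = k * k
    # Unordered pairs (i, j) with i <= j; the diagonal i == j is the one-mask prediction.
    pairs_per_image = num_masks * (num_masks + 1) // 2
    return pairs_per_image * num_images


if __name__ == "__main__":
    for name, n in [("CIFAR-10 test set", 10_000), ("ImageNet validation set", 50_000)]:
        print(f"{name}: {two_mask_forward_passes(6, n):,} forward passes")
```

With 36 masks this comes to 666 passes per image, so the total scales linearly with the number of evaluation images and quadratically with the number of masks.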

If anything is unclear, please open an issue or contact Chong Xiang (cxiang@princeton.edu).

If you find our work useful in your research, please consider citing:

@inproceedings{xiang2022patchcleanser,

title={PatchCleanser: Certifiably Robust Defense against Adversarial Patches for Any Image Classifier},

author={Xiang, Chong and Mahloujifar, Saeed and Mittal, Prateek},

booktitle = {31st {USENIX} Security Symposium ({USENIX} Security)},

year={2022}

}