Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), and Normalizing Flows (NFs) are among the best-known and most powerful deep generative models. In this repository, I attempt to gather many deep generative model architectures within a cleanly structured code environment. I also attempt to analyze them from both a theoretical and a practical perspective, with mathematical formulas and animated pictures.

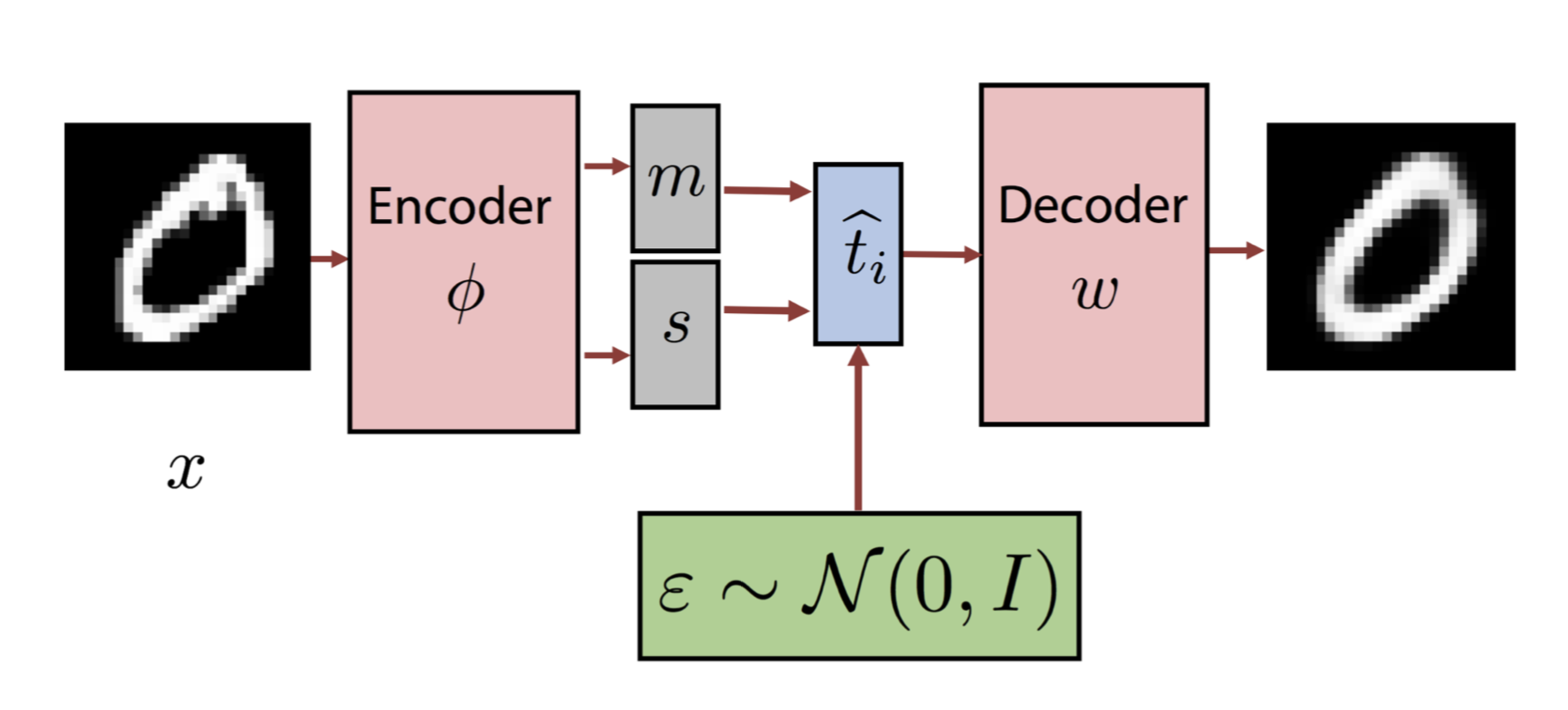

A VAE is a latent variable model that leverages the flexibility of neural networks (NNs) to parameterize both the generative distribution over the data and the approximate posterior over the latent variables.
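To make the idea concrete, here is a minimal, framework-free sketch of the two ingredients a VAE objective usually combines: the reparameterization trick for sampling a latent vector, and the closed-form KL term between a diagonal Gaussian posterior and a standard normal prior. The function names (`reparameterize`, `kl_to_standard_normal`, `negative_elbo`) are illustrative assumptions and are not taken from this repository, which implements its models in PyTorch.

```python
import math
import random


def reparameterize(mu, log_var, rng=random):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), element-wise.

    mu and log_var are plain lists of floats that stand in for the
    encoder outputs (hypothetical names, not the repository's API).
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(sigma^2)) || N(0, I) )."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))


def negative_elbo(reconstruction_nll, mu, log_var):
    """Negative ELBO = reconstruction term + KL regularizer."""
    return reconstruction_nll + kl_to_standard_normal(mu, log_var)


if __name__ == "__main__":
    mu, log_var = [0.5, -0.2], [0.0, -1.0]
    z = reparameterize(mu, log_var)
    print("sampled z:", z)
    print("KL term:", kl_to_standard_normal(mu, log_var))
```

Writing the sample as a deterministic function of `mu`, `log_var`, and external noise is what lets gradients flow through the sampling step when the same computation is expressed in an autodiff framework.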

Auto-Encoding Variational Bayes

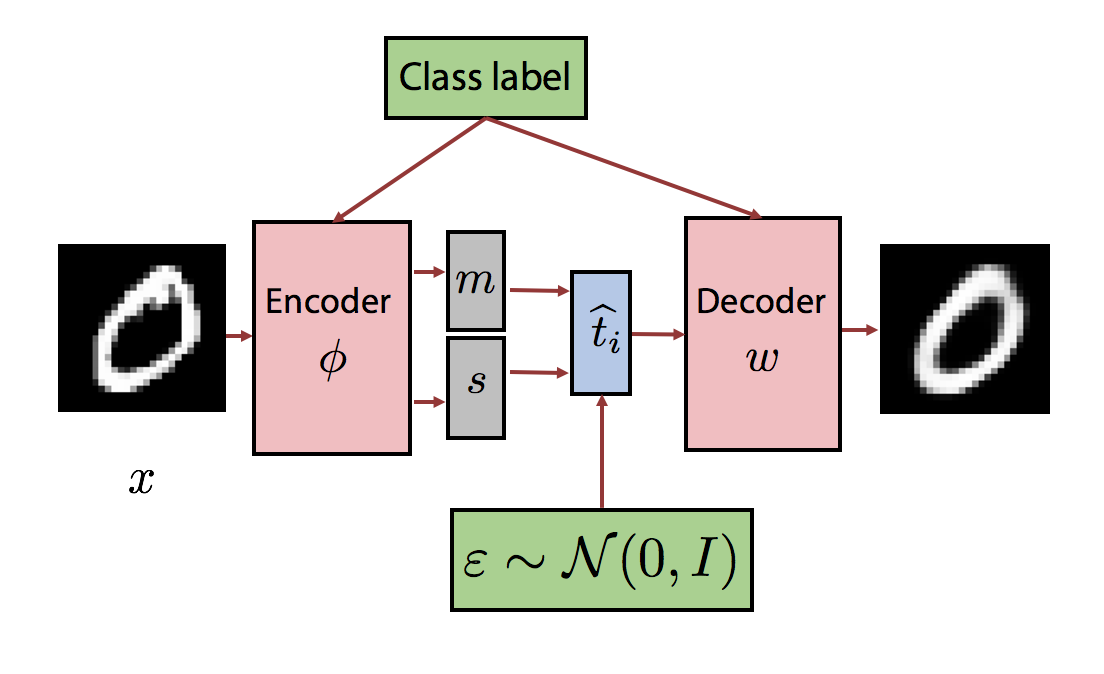

Learning Structured Output Representation using Deep Conditional Generative Models

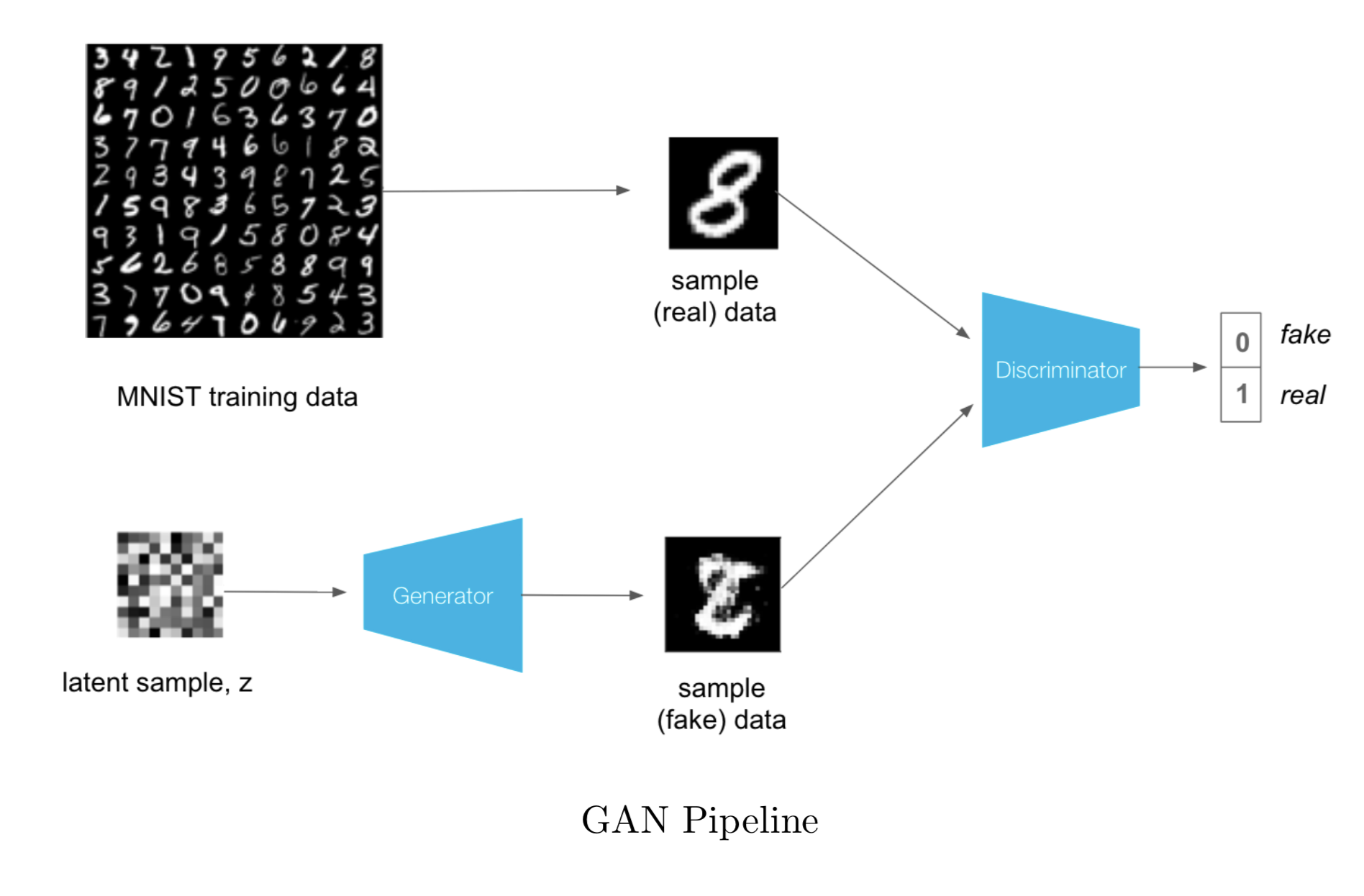

Generative Adversarial Networks (GANs) are a type of deep generative model. Similar to VAEs, GANs can generate images that mimic images from the dataset by sampling an encoding from a noise distribution. In contrast to VAEs, vanilla GANs have no inference mechanism to determine the encoding or latent vector that corresponds to a given data point (or image).
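As a rough illustration of the adversarial objective, the sketch below computes the standard discriminator loss and the commonly used non-saturating generator loss from discriminator outputs given as plain probabilities. The function names and the choice of the non-saturating variant are assumptions made for illustration; the loss actually used in this repository may differ.

```python
import math


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Mean of -[log D(x) + log(1 - D(G(z)))].

    d_real: discriminator probabilities on real samples.
    d_fake: discriminator probabilities on generated samples.
    eps guards against log(0).
    """
    real_term = sum(math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p + eps) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: mean of -log D(G(z))."""
    return -sum(math.log(p + eps) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    # A discriminator that cannot tell real from fake outputs 0.5 everywhere.
    d_real, d_fake = [0.5, 0.5], [0.5, 0.5]
    print("discriminator loss:", discriminator_loss(d_real, d_fake))
    print("generator loss:", generator_loss(d_fake))
```

Note that neither loss involves an encoder: the generator only maps noise to samples, which is why a vanilla GAN offers no direct way to recover the latent vector for a given image.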

Generative Adversarial Networks

From left to right: vanilla VAE on a 2-dimensional space, conditional VAE on a 20-dimensional space.

Vanilla GAN training progress.

All the results can be found in the folder vae/logs (or gan/logs) and viewed with TensorBoard:

tensorboard --logdir=vae/logs

python vae/main.py --model="cvae"

- Python 3.x: PyTorch, NumPy, TensorBoard

Copyright © 2019 Ioannis Gatopoulos.