This library provides easily replicate tables from JDBC databases (MySQL, PostgreSQL, etc.) to iomete

Note: It requires you have SSH Tunnel between iomete if your database in a private network (see: https://docs.iomete.com/docs/database-connection-options)

You can define sync mode for each table. Currently, supported sync modes are:

FullLoad: Read everything in the source and overwrites whole table at the destination at each syncIncrementalSnapshot: It creates the snapshot of table in the destination and only move the newly inserted and updated records. While writing to iomete it uses merge statement. This mode requires 2 parameters:identification_columnwill be used on merge statement, andtracking_columnto track the where it should continue to get data from the source table

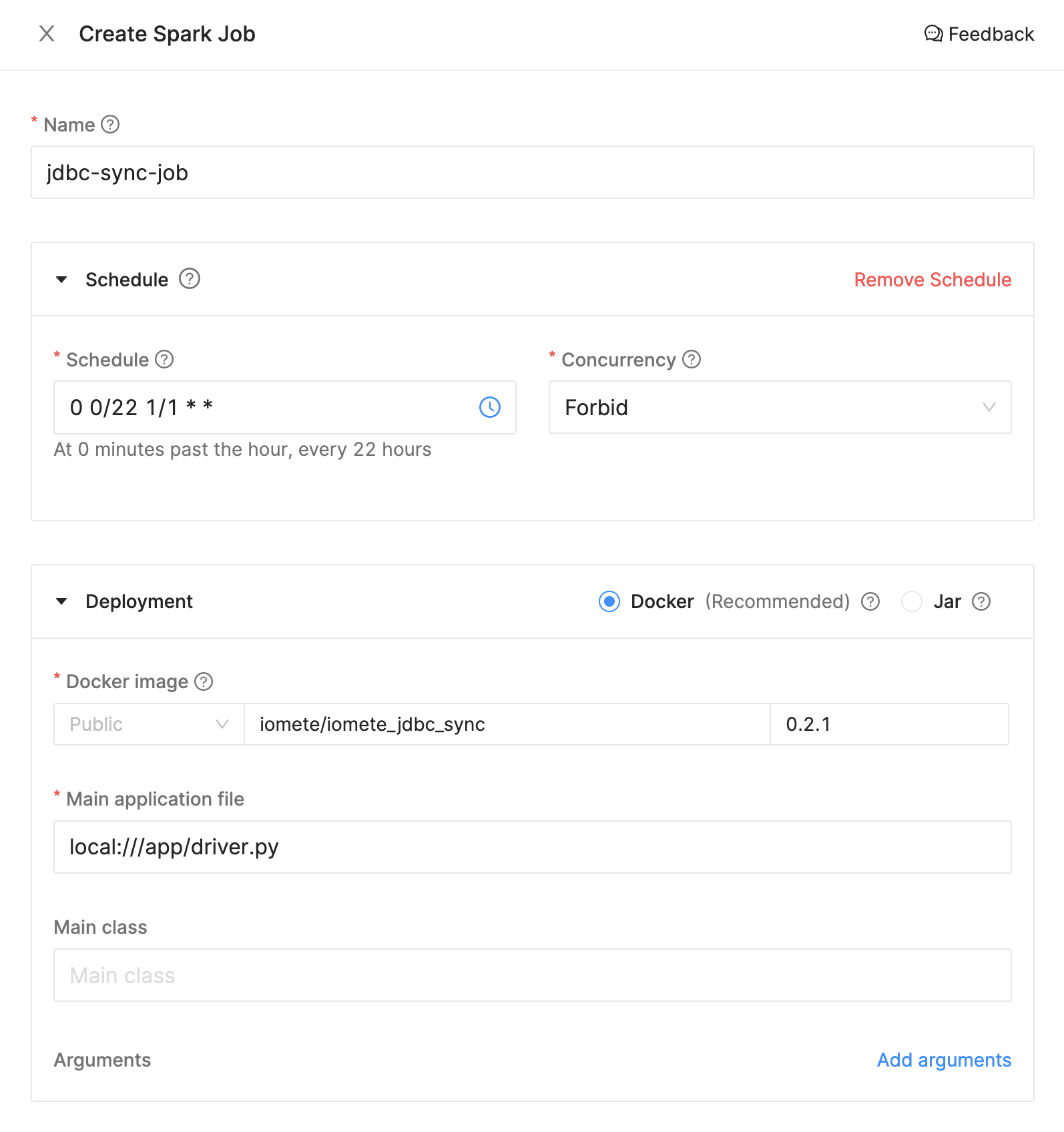

- Go to

Spark Jobs. - Click on

Create New.

Specify the following parameters (these are examples, you can change them based on your preference):

- Name:

jdbc-sync-job - Schedule:

0 0/22 1/1 * * - Docker Image:

iomete/iomete_jdbc_sync:0.3.0 - Main application file:

local:///app/driver.py - Environment Variables:

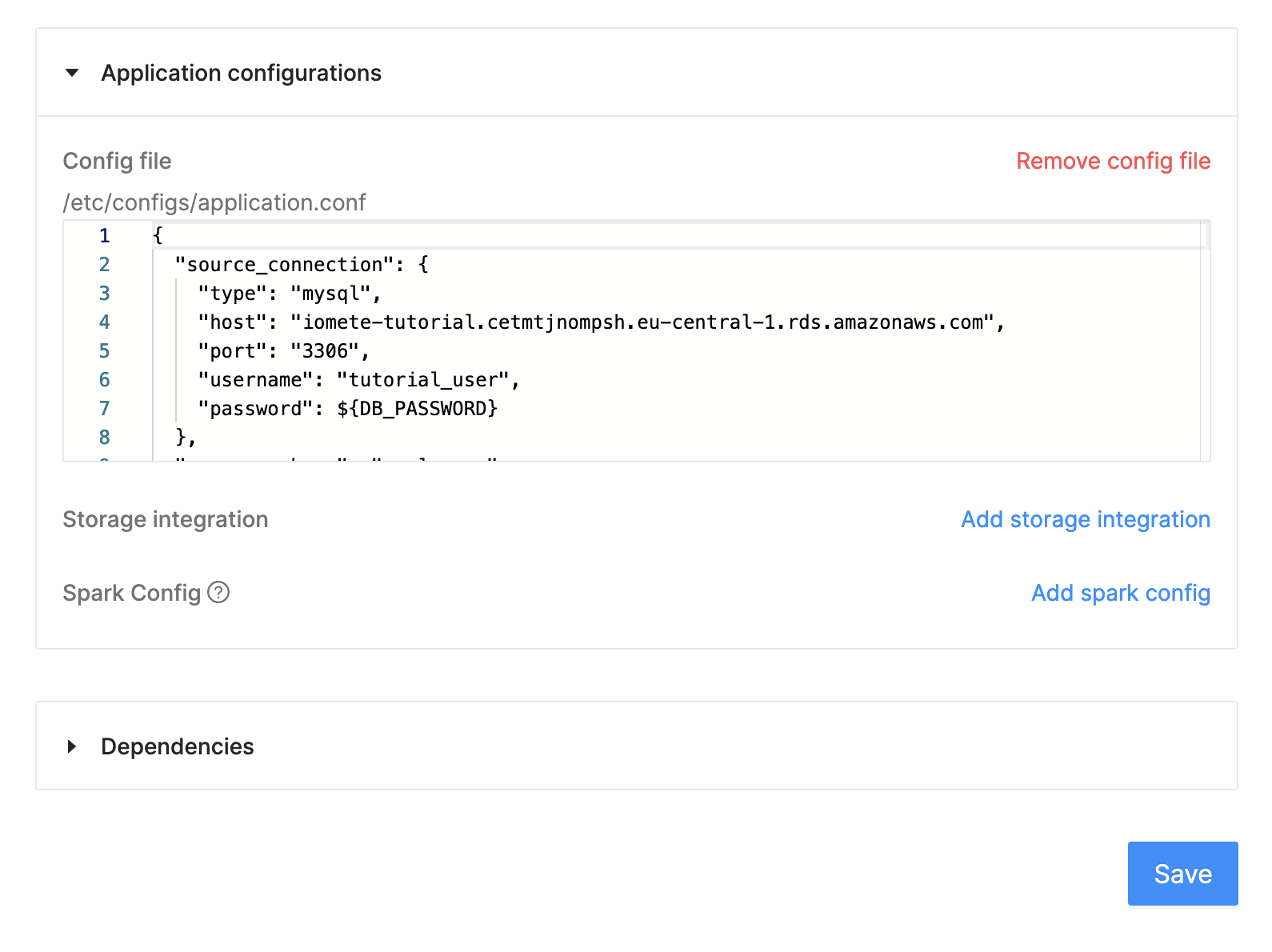

DB_PASSWORD:9tVDVEKp - Config file:

{

source_connection: {

type: mysql,

host: "iomete-tutorial.cetmtjnompsh.eu-central-1.rds.amazonaws.com",

port: 3306,

username: tutorial_user,

password: "9tVDVEKp"

},

syncs: [

{

source.schema: employees

source.tables: ["*"]

source.exclude_tables: [departments, dept_manager, salaries]

destination.schema: employees_raw

sync_mode.type: full_load

},

{

source.schema: employees

source.tables: [ departments, dept_manager ]

destination.schema: employees_raw

sync_mode.type: full_load

},

{

source.schema: employees

source.tables: [

"""

(SELECT emp_no, sum(salary) total_salary FROM salaries group by emp_no)

as total_salaries

"""

]

destination.schema: employees_raw

sync_mode.type: full_load

}

]

}| Property | Description | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

source_connection |

Required properties to connect source.

|

||||||||||

syncs |

|

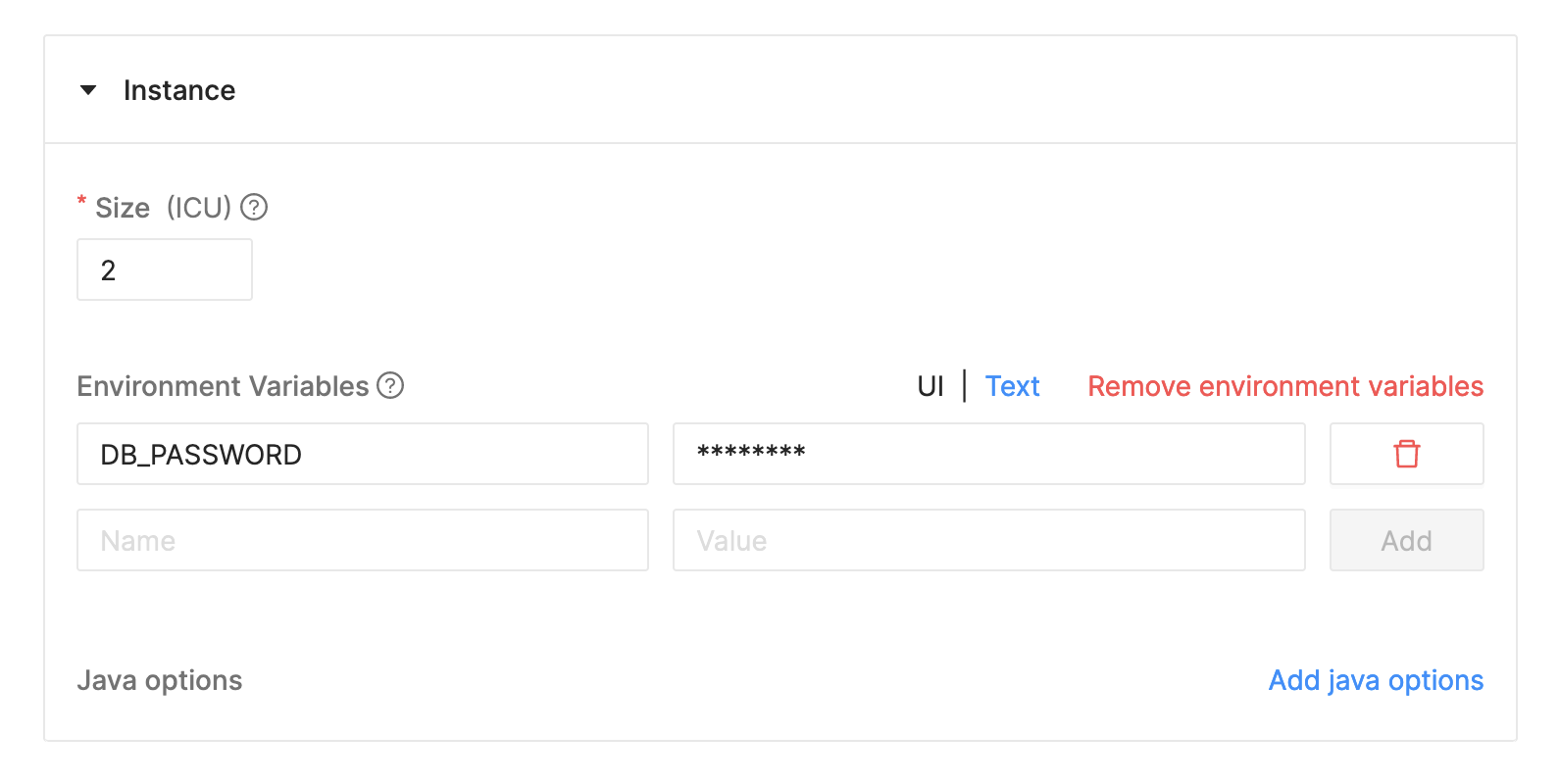

Create Spark Job - Instance

You can use Environment Variables to store your sensitive data like password, secrets, etc. Then you can use these variables in your config file using the

${DB_PASSWORD}syntax.

Create Spark Job - Application Config

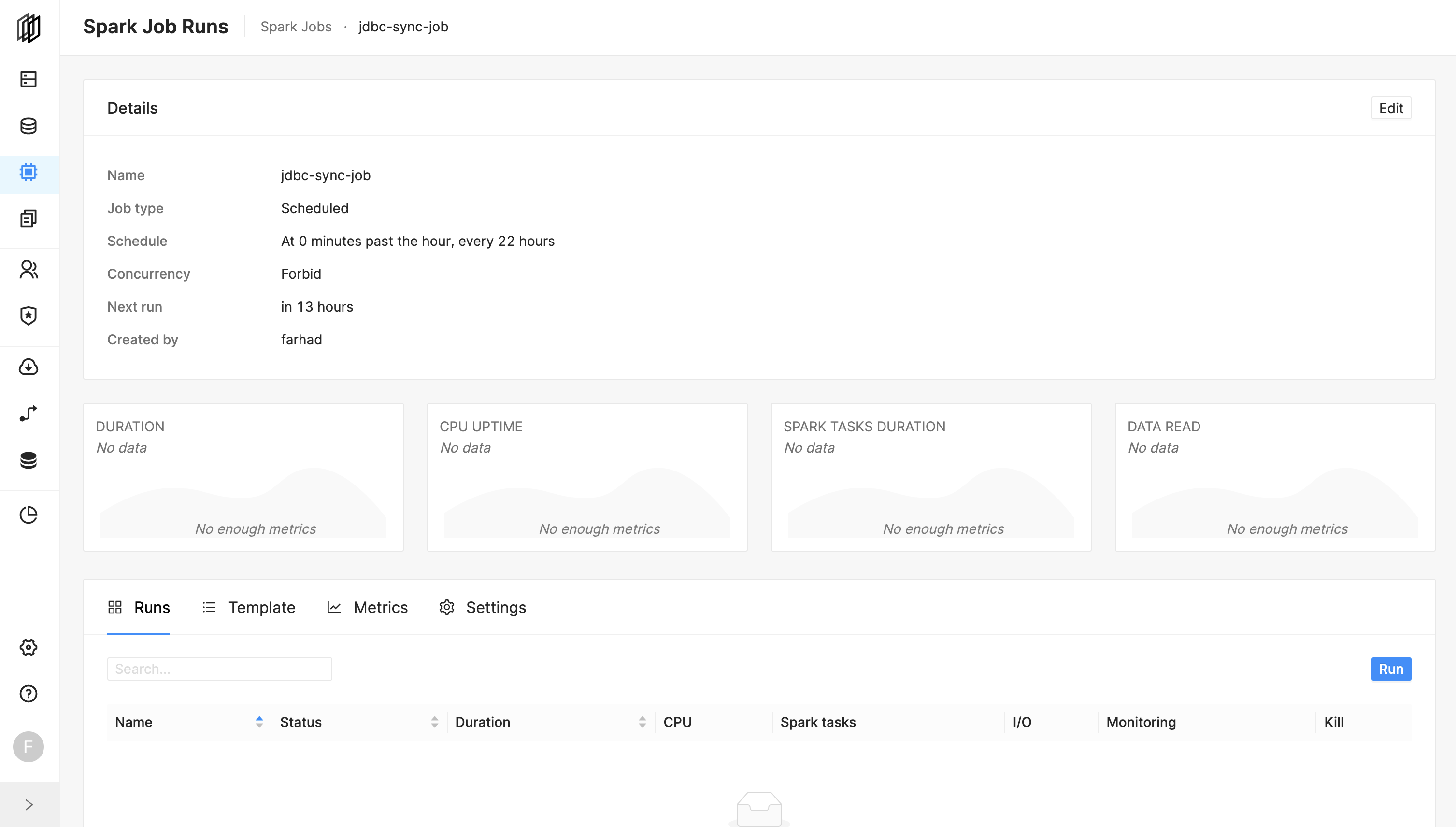

And, hit the create button.

The job will be run based on the defined schedule. But, you can trigger the job manually by clicking on the Run button.

Prepare the dev environment

virtualenv .env #or python3 -m venv .env

source .env/bin/activate

pip install -e ."[dev]"Run test

pytest