- Python3

- PyTorch

- OpenCV

- NumPy

- SciPy

Paper: Adversarial examples in the physical world

- Run the script

$ python3 fgsm.py --img images/goldfish.jpg --model inception_v3

Control keys

- use the trackbar to change epsilon (max norm)
- esc: close
- s: save perturbation and adversarial image

Demo

Paper: Adversarial examples in the physical world

- Run the script

$ python3 iterative.py --img images/goldfish.jpg --model resnet18 --y_target 4

Control keys

- use the trackbars to change epsilon (max norm of perturbation) and iter (number of iterations)
- esc: close, space: pause
- s: save perturbation and adversarial image
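The paper cited above extends FGSM to an iterative method: take many small signed-gradient steps and, after each one, project back into the epsilon ball around the original image. The --y_target flag suggests iterative.py runs a targeted variant. Below is a minimal sketch of a targeted iterative FGSM, assuming inputs scaled to [0, 1], a step size alpha, and a toy stand-in classifier; the function and variable names are hypothetical and not taken from iterative.py.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def iterative_targeted(model, x, y_target, eps, alpha, iters):
    """Targeted iterative FGSM: repeatedly step toward y_target inside an L-inf ball."""
    x_adv = x.clone().detach()
    for _ in range(iters):
        x_adv.requires_grad_(True)
        loss = F.cross_entropy(model(x_adv), y_target)
        (grad,) = torch.autograd.grad(loss, x_adv)
        # Descend the loss of the target class (an untargeted attack would ascend
        # the loss of the true class instead).
        x_adv = x_adv.detach() - alpha * grad.sign()
        # Project back into the eps-ball around x, then into the valid [0, 1] range.
        x_adv = (x + (x_adv - x).clamp(-eps, eps)).clamp(0.0, 1.0)
    return x_adv


# Hypothetical stand-in classifier and input, only to make the sketch runnable.
torch.manual_seed(0)
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))
x = torch.rand(1, 3, 32, 32)
y_target = torch.tensor([4])  # mirrors --y_target 4

eps, alpha, iters = 8 / 255, 1 / 255, 10
x_adv = iterative_targeted(model, x, y_target, eps, alpha, iters)
pred = model(x_adv).argmax(dim=1).item()
```

Compared with single-step FGSM, the per-step size alpha is smaller than eps, so the attack can follow the curvature of the loss while the projection keeps the total perturbation within the same max norm.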

From one of the first papers on adversarial examples, Explaining and Harnessing Adversarial Examples by Ian Goodfellow et al.:

> The direction of perturbation, rather than the specific point in space, matters most. Space is not full of pockets of adversarial examples that finely tile the reals like the rational numbers.

To test this, I've written explore_space.py.

This code adds a randomly generated perturbation (vec1), subject to a max norm constraint eps, to the input image (img). To explore a region (a hypersphere) around this adversarial image (img + vec1), it then adds a second perturbation (vec2), constrained to L2 norm rad. Pressing e generates a new vec1, and pressing r generates a new vec2.
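The two perturbations described above can be sketched with NumPy as follows. This is an illustration under assumptions, not the code in explore_space.py: vec1 is assumed to use random signs scaled by eps (so its max norm is exactly eps, like an FGSM perturbation), vec2 is assumed to lie on the surface of the L2 hypersphere of radius rad, and images are assumed to be on the 0-255 scale used by OpenCV. The helper names are hypothetical.

```python
import numpy as np


def random_linf(shape, eps, rng):
    """vec1: random signs scaled by eps, so its max norm is exactly eps."""
    return eps * rng.choice([-1.0, 1.0], size=shape)


def random_l2(shape, rad, rng):
    """vec2: a uniformly random direction scaled to L2 norm rad (a point on the hypersphere)."""
    g = rng.standard_normal(shape)
    return rad * g / np.linalg.norm(g)


def explore(img, eps, rad, rng):
    vec1 = random_linf(img.shape, eps, rng)  # explore_space.py redraws this on key 'e'
    vec2 = random_l2(img.shape, rad, rng)    # explore_space.py redraws this on key 'r'
    out = np.clip(img.astype(np.float64) + vec1 + vec2, 0, 255)
    return out, vec1, vec2


rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(32, 32, 3)).astype(np.uint8)  # stand-in image
out, vec1, vec2 = explore(img, eps=16, rad=500, rng=rng)
```

Normalising a Gaussian sample gives a direction that is uniformly distributed over the sphere, so repeated draws of vec2 sample the neighbourhood of img + vec1 without favouring any direction.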

- Random perturbations

The classifier is robust to these random perturbations even though their max norm is significantly higher than that of the FGSM perturbation below.

|   |   |   |
|---|---|---|
| horse | automobile | truck |

- Perturbation generated by FGSM

A properly directed perturbation with max norm as low as 3, which is almost imperceptible, can fool the classifier.

|   |   |   |
|---|---|---|
| horse | predicted: dog | perturbation (eps = 6) |
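A "properly directed" perturbation in FGSM moves every pixel by eps in the direction of the sign of the loss gradient. The sketch below shows that single step in PyTorch. It assumes inputs scaled to [0, 1], so a max norm of 3 on the 0-255 scale becomes eps = 3/255, and it uses a toy linear model as a hypothetical stand-in for the pretrained classifiers that fgsm.py loads.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def fgsm(model, x, y, eps):
    """Single-step FGSM: move every pixel by eps along the sign of the loss gradient."""
    x = x.clone().detach().requires_grad_(True)
    loss = F.cross_entropy(model(x), y)
    (grad,) = torch.autograd.grad(loss, x)
    x_adv = x + eps * grad.sign()
    # Assumes inputs are scaled to [0, 1]; clamping keeps the image valid.
    return x_adv.clamp(0.0, 1.0).detach()


# Hypothetical stand-in classifier and input, only to make the sketch runnable.
torch.manual_seed(0)
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))
x = torch.rand(1, 3, 32, 32)
y = torch.tensor([7])

eps = 3 / 255  # a max norm of 3 on the 0-255 scale
x_adv = fgsm(model, x, y, eps)
clean_loss = F.cross_entropy(model(x), y).item()
adv_loss = F.cross_entropy(model(x_adv), y).item()
```

Because the step uses only the sign of the gradient, every pixel changes by at most eps, yet all changes push the loss in the same direction. This is the contrast with the random perturbations above, whose larger max norm is spread over directions that mostly cancel out.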

Paper: One pixel attack for fooling deep neural networks

- Run the script

$ python3 one_pixel.py --img images/airplane.jpg --d 3 --iters 600 --popsize 10

Here, d is the number of pixels to change (the L0 norm of the perturbation). Finding successful attacks was difficult: I noticed that only images classified with low confidence yielded successful attacks.

|   |   |
|---|---|
| frog [0.8000] | bird [0.8073] |

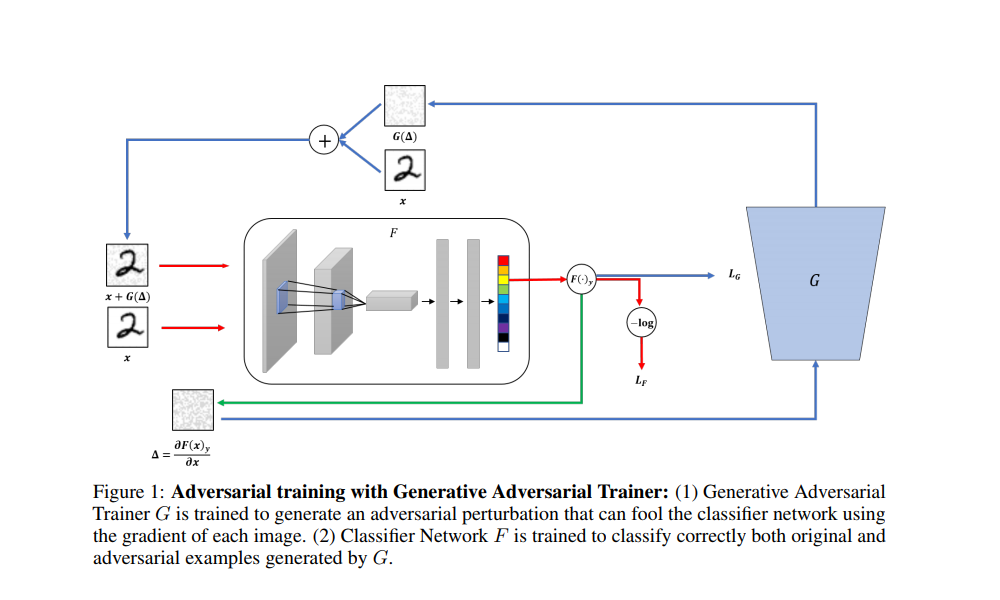
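The one-pixel attack paper searches for the pixel changes with differential evolution. The sketch below uses scipy.optimize.differential_evolution to minimise the true-class probability. Several details are assumptions for illustration and are not taken from one_pixel.py: each pixel is encoded as (x, y, r, g, b), colours are in [0, 1], and toy_predict is a hypothetical linear classifier on an 8x8 image. Parameters d, iters and popsize mirror the command-line flags. Note that SciPy's popsize is a multiplier, so the population size is popsize times the number of parameters (5 * d).

```python
import numpy as np
from scipy.optimize import differential_evolution


def apply_pixels(img, z):
    """Write d candidate pixels, encoded as groups of (x, y, r, g, b), into a copy of img."""
    out = img.copy()
    for x, y, r, g, b in z.reshape(-1, 5):
        out[int(round(y)), int(round(x))] = (r, g, b)
    return out


def one_pixel_attack(predict, img, true_label, d=1, iters=600, popsize=10, seed=0):
    """Search for d pixels whose change lowers the true-class probability."""
    h, w, _ = img.shape
    # Bounds per pixel: column, row, then one value per colour channel.
    bounds = [(0, w - 1), (0, h - 1), (0, 1), (0, 1), (0, 1)] * d

    def objective(z):
        # Differential evolution minimises, so return the true-class probability.
        return predict(apply_pixels(img, z))[true_label]

    result = differential_evolution(
        objective, bounds, maxiter=iters, popsize=popsize,
        seed=seed, polish=False, tol=0,
    )
    return apply_pixels(img, result.x), result.fun


# Hypothetical toy classifier: a fixed random linear layer followed by softmax.
rng = np.random.default_rng(0)
W = rng.normal(size=(2, 8 * 8 * 3))


def toy_predict(image):
    logits = W @ image.ravel()
    e = np.exp(logits - logits.max())
    return e / e.sum()


img = rng.uniform(size=(8, 8, 3))
adv, p_true = one_pixel_attack(toy_predict, img, true_label=0, d=1, iters=30)
```

Differential evolution needs only the model's output probabilities, not gradients, which is why this attack is usually described as black-box. It also makes clear why low-confidence images are easier targets: a single changed pixel only has to push the true-class probability below that of another class by a small margin.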

Paper: Generative Adversarial Trainer: Defense to Adversarial Perturbations with GAN

- To train

$ python3 gat.py

Training has not been done because the generator doesn't converge. I'm also a bit skeptical about this method, since the paper does not show any adversarial images.

Paper: Adversarial Spheres

Paper: Generating Adversarial Examples with Adversarial Networks

Paper: Fast Feature Fool: A data independent approach to universal adversarial perturbations

- Synthesizing Robust Adversarial Examples: adversarial examples that are robust across any chosen distribution of transformations
- The Space of Transferable Adversarial Examples: novel methods for estimating the dimensionality of the space of adversarial inputs
- On Detecting Adversarial Perturbations: augments neural networks with a small detector subnetwork that performs binary classification, distinguishing genuine data from data containing adversarial perturbations
- Towards Robust Deep Neural Networks with BANG: Batch Adjusted Network Gradients
- Networks to generate adversarial examples: AdvGAN, ATNs
- DeepFool, JSMA, CW: math intensive