This project ports the lightweight AnythingLLM + Ollama RAG implementation to LazyCat MicroServer.

Please do not expect this project to help you run this RAG implementation on platforms other than LazyCat MicroServer.

- AnythingLLM: 1.2.4

- Ollama: 0.4.1

How to Use AnythingLLM

Please refer to the AnythingLLM official documentation.

How to Manage the Models with Ollama

Please refer to the Ollama API documentation.

For example, the following command pulls a model.

    curl http://anythingllm.${YourLazyCatMicroServerName}.heiyu.space:11434/api/pull -d '{
      "name": "llama3.2"
    }'

Due to the LazyCat MicroServer's limited memory and CPU, running LLMs with tens of billions of parameters for real-time chat on the LazyCat MicroServer is strongly discouraged.

- Chat Model: API

- Agent Model: API

- Embedder: API

- Vector DB: LanceDB

- Chat Model: API

- Agent Model: API

- Embedding Model: Ollama - mxbai-embed-large

- Vector DB: LanceDB

- Chat Model: Ollama - llama3.2

- Agent Model: Ollama - llama3.2

- Embedding Model: Ollama - mxbai-embed-large

- Vector DB: LanceDB

The following commands pull the llama3.2 and mxbai-embed-large models.

    curl http://anythingllm.${YourLazyCatMicroServerName}.heiyu.space:11434/api/pull -d '{
      "name": "llama3.2"
    }'

    curl http://anythingllm.${YourLazyCatMicroServerName}.heiyu.space:11434/api/pull -d '{
      "name": "mxbai-embed-large"
    }'
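Once the pull commands have finished, it can be useful to confirm that both models are actually installed. The sketch below queries Ollama's `/api/tags` endpoint, which lists the locally available models, and does a plain-text match on the model names. The function names `ollama_base_url`, `has_model`, and `check_models` are hypothetical helpers introduced here for illustration, and the match is a rough `grep` rather than a real JSON parse.

```shell
# Hypothetical helpers; only the host pattern and the /api/tags endpoint
# come from this README and the Ollama API.

# Build the Ollama base URL for a given LazyCat MicroServer name.
ollama_base_url() {
  printf 'http://anythingllm.%s.heiyu.space:11434' "$1"
}

# Succeed if the JSON on stdin lists a model whose name is "$1",
# with or without a tag such as ":latest". Plain-text match only.
has_model() {
  grep -q "\"name\"[[:space:]]*:[[:space:]]*\"$1[\":]"
}

# Fetch the installed-model list once and report on each requested model.
# check_models <server-name> <model>...
check_models() {
  server="$1"
  shift
  tags=$(curl -fsS "$(ollama_base_url "$server")/api/tags") || return 1
  for model in "$@"; do
    if printf '%s' "$tags" | has_model "$model"; then
      echo "$model: installed"
    else
      echo "$model: missing"
    fi
  done
}

# Usage (needs network access to the MicroServer):
#   check_models "$YourLazyCatMicroServerName" llama3.2 mxbai-embed-large
```

A model reported as missing usually just means the corresponding pull has not completed yet, so rerunning the check after a while is a reasonable first step.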

- Open http://anythingllm.${YourLazyCatMicroServerName}.heiyu.space:11434/ and check that the page shows "Ollama is running".

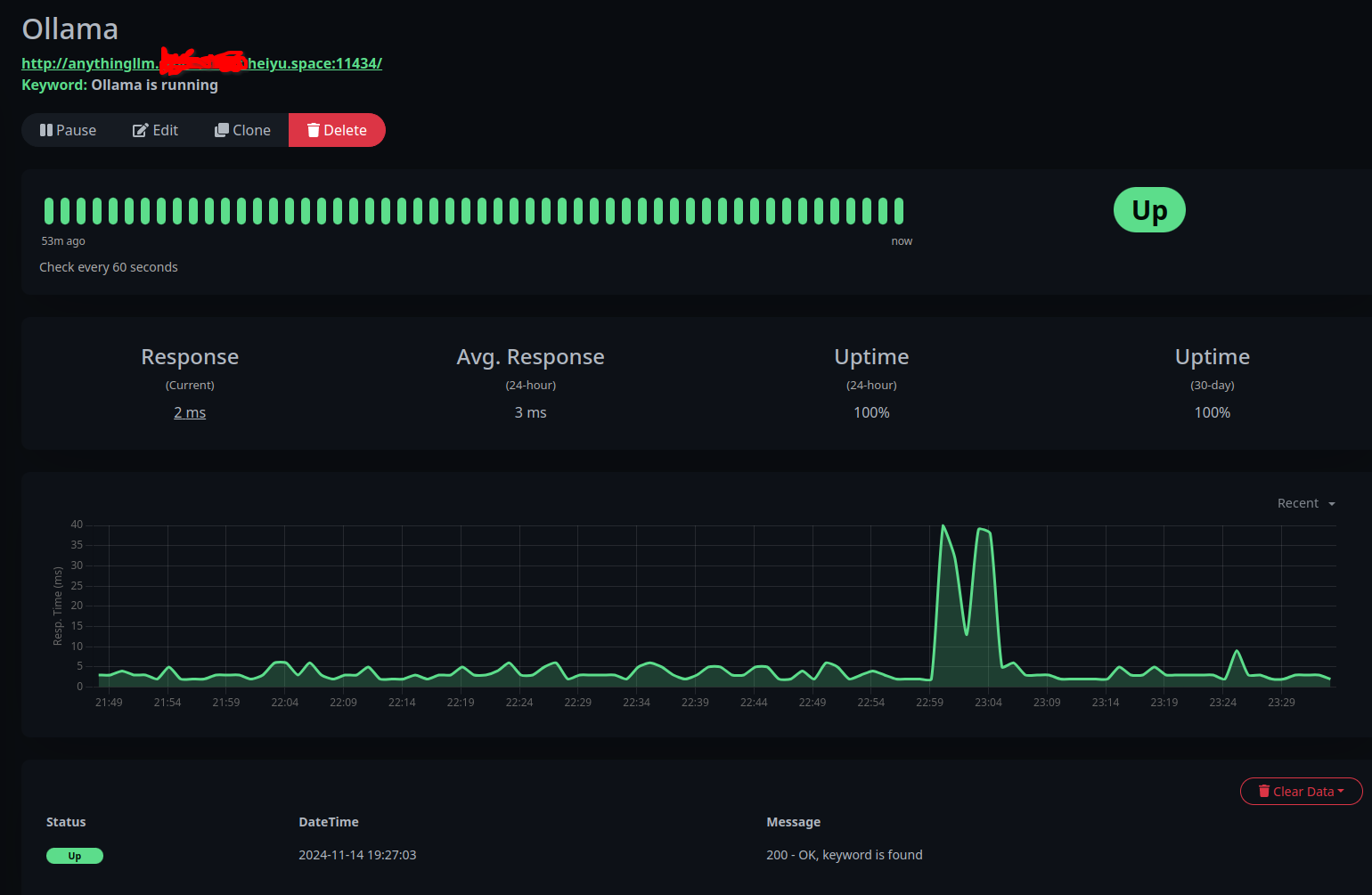

- Alternatively, you can install Uptime Kuma to monitor Ollama's status.
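For a quick check from a terminal, without a browser or a monitoring tool, a small polling loop can look for the same "Ollama is running" text. This is only a minimal sketch: the function names `is_ollama_banner` and `wait_for_ollama`, and the default of 10 attempts with a 3-second delay, are assumptions chosen for illustration.

```shell
# Hypothetical helpers; the URL pattern and the banner text come from this README.

# Succeed if the text on stdin contains Ollama's status banner.
is_ollama_banner() {
  grep -q 'Ollama is running'
}

# Poll the root endpoint until the banner appears or the attempts run out.
# wait_for_ollama <server-name> [attempts] [delay-seconds]
wait_for_ollama() {
  server="$1"
  attempts="${2:-10}"
  delay="${3:-3}"
  url="http://anythingllm.${server}.heiyu.space:11434/"
  i=1
  while [ "$i" -le "$attempts" ]; do
    if curl -fsS --max-time 5 "$url" 2>/dev/null | is_ollama_banner; then
      echo "Ollama is running at $url"
      return 0
    fi
    echo "attempt $i/$attempts: no banner yet" >&2
    # Wait before the next attempt, but not after the last one.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Usage (needs network access to the MicroServer):
#   wait_for_ollama "$YourLazyCatMicroServerName" || echo "Ollama did not respond"
```

The function returns a non-zero status when the banner never appears, so it can gate later steps such as pulling models.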