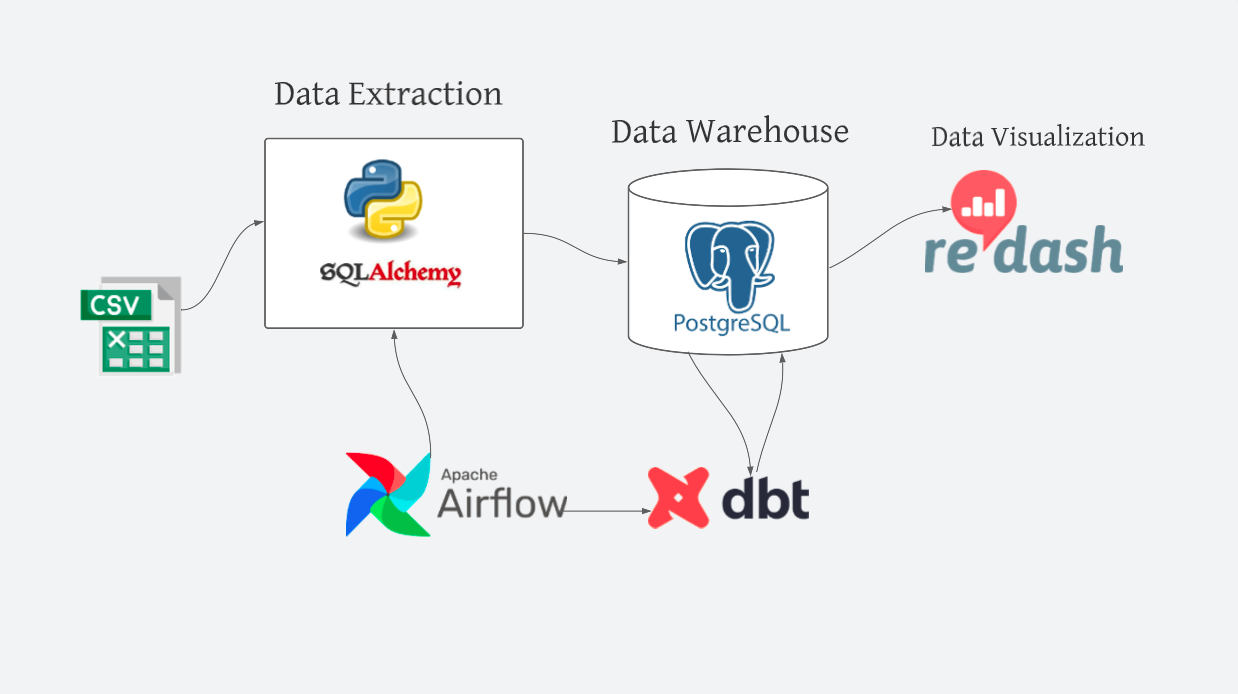

A fully dockerized ELT pipeline project, using PostgreSQL, dbt, Apache Airflow, and Redash.


[Data Engineering](#Data warehouse tech stack with PostgreSQL, DBT and Airflow)

- `images/`: the folder where all snapshots for the project are stored.
- `logs/`: the folder where script logs are stored.
- `*.csv.dvc`: the DVC files that track the versioned CSV datasets.
- `.dvc/`: the folder where DVC is configured for data version control.
- `.github/`: the folder where the GitHub Actions and CML workflows are integrated.
- `models/`: the folder where the dbt model queries are stored.
- `eda.ipynb`: a Jupyter notebook for exploring the data.
- `requirements.txt`: a text file listing the project's dependencies.
- `setup.py`: a configuration file for installing the scripts as a package.
- `README.md`: Markdown text with a brief explanation of the project and the repository structure.
- `Dockerfile`: defines an automated image build that runs a series of command-line instructions.
- `docker-compose.yaml`: integrates the various Docker containers and runs them in a single environment.

`git clone https://github.com/isaaclucky/data-warehousing.git`

`cd data-warehousing`

`sudo python3 setup.py install`

Tech stack used in this project

Make sure you have the following installed on your local machine:

- Docker

- Docker Compose

- Clone the repo

  `git clone https://github.com/isaaclucky/data-warehousing.git`

- Run

  `docker-compose build`

  `docker-compose up`

- Open the Airflow web UI

  Navigate to `http://localhost:8000/` in the browser, then:

  - activate and trigger `dbt_dag`
  - activate and trigger `migrate`

- Access the dbt models and docs

  `dbt docs serve --port 8081`

  Navigate to `http://localhost:8081/` in the browser.

- Access the Redash dashboard

  `docker-compose up`

  Navigate to `http://localhost:3500/` in the browser.

- Access your PostgreSQL database using Adminer

  Navigate to `http://localhost:8080/` in the browser, then:

  - choose PostgreSQL as the database
  - use `airflow` for the username
  - use `airflow` for the password
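Once the containers are running, it can help to confirm that each web interface is reachable before opening it in the browser. The following is a minimal sketch, assuming the four URLs listed in the steps above. The service labels and helper names (`SERVICES`, `host_and_port`, `is_listening`, `report`) are made up for illustration and are not part of the repository. It only checks that a TCP port accepts connections, not that the application behind it is healthy.

```python
import socket
from urllib.parse import urlparse

# URLs taken from the steps above; the keys are labels for this sketch only.
SERVICES = {
    "airflow": "http://localhost:8000/",
    "dbt-docs": "http://localhost:8081/",
    "redash": "http://localhost:3500/",
    "adminer": "http://localhost:8080/",
}


def host_and_port(url):
    """Split a URL into the (host, port) pair to probe."""
    parsed = urlparse(url)
    return parsed.hostname, parsed.port


def is_listening(url, timeout=2.0):
    """Return True if a TCP connection to the URL's host and port succeeds."""
    host, port = host_and_port(url)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timeout, or name resolution failure.
        return False


def report(services=SERVICES):
    """Map each service label to whether its port accepts connections."""
    return {name: is_listening(url) for name, url in services.items()}


if __name__ == "__main__":
    for name, up in report().items():
        status = "up" if up else "DOWN"
        print(f"{name:10s} {status:5s} {SERVICES[name]}")
```

Run it after `docker-compose up`. A service reported as `DOWN` usually means its container has not finished starting, or, for the dbt docs, that `dbt docs serve --port 8081` has not been started yet.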

Distributed under the MIT License. See LICENSE for more information.