👋 Hello and welcome to the Looking_Fruit 🍎 code repository. This is a short and simple computer vision project in which I replicate, and try to improve on, the models used in a research paper to classify images of fruits. You can see the research paper here. The original code repository can be found here. This is my first independent paper replication project 😄.

If you encounter any errors while running the notebook yourself, please report them in the Issues tab of the repository's GitHub page.

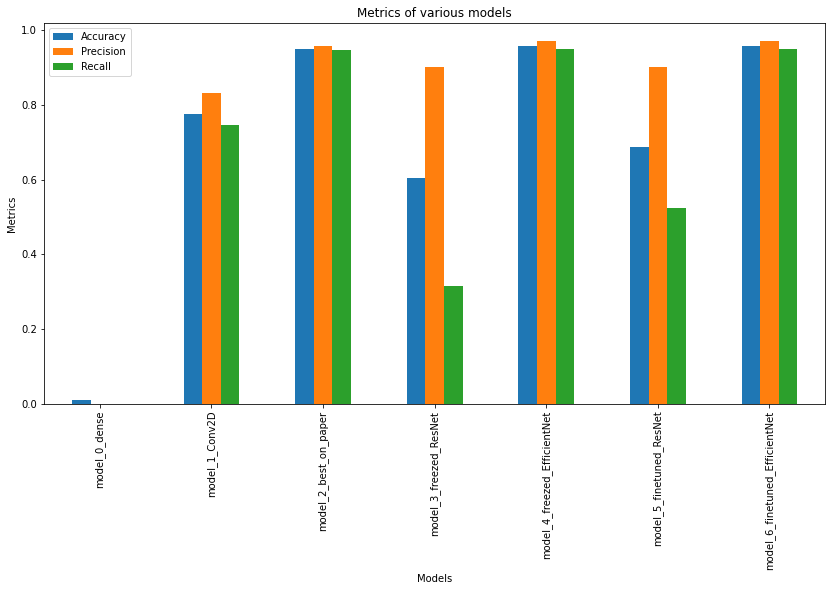

The objective of the research paper was to classify images of various fruits correctly. The researchers built a total of 10 models and documented their results. The main idea of this project is to match or beat the test-set score of their best model. To do this, I made a total of 7 models (including the best model from the paper), hoping that each iteration would improve on the last.

Abstract of the paper:

In this paper we introduce a new, high-quality, dataset of images containing fruits. We also present the results of some numerical experiment for training a neural network to detect fruits. We discuss the reason why we chose to use fruits in this project by proposing a few applications that could use such classifier.

The original data for the paper was created and published by the researchers themselves. The dataset contains around 90,000 images of 131 fruits and vegetables, split into a training set and a test set. The test set contains almost 25% of the total dataset.

The training data has around 400 or more images for each class (except Ginger Root). Although a model generally generalises better with more data, the dataset was larger than needed, so it was trimmed to make training more manageable. Data augmentation was then applied to help the models make better predictions on new images.

The test data was kept separate and untouched, and was used only to evaluate the models on unseen data. Each class has 100 or more images. This data was not augmented.
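The models in this README are compared on accuracy, precision and recall measured on this test set. The following is a minimal sketch of how the three can be computed, assuming accuracy uses the most probable class while precision and recall count a class as predicted only when its probability exceeds 0.5. That thresholding is an assumption, and the notebook may compute the metrics differently; `evaluate` and the toy probabilities are hypothetical.

```python
def evaluate(probabilities, labels, threshold=0.5):
    """Return (accuracy, precision, recall) for multi-class predictions.

    probabilities: one list of per-class probabilities per image.
    labels: the true class index of each image.
    Assumption: precision/recall use thresholded probabilities.
    """
    correct = true_pos = false_pos = false_neg = 0
    for probs, label in zip(probabilities, labels):
        # Accuracy: the most probable class must match the label.
        predicted = max(range(len(probs)), key=probs.__getitem__)
        correct += predicted == label
        # Precision/recall: every class is a separate yes/no decision.
        for cls, p in enumerate(probs):
            positive = p > threshold
            if positive and cls == label:
                true_pos += 1
            elif positive:
                false_pos += 1
            elif cls == label:
                false_neg += 1
    accuracy = correct / len(labels)
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return accuracy, precision, recall


# Toy example: 4 images, 3 classes (made-up numbers).
probs = [[0.9, 0.05, 0.05], [0.4, 0.35, 0.25], [0.2, 0.7, 0.1], [0.3, 0.3, 0.4]]
labels = [0, 0, 1, 1]
print(evaluate(probs, labels))  # (0.75, 1.0, 0.5)
```

Under this assumption, an image whose top probability stays below 0.5 can still count towards accuracy but adds a false negative, so recall can sit below accuracy while precision stays high.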

For ease of use, I uploaded the original dataset from GitHub to Kaggle and used that copy for the project. Check out the dataset here and do try making models yourself! During the modelling phase, this much data turned out to be excessive, so the training data was trimmed so that each class has only 100 images.
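The trimming step can be sketched roughly as follows. This is not the code from the notebooks: the function name `trim_dataset`, the `<class_name>/<image files>` folder layout, and the random selection with a fixed seed are all assumptions made for illustration.

```python
import random
import shutil
from pathlib import Path


def trim_dataset(src_dir, dst_dir, per_class=100, seed=42):
    """Copy at most `per_class` randomly chosen files from each class folder.

    Assumes src_dir/<class_name>/<image files>. Returns a dict mapping
    each class name to the number of files copied.
    """
    rng = random.Random(seed)  # fixed seed keeps the subset reproducible
    src_dir, dst_dir = Path(src_dir), Path(dst_dir)
    counts = {}
    for class_dir in sorted(p for p in src_dir.iterdir() if p.is_dir()):
        images = sorted(p for p in class_dir.iterdir() if p.is_file())
        # Classes with fewer than `per_class` images are kept whole.
        chosen = rng.sample(images, min(per_class, len(images)))
        target = dst_dir / class_dir.name
        target.mkdir(parents=True, exist_ok=True)
        for image in chosen:
            shutil.copy2(image, target / image.name)
        counts[class_dir.name] = len(chosen)
    return counts
```

Copying into a new folder, rather than deleting files in place, leaves the full training set available in case a later experiment needs more data.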

Note: You can also check out the original dataset on GitHub or Kaggle.

- Model 0 : A simple baseline made up only of fully connected Dense layers. A plain ANN is known to be weak at classifying images, but it makes a good baseline. The model was trained for 10 epochs with the Adam optimizer. It got an accuracy of 1%, with precision and recall both 0, which is worse than expected. See architecture.

- Model 1 : This model has multiple pairs of convolutional and MaxPool layers, followed by a Flatten layer and 2 Dense layers at the end for classification. It was trained for 10 epochs with the Adam optimizer and was expected to do much better than the previous model. It got an accuracy of 77.5%, with a precision of 0.83 and a recall of 0.74. See architecture.

- Model 2 : This is the exact same model that the researchers used in their paper, so it was expected to do very well on the test set. The model has multiple pairs of `Conv2D` and `MaxPool` layers to perform the convolutional operations. Towards the end it has 2 `Dense` layers, each followed by a `Dropout` layer, which adds randomness during training. A final `Dense` layer classifies the inputs. As in the paper, this model performed the best among the others. It got an accuracy of 94%, with a precision of 0.95 and a recall of 0.94, substantially better than the previous models. See architecture.

- Model 3 : This model uses ResNet50 as its base. Transfer learning makes it possible to reuse the parameters another model has already learned. In this setup, the image tensors are scaled to between 0 and 1 before being passed to ResNet. The model consists of 5 layers. The `Input` layer checks the tensors and puts them into the specified shape. The `Rescaling` layer scales the values to the range 0 to 1. Then the ResNet model does its work. A `GlobalAveragePooling` layer reduces the number of values, and a final `Dense` layer classifies the tensors into categories. Although transfer learning is most useful when data is scarce, the model was trained on the whole training set. During training the validation loss decreased gradually, suggesting that the model could perform better if trained for more epochs. The model got an accuracy of 60%, a precision of 0.901 and a recall of 0.314. See architecture.

- Model 4 : Unlike the previous model, this one uses EfficientNetB0 under the hood, since a different architecture could be beneficial. By default, `EfficientNetB0` does not need the input tensors to be scaled between 0 and 1. The model has a total of 4 layers. The `Input` layer checks the input tensors and puts them into the required shape. Then the `EfficientNetB0` model performs various convolutional operations. The `GlobalAveragePooling` layer condenses each feature map to a single value by averaging, leaving one feature vector per image. The final `Dense` layer then classifies the tensors into categories. This model adapted to the dataset quickly and got better results than the ResNet-based Model 3. It got an accuracy of 95%, a precision of 0.97 and a recall of 0.949. See architecture.

- Model 5 : This model uses a fine-tuned ResNet50 as its base. Model 3 also used ResNet50, but its base parameters were frozen, i.e. they did not change during training, so the base itself learned nothing from this dataset. Fine-tuning lets the base model train on, and learn the features of, our dataset. Here the bottom (last) ten layers were made trainable so that their parameters can adapt to the dataset. Otherwise this model is the same as Model 3. Model 3 performed rather poorly on the test set, although it appeared to need more time to adapt to the dataset, so fine-tuning was expected to give better and more accurate predictions. The model achieved an accuracy of 68%, with a precision of 0.902 and a recall of 0.52. See architecture.

- Model 6 : This model uses a fine-tuned EfficientNetB0 under the hood. The base model's last ten layers were made trainable so that their parameters can adapt to the training examples. Otherwise it is the same as Model 4. Model 4 already performed really well on the test set, and fine-tuning was expected to improve the results further. The model got an accuracy of 95%, a precision of 0.97 and a recall of 0.95. See architecture.
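The difference between the frozen bases (Models 3 and 4) and the fine-tuned ones (Models 5 and 6) comes down to which layers have `trainable` switched on. Below is a minimal sketch of unfreezing only the last ten layers. The helper name `unfreeze_last_layers` is hypothetical, and the stand-in objects only mimic the `.layers` and `.trainable` attributes of a Keras model so that the snippet runs without TensorFlow; the notebooks may do this differently.

```python
from types import SimpleNamespace


def unfreeze_last_layers(base_model, n_trainable=10):
    """Make only the last `n_trainable` layers of `base_model` trainable.

    Works with any object that has `.trainable` and a `.layers` list whose
    items have `.trainable`. Returns the number of trainable layers.
    """
    base_model.trainable = True  # the base as a whole must be unfrozen first
    cutoff = len(base_model.layers) - n_trainable
    for index, layer in enumerate(base_model.layers):
        # Layers before the cutoff stay frozen; the rest can be updated.
        layer.trainable = index >= cutoff
    return sum(layer.trainable for layer in base_model.layers)


# Stand-in for a pretrained base with 25 frozen layers (illustrative only).
layers = [SimpleNamespace(name=f"layer_{i}", trainable=False) for i in range(25)]
base = SimpleNamespace(trainable=False, layers=layers)
print(unfreeze_last_layers(base, 10))  # 10
```

With TensorFlow installed, the same helper could be applied to a real base such as `tf.keras.applications.EfficientNetB0(include_top=False)`. In Keras the model has to be compiled again after changing `trainable` for the change to take effect.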

    Looking_Fruit/
    ├─ assets/
    ├─ models/
    ├─ notebooks/
    ├─ scripts/
    ├─ .gitignore
    ├─ LICENSE.md
    ├─ README.md

- assets : Contains various media materials which were helpful for the project and its documentation.

- models : Contains all the models created during experimentation.

- notebooks : Contains all the notebooks used for the analysis of the dataset and making models for predictions.

- scripts : Contains various program scripts required during modelling experiments and analysis.

- .gitignore : File used to make `git` not track certain private files.

Although this is not a conventional open-source project, if you find any errors (even a typo!) and/or want to improve something in the repository, and/or want to help document the project, feel free to create a pull request! 😄

A special thanks to all the researchers behind this paper.