Official PyTorch repository for Hypercomplex Multimodal Emotion Recognition from EEG and Peripheral Physiological Signals, ICASSPW 2023.

Eleonora Lopez, Eleonora Chiarantano, Eleonora Grassucci and Danilo Comminiello

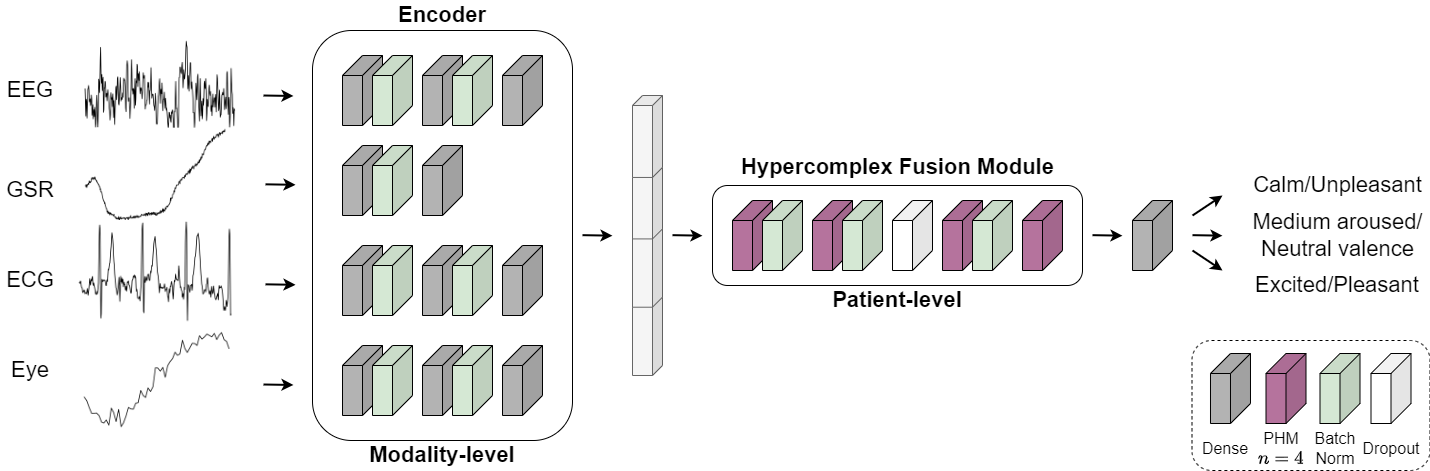

Multimodal emotion recognition from physiological signals is receiving increasing attention because, unlike behavioral reactions, such signals cannot be controlled at will and therefore provide more reliable information. Existing deep learning-based methods still rely on handcrafted features, not taking full advantage of the learning ability of neural networks, and often adopt a single-modality approach, while human emotions are inherently expressed in a multimodal way. In this paper, we propose a hypercomplex multimodal network equipped with a novel fusion module comprising parameterized hypercomplex multiplications. By operating in a hypercomplex domain, the operations follow algebraic rules that make it possible to model latent relations among learned feature dimensions for a more effective fusion step. We perform classification of valence and arousal from electroencephalogram (EEG) and peripheral physiological signals using the publicly available MAHNOB-HCI database, surpassing a multimodal state-of-the-art network.
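As a rough illustration of the parameterized hypercomplex multiplication (PHM) idea mentioned in the abstract, the sketch below builds a weight matrix as a sum of Kronecker products, W = sum_i A_i ⊗ F_i, which is the usual PHM formulation in the literature. It is not the fusion module from this repository: in a PHM layer both the algebra matrices A_i and the filters F_i are learned, whereas here the A_i are fixed to the complex-number rules (n = 2) so the result can be checked by hand. All function and variable names are illustrative assumptions.

```python
def kron(a, b):
    """Kronecker product of two matrices given as lists of rows."""
    return [
        [a_ij * b_kl for a_ij in a_row for b_kl in b_row]
        for a_row in a
        for b_row in b
    ]


def mat_add(a, b):
    """Element-wise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def phm_weight(algebra, filters):
    """Build W = sum_i kron(A_i, F_i) from paired algebra and filter matrices."""
    weight = kron(algebra[0], filters[0])
    for a_i, f_i in zip(algebra[1:], filters[1:]):
        weight = mat_add(weight, kron(a_i, f_i))
    return weight


def matvec(w, x):
    """Matrix-vector product."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]


# Assumption for the demo: n = 2 with the algebra matrices fixed to the
# complex-number rules. A PHM layer would learn these from data instead.
A = [
    [[1, 0], [0, 1]],   # real unit
    [[0, -1], [1, 0]],  # imaginary unit
]
F = [[[3]], [[4]]]      # 1x1 "filters" encoding the weight 3 + 4i

W = phm_weight(A, F)
y = matvec(W, [1, 2])   # input 1 + 2i as the vector [real, imaginary]
print(W)  # [[3, -4], [4, 3]]
print(y)  # [-5, 10], i.e. (3 + 4i)(1 + 2i) = -5 + 10i
```

With learned A_i, the same construction lets the layer discover its own multiplication rules instead of being tied to a predefined algebra, while the filters are shared across the n components.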

pip install -r requirements.txt

1. Download the data from the official website.

2. Preprocess the data:

   python data/preprocessing.py

   - This will create a folder for each subject with CSV files containing the preprocessed data and save everything inside `args.save_path`.

3. Create torch files with augmented and split data:

   python data/create_dataset.py

   - This performs data splitting and augmentation on the preprocessed data from step 2.
   - You can specify which label to consider by setting the parameter `label_kind` to either `Arsl` or `Vlnc`.
   - The data is saved as `.pt` files, which are used for training.
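Before creating the torch files, it can help to check that preprocessing produced what is described above: one folder per subject, each containing CSV files. The following is a minimal sketch of such a check. The helper name `summarize_preprocessed` and the folder and file names in the demo are hypothetical; the actual names written by `data/preprocessing.py` may differ.

```python
import tempfile
from pathlib import Path


def summarize_preprocessed(save_path):
    """Map each subject folder name to the number of CSV files it contains."""
    root = Path(save_path)
    return {
        subject_dir.name: len(list(subject_dir.glob("*.csv")))
        for subject_dir in sorted(root.iterdir())
        if subject_dir.is_dir()
    }


# Demo on a throwaway directory that mimics the described layout.
# Folder and file names here are placeholders, not the repository's own.
with tempfile.TemporaryDirectory() as tmp:
    for subject, n_files in (("subject_01", 2), ("subject_02", 1)):
        folder = Path(tmp) / subject
        folder.mkdir()
        for i in range(n_files):
            (folder / f"trial_{i}.csv").write_text("placeholder\n")
    summary = summarize_preprocessed(tmp)

print(summary)  # {'subject_01': 2, 'subject_02': 1}
```

In practice you would call the helper on the directory you passed as the save path and look for subjects with zero CSV files.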

- To train with specific hyperparameters run:

  python main.py

  - Optimal hyperparameters for arousal and valence can be found in the arguments description.

- To run a sweep run:

  python sweep.py

Experiments will be directly tracked on Weights & Biases.
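For readers unfamiliar with Weights & Biases sweeps, the sketch below shows the general shape of a sweep definition and how it is typically launched with `wandb.sweep` and `wandb.agent`. It is not the content of `sweep.py`: the search method, metric name, hyperparameter names and values, and the project name are all assumptions made for illustration.

```python
# Hypothetical sweep definition; none of these names or values come from
# the repository's own sweep.py.
sweep_config = {
    "method": "random",
    "metric": {"name": "val_accuracy", "goal": "maximize"},
    "parameters": {
        "lr": {"values": [1e-4, 1e-3, 1e-2]},
        "batch_size": {"values": [8, 16, 32]},
    },
}


def launch_sweep(train_fn, project="hypothetical-project", count=5):
    """Register the sweep and run `count` trials of `train_fn`."""
    # Imported lazily so the config can be inspected without W&B installed.
    import wandb

    sweep_id = wandb.sweep(sweep_config, project=project)
    wandb.agent(sweep_id, function=train_fn, project=project, count=count)
    return sweep_id
```

Here `train_fn` would be a function that calls `wandb.init()`, reads the sampled values from `wandb.config`, trains the model, and logs the metric named in the sweep definition.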

Please cite our work if you find it useful.

@inproceedings{lopez2023hypercomplex,
  title={Hypercomplex Multimodal Emotion Recognition from EEG and Peripheral Physiological Signals},
  author={Lopez, Eleonora and Chiarantano, Eleonora and Grassucci, Eleonora and Comminiello, Danilo},
  booktitle={2023 IEEE International Conference on Acoustics, Speech, and Signal Processing Workshops (ICASSPW)},
  pages={1--5},
  year={2023},
  organization={IEEE}
}

Check out:

- Multi-View Breast Cancer Classification via Hypercomplex Neural Networks, under review at TPAMI, 2023 [Paper][GitHub]

- PHNNs: Lightweight neural networks via parameterized hypercomplex convolutions, IEEE Transactions on Neural Networks and Learning Systems, 2022 [Paper][GitHub]

- Hypercomplex Image-to-Image Translation, IJCNN, 2022 [Paper][GitHub]