Code and data for the paper "SWE-bench: Can Language Models Resolve Real-World GitHub Issues?".

Please refer to our website for the public leaderboard, and to the change log for the latest updates to the SWE-bench benchmark.

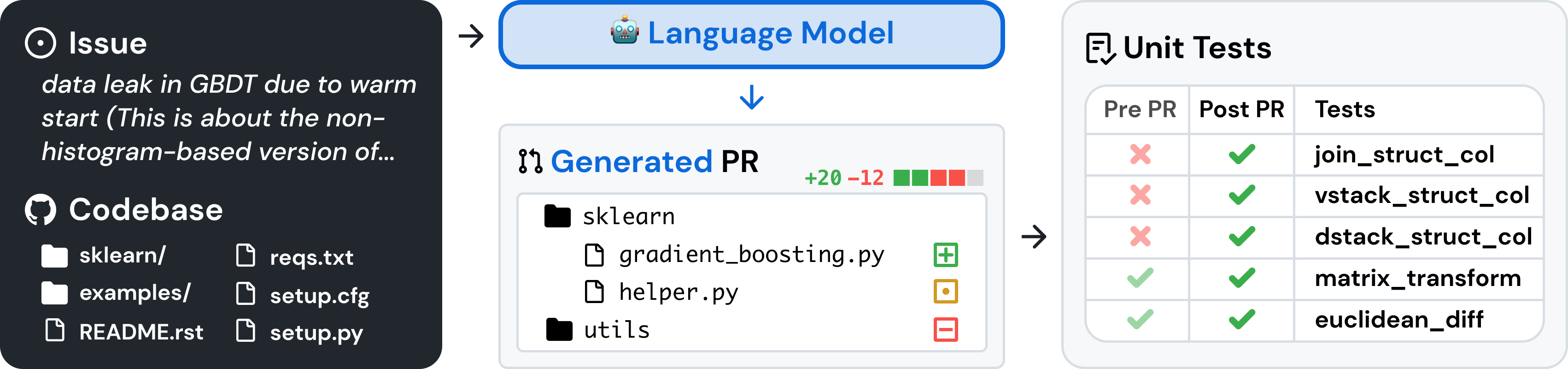

SWE-bench is a benchmark for evaluating large language models on real-world software issues collected from GitHub. Given a codebase and an issue, a language model is tasked with generating a patch that resolves the described problem.
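To make the task format concrete, here is a minimal sketch of what one task and its model prompt might look like. The `TaskInstance` class, its field names, and `build_prompt` are hypothetical illustrations and not necessarily the names used in this repository.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskInstance:
    """One benchmark task: a repository snapshot plus the issue to resolve.

    All field names here are assumptions made for illustration.
    """
    instance_id: str        # hypothetical unique task identifier
    repo: str               # GitHub repository, e.g. "owner/name"
    base_commit: str        # commit the generated patch should apply to
    problem_statement: str  # the issue text shown to the model


def build_prompt(task: TaskInstance, code_context: str) -> str:
    """Combine the issue and some relevant code into a single prompt string."""
    return (
        f"Repository: {task.repo} @ {task.base_commit}\n\n"
        f"Issue:\n{task.problem_statement}\n\n"
        f"Relevant code:\n{code_context}\n\n"
        "Write a patch in unified diff format that resolves the issue."
    )
```

The model's reply to such a prompt is the candidate patch. How the relevant code is selected from the codebase is left open in this sketch.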

To build SWE-bench from source, follow these steps:

- Clone this repository locally

- `cd` into the repository.

- Run `conda env create -f environment.yml` to create a conda environment named `swe-bench`

- Activate the environment with `conda activate swe-bench`

You can download the SWE-bench dataset directly (dev, test sets) or from HuggingFace.
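If you download a split directly, something like the following could load it. This is a sketch that assumes the downloaded file is a JSON array of task objects, each carrying an `instance_id` key. Both the file layout and the key name are assumptions, and `load_tasks` is a hypothetical helper. For the HuggingFace route, the `datasets` library's `load_dataset` function is the usual entry point; the dataset identifier is not given here, so it is left out.

```python
import json
from pathlib import Path


def load_tasks(path):
    """Read a downloaded split and index its tasks by identifier.

    Assumes the file holds a JSON array of objects, each with an
    "instance_id" key (an assumption about the download format).
    """
    with Path(path).open(encoding="utf-8") as f:
        records = json.load(f)

    tasks = {}
    for record in records:
        key = record["instance_id"]
        if key in tasks:
            # Fail loudly rather than silently overwrite a task.
            raise ValueError(f"duplicate instance_id: {key}")
        tasks[key] = record
    return tasks
```

Indexing by identifier makes it easy to match a model's prediction back to its task later on.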

To use SWE-bench, you can:

- Train your own models on our pre-processed datasets

- Run inference on existing models (either models you have on-disk like LLaMA, or models you have access to through an API like GPT-4). The inference step is where you get a repo and an issue and have the model try to generate a fix for it.

- Evaluate models against SWE-bench. This is where you take a SWE-bench task and a model-proposed solution and evaluate its correctness.

- Run SWE-bench's data collection procedure on your own repositories to create new SWE-bench tasks.
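The inference and evaluation steps above fit together roughly as sketched below. This is not the repository's actual harness: `run_inference`, `resolved_rate`, the dictionary keys, and the two callables (`generate_patch` standing in for a model call, `passes_tests` standing in for applying a patch and running the tests) are all hypothetical placeholders.

```python
from typing import Callable, Dict, Iterable, List


def run_inference(tasks: Iterable[Dict[str, str]],
                  generate_patch: Callable[[str], str],
                  model_name: str) -> List[Dict[str, str]]:
    """Ask the model for one patch per task and record the predictions."""
    predictions = []
    for task in tasks:
        # generate_patch is a stand-in for a local model or an API call.
        patch = generate_patch(task["problem_statement"])
        predictions.append({
            "instance_id": task["instance_id"],
            "model_name": model_name,
            "model_patch": patch,
        })
    return predictions


def resolved_rate(predictions: Iterable[Dict[str, str]],
                  passes_tests: Callable[[Dict[str, str]], bool]) -> float:
    """Return the fraction of predictions judged correct by passes_tests."""
    predictions = list(predictions)
    if not predictions:
        return 0.0
    resolved = sum(1 for p in predictions if passes_tests(p))
    return resolved / len(predictions)
```

Keeping the model call and the correctness check as injected callables lets the same loop run against an on-disk model, an API-backed model, or a stub used for testing.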

We've also written the following blog posts on how to use different parts of SWE-bench. If you'd like to see a post about a particular topic, please let us know via an issue.

- [Nov 1. 2023] Collecting Evaluation Tasks for SWE-Bench (🔗)

- [Nov 6. 2023] Evaluating on SWE-bench (🔗)

We would love to hear from the broader NLP, Machine Learning, and Software Engineering research communities, and we welcome any contributions, pull requests, or issues! To do so, please either file a new pull request or issue and fill in the corresponding templates accordingly. We'll be sure to follow up shortly!

Contact persons: Carlos E. Jimenez and John Yang (Email: {carlosej, jy1682}@princeton.edu).

If you find our work helpful, please use the following citations.

@misc{jimenez2023swebench,
  title={SWE-bench: Can Language Models Resolve Real-World GitHub Issues?},
  author={Carlos E. Jimenez and John Yang and Alexander Wettig and Shunyu Yao and Kexin Pei and Ofir Press and Karthik Narasimhan},
  year={2023},
  eprint={2310.06770},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}

MIT. Check LICENSE.md.