⚠️ The library is no longer actively maintained. Please consider this before using it for your project. ⚠️

NeuPy is a Python library for prototyping and building neural networks. NeuPy uses TensorFlow as a computational backend for deep learning models.

$ pip install neupy

- Install NeuPy

- Check the tutorials

- Learn more about NeuPy in the documentation

- Explore lots of different neural network algorithms.

- Read articles and learn more about Neural Networks.

- Open Issues and ask questions.

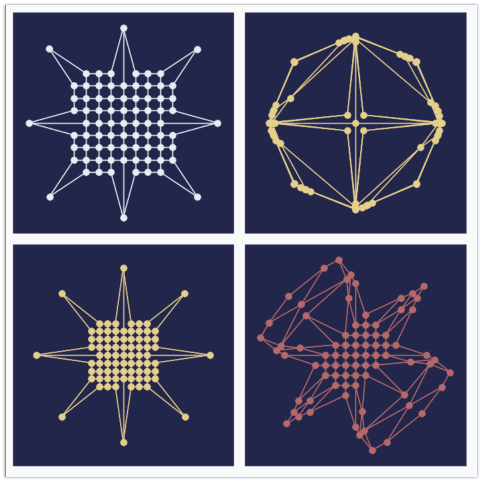

Growing Neural Gas is an algorithm that learns the topological structure of the data. The code that generates the animation can be found in this IPython notebook.

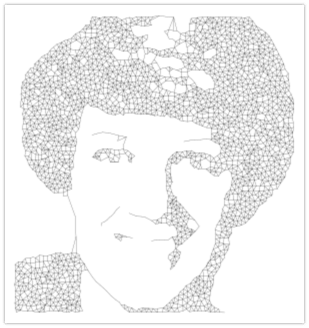

Growing Neural Gas is another example of an algorithm that follows a simple set of rules that, on a large scale, can generate complex patterns. The image is a great example of the art style that can be generated with a simple set of rules. The main notebook that generates the image can be found here.

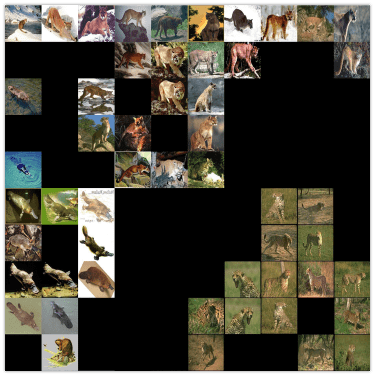

Self-Organizing Map (SOM or SOFM) is a very simple and powerful algorithm that has a wide variety of applications. This article covers some of them.

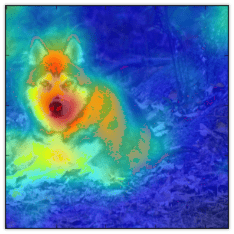

This notebook shows how you can easily explore the reasons behind convolutional network predictions and understand what types of features have been learned in different layers of the network. In addition, it shows how to use neural network architectures in NeuPy, like VGG19, with pre-trained parameters.

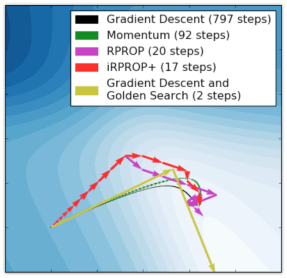

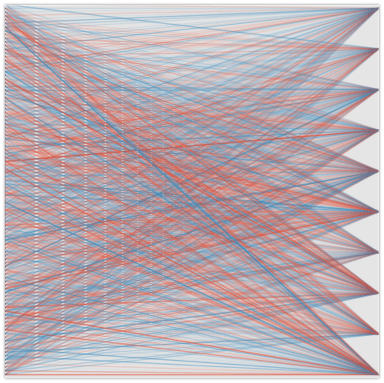

The image compares the paths that different algorithms take during descent. It's interesting to see how much information about an algorithm can be extracted from simple trajectory paths. All of this is covered and explained in the article.
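To give a feel for what such trajectories look like, here is a minimal sketch in plain Python. It does not use the NeuPy API and is not taken from the article. The quadratic function, the step size, and the `descend` helper are all assumptions made for illustration. It records the points visited by plain gradient descent and by gradient descent with momentum on the same surface.

```python
def loss(point):
    """Elongated quadratic bowl f(x, y) = x**2 + 10 * y**2 (assumed example)."""
    x, y = point
    return x ** 2 + 10.0 * y ** 2


def gradient(point):
    """Analytical gradient of the bowl above."""
    x, y = point
    return (2.0 * x, 20.0 * y)


def descend(start, step=0.05, momentum=0.0, n_steps=50):
    """Return every point visited by gradient descent with optional momentum."""
    point = start
    velocity = (0.0, 0.0)
    path = [point]
    for _ in range(n_steps):
        grad = gradient(point)
        # Classical momentum: keep part of the previous update direction.
        velocity = tuple(momentum * v - step * g for v, g in zip(velocity, grad))
        point = tuple(p + v for p, v in zip(point, velocity))
        path.append(point)
    return path


start = (4.0, 1.0)
plain_path = descend(start)
momentum_path = descend(start, momentum=0.8)

for name, path in [("plain", plain_path), ("momentum", momentum_path)]:
    print(name, "final loss:", loss(path[-1]))
```

Plotting `plain_path` and `momentum_path` over the contours of `loss` gives a picture of the same kind: the shape of each path reflects how the update rule reacts to the surface.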

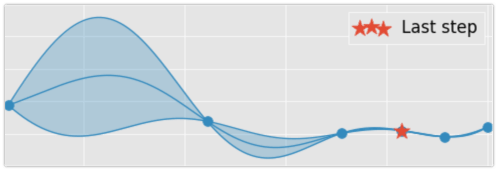

This article covers different approaches for hyperparameter optimization.
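As a rough illustration of what such approaches look like, the sketch below compares grid search and random search, two common baseline strategies. It is plain Python, not NeuPy code. The `validation_error` function is a made-up stand-in for training a network and measuring its error, and the parameter names and ranges are assumptions.

```python
import itertools
import random


def validation_error(learning_rate, n_hidden):
    """Hypothetical stand-in for training a model and returning its error."""
    return (learning_rate - 0.1) ** 2 + ((n_hidden - 50) / 100.0) ** 2


def grid_search(learning_rates, hidden_sizes):
    """Evaluate every combination and return the best (error, params) pair."""
    candidates = itertools.product(learning_rates, hidden_sizes)
    return min((validation_error(lr, h), (lr, h)) for lr, h in candidates)


def random_search(n_trials, seed=0):
    """Sample parameters at random and return the best (error, params) pair."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        learning_rate = 10 ** rng.uniform(-3, 0)  # log-uniform in [0.001, 1]
        n_hidden = rng.randint(10, 200)
        trials.append((validation_error(learning_rate, n_hidden),
                       (learning_rate, n_hidden)))
    return min(trials)


grid_best = grid_search([0.001, 0.01, 0.1, 1.0], [10, 50, 100, 200])
random_best = random_search(n_trials=16)

print("grid search:  ", grid_best)
print("random search:", random_best)
```

Both functions spend the same budget of 16 evaluations. Grid search only ever tries four distinct values per parameter, while random search tries a new value of each parameter on every trial.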

In this article, I just want to show how beautiful a neural network can sometimes be. I think it’s quite rare that an algorithm can not only extract knowledge from the data, but also produce something beautiful using exactly the same set of training rules without any modifications.

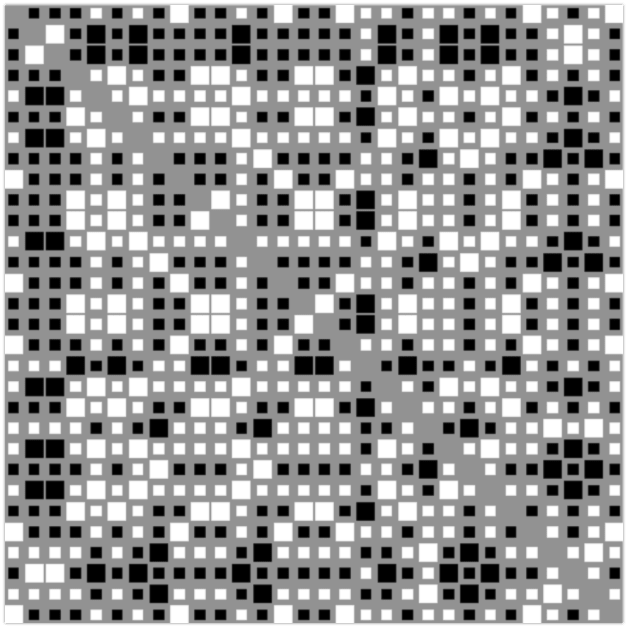

An article with extensive theoretical background on the Discrete Hopfield Network. It also has examples that show the advantages and limitations of the algorithm. The image is a visualization of the information stored in the network. This picture not only visualizes the network's memory, it shows everything the network knows about the world.
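The idea of a network "memory" can be sketched in a few lines of plain Python. This is a minimal illustration of the textbook Hebbian storage rule and synchronous recall, not NeuPy's implementation and not code from the article. The function names and the two stored patterns are assumptions chosen for the example.

```python
def train_hopfield(patterns):
    """Build a weight matrix from bipolar (+1/-1) patterns (Hebbian rule)."""
    size = len(patterns[0])
    weights = [[0] * size for _ in range(size)]
    for pattern in patterns:
        for i in range(size):
            for j in range(size):
                if i != j:  # units have no connection to themselves
                    weights[i][j] += pattern[i] * pattern[j]
    return weights


def recall(weights, state, n_iterations=5):
    """Update all units synchronously until the state stops changing."""
    state = list(state)
    for _ in range(n_iterations):
        new_state = [
            1 if sum(w * s for w, s in zip(row, state)) >= 0 else -1
            for row in weights
        ]
        if new_state == state:
            break
        state = new_state
    return state


stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train_hopfield(stored)

noisy = list(stored[0])
noisy[0] = -noisy[0]  # corrupt one value of the first pattern
restored = recall(weights, noisy)

print("noisy:   ", noisy)
print("restored:", restored)
```

The `weights` matrix is the only thing the network keeps after training, so a picture of that matrix is a picture of everything the network has memorized.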

This article describes a step-by-step solution that lets you generate unique styles with arbitrary text.

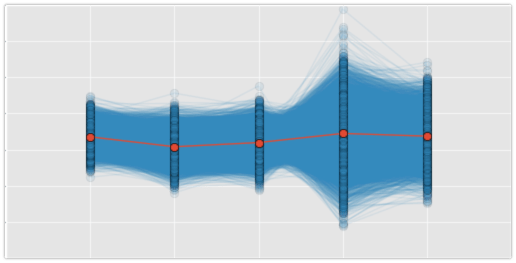

This notebook shows interesting ways to look inside your MLP network.

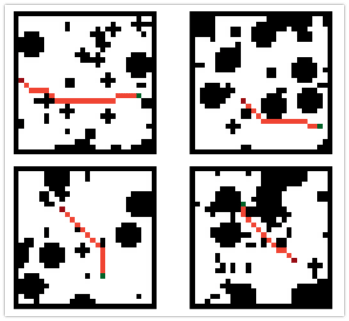

One of the basic applications of the Value Iteration Network: learning how to find an optimal path between two points in an environment with obstacles.

A set of examples that use and explore knowledge extracted by a Restricted Boltzmann Machine.