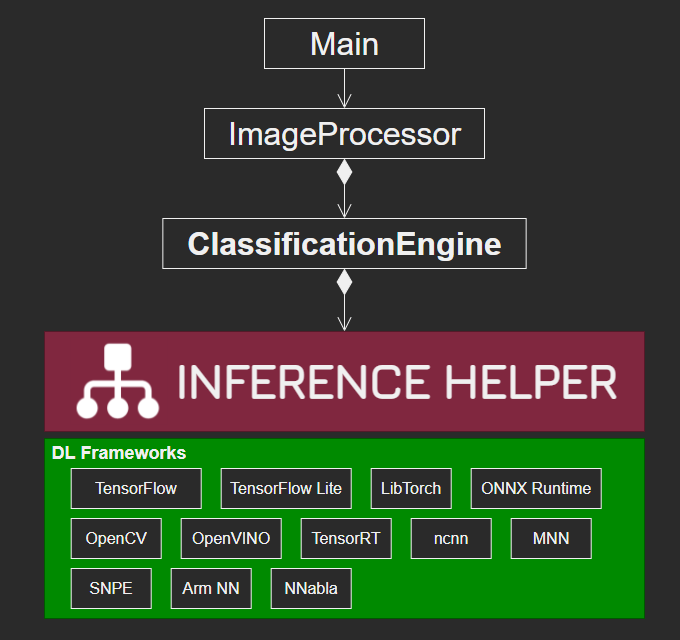

- Sample project for Inference Helper (https://github.com/iwatake2222/InferenceHelper)

- Run a simple classification model (MobileNetV2) using several deep learning frameworks

./main [input]

- option description: [input]
    - blank
        - use the default image file set in source code (main.cpp)
        - e.g. `./main`
    - `*.mp4`, `*.avi`, `*.webm`
        - use video file
        - e.g. `./main test.mp4`
    - `*.jpg`, `*.png`, `*.bmp`
        - use image file
        - e.g. `./main test.jpg`
    - number (e.g. 0, 1, 2, ...)
        - use camera
        - e.g. `./main 0`
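The rules above can be sketched as a small shell function. This is only an illustration of the documented behaviour, not the code in main.cpp: `classify_input` is a hypothetical helper, and matching extensions case-sensitively is an assumption.

```shell
#!/bin/sh
# Sketch only: classify_input is a hypothetical helper, not part of the sample project.
# It reports how the [input] argument would be interpreted according to the list above.
classify_input() {
    case "$1" in
        "")                  echo "default image" ;;  # blank: default image set in main.cpp
        *.mp4|*.avi|*.webm)  echo "video" ;;          # video file
        *.jpg|*.png|*.bmp)   echo "image" ;;          # image file
        *[!0-9]*)            echo "unknown" ;;        # contains a non-digit: not listed above
        *)                   echo "camera" ;;         # digits only: camera number
    esac
}
```

For example, `classify_input test.mp4` prints `video` and `classify_input 0` prints `camera`.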

- OpenCV 4.x

- Get source code

git clone https://github.com/iwatake2222/InferenceHelper_Sample

cd InferenceHelper_Sample

git submodule update --init

sh InferenceHelper/third_party/download_prebuilt_libraries.sh

- If you have a problem, please refer to https://github.com/iwatake2222/InferenceHelper#installation

- If your host PC is Windows but you want to build/run on Linux (e.g. WSL2), run this script on the target OS (Linux). Otherwise, the symbolic links will be broken.

- Download models

sh ./download_resource.sh

- Build and run on Linux (MNN is used as the example framework)

cd pj_cls_mobilenet_v2

mkdir -p build && cd build

cmake .. -DINFERENCE_HELPER_ENABLE_MNN=on

make

./main

- Configure and Generate a new project using cmake-gui for Visual Studio 2019 64-bit
    - Where is the source code: path-to-InferenceHelper_Sample/pj_cls_mobilenet_v2
    - Where to build the binaries: path-to-build (any)
    - Check one of the listed InferenceHelperFramework options (e.g. `INFERENCE_HELPER_ENABLE_MNN`)

- Open `main.sln`

- Set the `main` project as the startup project, then build and run!

- Cross-compile for ARM on Linux (install the toolchains first)

sudo apt install g++-arm-linux-gnueabi g++-arm-linux-gnueabihf g++-aarch64-linux-gnu

- For aarch64

export CC=aarch64-linux-gnu-gcc

export CXX=aarch64-linux-gnu-g++

cmake .. -DBUILD_SYSTEM=aarch64 -DINFERENCE_HELPER_ENABLE_MNN=on

- For armv7

export CC=arm-linux-gnueabi-gcc

export CXX=arm-linux-gnueabi-g++

cmake .. -DBUILD_SYSTEM=armv7 -DINFERENCE_HELPER_ENABLE_MNN=on

You need to link against an appropriate OpenCV build.
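The two cross-compile configurations differ only in the compiler prefix and the `BUILD_SYSTEM` value, so they can be captured in one place. The following is a minimal sketch under that assumption; `toolchain_prefix` and `cross_cmake_command` are hypothetical helper names, and the sketch only prints the command rather than running it.

```shell
#!/bin/sh
# Sketch only: both functions are hypothetical helpers, not part of the sample project.

# Prints the compiler prefix used in the commands above for a BUILD_SYSTEM value.
toolchain_prefix() {
    case "$1" in
        aarch64) echo "aarch64-linux-gnu" ;;
        armv7)   echo "arm-linux-gnueabi" ;;
        *)       echo "unsupported BUILD_SYSTEM: $1" >&2; return 1 ;;
    esac
}

# Prints the configure command for a target, with CC/CXX set for that one command.
# MNN is kept as the framework because it is the one used in the examples above.
cross_cmake_command() {
    prefix=$(toolchain_prefix "$1") || return 1
    echo "CC=${prefix}-gcc CXX=${prefix}-g++ cmake .. -DBUILD_SYSTEM=$1 -DINFERENCE_HELPER_ENABLE_MNN=on"
}
```

Running `cross_cmake_command aarch64` prints a single command line that can be pasted into a shell from the build directory. Setting `CC` and `CXX` inline instead of with `export` keeps the cross compiler from leaking into later native builds in the same shell.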

- Requirements (Android build)
    - Android Studio
        - Compile Sdk Version
            - 30
        - Build Tools version
            - 30.0.0
        - Target SDK Version
            - 30
        - Min SDK Version
            - 24
            - With 23, I got the following error
                - ``bionic/libc/include/bits/fortify/unistd.h:174: undefined reference to `__write_chk'``
                - android/ndk#1179
    - Android NDK
        - 23.1.7779620
    - OpenCV
        - opencv-4.3.0-android-sdk.zip
    - Note: the versions listed are simply the ones I used

- Configure NDK
    - File -> Project Structure -> SDK Location -> Android NDK location (before Android Studio 4.0)
        - C:\Users\abc\AppData\Local\Android\Sdk\ndk\21.3.6528147
    - Modify `local.properties` to specify `sdk.dir` and `ndk.dir` (after Android Studio 4.1)
        - `sdk.dir=C\:\\Users\\xxx\\AppData\\Local\\Android\\Sdk`
        - `ndk.dir=C\:\\Users\\xxx\\AppData\\Local\\Android\\sdk\\ndk\\23.1.7779620`

- Import OpenCV
    - Download and extract OpenCV android-sdk (https://github.com/opencv/opencv/releases)
    - File -> New -> Import Module
        - path-to-opencv\opencv-4.3.0-android-sdk\OpenCV-android-sdk\sdk
    - File -> Project Structure -> Dependencies -> app -> Declared Dependencies -> + -> Module Dependencies
        - select sdk
    - If you cannot import the OpenCV module, remove the sdk module and the dependency of app on sdk in Project Structure

- Note: To keep Git from picking up your local changes to these settings files, use the following command

git update-index --skip-worktree ViewAndroid/app/build.gradle ViewAndroid/settings.gradle ViewAndroid/.idea/gradle.xml

- Copy the `resource` directory to `/storage/emulated/0/Android/data/com.iwatake.viewandroidinferencehelpersample/files/Documents/resource`
    - The directory is created when the app runs for the first time, so the first run is expected to fail because the model files cannot be read yet

- Modify `ViewAndroid\app\src\main\cpp\CMakeLists.txt` to select the image processor you want to use
    - `set(ImageProcessor_DIR "${CMAKE_CURRENT_LIST_DIR}/../../../../../pj_cls_mobilenet_v2/image_processor")`
    - replace `pj_cls_mobilenet_v2` with another project

- Choose one of the following options.
    - Note: InferenceHelper itself supports multiple frameworks (i.e. you can set `on` for several frameworks). However, in this sample project the selected framework is also used to `create` the InferenceHelper instance, for the sake of simplicity.
    - Note: When you change an option, it's safer to clean the project before you re-run cmake.

cmake .. -DINFERENCE_HELPER_ENABLE_OPENCV=on

cmake .. -DINFERENCE_HELPER_ENABLE_TFLITE=on

cmake .. -DINFERENCE_HELPER_ENABLE_TFLITE_DELEGATE_XNNPACK=on

cmake .. -DINFERENCE_HELPER_ENABLE_TFLITE_DELEGATE_GPU=on

cmake .. -DINFERENCE_HELPER_ENABLE_TFLITE_DELEGATE_EDGETPU=on

cmake .. -DINFERENCE_HELPER_ENABLE_TFLITE_DELEGATE_NNAPI=on

cmake .. -DINFERENCE_HELPER_ENABLE_TENSORRT=on

cmake .. -DINFERENCE_HELPER_ENABLE_NCNN=on

cmake .. -DINFERENCE_HELPER_ENABLE_MNN=on

cmake .. -DINFERENCE_HELPER_ENABLE_SNPE=on

cmake .. -DINFERENCE_HELPER_ENABLE_ARMNN=on

cmake .. -DINFERENCE_HELPER_ENABLE_NNABLA=on

cmake .. -DINFERENCE_HELPER_ENABLE_NNABLA_CUDA=on

cmake .. -DINFERENCE_HELPER_ENABLE_ONNX_RUNTIME=on

cmake .. -DINFERENCE_HELPER_ENABLE_ONNX_RUNTIME_CUDA=on

cmake .. -DINFERENCE_HELPER_ENABLE_LIBTORCH=on

cmake .. -DINFERENCE_HELPER_ENABLE_LIBTORCH_CUDA=on

cmake .. -DINFERENCE_HELPER_ENABLE_TENSORFLOW=on

cmake .. -DINFERENCE_HELPER_ENABLE_TENSORFLOW_GPU=on

- You may need something like the following commands to run the app with the EdgeTPU delegate

cp libedgetpu.so.1.0 libedgetpu.so.1

sudo LD_LIBRARY_PATH=./ ./main
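Since the framework options all share the `INFERENCE_HELPER_ENABLE_` prefix and a clean project is recommended when switching between them, the two steps can be combined. This is a rough sketch, not a script shipped with the project: `framework_flag` and `configure_clean` are hypothetical helpers, and recreating the `build` directory is just one way to "clean the project".

```shell
#!/bin/sh
# Sketch only: both functions are hypothetical helpers, not part of the sample project.

# Turns a framework name (e.g. "mnn", "onnx_runtime") into the matching cmake option.
framework_flag() {
    name=$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]')
    printf '%s\n' "-DINFERENCE_HELPER_ENABLE_${name}=on"
}

# Recreates the build directory so that no cached option from a previously
# selected framework survives, then configures with exactly one framework enabled.
# Run from a project directory such as pj_cls_mobilenet_v2.
# Note: this leaves the calling shell inside the new build directory.
configure_clean() {
    rm -rf build && mkdir -p build && cd build && cmake .. "$(framework_flag "$1")"
}
```

For example, `framework_flag tflite` prints `-DINFERENCE_HELPER_ENABLE_TFLITE=on`, and `configure_clean ncnn` followed by `make` rebuilds the sample with only NCNN enabled.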

- Build for Android
    - If you encounter the `error: use of typeid requires -frtti` error, modify `ViewAndroid\sdk\native\jni\include\opencv2\opencv_modules.hpp` and comment out the following line
        - `//#define HAVE_OPENCV_FLANN`

- InferenceHelper_Sample

- https://github.com/iwatake2222/InferenceHelper_Sample

- Copyright 2020 iwatake2222

- Licensed under the Apache License, Version 2.0

- This project utilizes OSS (Open Source Software)