Before using this project, a few tools need to be running.

First, a Kafka server must be running on port 9094 with a topic named insurance-fl. We use this topic to broadcast our messages.

You can use the provided Docker stack file in the docker-stacks directory to run a local Kafka server using Docker:

    docker stack deploy -c ./kafka-docker-stack.yml kafka

One of our consumer apps needs to connect to a MongoDB server to save its data, so a MongoDB server must be available on port 27017.

For credentials, I am using a dummy username and password, hesam and secret, for a database named insurance. You can change these values in the Main.scala file of the database-saver project.

You can use the provided Docker stack file in the docker-stacks directory to run a local MongoDB server using Docker:
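As a rough illustration of how these values fit together, the sketch below builds a standard MongoDB connection string from them. The object and method names are hypothetical and are not taken from Main.scala, and the host localhost is an assumption.

```scala
import java.net.URLEncoder

// Hypothetical helper, not the project's actual code.
object MongoUriSketch {
  // Percent-encode a credential so special characters are safe inside a URI.
  // URLEncoder emits "+" for spaces, so that is rewritten to "%20".
  private def encode(value: String): String =
    URLEncoder.encode(value, "UTF-8").replace("+", "%20")

  // Builds mongodb://user:password@host:port/database
  def connectionUri(user: String, password: String, host: String, port: Int, database: String): String =
    s"mongodb://${encode(user)}:${encode(password)}@$host:$port/$database"

  def main(args: Array[String]): Unit =
    // Host "localhost" is an assumption; the other values are the dummy ones from the text.
    println(connectionUri("hesam", "secret", "localhost", 27017, "insurance"))
}
```

If you change the username, password, or database name, the same three values need to match on the MongoDB server side.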

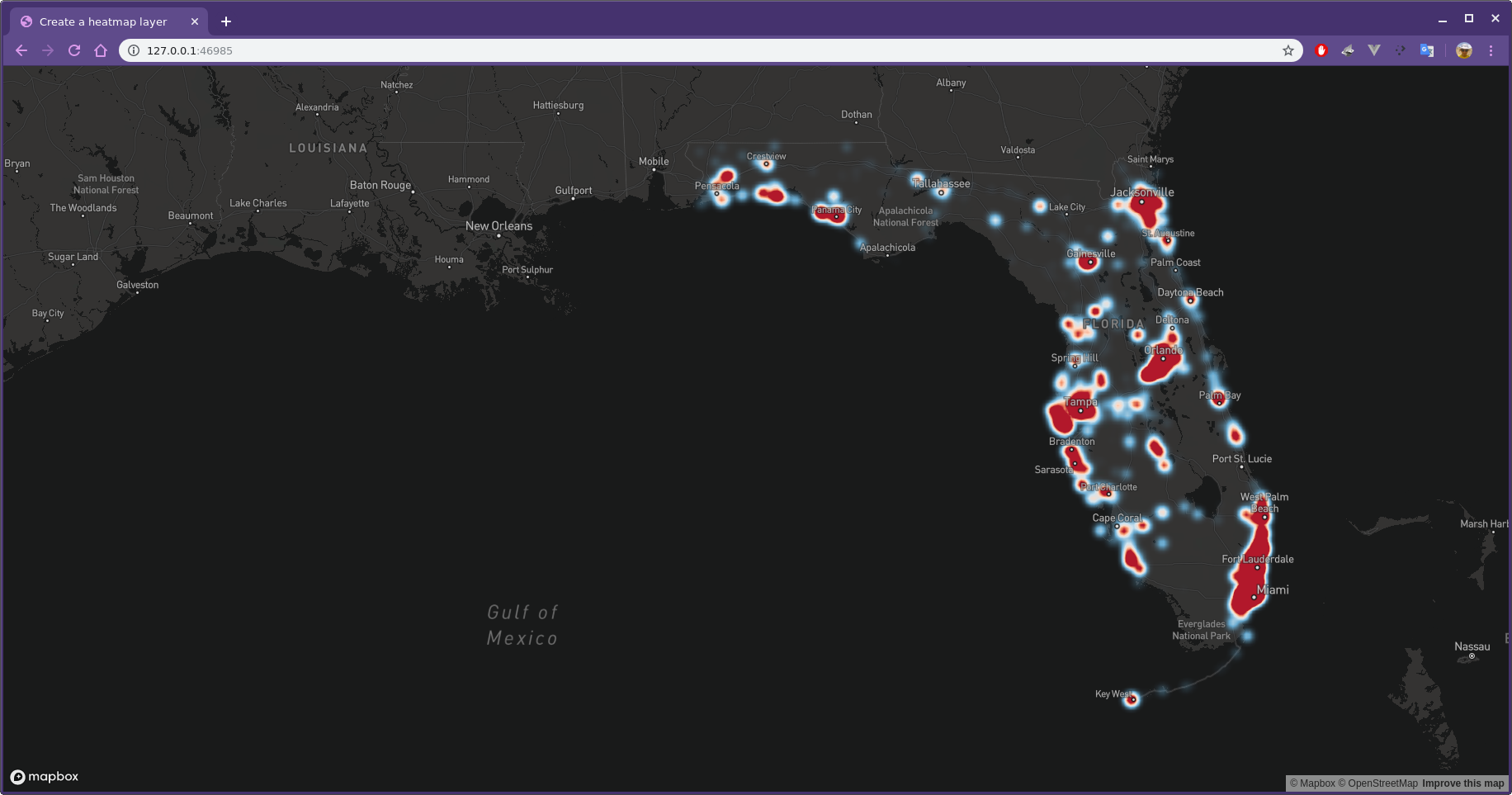

    docker stack deploy -c ./mongodb-docker-stack.yml mongo

To use the web view functionality, which shows a heatmap in the browser, we need to run a local web server. You also need to provide a Mapbox API key to enable this functionality.

To obtain a Mapbox API key, head over to https://mapbox.com, create an account, and generate an API key. Place this key in the web/eqSiteLimit-map-view/index.html file.

Then we need a local web server. You can use the provided Docker stack file in the docker-stacks directory to run a local Nginx server on port 46985 using Docker:

    docker stack deploy -c ./nginx-docker-stack.yml nginx

We have a sample CSV file in the data folder, which the producer app uses to broadcast data to the Kafka broker.

To run the project, first start the Kafka server, then the MongoDB server. Then we can start all of the consumer apps.

|

Note

|

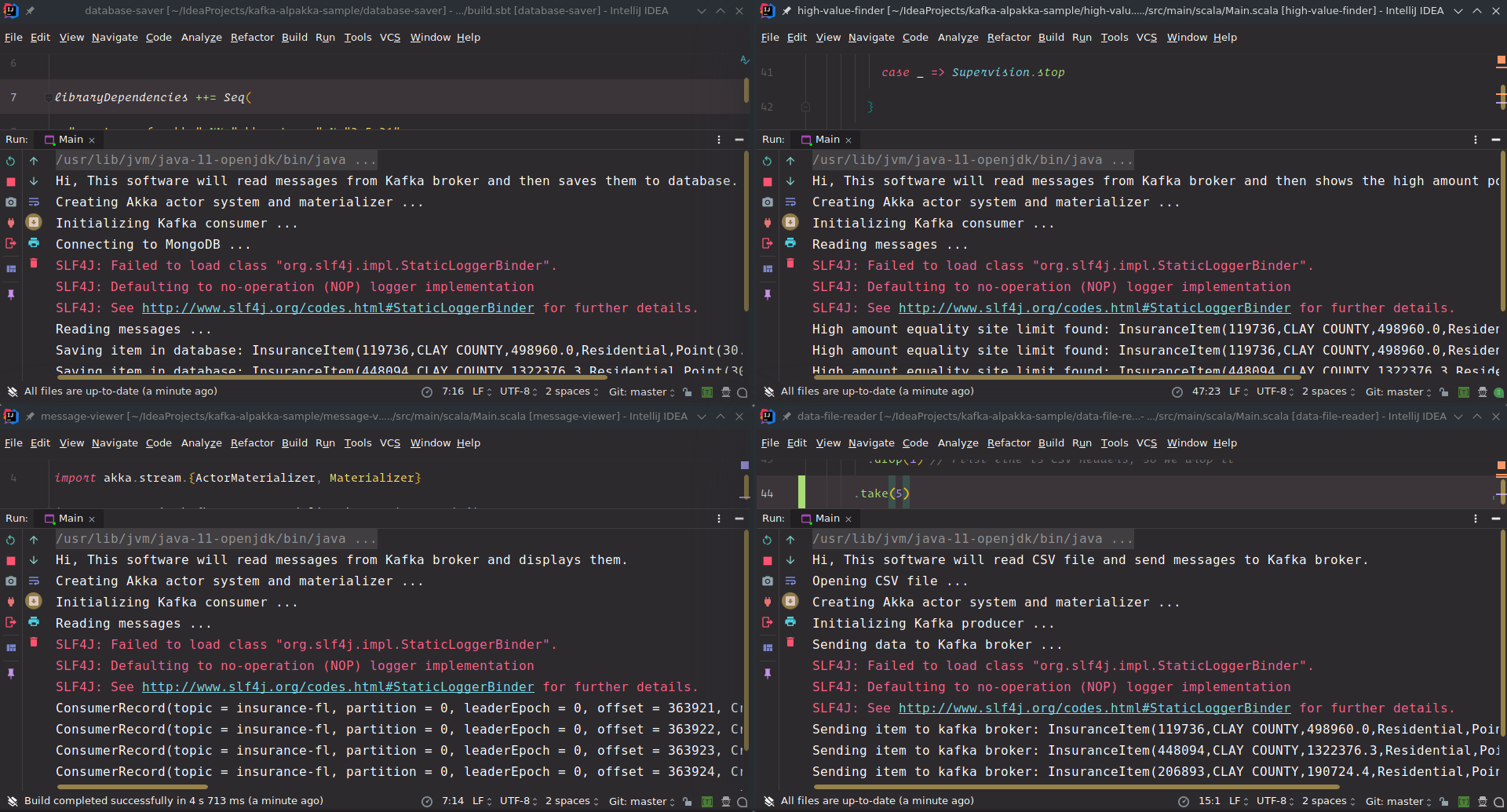

We can run projects through terminal or through IDE. I am usin Intellij IDEA to run projects. |

- message-viewer will show any message that it receives.
- high-value-finder will show any insurance item that has an eqSiteLimit value of more than 50000.
- database-saver will save everything that it receives to the database, in a collection named fl.
- heatmap-data-calculator will generate a .geojson file at web/eqSiteLimit-map-view/fl-insurance-locations.geojson, which will be used by Mapbox to show a heatmap in the browser.

All of these consumers receive their data from the insurance-fl topic on the Kafka broker.

Now we can run the producer app, which reads our CSV file and sends the data to the insurance-fl topic on the Kafka broker.
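To make the high-value-finder rule concrete, here is a minimal sketch of the "more than 50000" check in plain Scala. The InsuranceItem type and its policyId field are hypothetical stand-ins; the real messages may be shaped differently.

```scala
// Hypothetical record type; the real messages may carry more fields.
final case class InsuranceItem(policyId: String, eqSiteLimit: Double)

object HighValueSketch {
  // Threshold taken from the description of high-value-finder.
  val Threshold: Double = 50000.0

  // Strictly greater than the threshold, so exactly 50000 is not high-value.
  def isHighValue(item: InsuranceItem): Boolean = item.eqSiteLimit > Threshold

  def main(args: Array[String]): Unit = {
    val items = List(
      InsuranceItem("A-1", 49999.0),
      InsuranceItem("A-2", 50000.0),
      InsuranceItem("A-3", 125000.5)
    )
    // Only A-3 passes the filter.
    items.filter(isHighValue).foreach(println)
  }
}
```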

- data-file-reader will read the FL-insurance-sample.csv file and send its data to the Kafka broker.
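A producer along these lines could be sketched with Akka Streams and Alpakka Kafka as shown below. This is not the project's actual code: the object name, the relative path data/FL-insurance-sample.csv, the host localhost, and the choice to send each raw CSV line as one string message are all assumptions. It also assumes Akka 2.6 or later with the akka-stream and akka-stream-kafka dependencies on the classpath.

```scala
import java.nio.file.Paths

import akka.actor.ActorSystem
import akka.kafka.ProducerSettings
import akka.kafka.scaladsl.Producer
import akka.stream.scaladsl.{FileIO, Framing}
import akka.util.ByteString
import org.apache.kafka.clients.producer.ProducerRecord
import org.apache.kafka.common.serialization.StringSerializer

// Hypothetical sketch of a CSV-to-Kafka producer.
object DataFileReaderSketch {
  val Topic = "insurance-fl"

  // Wrap one CSV line in a record for the topic (no key is set).
  def toRecord(line: String): ProducerRecord[String, String] =
    new ProducerRecord[String, String](Topic, line)

  def main(args: Array[String]): Unit = {
    implicit val system: ActorSystem = ActorSystem("data-file-reader-sketch")
    import system.dispatcher

    val settings = ProducerSettings(system, new StringSerializer, new StringSerializer)
      .withBootstrapServers("localhost:9094") // host is an assumption

    FileIO
      .fromPath(Paths.get("data/FL-insurance-sample.csv")) // path is an assumption
      // Split the byte stream into lines; 8192 is an arbitrary frame limit.
      .via(Framing.delimiter(ByteString("\n"), maximumFrameLength = 8192, allowTruncation = true))
      .map(_.utf8String.trim)
      .filter(_.nonEmpty) // header handling is intentionally left out
      .map(toRecord)
      .runWith(Producer.plainSink(settings))
      .onComplete(_ => system.terminate())
  }
}
```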

We use Kafka to manage the stream of data between applications. It can handle a high volume of messages passing between applications, and it preserves the order of messages within each partition.

By using Kafka, we can also use other tools and languages that are compatible with the Kafka API.

Akka Streams is used to handle back-pressure in the applications. It uses a Source → Flow → Sink mechanism to manage streams carrying large amounts of data.

This is the fundamental basis of all of our applications.
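The Source → Flow → Sink shape can be illustrated with a small, self-contained sketch. The object name and the comma-splitting step are made up for illustration and are not taken from the project; the sketch assumes Akka 2.6 or later, where an implicit ActorSystem is enough to materialize a stream.

```scala
import akka.NotUsed
import akka.actor.ActorSystem
import akka.stream.scaladsl.{Flow, Sink, Source}

import scala.concurrent.Await
import scala.concurrent.duration._

// Hypothetical sketch of the Source -> Flow -> Sink shape.
object SourceFlowSinkSketch {
  // Flow: a reusable processing step that splits each line into fields.
  val splitFields: Flow[String, List[String], NotUsed] =
    Flow[String].map(_.split(",").toList)

  // Source emits the lines, the Flow transforms them, and the Sink collects them.
  // Elements are only pulled as fast as the Sink signals demand (back-pressure).
  def run(lines: List[String])(implicit system: ActorSystem): Seq[List[String]] =
    Await.result(Source(lines).via(splitFields).runWith(Sink.seq), 5.seconds)

  def main(args: Array[String]): Unit = {
    implicit val system: ActorSystem = ActorSystem("source-flow-sink-sketch")
    try run(List("a,b", "c,d,e")).foreach(println)
    finally system.terminate()
  }
}
```

Blocking with Await is only there to keep the sketch short; a long-running app would normally leave the stream running instead.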

We use Alpakka extensions to connect our tools such as Kafka and MongoDB to Akka Streams.

This way we have one general mechanism for working with all of our tools, and they all expose the same API for us to develop against.

Using this extension, we can connect Akka Streams to the Kafka server and produce and consume messages through the Kafka broker. The extension handles back-pressure for us and lets us control the flow the way we want.

So we don’t even have to deal with the dedicated Kafka library directly; the extension handles that interaction for us.
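For example, a consumer in the spirit of message-viewer might look like the sketch below, using Alpakka Kafka's Consumer.plainSource. The object name, the group id, the host localhost, the "earliest" offset reset, and the output format are assumptions rather than the project's actual settings. It assumes Akka 2.6 or later with akka-stream-kafka on the classpath.

```scala
import akka.actor.ActorSystem
import akka.kafka.{ConsumerSettings, Subscriptions}
import akka.kafka.scaladsl.Consumer
import akka.stream.scaladsl.Sink
import org.apache.kafka.clients.consumer.{ConsumerConfig, ConsumerRecord}
import org.apache.kafka.common.serialization.StringDeserializer

// Hypothetical sketch of a consumer that prints every message it receives.
object MessageViewerSketch {
  // Format one record as "<offset>: <value>" (format chosen for illustration).
  def render(record: ConsumerRecord[String, String]): String =
    s"${record.offset()}: ${record.value()}"

  def main(args: Array[String]): Unit = {
    implicit val system: ActorSystem = ActorSystem("message-viewer-sketch")

    val settings = ConsumerSettings(system, new StringDeserializer, new StringDeserializer)
      .withBootstrapServers("localhost:9094") // host is an assumption
      .withGroupId("message-viewer-sketch")   // hypothetical group id
      .withProperty(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest")

    // The Kafka topic becomes an ordinary Akka Streams Source; the stream
    // runs until the actor system is terminated.
    Consumer
      .plainSource(settings, Subscriptions.topics("insurance-fl"))
      .map(render)
      .runWith(Sink.foreach(println))
  }
}
```

Because the topic is exposed as a Source, the rest of the pipeline is plain Akka Streams and needs no direct calls to the Kafka client's polling API.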