We are in an early-release beta. Expect some adventures and rough edges.

- Introduction

- Motivation

- Questions and Discussions

- Quick Start Guide

- Related Posts and Repos

- Citation

Learning rate warmup for Adam is a must-have trick for stable training in certain situations, but its underlying mechanism is largely unknown. In our study, we suggest that one fundamental cause is the large variance of the adaptive learning rate, and we provide both theoretical and empirical evidence to support this.

In addition to explaining why we should use warmup, we also propose RAdam, a theoretically sound variant of Adam.

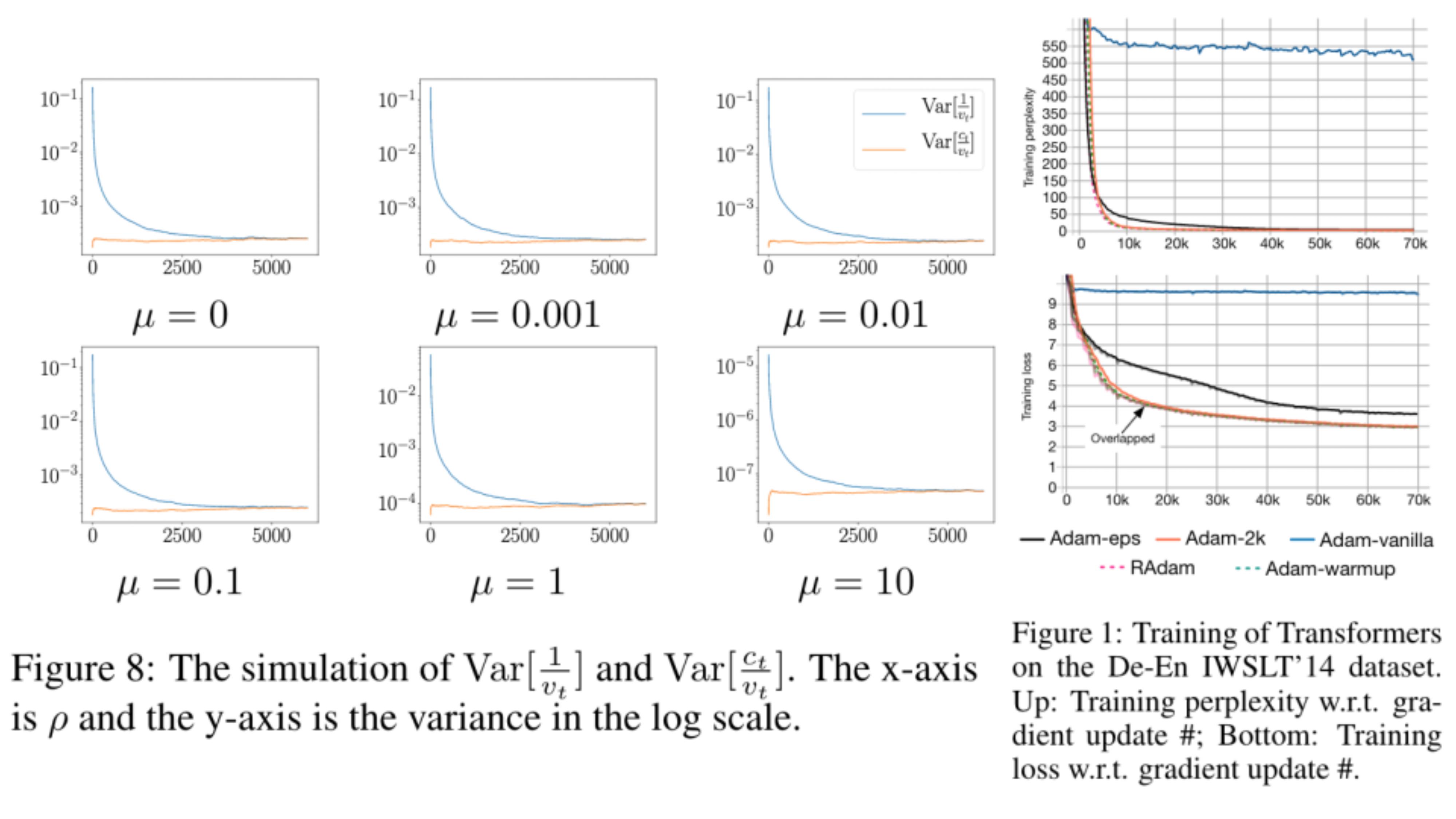

As in Figure 8, we assume the gradients follow a normal distribution (mean: \mu, variance: 1). The variance of the adaptive learning rate is simulated and plotted in Figure 8 (blue curve). We can see that the adaptive learning rate has a large variance in the early stage of training.
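The simulation described above can be approximated with a small Monte Carlo sketch. This is not the script used to produce Figure 8. It assumes the adaptive learning rate is `1 / sqrt(v_hat_t)`, where `v_hat_t` is Adam's bias-corrected second-moment estimate. The function name `adaptive_lr_variance` and the defaults (`mu=0.0`, `beta2=0.999`, `trials=2000`) are illustrative choices, not values taken from the paper.

```python
import math
import random
import statistics


def adaptive_lr_variance(t, mu=0.0, beta2=0.999, trials=2000, seed=0):
    """Monte Carlo estimate of Var[1 / sqrt(v_hat_t)] after t Adam steps.

    Gradients are drawn i.i.d. from N(mu, 1), as assumed in the text.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(trials):
        v = 0.0
        for _ in range(t):
            g = rng.gauss(mu, 1.0)
            # Exponential moving average of squared gradients (Adam's v_t).
            v = beta2 * v + (1.0 - beta2) * g * g
        v_hat = v / (1.0 - beta2 ** t)  # bias correction
        samples.append(1.0 / math.sqrt(v_hat))
    return statistics.variance(samples)


if __name__ == "__main__":
    # Few gradient samples (small t) give a much noisier adaptive learning rate.
    for t in (5, 10, 50, 200):
        print(t, adaptive_lr_variance(t))
```

Very small `t` (one or two steps) is left out of the loop on purpose: with so few samples the estimate is dominated by a handful of extreme draws and is not stable.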

When using the Transformer for NMT, a warmup stage is usually required to avoid convergence problems (Adam-vanilla converges around 500 PPL in Figure 1, while Adam-warmup successfully converges under 10 PPL). In further explorations, we notice that if we use an additional 2000 samples to estimate the adaptive learning rate, the convergence problems are avoided (Adam-2k); or, if we increase the value of eps, the convergence problems are also alleviated (Adam-eps).
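To make the two controlled variants concrete, here is a toy scalar sketch, not the experimental code. It reads "use an additional 2000 samples" as: update only the second-moment estimate for the first `presample` gradients while keeping the parameter and momentum fixed. The function `adam_scalar`, the argument `presample`, and the choice to bias-correct by the total number of second-moment updates are all assumptions made for illustration. Passing a larger `eps` plays the role of Adam-eps.

```python
import math


def adam_scalar(grad_fn, theta, steps, presample=0,
                lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """Scalar Adam with an optional second-moment-only pre-sampling phase."""
    m = 0.0
    v = 0.0
    v_updates = 0

    # Pre-sampling phase (assumed reading of Adam-2k): only v is updated,
    # theta and the momentum m stay fixed.
    for _ in range(presample):
        g = grad_fn(theta)
        v = beta2 * v + (1.0 - beta2) * g * g
        v_updates += 1

    # Regular Adam updates.
    for t in range(1, steps + 1):
        g = grad_fn(theta)
        m = beta1 * m + (1.0 - beta1) * g
        v = beta2 * v + (1.0 - beta2) * g * g
        v_updates += 1
        m_hat = m / (1.0 - beta1 ** t)
        v_hat = v / (1.0 - beta2 ** v_updates)
        theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta


if __name__ == "__main__":
    grad = lambda x: x  # gradient of f(x) = x^2 / 2
    print(adam_scalar(grad, 1.0, steps=50))                  # vanilla Adam
    print(adam_scalar(grad, 1.0, steps=50, presample=2000))  # Adam-2k style
    print(adam_scalar(grad, 1.0, steps=50, eps=1e-3))        # Adam-eps style
```

A deterministic toy problem like this cannot reproduce the convergence gap seen on the Transformer. It only shows where each variant changes the update rule.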

Therefore, we conjecture that the large variance in the early stage causes the convergence problem, and further propose Rectified Adam by analytically reducing the large variance. More details can be found in our paper.
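As a rough illustration of the rectification, the sketch below computes the variance rectification term following the formulas in the paper as we recall them. The threshold `rho_t > 4` is the paper's condition; released implementations may use a slightly different cutoff. The helper name `rectification` is hypothetical.

```python
import math


def rectification(t, beta2=0.999):
    """Return the rectification term r_t, or None when it is undefined.

    rho_inf = 2 / (1 - beta2) - 1
    rho_t   = rho_inf - 2 * t * beta2^t / (1 - beta2^t)
    r_t     = sqrt((rho_t - 4)(rho_t - 2) rho_inf
                   / ((rho_inf - 4)(rho_inf - 2) rho_t))   if rho_t > 4
    """
    rho_inf = 2.0 / (1.0 - beta2) - 1.0
    beta2_t = beta2 ** t
    rho_t = rho_inf - 2.0 * t * beta2_t / (1.0 - beta2_t)
    if rho_t <= 4.0:
        # Variance is intractable here; the adaptive term is skipped and
        # the update falls back to SGD with momentum.
        return None
    num = (rho_t - 4.0) * (rho_t - 2.0) * rho_inf
    den = (rho_inf - 4.0) * (rho_inf - 2.0) * rho_t
    return math.sqrt(num / den)


if __name__ == "__main__":
    for t in (1, 5, 100, 10000):
        print(t, rectification(t))
```

The term is undefined for the first few steps, stays small early on, and approaches 1 as `t` grows, so the rectified update gradually recovers the usual Adam step.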

Yes, the robustness of RAdam is not infinite. In our experiments, it works for a broader range of learning rates, but not for all learning rates.

Choice of the Original Transformer

We choose the original Transformer as our main object of study because, without warmup, it suffers from the most serious convergence problems in our experiments. With such serious problems, our controlled experiments can better verify our hypothesis (i.e., we demonstrate that Adam-2k / Adam-eps can avoid spurious local optima with minimal changes).

Sensitivity

We observe that the Transformer is sensitive to the architecture configuration, despite its efficiency and effectiveness. For example, depending on the position of the layer norm, the model may or may not require warmup to perform well. Intuitively, since the gradients of the attention layer could be sparser and the adaptive learning rates for smaller gradients have a larger variance, they are more sensitive. Nevertheless, we believe this problem deserves more in-depth analysis and is beyond the scope of our study.

Although the adaptive learning rate has a larger variance in the early stage, the exact magnitude depends on the model design. Thus, the convergence problem could be more serious for some models/tasks than for others. In our experiments, we observe that RAdam achieves consistent improvements over vanilla Adam. This verifies that the variance issue exists widely (since we can get better performance by fixing it).

To the best of our knowledge, the warmup heuristic was originally designed for large-minibatch SGD [0], based on the intuition that the network changes rapidly in the early stage. However, we find that this does not explain why Adam requires warmup. Notice that Adam-2k uses the same large learning rate but, with a better estimate of the adaptive learning rate, also avoids the convergence problems.

Why warmup sometimes also helps SGD still lacks theoretical support. FYI, when optimizing a simple 2-layer CNN with gradient descent, the theory of [1] could be used to show the benefits of warmup. Specifically, the lr must be

[0] Goyal et al., Accurate, Large Minibatch SGD: Training ImageNet in 1 Hour, 2017

[1] Du et al., Gradient Descent Learns One-hidden-layer CNN: Don’t be Afraid of Spurious Local Minima, 2017

- Directly replace the vanilla Adam with RAdam without changing any settings.

- Further tune hyper-parameters (including the learning rate) for better performance.

Note that in our paper, our major contribution is to identify why we need warmup for Adam. Although some researchers have successfully improved their model performance (user comments), directly plugging in RAdam may not result in an immediate performance boost. Based on our experience, replacing vanilla Adam with RAdam usually results in better performance; however, if warmup has already been employed and tuned in the baseline method, it is necessary to tune hyper-parameters for RAdam.

RAdam is very easy to implement. We provide PyTorch implementations here, while third-party ones can be found at:

We provide a simple introduction in Motivation, and more details can be found in our paper. There are some unofficial introductions available (with better writing), and they are listed here for reference only (contents/claims in our paper are more accurate):

We are happy to see that our algorithms are found to be useful by some users :-)

"...I tested it on ImageNette and quickly got new high accuracy scores for the 5 and 20 epoch 128px leaderboard scores, so I know it works... https://forums.fast.ai/t/meet-radam-imo-the-new-state-of-the-art-ai-optimizer/52656

— Less Wright August 15, 2019

Thought "sounds interesting, I'll give it a try" - top 5 are vanilla Adam, bottom 4 (I only have access to 4 GPUs) are RAdam... so far looking pretty promising! pic.twitter.com/irvJSeoVfx

— Hamish Dickson (@_mishy) August 16, 2019

RAdam works great for me! It’s good to several % accuracy for free, but the biggest thing I like is the training stability. RAdam is way more stable! https://medium.com/@mgrankin/radam-works-great-for-me-344d37183943

— Grankin Mikhail August 17, 2019

Please cite the following paper if you find our model useful. Thanks!

Liu, Liyuan, Haoming Jiang, Pengcheng He, Weizhu Chen, Xiaodong Liu, Jianfeng Gao, and Jiawei Han. "On the Variance of the Adaptive Learning Rate and Beyond." arXiv preprint arXiv:1908.03265 (2019).

@article{liu2019radam,
  title={On the Variance of the Adaptive Learning Rate and Beyond},
  author={Liu, Liyuan and Jiang, Haoming and He, Pengcheng and Chen, Weizhu and Liu, Xiaodong and Gao, Jianfeng and Han, Jiawei},
  journal={arXiv preprint arXiv:1908.03265},
  year={2019}
}