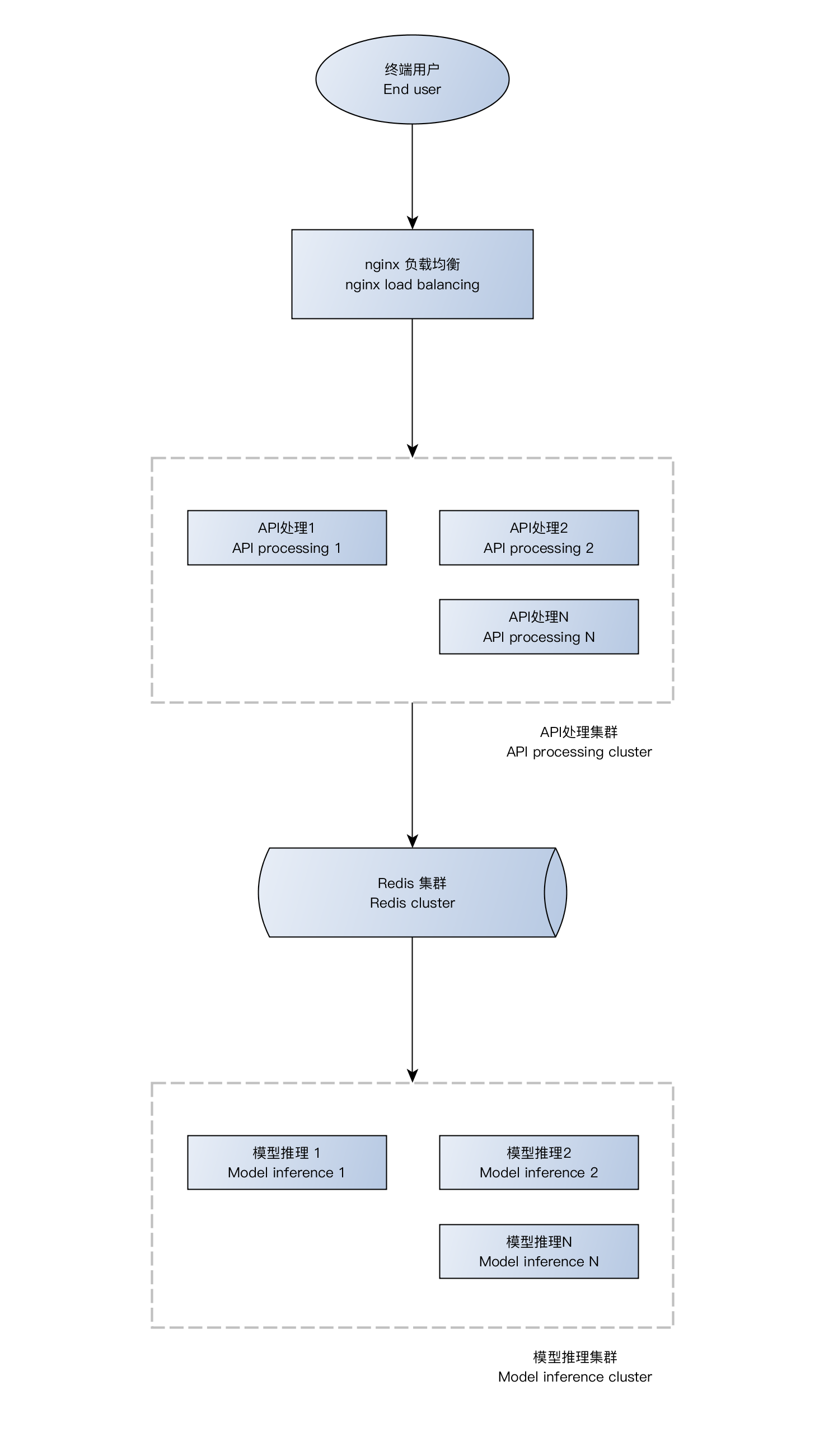

Deep learning models are usually deployed in the cloud, with inference services exposed through APIs. This framework provides the basic architectural components for deploying such APIs and is designed to achieve the following goals:

- The API processing module is decoupled from the model inference module, which reduces the risk that high concurrency blocks network handling or computation.

- The API processing module and the model inference module can be deployed separately in a distributed architecture and scaled horizontally.

- The framework is implemented in Go for execution efficiency and to simplify deployment and maintenance.

- Custom logic is supplied through callbacks while the common logic stays hidden inside the framework, so developers only need to focus on their own logic.

Other features:

- YAML is used for server-side configuration; in a distributed deployment, each component can be configured separately.

- API request signing supports the SHA256 and SM2 algorithms.

- Model examples: