Fine-tuning the SSIW model from the paper *The devil is in the labels: Semantic segmentation from sentences* by Wei Yin, Yifan Liu, Chunhua Shen, Anton van den Hengel and Baichuan Sun.

This fork adds code for training, evaluation and loss computation on the CMP Facade Dataset, building on previous work by Bartolo1024.

I have also added more suitable descriptions for the CMP Facade labels.

- Original Label Embedding: https://cloudstor.aarnet.edu.au/plus/s/gXaGsZyvoUwu97t

- Original Model CKPT: https://cloudstor.aarnet.edu.au/plus/s/AtYYaVSVVAlEwve

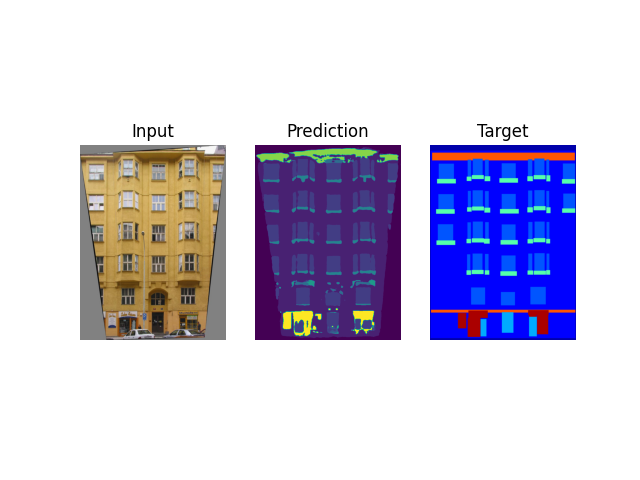

The model has been finetuned on the CMP Facade Dataset. The base version has been used for training while the extended version is reserved for testing.

Training and testing can be carried out in this Kaggle notebook.

The following experiments were carried out:

1. A baseline model was trained from the pretrained model with frozen layers, using the original HD Loss.
2. A model almost identical to model 1 was trained, but with a learnable temperature parameter added to the HD Loss function.
3. Because large models such as SegFormer tend to overfit small datasets, all model parameters were frozen except the patch embedding layer.
4. Model 2 was retrained with early stopping, this time monitoring the Dice loss on the validation set.
5. The setup of model 4 was reused with frozen model parameters.
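Experiment 4 stops training once the validation Dice loss stops improving. The stopping rule can be sketched in a framework-agnostic way as below, assuming lower Dice loss is better and that training halts after `patience` consecutive epochs without an improvement of at least `min_delta`. The `EarlyStopping` class and its parameters are illustrative names, not code from this repository, and the validation values in the usage lines are made up.

```python
class EarlyStopping:
    """Signal a stop when a monitored metric (lower is better) stops improving.

    patience: consecutive epochs without improvement to tolerate.
    min_delta: minimum decrease in the metric that counts as an improvement.
    """

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, value):
        """Record this epoch's validation value; return True if training should stop."""
        if value < self.best - self.min_delta:
            self.best = value
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Illustrative validation Dice losses, not results from these experiments.
val_dice = [0.50, 0.42, 0.40, 0.41, 0.40, 0.43, 0.39]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_dice):
    if stopper.step(epoch, loss):
        stopped_at = epoch
        break
```

In a real training loop, the checkpoint saved at `stopper.best_epoch` would be the one kept for testing.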

Batch size and image size were chosen to fit my available compute resources.

| Experiment | Test Dice loss (mean) | Test Dice loss (std) |
|---|---|---|
| 1 | 0.33610681215660615 | 0.10716346525468866 |
| 2 | 0.3210149120800851 | 0.10403830232390819 |
| 3 | 0.39679140630259846 | 0.12017719375905421 |
| 4 | 0.3386301629637417 | 0.10906255159950705 |
| 5 | 0.4029399963389886 | 0.11731406566119605 |
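The table reports the mean and standard deviation of a per-image Dice loss over the test set. The exact Dice formulation used in the notebook is not stated here, so the sketch below assumes a common multiclass variant: one minus the average per-class Dice coefficient computed on hard label maps, skipping classes absent from both prediction and ground truth, with the population standard deviation taken across images. `dice_loss` and `summarize` are hypothetical helper names.

```python
from statistics import mean, pstdev


def dice_loss(pred, target, num_classes, eps=1e-6):
    """Multiclass Dice loss (1 - mean per-class Dice) for one image.

    pred and target are flat sequences of integer class ids, one per pixel.
    Classes absent from both maps are skipped so they do not affect the score.
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must contain the same number of pixels")
    scores = []
    for c in range(num_classes):
        p = [x == c for x in pred]
        t = [y == c for y in target]
        inter = sum(a and b for a, b in zip(p, t))
        total = sum(p) + sum(t)
        if total == 0:
            continue  # class c appears in neither map
        scores.append((2 * inter + eps) / (total + eps))
    return 1.0 - mean(scores) if scores else 0.0


def summarize(pairs, num_classes):
    """Mean and population std of the per-image Dice loss over (pred, target) pairs."""
    losses = [dice_loss(p, t, num_classes) for p, t in pairs]
    return mean(losses), pstdev(losses)


# Toy 2-class example: two tiny "images" flattened to 1-D label lists.
pairs = [([0, 0, 1, 1], [0, 1, 1, 1]), ([0, 1], [0, 1])]
m, s = summarize(pairs, num_classes=2)
```

Lower values are better: a perfect prediction gives a Dice loss of 0.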

In all experiments, the Dice loss on the test set is close to the Dice loss observed on the validation set during training. Adding the learnable temperature parameter to the HD Loss function improves performance marginally (mean Dice loss 0.3210 in experiment 2 versus 0.3361 in experiment 1). Freezing model parameters hurts test performance: experiments 3 and 5 have the highest mean Dice losses (0.3968 and 0.4029).
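The HD Loss itself is defined in the SSIW code and is not reproduced here. As a stand-in, the sketch below shows how a learnable temperature typically works in an embedding-based classification loss: each pixel embedding is compared with every label embedding by cosine similarity, the similarities are divided by a temperature τ = exp(`log_tau`), and a softmax cross-entropy is taken against the true label. Learning `log_tau` instead of τ keeps the temperature positive. This is a generic temperature-scaled cross-entropy, not the HD Loss; the function names and toy embeddings are hypothetical, and the gradient is written out by hand only to keep the example dependency-free (an autograd framework would normally compute it).

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def temperature_ce(pixel_embs, labels, label_embs, log_tau):
    """Return (mean loss, dLoss/dlog_tau) for a temperature-scaled softmax CE.

    logits_k = cos(pixel, label_k) / tau, with tau = exp(log_tau).
    For one pixel with true label y, similarities s_k and softmax probs p_k:
        dL/dlog_tau = (s_y - sum_k p_k * s_k) / tau
    """
    tau = math.exp(log_tau)
    total_loss = total_grad = 0.0
    for emb, y in zip(pixel_embs, labels):
        sims = [cosine(emb, lab) for lab in label_embs]
        logits = [s / tau for s in sims]
        m = max(logits)  # subtract the max for a numerically stable softmax
        exps = [math.exp(z - m) for z in logits]
        z_sum = sum(exps)
        probs = [x / z_sum for x in exps]
        total_loss += m + math.log(z_sum) - logits[y]
        total_grad += (sims[y] - sum(p * s for p, s in zip(probs, sims))) / tau
    n = len(labels)
    return total_loss / n, total_grad / n


# Toy setup: two label embeddings and two pixels, each closest to its true label.
label_embs = [[1.0, 0.0], [0.0, 1.0]]
pixel_embs = [[0.9, 0.1], [0.2, 0.8]]
labels = [0, 1]

log_tau = 0.0  # tau = 1.0 at initialisation
loss, grad = temperature_ce(pixel_embs, labels, label_embs, log_tau)
log_tau -= 0.5 * grad  # one plain gradient-descent step on the temperature
new_loss, _ = temperature_ce(pixel_embs, labels, label_embs, log_tau)
```

When every pixel's true label already has the highest similarity, lowering τ sharpens the softmax and reduces the loss, so the gradient with respect to `log_tau` is positive and gradient descent shrinks the temperature. With a fixed τ, the sharpness of the softmax would instead have to be tuned by hand.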

Possible improvements could come from:

- Non-destructive image augmentations

- Combined Dice & HD Loss Function

- Hyperparameter search for learning rate
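One reading of non-destructive augmentation is a geometric transform that keeps image and label mask aligned without invalidating any label. A horizontal flip is a natural candidate for facades, which are often close to left-right symmetric. The sketch below assumes images and masks are stored as nested lists of rows (pixels in an image row may be RGB tuples); `hflip` and `paired_hflip` are hypothetical helpers, not part of this repository.

```python
import random


def hflip(rows):
    """Mirror a 2-D grid (a list of rows) from left to right."""
    return [list(reversed(row)) for row in rows]


def paired_hflip(image, mask, p=0.5, rng=random):
    """With probability p, flip image and mask together so labels stay aligned."""
    if rng.random() < p:
        return hflip(image), hflip(mask)
    return image, mask


# Usage with a seeded generator for reproducibility.
rng = random.Random(0)
image = [[(255, 0, 0), (0, 0, 255)]]  # one row of two RGB pixels
mask = [[1, 2]]                        # matching per-pixel class ids
aug_image, aug_mask = paired_hflip(image, mask, p=0.5, rng=rng)
```

Photometric changes such as brightness or contrast jitter would be applied to the image only, since they do not move pixels and the mask stays valid.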