DEMO Project Page Paper HuggingFace

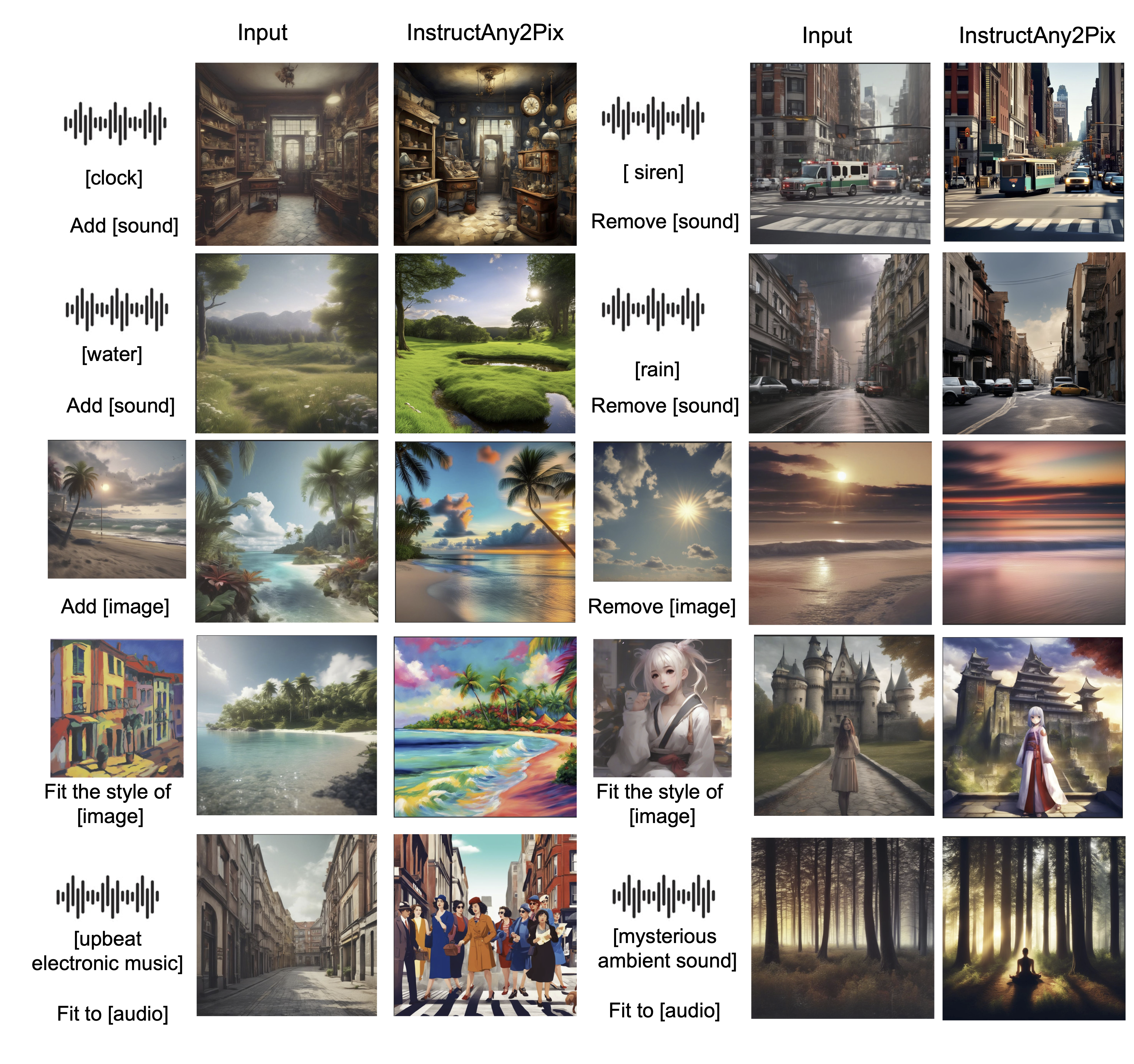

PyTorch implementation of InstructAny2Pix: Flexible Visual Editing via Multimodal Instruction Following

Shufan Li, Harkanwar Singh, Aditya Grover

University of California, Los Angeles

conda create --name instructany2pix python=3.10

conda activate instructany2pix

conda install ffmpeg

pip3 install torch torchvision torchaudio

pip3 install -r requirements.txt

pip install git+https://github.com/facebookresearch/ImageBind.git --no-deps
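After running the commands above, a quick sanity check can confirm that the environment is usable. The following is a minimal sketch, not part of this repository: it assumes the installed packages are importable as `torch`, `torchvision`, `torchaudio`, and `imagebind`, and that `ffmpeg` is on the `PATH`. The function name `check_environment` is hypothetical.

```python
import importlib.util
import shutil

# Import names assumed to correspond to the packages installed above.
REQUIRED_MODULES = ["torch", "torchvision", "torchaudio", "imagebind"]


def check_environment(modules=REQUIRED_MODULES):
    """Return a dict mapping each requirement to True if it was found."""
    # find_spec locates a top-level module without importing it.
    status = {name: importlib.util.find_spec(name) is not None for name in modules}
    # ffmpeg is a command-line tool, so look for it on the PATH instead.
    status["ffmpeg"] = shutil.which("ffmpeg") is not None
    return status


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name:12s} {'ok' if ok else 'MISSING'}")
```

Any entry reported as `MISSING` suggests rerunning the corresponding install command inside the activated `instructany2pix` environment.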

- 2023:

- Dec 4: Added support for LCM and IP-Adapter.

- Dec 30: Added support for Subject Consistency.

- 2024:

- Feb 3: Added support for CFG control.

- May 23: We temporarily unlisted the model checkpoint due to safety concerns regarding LAION. We plan to retrain the model with clean data in the near future.

- Jun 30: We released a model retrained on filtered, clean data. See the new demo notebook for use cases.

- Jul 20: We released the evaluation dataset on Hugging Face.

Checkpoints of the diffusion model and the LLM are available at https://huggingface.co/jacklishufan/instructany2pix_retrained/.
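One possible way to fetch the checkpoints programmatically is through the `huggingface_hub` package. The sketch below assumes that package is installed and uses its `snapshot_download` function. The helper names and the `checkpoints` target directory are hypothetical, and this README does not state where `serve.py` or `demo.ipynb` expect the files, so the location may need adjusting.

```python
from urllib.parse import urlparse

CHECKPOINT_URL = "https://huggingface.co/jacklishufan/instructany2pix_retrained/"


def repo_id_from_url(url):
    """Extract the 'owner/name' repository id from a Hugging Face model URL."""
    parts = [p for p in urlparse(url).path.split("/") if p]
    if len(parts) < 2:
        raise ValueError(f"not a model repository URL: {url}")
    return "/".join(parts[:2])


def download_checkpoints(local_dir="checkpoints"):
    """Download the whole repository snapshot into local_dir (hypothetical path)."""
    # Imported lazily so repo_id_from_url works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id=repo_id_from_url(CHECKPOINT_URL),
        local_dir=local_dir,
    )


# Usage (requires network access):
#   path = download_checkpoints()
```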

To serve the Gradio app, run

python serve.py

Alternatively, see demo.ipynb for a notebook demo.

See the Hugging Face link at the top.

We are working on improving the robustness of the method. In the meantime, there are several ways to control the output.

Try increasing alpha and norm, and decreasing h_0.

Try decreasing alpha, norm, and h_0.

Try increasing refinement, h_2, and steps.

Try changing the seed and increasing h_1.
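The adjustments above can be tried systematically by keeping the settings in one place and nudging them one tip at a time. The following is only an illustrative sketch: the field names are taken from the tips, but the default values, the `EditSettings` class, the `nudge` helper, and the scaling factor of 1.25 are all placeholders, and the sketch does not show how the values are passed to the model.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class EditSettings:
    # Field names come from the tips above; every default is a placeholder.
    alpha: float = 1.0
    norm: float = 1.0
    h_0: float = 1.0
    h_1: float = 1.0
    h_2: float = 1.0
    refinement: float = 1.0
    steps: int = 50
    seed: int = 0


def nudge(settings, increase=(), decrease=(), factor=1.25):
    """Return a copy with the named fields scaled up or down by `factor`."""
    changes = {}
    for name in increase:
        changes[name] = getattr(settings, name) * factor
    for name in decrease:
        changes[name] = getattr(settings, name) / factor
    # Keep integer fields (such as steps) integral after scaling.
    for name, value in list(changes.items()):
        if isinstance(getattr(settings, name), int):
            changes[name] = max(1, round(value))
    return replace(settings, **changes)


base = EditSettings()
# First tip: increase alpha and norm, decrease h_0.
tip_1 = nudge(base, increase=("alpha", "norm"), decrease=("h_0",))
# Last tip: change the seed and increase h_1.
tip_4 = replace(nudge(base, increase=("h_1",)), seed=base.seed + 1)
```

Because `EditSettings` is frozen, each call returns a new object, which makes it easy to compare outputs from the baseline and from each adjusted configuration side by side.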

We make use of the following codebases for subject consistency: Segment Anything and GroundingDINO.