
Explore Data Science Academy is an educational institution in the information systems development field, helping South Africa's youth do amazing things. This repository is a testament to that.

Inquiries are an administrative burden on companies. A substantial amount of time is spent drafting responses to each one, and these responses are often repetitive.

Hera aims to address this issue by providing an information retrieval assistant that acts as the first point of contact before a member of staff is involved.

Hera helps you by freeing up time to focus on product delivery!

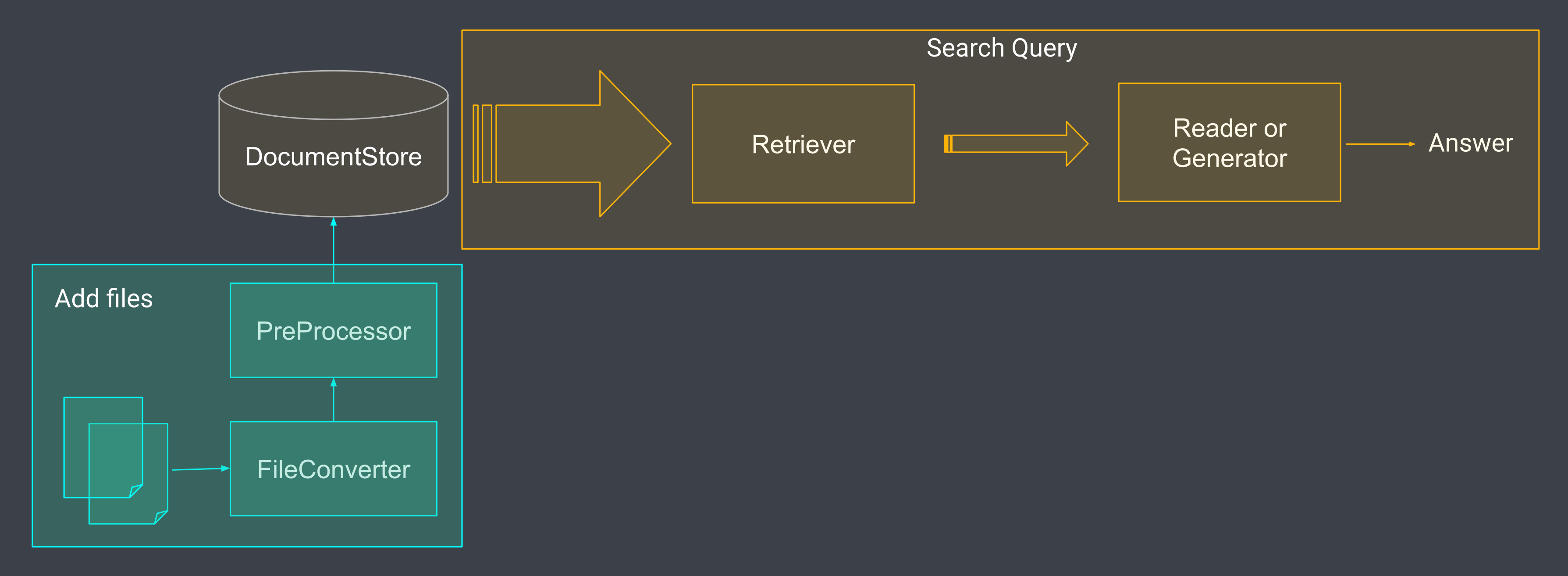

- Hera is built on a Haystack backend. Haystack is a tool that provides a pipeline for Closed Domain Question Answering using the latest pretrained models. This makes it ideal for information retrieval without the financial burden of training a language model.

- Hera scrapes your website and loads your documents into the DocumentStore for you. You can change the default URL by editing it in `webscraper.py`.
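To make the Closed Domain Question Answering idea concrete, here is a minimal, dependency-free sketch of the retriever-reader pattern that Haystack pipelines follow: a retriever narrows the DocumentStore down to a few candidate documents, and an extractive reader picks the answer from them. This is a toy illustration, not Haystack's API: the keyword-overlap retriever stands in for ElasticSearch ranking, the sentence-picking "reader" stands in for a pretrained transformer model, and the documents and the names `retrieve` and `read` are hypothetical.

```python
from __future__ import annotations

import re

# Made-up sample documents; in Hera this role is played by the ElasticSearch DocumentStore.
DOCUMENTS = [
    "The data science course runs for twelve months and is offered online.",
    "Applications for the internship programme close at the end of March.",
    "Support queries can be sent to the help desk by email.",
]


def tokenize(text: str) -> set[str]:
    """Lower-case word tokens; a crude stand-in for an ElasticSearch analyzer."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by keyword overlap with the question (toy stand-in for BM25)."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]


def read(question: str, candidates: list[str]) -> str:
    """Return the candidate sentence with the most overlap (toy stand-in for a reader model)."""
    q = tokenize(question)
    sentences = [
        s.strip()
        for doc in candidates
        for s in re.split(r"(?<=[.!?])\s+", doc)
        if s.strip()
    ]
    return max(sentences, key=lambda s: len(q & tokenize(s)))


question = "How long does the data science course run?"
print(read(question, retrieve(question, DOCUMENTS)))
```

A real reader returns a short span (e.g. "twelve months") with a confidence score rather than a whole sentence; the two-stage split is what keeps the expensive model from having to read every document.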

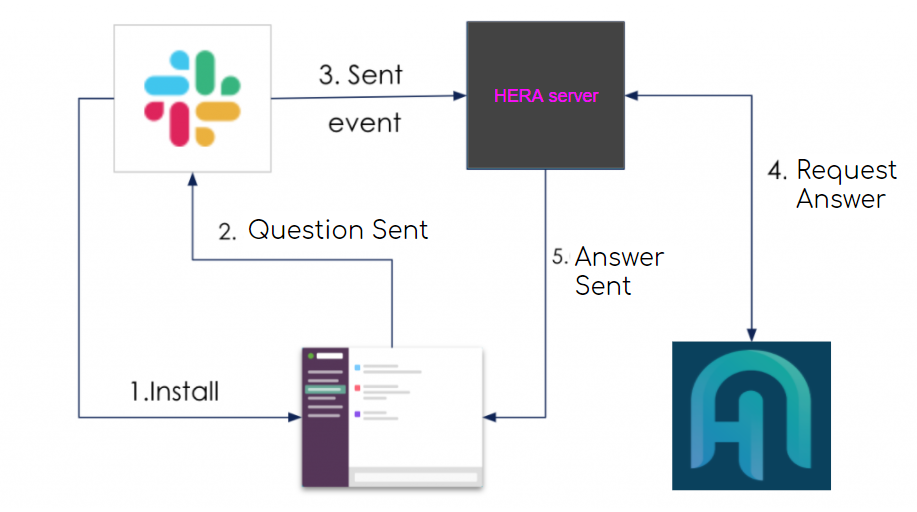

Hera is integrated into Slack as below. All that is left is to embed the conversation into your website with a tool like Chatwoot (coming soon).

The following tools were used in this project:

```bash
# Clone this project
$ git clone https://github.com/Bnkosi/odin.git

# Access
$ cd odin

# Install dependencies
$ pip install farm-haystack==0.4.9
```

Before starting 🏁, you need to have a Slack App, a Slack Signing Secret and a Slack Bot Token. You cannot proceed without these. You will also need to install Docker to run the ElasticSearch container, and Ngrok to connect the bot to the internet.
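The Slack Signing Secret is what lets a bot confirm that incoming requests really come from Slack. Slack signs each request with HMAC-SHA256 over the string `v0:{timestamp}:{body}` and sends the result in the `X-Slack-Signature` header, with the timestamp in `X-Slack-Request-Timestamp`. The sketch below reimplements that check with the standard library only; the Slack SDK your bot uses may already do this for you, and the helper name `verify_slack_signature` and the five-minute replay window are illustrative choices, not project code.

```python
import hashlib
import hmac
import time
from typing import Optional

MAX_AGE_SECONDS = 60 * 5  # reject requests older than five minutes (replay protection)


def verify_slack_signature(signing_secret: str, timestamp: str, body: bytes,
                           signature: str, now: Optional[float] = None) -> bool:
    """Return True if `signature` matches Slack's v0 HMAC-SHA256 scheme for this request."""
    now = time.time() if now is None else now
    if abs(now - int(timestamp)) > MAX_AGE_SECONDS:
        return False
    basestring = b"v0:" + timestamp.encode() + b":" + body
    expected = "v0=" + hmac.new(signing_secret.encode(), basestring, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(expected, signature)


# Usage with made-up values; in practice the secret would come from SLACK_SIGNING_SECRET
secret = "xxxxx"
ts = "1600000000"
body = b"token=abc&text=hello"
good = "v0=" + hmac.new(secret.encode(), b"v0:" + ts.encode() + b":" + body, hashlib.sha256).hexdigest()
print(verify_slack_signature(secret, ts, body, good, now=1600000010))      # True
print(verify_slack_signature(secret, ts, body, "v0=bad", now=1600000010))  # False
```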

```bash
# Set the signing secret
$ export SLACK_SIGNING_SECRET="xxxxx"

# Set the bot token
$ export SLACK_BOT_TOKEN="xoxb-xxxx"
```

H.E.R.A works by scraping your website and loading this data into an ElasticSearch Document Store. You can ask questions immediately, but to improve accuracy, a model should be trained.
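The scraping step can be pictured as: fetch each page, strip the markup, and turn the visible text into a document record that the DocumentStore can index. The sketch below covers only the conversion step, using the standard library's `html.parser`; it is not the project's `webscraper.py`. The `{"text": ..., "meta": {...}}` record shape is an assumption modelled on the dictionaries Haystack 0.x DocumentStores accept, and `TextExtractor` and `page_to_document` are hypothetical names.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text from an HTML page, skipping <script> and <style> contents."""

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks = []  # visible text fragments in document order

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self._skip_depth:
            self.chunks.append(text)


def page_to_document(url: str, html: str) -> dict:
    """Turn one scraped page into a document record (assumed Haystack 0.x dict shape)."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return {"text": " ".join(parser.chunks), "meta": {"url": url}}


sample = ("<html><head><style>p{}</style></head><body><h1>Fees</h1>"
          "<p>Ask us about fees.</p><script>x=1</script></body></html>")
print(page_to_document("https://example.com/fees", sample))
# {'text': 'Fees Ask us about fees.', 'meta': {'url': 'https://example.com/fees'}}
```

Keeping the page URL in `meta` means an answer can later be traced back to the page it came from.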

```bash
# A. Scrape Website
$ python3 pipeline.py

# B. If you have previously run the step above, start here

# 1. Start docker container
$ docker ps -a # view available containers
$ docker start hera # start container

# 2. Write documents to ElasticSearch
# Change the document location in add_doc.py, then run
$ python3 add_doc.py

# 3. Start the Question Answering API
$ gunicorn rest_api.application:app -b 0.0.0.0:8000 -k uvicorn.workers.UvicornWorker -t 300

# 4. Start Hera
$ python3 hera.py
```

You will need to set up an HTTP tunneler to allow Slack to communicate with your local server.

```bash
# Navigate to the ngrok installation folder and run the following.
$ ./ngrok http 3000
```

Copy the resulting HTTPS forwarding address and use it to update the Request URL under Event Subscriptions in your Slack App, for example:

https://6fdef00d53b6.ngrok.io/slack/events
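When you save the Request URL, Slack first sends a `url_verification` request to it and expects the `challenge` value echoed back; after that, real events arrive wrapped in an `event_callback` payload. The function below is a framework-free sketch of that dispatch logic, not code taken from `hera.py`; the name `handle_slack_event`, the focus on `app_mention` events and the returned dictionaries are illustrative assumptions.

```python
import json


def handle_slack_event(raw_body: str) -> dict:
    """Return the JSON-serialisable response for one Slack Events API request."""
    payload = json.loads(raw_body)
    if payload.get("type") == "url_verification":
        # Slack verifies the Request URL by expecting its challenge string back
        return {"challenge": payload["challenge"]}
    if payload.get("type") == "event_callback":
        event = payload.get("event", {})
        if event.get("type") == "app_mention":
            # Hypothetical hook: this is where the question would go to the QA API
            return {"question": event.get("text", "")}
    return {}


print(handle_slack_event('{"type": "url_verification", "challenge": "abc123"}'))
# {'challenge': 'abc123'}
```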

Open your Slack workspace, invite the bot to a channel and ask it a few questions. You can watch a demo of the bot here.

From time to time you might need to add documents that specifically address issues not covered by the website. In those cases, add your .txt files to a folder and run the script below. Remember to change the file paths.

```bash
# Change the document location and run
$ python3 add_doc.py
```

When you are ready to improve the model's accuracy, you will need to train it on annotated data. Documents can be annotated manually using the Deepset annotation tool.
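Annotated data for extractive QA is commonly stored in the SQuAD format, where each answer is recorded as its text plus the character offset (`answer_start`) at which it appears in the context. Assuming your annotation export follows that layout (check the file the tool actually produces), the sketch below builds one such record and checks that every offset points at its answer, a cheap sanity test before training. The sample context and the helper names are made up.

```python
def make_squad_record(title: str, context: str, qa_pairs: list) -> dict:
    """Build a minimal SQuAD-style dataset from (question, answer) pairs."""
    qas = []
    for i, (question, answer) in enumerate(qa_pairs):
        start = context.find(answer)
        if start == -1:
            raise ValueError(f"answer {answer!r} not found in context")
        qas.append({
            "id": str(i),
            "question": question,
            "answers": [{"text": answer, "answer_start": start}],
        })
    return {"data": [{"title": title, "paragraphs": [{"context": context, "qas": qas}]}]}


def offsets_are_valid(dataset: dict) -> bool:
    """Check that every answer_start actually points at its answer text."""
    for article in dataset["data"]:
        for para in article["paragraphs"]:
            ctx = para["context"]
            for qa in para["qas"]:
                for ans in qa["answers"]:
                    start = ans["answer_start"]
                    if ctx[start:start + len(ans["text"])] != ans["text"]:
                        return False
    return True


context = "The course runs for twelve months and is offered online."
dataset = make_squad_record("faq", context, [("How long is the course?", "twelve months")])
print(offsets_are_valid(dataset))  # True
```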

Document annotation is tedious and requires a substantial investment of time to generate and label documents. To solve this, we have built two in-progress scripts.

- Generator - The generator reads all the text files and attempts to generate a question for every word (token). Notably, most of these questions will be useless because they are too general or were generated from invalid tokens.

- Pretrainer - The pretrainer then attempts to answer every question from the generator. It is important to set `NO_ANS_BOOST` fairly high in order to filter out useless questions. After the questions are answered, a dataset is generated and the model is ready to be trained.
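The generate-then-filter idea can be illustrated with a toy version. Here the "generator" turns every token into a templated question, and the "pretrainer" keeps a question only if the reader's best answer score beats its no-answer score plus a boost, which is why a higher `NO_ANS_BOOST` makes the filter stricter. In the real scripts both steps use models (Haystack's `FARMReader` exposes a comparable `no_ans_boost` parameter); the question template, the scores and the names below are made-up assumptions.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class Prediction:
    answer: str
    answer_score: float     # reader's score for its best answer span
    no_answer_score: float  # reader's score for "no answer"


def generate_questions(text: str) -> list[str]:
    """Toy generator: one templated question per token (most will be useless)."""
    return [f"What is {tok}?" for tok in re.findall(r"[A-Za-z]+", text)]


def keep_question(pred: Prediction, no_ans_boost: float) -> bool:
    """Keep a question only if its best answer beats the boosted no-answer score."""
    return pred.answer_score > pred.no_answer_score + no_ans_boost


# Made-up reader scores for two generated questions
preds = {
    "What is Hera?": Prediction("an information retrieval assistant", 8.0, 2.0),
    "What is the?": Prediction("the", 1.5, 1.0),
}
print(generate_questions("Hera is an assistant")[:2])  # ['What is Hera?', 'What is is?']
for boost in (0.0, 3.0):
    kept = [q for q, p in preds.items() if keep_question(p, boost)]
    print(boost, kept)
# 0.0 ['What is Hera?', 'What is the?']
# 3.0 ['What is Hera?']
```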

Once the dataset has been downloaded from the annotation tool, run the following to train the model:

```bash
# Train
$ python3 trainer.py
```

✔️ Deployment - Chatwoot has been identified as the preferred tool to integrate the Slack window into websites.

✔️ Retrieval-Augmented Generation - Currently the model works by selecting the most appropriate span of text and presenting it as the answer (extractive QA). The next step is to generate novel answers from the same documents, which would make the bot more human-like and thus more trustworthy.

✔️ Generative Pretraining - Annotating with the annotation tool is labour-intensive and costly. Generative Pretraining aims to simulate a human asking and answering questions about (annotating) documents. The code is present but still needs fine-tuning.

✔️ Context Management - Users ask ambiguous questions, such as: "How much is this course?". Context management allows the bot to know which course the user is talking about, or to ask for clarification where there is uncertainty.

✔️ Feedback - Question logging still needs to be built in, as well as notifications when answers aren't found. Notifications must strike a balance between always notifying staff and always answering the question.
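One way to approach the Feedback item is to log every question together with the top answer's confidence, and to notify staff only when that confidence falls below a threshold; the threshold then becomes the knob that balances "always notify" against "always answer". This is a hypothetical design sketch, not existing project code: the `ask` and `notify` callables, the 0.5 default threshold and the log format are all assumptions.

```python
import logging
from typing import Callable, Optional, Tuple

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("hera.feedback")


def answer_with_feedback(question: str,
                         ask: Callable[[str], Tuple[Optional[str], float]],
                         notify: Callable[[str], None],
                         threshold: float = 0.5) -> Optional[str]:
    """Answer if confident enough; otherwise notify staff and return None."""
    answer, confidence = ask(question)
    log.info("question=%r confidence=%.2f", question, confidence)  # every question is logged
    if answer is None or confidence < threshold:
        notify(question)  # hand the question over to a member of staff
        return None
    return answer


def fake_ask(question: str) -> Tuple[Optional[str], float]:
    """Stand-in for the QA API: confident only about course length."""
    if "long" in question:
        return "twelve months", 0.9
    return None, 0.0


alerts = []
print(answer_with_feedback("How long is the course?", fake_ask, alerts.append))  # twelve months
print(answer_with_feedback("Where is the campus?", fake_ask, alerts.append))     # None
print(alerts)  # ['Where is the campus?']
```

Raising `threshold` sends more questions to staff; lowering it lets the bot answer more often at the risk of weaker answers.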

This project is under an Apache-2.0 license. For more details, see the LICENSE file.

Made with ❤️ by Bulelani Nkosi and Caryn Pialt

Special Thanks ❤️❤️ Pfano Phungo