This repository provides the original implementation of Machine Unlearning Six-Way Evaluation for Language Models by Weijia Shi*, Jaechan Lee*, Yangsibo Huang*, Sadhika Malladi, Jieyu Zhao, Ari Holtzman, Daogao Liu, Luke Zettlemoyer, Noah A. Smith, and Chiyuan Zhang. (*Equal contribution)

Website | Leaderboard | MUSE-News Benchmark | MUSE-Books Benchmark

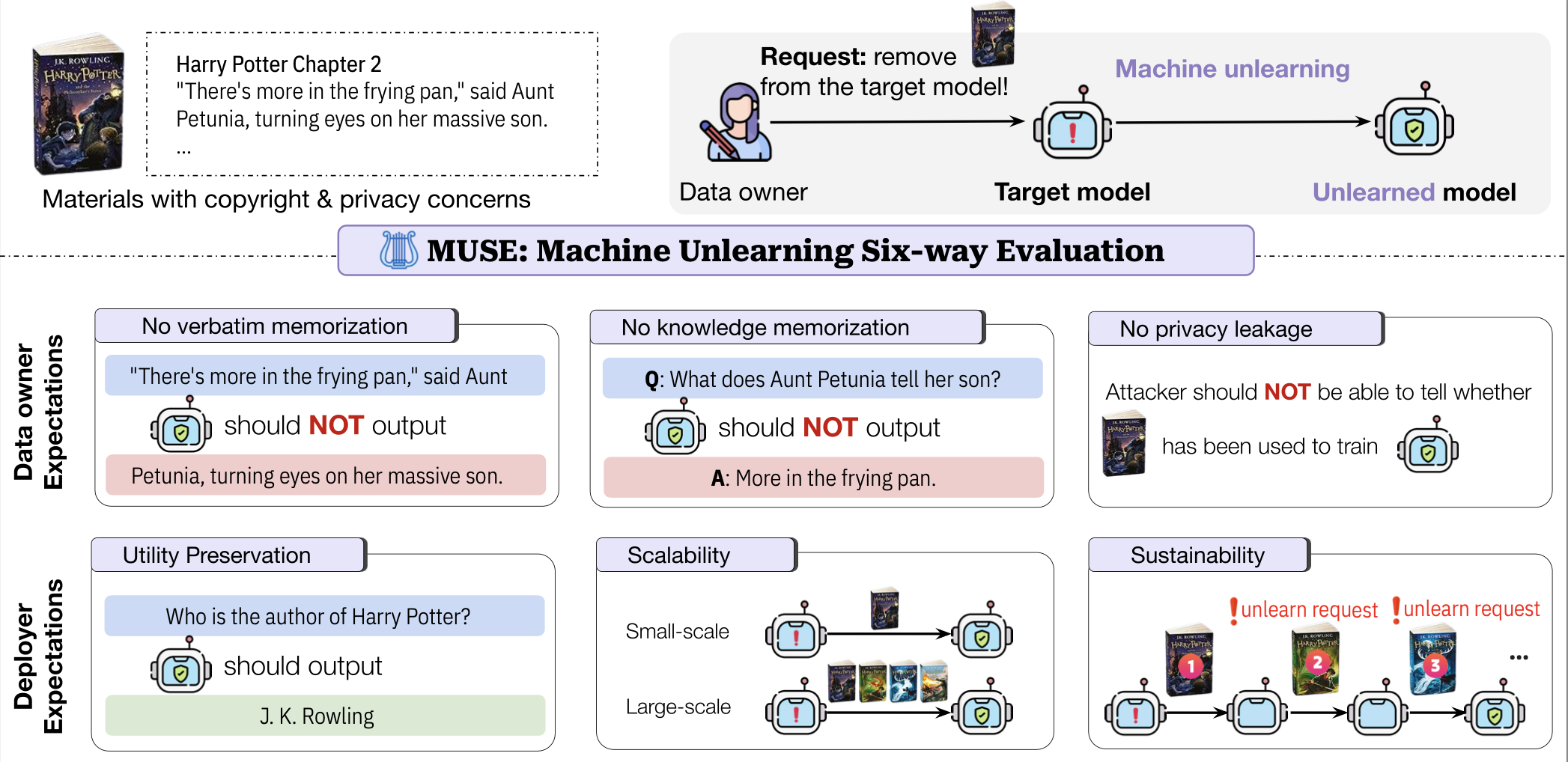

MUSE is a comprehensive machine unlearning evaluation benchmark that assesses six desirable properties for unlearned models: (1) no verbatim memorization, (2) no knowledge memorization, (3) no privacy leakage, (4) utility preservation for non-removed data, (5) scalability with respect to removal requests, and (6) sustainability over sequential unlearning requests.

⭐ If you find our implementation and paper helpful, please consider citing our work ⭐ :

@misc{shi2023detecting,
    title={Detecting Pretraining Data from Large Language Models},
    author={Weijia Shi and Anirudh Ajith and Mengzhou Xia and Yangsibo Huang and Daogao Liu and Terra Blevins and Danqi Chen and Luke Zettlemoyer},
    year={2023},
    eprint={2310.16789},
    archivePrefix={arXiv},
    primaryClass={cs.CL}
}

To create a conda environment for Python 3.10, run:

conda env create -f environment.yml
conda activate muse_bench

Two corpora, News and Books, and their associated target models are available as follows:

| Domain | Target Model for Unlearning | Dataset |
|---|---|---|
| News | Target model | Dataset |
| Books | Target model | Dataset |

Before proceeding, load all the data from HuggingFace to the root of this repository by running the following command:

python load_data.py

To unlearn the target model using one of our baseline methods, run unlearn.py in the baselines folder. The example scripts scripts/unlearn_news.sh and scripts/unlearn_books.sh, both in the baselines folder, demonstrate the usage of unlearn.py. Here is an example:

algo="ga"
CORPUS="news"

python unlearn.py \
    --algo $algo \
    --model_dir $TARGET_DIR --tokenizer_dir 'meta-llama/Llama-2-7b-hf' \
    --data_file $FORGET --retain_data_file $RETAIN \
    --out_dir "./ckpt/$CORPUS/$algo" \
    --max_len $MAX_LEN --epochs $EPOCHS --lr $LR \
    --per_device_batch_size $PER_DEVICE_BATCH_SIZE

- algo: Unlearning algorithm to run (ga, ga_gdr, ga_klr, npo, npo_gdr, npo_klr, or tv).
- model_dir: Directory of the target model.
- tokenizer_dir: Directory of the tokenizer.
- data_file: Forget set.
- retain_data_file: Retain set for GDR/KLR regularizations if required by the algorithm.
- out_dir: Directory to save the unlearned model (default: ckpt).
- max_len: Maximum input length (default: 2048).
- per_device_batch_size, epochs, lr: Hyperparameters.
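To run several algorithms with the same settings, the command above can be assembled programmatically. The following is a minimal sketch, not part of the repository: `build_unlearn_cmd` is a hypothetical helper, the hyperparameter defaults other than `max_len` are arbitrary placeholders, and the data paths in the usage section are made up. Only the flag names and algorithm names are taken from the description above.

```python
import shlex

# Algorithm names as listed in the argument description above.
ALGOS = ["ga", "ga_gdr", "ga_klr", "npo", "npo_gdr", "npo_klr", "tv"]


def build_unlearn_cmd(algo, corpus, target_dir, forget, retain,
                      max_len=2048, epochs=1, lr=1e-5,
                      per_device_batch_size=2):
    """Return the argv list for one unlearn.py run.

    epochs, lr and per_device_batch_size are placeholder values, not
    recommended settings.
    """
    if algo not in ALGOS:
        raise ValueError(f"unknown algorithm: {algo}")
    return [
        "python", "unlearn.py",
        "--algo", algo,
        "--model_dir", target_dir,
        "--tokenizer_dir", "meta-llama/Llama-2-7b-hf",
        "--data_file", forget,
        "--retain_data_file", retain,
        "--out_dir", f"./ckpt/{corpus}/{algo}",
        "--max_len", str(max_len),
        "--epochs", str(epochs),
        "--lr", str(lr),
        "--per_device_batch_size", str(per_device_batch_size),
    ]


if __name__ == "__main__":
    # Hypothetical paths; replace them with your own model and data locations.
    for algo in ("ga", "npo"):
        cmd = build_unlearn_cmd(algo, "news", "path/to/target_model",
                                "path/to/forget.txt", "path/to/retain.txt")
        print(shlex.join(cmd))
```

The sketch only prints the commands. Passing each list to `subprocess.run(cmd, cwd="baselines", check=True)` would be one way to execute them, assuming the working directory layout described above.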

Resulting models are saved in the ckpt folder as shown:

ckpt
├── news/
│   ├── ga/
│   │   ├── checkpoint-102
│   │   ├── checkpoint-204
│   │   ├── checkpoint-306
│   │   └── ...
│   └── npo/
│       └── ...
└── books/
    ├── ga
    └── ...
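Given this layout, collecting the checkpoints of one run for later evaluation can be automated. The sketch below is an illustration under the assumption that checkpoint folders are named `checkpoint-<step>` as in the tree above; `list_checkpoints` and the `<algo>_step<step>` naming scheme are hypothetical and not part of the repository.

```python
from pathlib import Path


def list_checkpoints(ckpt_root, corpus, algo):
    """Return (name, path) pairs for checkpoint-* folders, ordered by step."""
    algo_dir = Path(ckpt_root) / corpus / algo
    found = []
    for child in algo_dir.glob("checkpoint-*"):
        step = child.name.split("-", 1)[1]
        # Keep only directories whose suffix is a plain step number.
        if child.is_dir() and step.isdigit():
            found.append((int(step), child))
    found.sort(key=lambda pair: pair[0])
    return [(f"{algo}_step{step}", str(path)) for step, path in found]


if __name__ == "__main__":
    pairs = list_checkpoints("ckpt", "news", "ga")
    # One unique name per model directory.
    print("--model_dirs", *[path for _, path in pairs])
    print("--names", *[name for name, _ in pairs])
```

Sorting by the numeric step rather than by folder name keeps `checkpoint-1020` after `checkpoint-204`.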

To evaluate your unlearned model(s), run eval.py from the root of this repository with the following command-line arguments:

- --model_dirs: A list of directories containing the unlearned models. These can be either HuggingFace model directories or local storage paths.
- --names: A unique name assigned to each unlearned model in --model_dirs. The length of --names should match the length of --model_dirs.
- --corpus: The corpus to use for evaluation. Options are news or books.
- --out_file: The name of the output file. The file will be in CSV format, with each row corresponding to an unlearning method from --model_dirs, and columns representing the metrics specified by --metrics.
- --tokenizer_dir (Optional): The directory of the tokenizer. Defaults to meta-llama/Llama-2-7b-hf, which is the default tokenizer for LLaMA.
- --metrics (Optional): The metrics to evaluate. Options are verbmem_f (VerbMem Forget), privleak (PrivLeak), knowmem_f (KnowMem Forget), and knowmem_r (KnowMem Retain, i.e., Utility). Defaults to evaluating all these metrics.
- --temp_dir (Optional): The directory for saving intermediate computations. Defaults to temp.

For example, run the following command, replacing the placeholder model directories and names with your own:

python eval.py \
    --model_dirs "jaechan-repo/model1" "jaechan-repo/model2" \
    --names "model1" "model2" \
    --corpus books \
    --out_file "out.csv"

You may want to customize the evaluation for various reasons, such as:

- Your unlearning method is applied at test-time (e.g., interpolates the logits of multiple model outputs), so there is no saved checkpoint for your unlearned model.

- You want to use a different dataset for evaluation.

- You want to use an MIA metric other than the default one (Min-40%) for the PrivLeak calculation, such as PPL (perplexity).

- You want to change the number of tokens greedily decoded from the model when computing VerbMem or KnowMem.


Customization is supported through the eval_model function implemented in eval.py. This function runs the evaluation for a single unlearned model and returns a Python dictionary that corresponds to one row of the CSV described above. Any additional logic (such as loading the model from a local path, evaluating multiple models, or saving the output dictionary locally) is expected to be implemented by the client.

The function accepts the following arguments:

- model: An instance of LlamaForCausalLM. Any HuggingFace model with self.forward and self.generate implemented should suffice.
- tokenizer: An instance of LlamaTokenizer.
- metrics (Optional): Same as earlier.
- corpus (Optional): The corpus to run the evaluation on, either news or books. Defaults to None, which means the client must provide all the data required by the calculations of metrics.
- privleak_auc_key (Optional): The MIA metric to use for calculating PrivLeak. Defaults to forget_holdout_Min-40%. The first keyword (forget) corresponds to the non-member data (options: forget, retain, holdout), the second keyword (holdout) corresponds to the member data (options: forget, retain, holdout), and the last keyword (Min-40%) corresponds to the metric name (options: Min-20%, Min-40%, Min-60%, PPL, PPL/lower, PPL/zlib).
- verbmem_agg_key (Optional): The Rouge-like metric to use for calculating VerbMem. Defaults to mean_rougeL. Other options include max_rougeL, mean_rouge1, and max_rouge1.
- verbmem_max_new_tokens (Optional): The maximum number of new tokens for VerbMem evaluation. Defaults to 128.
- knowmem_agg_key (Optional): The Rouge-like metric to use for calculating KnowMem. Defaults to mean_rougeL.
- knowmem_max_new_tokens (Optional): The maximum number of new tokens for KnowMem evaluation. Defaults to 32.
- verbmem_forget_file, privleak_forget_file, privleak_retain_file, privleak_holdout_file, knowmem_forget_qa_file, knowmem_forget_qa_icl_file, knowmem_retain_qa_file, knowmem_retain_qa_icl_file (Optional): Specifying these file names overrides the corresponding data files. For example, setting corpus='news' and specifying privleak_retain_file would only override privleak_retain_file; all other files default to those associated with the news corpus.
- temp_dir (Optional): Same as earlier.
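As an illustration of how these arguments fit together, the sketch below composes a privleak_auc_key from its three parts and passes it to eval_model. It rests on several assumptions: the script runs from the repository root so that `from eval import eval_model` resolves, `metrics` accepts a list of metric names, and the checkpoint loads through the `transformers` Auto classes. `make_privleak_auc_key` and `evaluate_local_checkpoint` are hypothetical helpers, not part of the repository.

```python
# Option values copied from the argument description above.
MIA_SPLITS = ("forget", "retain", "holdout")
MIA_METRICS = ("Min-20%", "Min-40%", "Min-60%", "PPL", "PPL/lower", "PPL/zlib")


def make_privleak_auc_key(first, second, metric):
    """Compose a key such as 'forget_holdout_Min-40%' and validate its parts."""
    if first not in MIA_SPLITS or second not in MIA_SPLITS:
        raise ValueError(f"splits must be one of {MIA_SPLITS}")
    if metric not in MIA_METRICS:
        raise ValueError(f"metric must be one of {MIA_METRICS}")
    return f"{first}_{second}_{metric}"


def evaluate_local_checkpoint(model_path, corpus="news"):
    """Load one checkpoint and evaluate it (hypothetical helper)."""
    # Imported lazily so the key helper works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer
    from eval import eval_model  # assumption: run from the repository root

    model = AutoModelForCausalLM.from_pretrained(model_path)
    tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-2-7b-hf")
    # Assumption: metrics is a list of the metric names listed earlier.
    return eval_model(
        model=model,
        tokenizer=tokenizer,
        metrics=["privleak", "verbmem_f"],
        corpus=corpus,
        privleak_auc_key=make_privleak_auc_key("forget", "holdout", "PPL"),
        verbmem_max_new_tokens=64,
    )


if __name__ == "__main__":
    print(make_privleak_auc_key("forget", "holdout", "Min-40%"))
```

Device placement and precision are left out of the sketch; whether eval_model handles them is not stated above, so they may need to be set when loading the model.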

Submit the output CSV file generated by eval.py to our HuggingFace leaderboard. You are additionally asked to specify the corpus name (either news or books) that your model(s) were evaluated on, the name of your organization, and your email.
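Before submitting, it may help to check that the CSV has one complete row per model. The sketch below is an illustration only: it assumes the metric columns are named after the --metrics options (verbmem_f, privleak, knowmem_f, knowmem_r), which is not confirmed above, and `summarize_results` and `find_missing` are hypothetical helpers.

```python
import csv

# Assumption: column headers match the metric option names.
KNOWN_METRICS = ("verbmem_f", "privleak", "knowmem_f", "knowmem_r")


def summarize_results(csv_path):
    """Return (rows, metric_columns) read from an eval.py output CSV."""
    with open(csv_path, newline="") as handle:
        reader = csv.DictReader(handle)
        rows = list(reader)
        columns = reader.fieldnames or []
    metric_columns = [c for c in columns if c in KNOWN_METRICS]
    return rows, metric_columns


def find_missing(rows, metric_columns):
    """Return (row_index, column) pairs whose metric value is empty."""
    return [
        (index, column)
        for index, row in enumerate(rows)
        for column in metric_columns
        if (row.get(column) or "").strip() == ""
    ]


if __name__ == "__main__":
    rows, metric_columns = summarize_results("out.csv")
    print(f"{len(rows)} row(s), metrics: {metric_columns}")
    for index, column in find_missing(rows, metric_columns):
        print(f"row {index} has no value for {column}")
```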