Authors: Jaehong Yoon*, Shoubin Yu*, Mohit Bansal

- (Optional) Creating conda environment

```bash
conda create -n RACCooN python=3.10.13
conda activate RACCooN
```

- Build from source

```bash
pip install -r requirements.txt
```

Our VPLM dataset is built on ROVI videos. Please refer to the ROVI project page to download the raw and inpainted videos.

Visual Encoder: we adopt a pre-trained ViT-G (1B); the codebase downloads the model automatically.

Video-LLM: we build our MLLM on PG-Video-LLaVA. Please refer to its project homepage to set up the Video-LLM.

Diffusion Model: we fine-tune our video inpainting model from StableDiffusion2.0-inpainting. Please download this model and fine-tune it as described in our paper.

| Dataset | Task |
|---|---|
| VPLM | Multi-Object Description |
| VPLM | Single-Object Description |
| VPLM | Layout Prediction |

| Dataset | Task |
|---|---|
| VPLM | Video Generation |

We test our model on:

We provide RACCooN training and inference script examples as follows.

```bash
cd v2p
sh scripts/v2p/finetune/vplm.sh
```

```bash
cd v2p
sh scripts/v2p/inference/vlpm.sh
```

We provide RACCooN training and inference script examples as follows. Our code is built upon MGIE. Please set up the environment following the MGIE instructions.

```bash
cd p2v
sh train.sh
```

We provide Jupyter notebook scripts for P2V inference.

- Release ckpts and VPLM dataset

- Video Feature Extraction Examples

- V2P and P2V training

- Incorporate Grounding Modules

The code is built upon PG-Video-LLaVA, MGIE, GroundingDINO, and LGVI.

Please cite our paper if you use our models in your works:

@article{yoon2024raccoon,

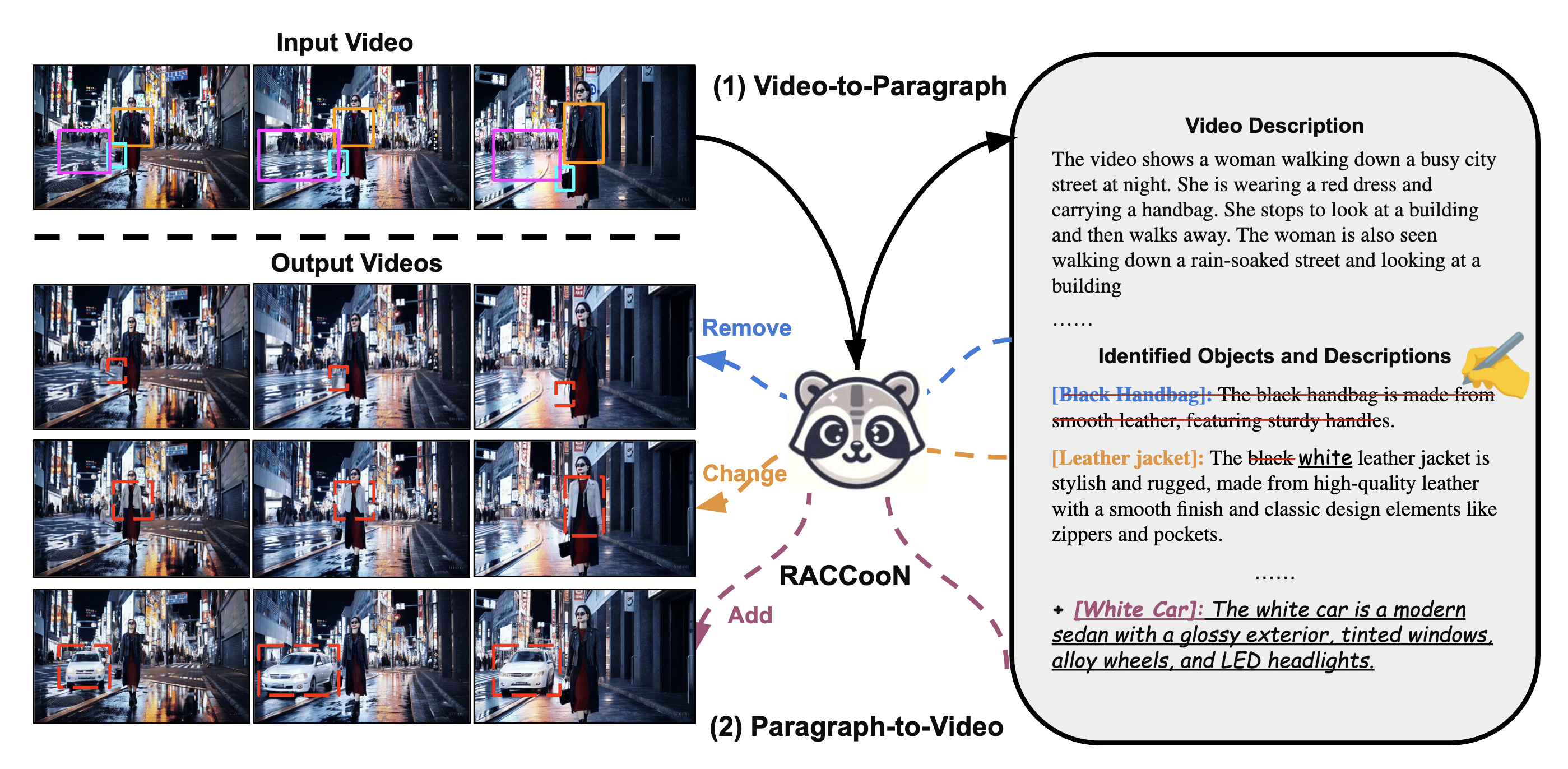

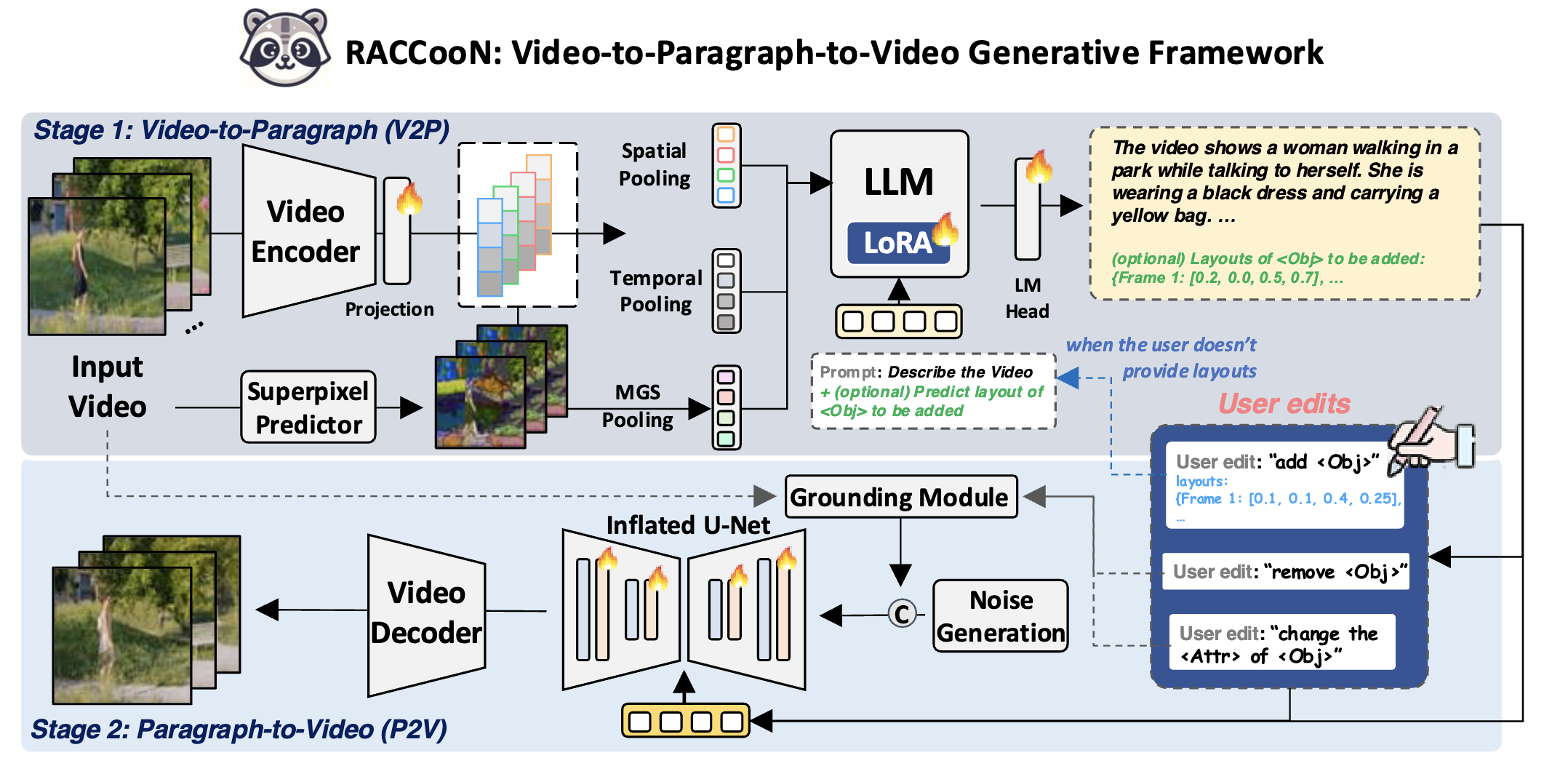

title={RACCooN: A Versatile Instructional Video Editing Framework with Auto-Generated Narratives},

author={Yoon, Jaehong and Yu, Shoubin and Bansal, Mohit},

journal={arXiv preprint arXiv:2405.18406},

year={2024}

}