This repository includes a Python-based procedure for the following tasks:

- 1) Convert Matlab MVIEW-compatible EMA (electromagnetic articulography) data (

.mat) into pickle.pklformat - 2) Convert from

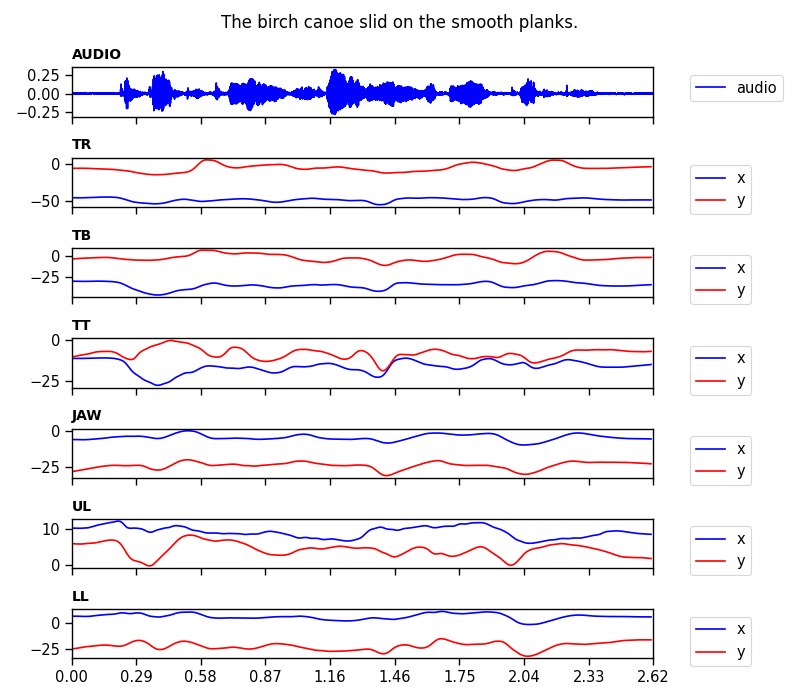

.pklback to MVIEW-compatible.mat. - 3) Visualize articulatory trajectories with the corresponding waveform.

Note that this procedure is based on the Haskins IEEE rate comparison dataset (Link). Compatibility with other EMA data formats from different machines (AG500, AG501) or operating systems (Windows) has not been tested yet. You may need to configure `mviewer.py` for your own data.

- (1) Convert `.mat` to `.pkl`

      from pprint import pprint
      from mviewer import Viewer

      mm = Viewer()
      mm.load('example/F01_B01_S01_F01_N.mat')  # load .mat file
      mm.mat2py(save_file='example/F01_B01_S01_F01_N.pkl')  # convert .mat to .pkl & save as .pkl file
      pprint(mm.data)

      {'AUDIO': {...},
       'JAW': {...},
       'JAWL': {...},
       'LL': {...},
       'ML': {...},
       'TB': {...},
       'TR': {...},
       'TT': {...},
       'UL': {...}}

- (2) Convert `.pkl` to `.mat`

      import pickle
      from mviewer import Viewer

      with open('example/F01_B01_S01_F01_N.pkl', 'rb') as f:  # load .pkl file
          data = pickle.load(f)

      mm = Viewer()
      mm.py2mat('example/F01_B01_S01_F01_N_new.mat', data, save=True)  # convert .pkl to .mat & save as .mat file

- (3) Visualize

      from mviewer import Viewer

      mm = Viewer()
      mm.load('example/F01_B01_S01_F01_N.mat')  # load .mat file
      mm.mat2py(save_file='example/F01_B01_S01_F01_N.pkl')  # convert .mat to .pkl & save as .pkl file
      mm.plot(channel_list=['AUDIO', 'TR', 'TB', 'TT'], show=True)

- (4) Update meta information (phone/word labels and time info in TextGrids)

      from mviewer import Viewer

      mm = Viewer()
      mm.load('example/F01_B01_S01_F01_N.mat')  # load .mat file
      dictionary = mm.mat2py()  # outputs dictionary instead of saving it
      dictionary = mm.update_meta(dictionary, 'example/F01_B01_S01_F01_N.TextGrid')
      # => label information updated!

- (5) Update audio information (when you have modified your audio signal; e.g., amplitude normalization)

      from mviewer import Viewer

      mm = Viewer()
      mm.load('example/F01_B01_S01_F01_N.mat')  # load .mat file
      dictionary = mm.mat2py()  # outputs dictionary instead of saving it
      dictionary = mm.update_audio(dictionary, 'example/F01_B01_S01_F01_N.wav')
      # => AUDIO information updated!

- If you want to specify meta information (channel names or field names in struct), you can do so (see `class Viewer` in `mviewer.py`).

- If you are not sure about the meta information, you can specify `ignore_meta=True` when initiating `class Viewer`.
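Step (5) above assumes the audio file has already been modified. As a minimal sketch of one such modification, the block below peak-normalizes a 16-bit mono PCM WAV using only the Python standard library. The helper names (`read_pcm16`, `write_pcm16`, `peak_normalize`), the 0.9 target peak, and the synthetic test tone are illustrative assumptions and are not part of `mviewer.py`; how `update_audio` reads the file internally is not covered here.

```python
import math
import os
import struct
import tempfile
import wave


def read_pcm16(path):
    """Read a 16-bit mono PCM WAV and return (sample_rate, list_of_ints)."""
    with wave.open(path, "rb") as wav:
        if wav.getnchannels() != 1 or wav.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit mono PCM")
        srate = wav.getframerate()
        frames = wav.readframes(wav.getnframes())
    n = len(frames) // 2
    # WAV samples are little-endian signed 16-bit integers.
    return srate, list(struct.unpack("<%dh" % n, frames))


def write_pcm16(path, srate, samples):
    """Write a list of ints as a 16-bit mono PCM WAV."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(srate)
        wav.writeframes(struct.pack("<%dh" % len(samples), *samples))


def peak_normalize(samples, peak=0.9):
    """Scale samples so the largest magnitude equals `peak` of full scale."""
    largest = max((abs(s) for s in samples), default=0)
    if largest == 0:
        return list(samples)  # silent signal: nothing to scale
    gain = peak * 32767 / largest
    return [int(round(s * gain)) for s in samples]


# Hypothetical demo data: a quiet 220 Hz tone, 0.1 s long, sampled at 16 kHz.
tmp_dir = tempfile.mkdtemp()
raw_path = os.path.join(tmp_dir, "quiet.wav")
norm_path = os.path.join(tmp_dir, "quiet_norm.wav")
srate = 16000
tone = [int(1000 * math.sin(2 * math.pi * 220 * n / srate)) for n in range(srate // 10)]
write_pcm16(raw_path, srate, tone)

# Normalize and write a new file; the original is left untouched.
srate_in, samples = read_pcm16(raw_path)
write_pcm16(norm_path, srate_in, peak_normalize(samples))
srate_out, normalized = read_pcm16(norm_path)
```

The path of the normalized file could then be passed to `mm.update_audio(dictionary, ...)` in the same way as the `.wav` file in step (5).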

The re-organized Python dictionary, which holds both the EMA and AUDIO data, has the following structure:

    {'AUDIO': {
        'NAME': {...},
        'SRATE': {...},
        'SIGNAL': {...},
        'SOURCE': {...},    # eg. S07_sen01_HS01_B01_R01_0004_01
        'SENTENCE': {...},  # eg. The birch canoe slid on the smooth planks.
        'WORDS': {...},     # eg. {'LABEL': ..., 'OFFS': ... }
        'PHONES': {...},    # eg. {'LABEL': ..., 'OFFS': ... }
        'LABELS': {...},    # eg. {'NAME': ..., 'OFFSET': ..., 'VALUE': ..., 'HOOK': ... }
     },
     'JAW': {
        'NAME': {...},
        'SRATE': {...},
        'SIGNAL': {...},
        'SOURCE': [],
        'SENTENCE': [],
        'WORDS': [],
        'PHONES': [],
        'LABELS': [],
     },
     'JAWL': {
        'NAME': {...},
        'SRATE': {...},
        'SIGNAL': {...},
        'SOURCE': [],
        'SENTENCE': [],
        'WORDS': [],
        'PHONES': [],
        'LABELS': [],
     },
     'LL': {
        'NAME': {...},
        'SRATE': {...},
        'SIGNAL': {...},
        'SOURCE': [],
        'SENTENCE': [],
        'WORDS': [],
        'PHONES': [],
        'LABELS': [],
     },
     'TB': {
        'NAME': {...},
        'SRATE': {...},
        'SIGNAL': {...},
        'SOURCE': [],
        'SENTENCE': [],
        'WORDS': [],
        'PHONES': [],
        'LABELS': [],
     },
     'TR': {
        'NAME': {...},
        'SRATE': {...},
        'SIGNAL': {...},
        'SOURCE': [],
        'SENTENCE': [],
        'WORDS': [],
        'PHONES': [],
        'LABELS': [],
     },
     'TT': {
        'NAME': {...},
        'SRATE': {...},
        'SIGNAL': {...},
        'SOURCE': [],
        'SENTENCE': [],
        'WORDS': [],
        'PHONES': [],
        'LABELS': [],
     },
     'UL': {
        'NAME': {...},
        'SRATE': {...},
        'SIGNAL': {...},
        'SOURCE': [],
        'SENTENCE': [],
        'WORDS': [],
        'PHONES': [],
        'LABELS': [],
     }}

- This procedure was tested on macOS (xx) as of 2020-12-31
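To illustrate how the dictionary layout described above might be used, here is a small self-contained sketch. It builds a toy dictionary with the same field names, round-trips it through `pickle`, and derives each channel's duration from `SIGNAL` and `SRATE`. The helper names, the toy sample rates, the use of plain lists for `SIGNAL`, and the assumption that the first axis of `SIGNAL` is time are all illustrative; the real data produced by `mviewer.py` may differ.

```python
import pickle

# Field names taken from the documented layout; only AUDIO carries label info.
META_FIELDS = ("SOURCE", "SENTENCE", "WORDS", "PHONES", "LABELS")


def make_channel(name, srate, signal, **meta):
    """Build one channel entry (hypothetical helper, not part of mviewer)."""
    channel = {"NAME": name, "SRATE": srate, "SIGNAL": signal}
    for field in META_FIELDS:
        channel[field] = meta.get(field, [])  # empty list when no info is given
    return channel


def duration_sec(channel):
    """Duration in seconds, assuming one SIGNAL entry per sample."""
    return len(channel["SIGNAL"]) / channel["SRATE"]


def labelled_channels(data):
    """Names of channels that have at least one non-empty meta field."""
    return [name for name, ch in data.items() if any(ch[f] for f in META_FIELDS)]


# Toy stand-in data: one second of silent audio and one second of a 3-D sensor.
data = {
    "AUDIO": make_channel(
        "AUDIO", 16000, [0.0] * 16000,
        SENTENCE="The birch canoe slid on the smooth planks.",
    ),
    "TT": make_channel("TT", 100, [[0.0, 0.0, 0.0] for _ in range(100)]),
}

# Round-trip through pickle, as the .pkl files in this repository would be.
restored = pickle.loads(pickle.dumps(data))

durations = {name: duration_sec(ch) for name, ch in restored.items()}
print(durations)                    # {'AUDIO': 1.0, 'TT': 1.0}
print(labelled_channels(restored))  # ['AUDIO']
```

Comparing durations across channels in this way is a quick check that the audio and the articulatory channels cover the same time span even though their sample rates differ.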

# Data

Haskins IEEE dataset

# Python

python==3.7.4

numpy==1.18.5

scipy==1.4.1

matplotlib==3.3.3

seaborn==0.11.0

tgt==1.4.4

# Matlab

(optional) mview (developed by Mark Tiede @ Haskins Labs)

# Misc

(optional) ffmpeg

- Support for IEEE

- Fix `.animate()` method

- Support for XRMB

- Support for mngu0

- Support for MOCHA-TIMIT

- Provide support for data compression (bz2)
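The bz2 compression item above is listed as planned, not implemented. As a rough sketch of what it could look like, the standard-library `bz2` module can wrap the same `pickle` calls used elsewhere in this README. The function names `save_compressed` and `load_compressed`, the `.pkl.bz2` suffix, and the toy data are assumptions made for illustration only.

```python
import bz2
import os
import pickle
import tempfile


def save_compressed(obj, path):
    """Pickle `obj` into a bz2-compressed file."""
    with bz2.open(path, "wb") as f:
        pickle.dump(obj, f, protocol=pickle.HIGHEST_PROTOCOL)


def load_compressed(path):
    """Load an object from a bz2-compressed pickle file."""
    with bz2.open(path, "rb") as f:
        return pickle.load(f)


# Toy stand-in for one EMA channel (values and shapes are made up).
toy = {
    "TT": {
        "NAME": "TT",
        "SRATE": 100,
        "SIGNAL": [[0.0, 0.0, 0.0] for _ in range(1000)],
    }
}

path = os.path.join(tempfile.mkdtemp(), "toy.pkl.bz2")
save_compressed(toy, path)
restored = load_compressed(path)

# Compare the on-disk size with the size of an uncompressed pickle.
raw_size = len(pickle.dumps(toy, protocol=pickle.HIGHEST_PROTOCOL))
packed_size = os.path.getsize(path)
print(restored == toy, packed_size < raw_size)
```

How much space this saves on real recordings depends on the data; the toy signal here is highly repetitive and compresses far better than measured trajectories would.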

- The example files in the `example` folder (i.e., `F01_B01_S01_R01_N.mat` and `M01_B01_S01_R01_N.mat`) were retrieved from the original data repository (Link) without any modifications, for demonstration only (version 3 of the GNU General Public License).

- 2020-12-31: first created