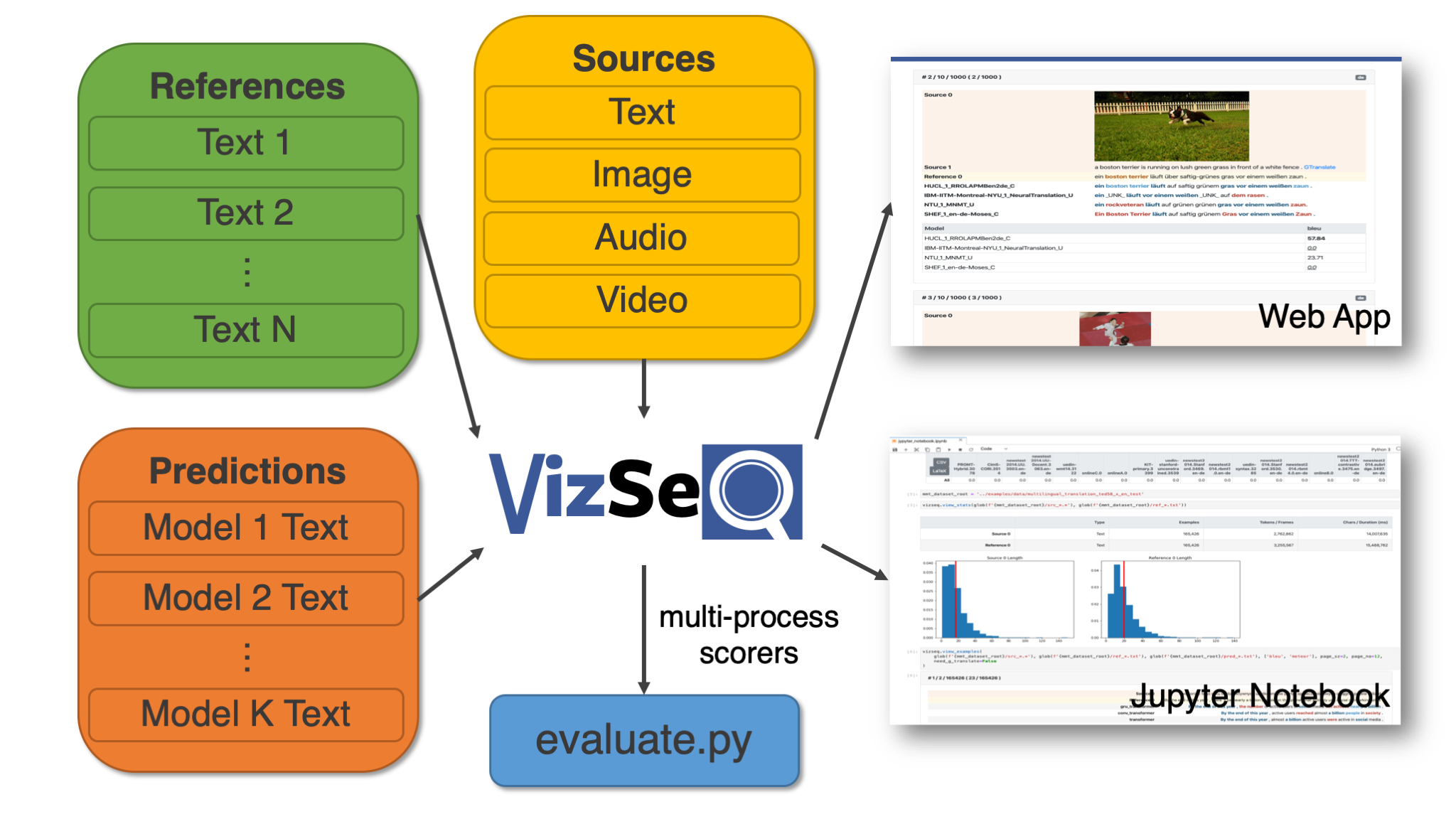

VizSeq is a Python toolkit for visual analysis on text generation tasks like machine translation, summarization, image captioning, speech translation and video description. It takes multi-modal sources, text references as well as text predictions as inputs, and analyzes them visually in Jupyter Notebook or a built-in Web App (the former has Fairseq integration). VizSeq also provides a collection of multi-process scorers as a normal Python package.


| Source | Example Tasks |
|---|---|
| Text | Machine translation, text summarization, dialog generation, grammatical error correction, open-domain question answering |
| Image | Image captioning, image question answering, optical character recognition |
| Audio | Speech recognition, speech translation |
| Video | Video description |
| Multimodal | Multimodal machine translation |

Scoring is accelerated with multi-processing/multi-threading.
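To illustrate the general idea of spreading sentence-level scoring over a worker pool, here is a minimal standard-library sketch. It is not VizSeq's implementation: `unigram_precision` is a toy metric and `score_in_parallel` is a hypothetical helper, both introduced only for this example, and whitespace tokenization is an assumption.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def unigram_precision(hypothesis: str, reference: str) -> float:
    """Toy metric (illustration only): share of hypothesis tokens found in the reference."""
    hypo_tokens = hypothesis.split()  # assumption: whitespace tokenization
    if not hypo_tokens:
        return 0.0
    ref_tokens = set(reference.split())
    return sum(tok in ref_tokens for tok in hypo_tokens) / len(hypo_tokens)


def score_in_parallel(
    metric: Callable[[str, str], float],
    hypotheses: Sequence[str],
    references: Sequence[str],
    n_workers: int = 2,
) -> List[float]:
    """Score each (hypothesis, reference) pair on a worker pool, keeping input order."""
    if len(hypotheses) != len(references):
        raise ValueError("hypotheses and references must have the same length")
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # Executor.map returns results in input order, whatever order workers finish in.
        return list(pool.map(metric, hypotheses, references))


hypotheses = ["the cat sat on the mat", "a dog barks"]
references = ["the cat is on the mat", "the dog barked"]
scores = score_in_parallel(unigram_precision, hypotheses, references)
print(scores)
```

Threads keep the sketch simple, but in CPython they rarely speed up CPU-bound metrics. `concurrent.futures.ProcessPoolExecutor` offers the same `map` interface and is the usual choice for that case, provided the metric is a picklable, module-level function.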

| Type | Metrics |
|---|---|
| N-gram-based | BLEU (Papineni et al., 2002), NIST (Doddington, 2002), METEOR (Banerjee et al., 2005), TER (Snover et al., 2006), RIBES (Isozaki et al., 2010), chrF (Popović et al., 2015), GLEU (Wu et al., 2016), ROUGE (Lin, 2004), CIDEr (Vedantam et al., 2015), WER |
| Embedding-based | LASER (Artetxe and Schwenk, 2018), BERTScore (Zhang et al., 2019) |
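As a concrete reference point for one of the metrics above, word error rate (WER) is commonly defined as the word-level edit distance between reference and hypothesis divided by the reference length. The sketch below follows that textbook definition with whitespace tokenization. It is an illustration under those assumptions, not VizSeq's scorer, and the names `edit_distance` and `wer` are chosen only for this example.

```python
from typing import List


def edit_distance(ref_tokens: List[str], hyp_tokens: List[str]) -> int:
    """Word-level Levenshtein distance; substitution, insertion and deletion each cost 1."""
    # previous[j] = distance between the reference prefix seen so far and hyp_tokens[:j].
    previous = list(range(len(hyp_tokens) + 1))
    for i, ref_tok in enumerate(ref_tokens, start=1):
        current = [i]  # turning i reference tokens into an empty hypothesis takes i deletions
        for j, hyp_tok in enumerate(hyp_tokens, start=1):
            substitution = previous[j - 1] + (ref_tok != hyp_tok)
            deletion = previous[j] + 1      # reference token missing from the hypothesis
            insertion = current[j - 1] + 1  # extra token in the hypothesis
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1]


def wer(reference: str, hypothesis: str) -> float:
    """WER = edit distance / number of reference tokens (assumes whitespace tokenization)."""
    ref_tokens = reference.split()
    if not ref_tokens:
        raise ValueError("reference must contain at least one token")
    return edit_distance(ref_tokens, hypothesis.split()) / len(ref_tokens)


# One substitution (sat -> sit) and one deletion (the) against 6 reference words.
print(wer("the cat sat on the mat", "the cat sit on mat"))
```

Because the denominator is the reference length, WER can exceed 1.0 when the hypothesis contains many extra words.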

VizSeq requires Python 3.6+ and currently runs on Unix/Linux and macOS/OS X. It will support Windows as well in the future.

You can install VizSeq from PyPI:

$ pip install vizseq

Or install it from source:

$ git clone https://github.com/facebookresearch/vizseq

$ cd vizseq

$ pip install -e .

Download example data:

$ git clone https://github.com/facebookresearch/vizseq

$ cd vizseq

$ bash get_example_data.sh

Launch the web server:

$ python -m vizseq.server --port 9001 --data-root ./examples/data

Then navigate to the following URL in your web browser:

http://localhost:9001

VizSeq is licensed under MIT. See the LICENSE file for details.

Please cite as:

@inproceedings{wang2019vizseq,
  title = {VizSeq: A Visual Analysis Toolkit for Text Generation Tasks},
  author = {Changhan Wang and Anirudh Jain and Danlu Chen and Jiatao Gu},
  booktitle = {Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing: System Demonstrations},
  year = {2019},
}

Changhan Wang (changhan@fb.com), Jiatao Gu (jgu@fb.com)