This is our modified version of IMPALA, the deep reinforcement learning algorithm. We extend the original code to support:

- Distributed Multiple-learner-multiple-actor training (see here for a brief description)

- OpenAI Gym compatibility

- More Neural Network architectures

- Additional algorithm arguments (including gradient clipping)
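This README does not say exactly how the gradient-clipping argument (`--gradients_clipping`) is applied. A common choice in TensorFlow code is global-norm clipping, as done by `tf.clip_by_global_norm`: all gradients are rescaled jointly whenever their combined L2 norm exceeds the threshold. The sketch below assumes that rule and uses plain Python lists instead of tensors; `clip_by_global_norm` here is an illustrative function of ours, not this repository's implementation.

```python
import math


def clip_by_global_norm(grads, clip_norm):
    """Rescale a list of gradient vectors so their joint L2 norm is <= clip_norm.

    ASSUMPTION: global-norm clipping, following the formula used by
    tf.clip_by_global_norm:  g_i * clip_norm / max(global_norm, clip_norm).
    clip_norm must be positive.
    """
    # Joint norm over every entry of every gradient vector.
    global_norm = math.sqrt(sum(x * x for g in grads for x in g))
    # scale == 1.0 when the norm is already within the threshold.
    scale = clip_norm / max(global_norm, clip_norm)
    clipped = [[x * scale for x in g] for g in grads]
    return clipped, global_norm


# Two "variables" whose joint gradient norm is sqrt(30^2 + 40^2) = 50.
grads = [[30.0, 0.0], [40.0]]
clipped, norm = clip_by_global_norm(grads, 40.0)
print(norm)     # 50.0
print(clipped)  # every entry scaled by 40 / 50 = 0.8
```

Clipping by the global norm (rather than per tensor) preserves the direction of the overall update and only shrinks its length.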

Install the following Python packages:

Note: the original IMPALA code is written in Python 2.x, so we recommend creating a Python 2.x virtual environment and pip-installing the packages above. Alternatively, you can do everything in Docker; see the description here.

There are two ways to run the training code, described below.

First, follow the distributed TensorFlow convention to run experiment.py as actors; then follow the Horovod convention to run experiment.py as learner(s) with mpirun.
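To make the two-step launch concrete, here is a hedged sketch that only builds the command lines and executes nothing. The flag names `--job_name`, `--task`, `--actor_hosts` and `--learner_hosts` are hypothetical placeholders, not confirmed options of experiment.py; `-np` and `-H host:slots` are standard Open MPI options of the kind shown in Horovod's documentation.

```python
def build_launch_commands(actor_hosts, learner_hosts, extra_flags=()):
    """Build argv lists for the actors and the learner(s); nothing is executed.

    NOTE: --job_name, --task, --actor_hosts and --learner_hosts are
    HYPOTHETICAL flag names used for illustration; check experiment.py.
    """
    common = ["--actor_hosts=" + ",".join(actor_hosts),
              "--learner_hosts=" + ",".join(learner_hosts)] + list(extra_flags)

    # Step 1, distributed-TensorFlow style: one process per actor,
    # identified by its job name and task index.
    actor_cmds = [["python", "experiment.py", "--job_name=actor", "--task=%d" % i] + common
                  for i in range(len(actor_hosts))]

    # Step 2, Horovod style: a single mpirun starts every learner process.
    # Count learner slots per machine for Open MPI's "-H host:slots" syntax.
    slots, order = {}, []
    for host_port in learner_hosts:
        host = host_port.rsplit(":", 1)[0]
        if host not in slots:
            order.append(host)
            slots[host] = 0
        slots[host] += 1
    host_arg = ",".join("%s:%d" % (h, slots[h]) for h in order)
    learner_cmd = (["mpirun", "-np", str(len(learner_hosts)), "-H", host_arg,
                    "python", "experiment.py", "--job_name=learner"] + common)
    return actor_cmds, learner_cmd


actor_cmds, learner_cmd = build_launch_commands(
    actor_hosts=["localhost:9001", "localhost:9002"],
    learner_hosts=["localhost:9000"],
    extra_flags=["--level_name=BreakoutNoFrameskip-v4"],
)
for cmd in actor_cmds + [learner_cmd]:
    print(" ".join(cmd))
```

In a real deployment each actor command would be started on its own host (for example with `subprocess.Popen`) before the single mpirun command for the learners.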

See examples here.

Run the "frontend script" run_experiment_mm_raw.py, which wraps experiment.py by reading learner_hosts and actor_hosts from a separate CSV file prepared beforehand.
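The CSV-reading step of such a wrapper can be sketched as follows. The column names `job`, `ip` and `port` are hypothetical; the real field names are defined by sandbox/local_workers_example.csv. `read_workers` is an illustrative function of ours, not code from run_experiment_mm_raw.py.

```python
import csv
import io


def read_workers(csv_file):
    """Split rows of a workers CSV into learner and actor "host:port" lists.

    ASSUMPTION: columns named job (learner/actor), ip and port. These names
    are HYPOTHETICAL; see sandbox/local_workers_example.csv for the real ones.
    """
    learner_hosts, actor_hosts = [], []
    for row in csv.DictReader(csv_file):
        addr = "%s:%s" % (row["ip"].strip(), row["port"].strip())
        job = row["job"].strip().lower()
        if job == "learner":
            learner_hosts.append(addr)
        elif job == "actor":
            actor_hosts.append(addr)
        else:
            raise ValueError("unknown job type: %r" % row["job"])
    return learner_hosts, actor_hosts


example = io.StringIO(
    "job,ip,port\n"
    "learner,127.0.0.1,9000\n"
    "actor,127.0.0.1,9001\n"
    "actor,127.0.0.1,9002\n"
)
learner_hosts, actor_hosts = read_workers(example)
print(",".join(learner_hosts))  # 127.0.0.1:9000
print(",".join(actor_hosts))    # 127.0.0.1:9001,127.0.0.1:9002
```

The comma-joined strings are the form in which host lists are conventionally passed to distributed TensorFlow processes.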

Examples:

```bash
python run_experiment_mm_raw.py \
  --workers_csv_path=sandbox/local_workers_example.csv \
  --level_name=BreakoutNoFrameskip-v4 \
  --agent_name=SimpleConvNetAgent \
  --num_action_repeats=1 \
  --batch_size=32 \
  --unroll_length=20 \
  --entropy_cost=0.01 \
  --learning_rate=0.0006 \
  --total_environment_frames=200000000 \
  --reward_clipping=abs_one \
  --gradients_clipping=40.0
```

See sandbox/local_workers_example.csv for the CSV fields you must provide.

The field names should be self-explanatory.
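Two of the flags in the example command can be read as follows, assuming this fork keeps the rules of DeepMind's upstream IMPALA code (scalable_agent's experiment.py). There, `--reward_clipping=abs_one` clips each reward to [-1, 1] (the alternative, `soft_asymmetric`, squashes rewards with tanh and down-weights negative ones), and every learner update advances the environment-frame counter by `batch_size * unroll_length * num_action_repeats` until `total_environment_frames` is reached. The function names below are ours, and with multiple learners this fork's frame accounting may differ.

```python
import math


def clip_reward(reward, mode="abs_one"):
    """Reward clipping modes as defined in the upstream scalable_agent code."""
    if mode == "abs_one":
        return max(-1.0, min(1.0, reward))
    if mode == "soft_asymmetric":
        squeezed = math.tanh(reward / 5.0)
        # Negative rewards are given less weight than positive rewards.
        return (0.3 * squeezed if reward < 0 else squeezed) * 5.0
    raise ValueError("unknown reward_clipping mode: %r" % mode)


def frame_budget(batch_size, unroll_length, num_action_repeats, total_environment_frames):
    """Frames consumed per learner update, and how many updates fit in the budget.

    ASSUMPTION: a single learner with upstream-style frame counting.
    """
    frames_per_update = batch_size * unroll_length * num_action_repeats
    return frames_per_update, total_environment_frames // frames_per_update


# Values taken from the example command.
print(clip_reward(7.0))                        # 1.0
print(frame_budget(32, 20, 1, 200000000))      # (640, 312500)
```

With the example values, each update consumes 640 frames, so a 200M-frame budget corresponds to 312,500 learner updates.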

If you have access to a cloud service where you can request many cheap CPU machines (or you are at Tencent and have access to the internal c.oa.com "compute sharing platform"), see the description here for how to prepare the CSV file.

TODO

TODO

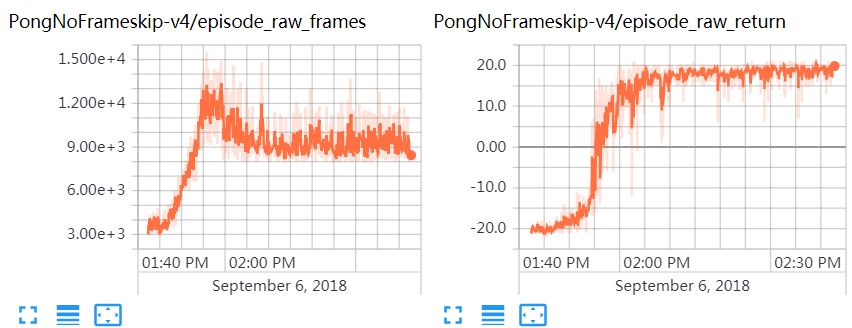

Training steps (over wall-clock time) vs. episode return/length on PongNoFrameskip-v4, with hyperparameters: 1 learner, 256 actors, ResNetLSTM, unroll_length=40, lr=0.0003, max_steps=400M. The agent reaches ~21 points (the maximum score) in under 20 minutes.

Training steps (over wall-clock time) vs. episode return/length on BreakoutNoFrameskip-v4, with hyperparameters: 1 learner, 256 actors, ResNetLSTM, unroll_length=40, entropy_cost=0.1, lr=0.0003, max_steps=400M. The agent reaches over 800 points within a few hours.

For the training throughput and the scale-up when using multiple learners, see here.