3DV 2021: Synergy between 3DMM and 3D Landmarks for Accurate 3D Facial Geometry

Cho-Ying Wu, Qiangeng Xu, Ulrich Neumann, CGIT Lab at University of Southern California

[paper] [project page]

This paper supersedes the previous version of M3-LRN.

Advantages:

👍 SOTA on all three tasks: 3D facial alignment, face orientation estimation, and 3D face modeling.

👍 Fast inference at 3000 fps on a laptop RTX 2080.

👍 Simple implementation with only widely used operations.

(This project is built/tested on Python 3.8 and PyTorch 1.9)

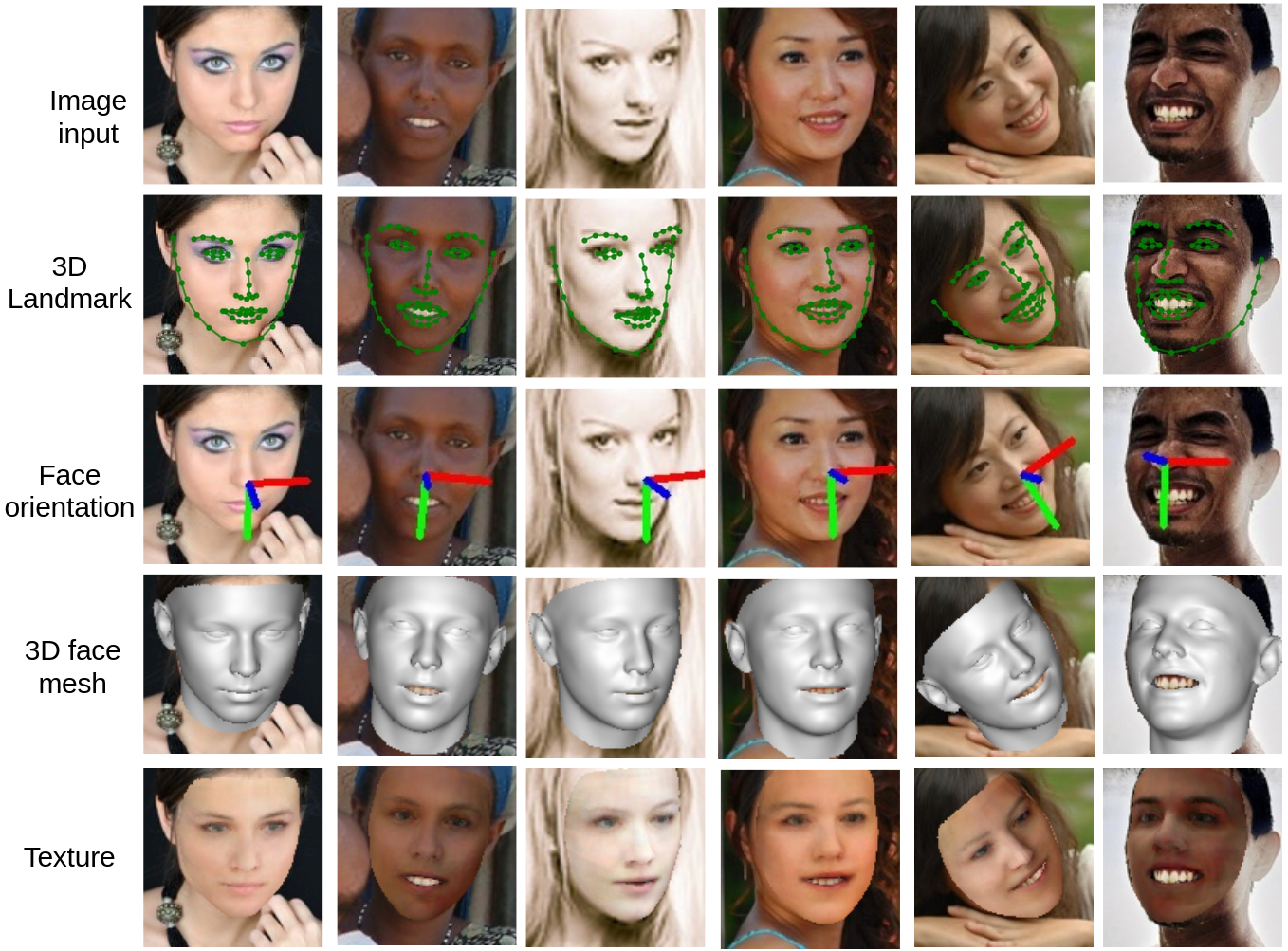

Single Image Inference Demo

- Clone

git clone https://github.com/choyingw/SynergyNet

cd SynergyNet

- Use conda

conda create --name SynergyNet

conda activate SynergyNet

- Install prerequisite common packages

PyTorch 1.9 (should also be compatible with 1.0+ versions), OpenCV, SciPy, Matplotlib

- Download data [here] and [here]. Extract the data under the repo root.

These data are processed from [3DDFA] and [FSA-Net].

Download pretrained weights [here]. Put the model under 'pretrained/'.

- Compile Sim3DR and FaceBoxes:

cd Sim3DR

./build_sim3dr.sh

cd ../FaceBoxes

./build_cpu_nms.sh

cd ..

- Inference

python singleImage.py -f img
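Before running inference, it can help to confirm that the prerequisite packages from the install step are visible to the active conda environment. The following is a minimal sketch, not part of the repository: the helper name `missing_packages` is hypothetical, and the import names (`torch`, `cv2`, `scipy`, `matplotlib`) are the usual module names for PyTorch, OpenCV, SciPy, and Matplotlib.

```python
import importlib.util

# Usual import names for the prerequisites named in the install step.
REQUIRED = {
    "PyTorch": "torch",
    "OpenCV": "cv2",
    "SciPy": "scipy",
    "Matplotlib": "matplotlib",
}


def missing_packages(required=REQUIRED):
    """Return the display names of packages whose module cannot be located.

    find_spec only looks the module up; it does not import it.
    """
    return [
        name
        for name, module in required.items()
        if importlib.util.find_spec(module) is None
    ]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All prerequisite packages were found.")
```

Run it with the SynergyNet environment activated; any package it reports as missing should be installed before compiling Sim3DR and FaceBoxes.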

Evaluation

- Follow Single Image Inference Demo: Step 1-4.

- Benchmarking

python benchmark.py -w pretrained/best.pth.tar

Results are printed out, and visualizations are shown under 'results/' (see 'demo/' for some sample references).

Training

- Follow Single Image Inference Demo: Step 1-4.

- Download the training data from [3DDFA] (train_aug_120x120.zip, about 680K images) and extract the zip file under the root folder.

- bash train_script.sh

- Please refer to train_script.sh for hyperparameters, such as learning rate, epochs, or GPU device. The default settings take ~19 GB of memory on a 3090 GPU and about 6 hours for training. If your GPU has less memory than this, please decrease the batch size and learning rate proportionally.
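The proportional adjustment of batch size and learning rate can be sketched as follows. This is only an illustration of linear scaling: the function name `scale_hyperparams` is hypothetical, and the numbers in the usage lines are placeholders, not the repository defaults. Read the real values from train_script.sh.

```python
def scale_hyperparams(base_batch_size, base_lr, new_batch_size):
    """Scale the learning rate by the same factor as the batch size."""
    if base_batch_size <= 0 or new_batch_size <= 0:
        raise ValueError("batch sizes must be positive")
    factor = new_batch_size / base_batch_size
    return new_batch_size, base_lr * factor


# Placeholder values for illustration only (not the defaults in train_script.sh).
batch_size, lr = scale_hyperparams(base_batch_size=1024, base_lr=0.08, new_batch_size=512)
print(f"batch size: {batch_size}, learning rate: {lr:g}")
```

Halving the batch size here halves the learning rate as well; the resulting values would then be set in train_script.sh by hand.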

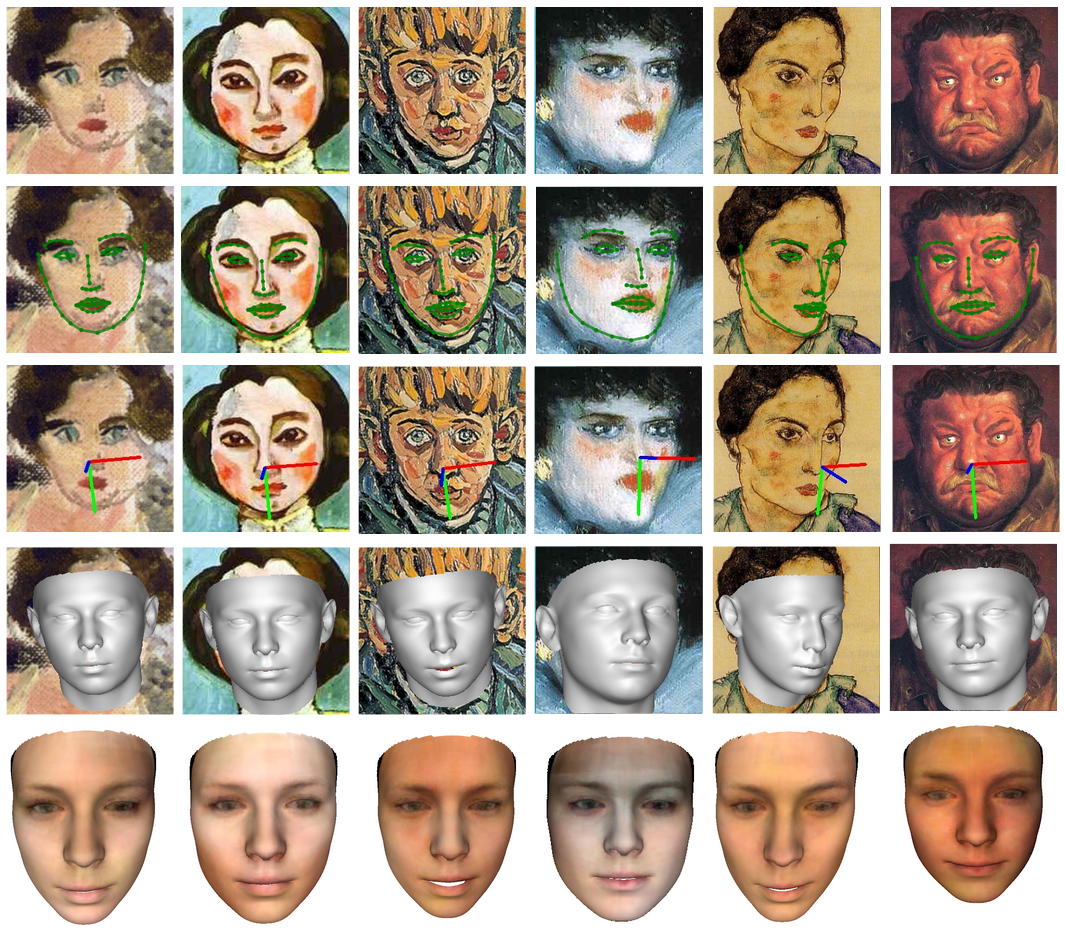

Textured Artistic Face Meshes

- Follow Single Image Inference Demo: Step 1-5.

- Download artistic faces data [here], which are from [AF-Dataset]. Download our UV maps [here], inferred by UV-texture GAN. Extract them under the root folder.

- python artistic.py -f art-all/122.png

Note that this artistic face dataset contains many different levels and styles of face abstraction. If a testing image is close to real, the result is much better than for highly abstract samples.

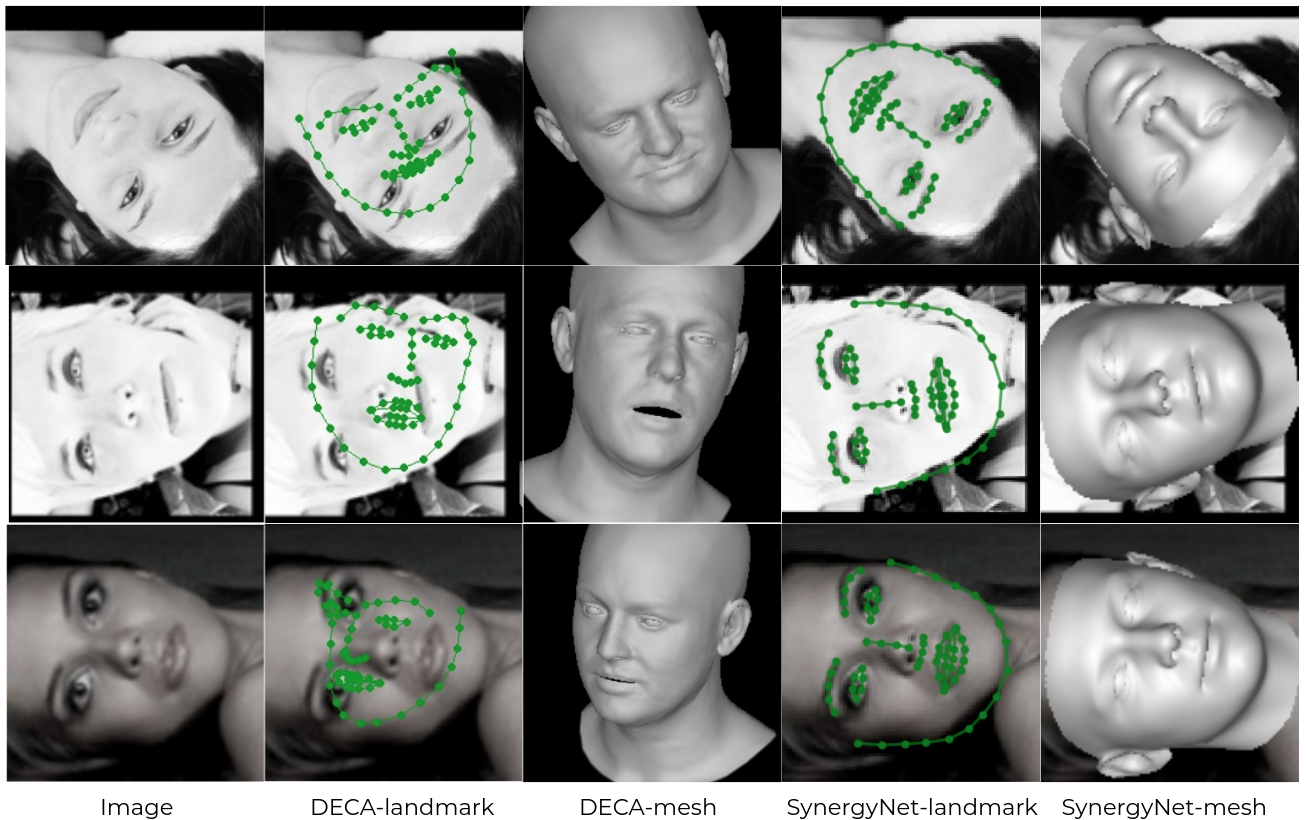

We show a comparison with [DECA] using the three samples with the largest roll angles in AFLW2000-3D.

More Results

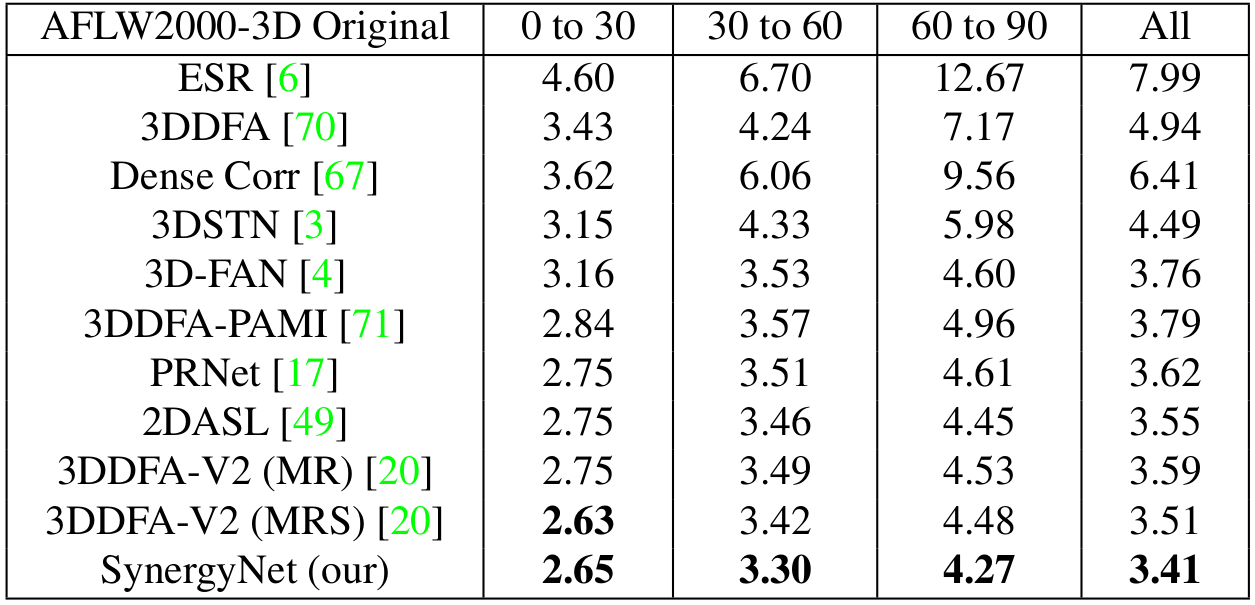

Facial alignment on AFLW2000-3D (NME of facial landmarks):

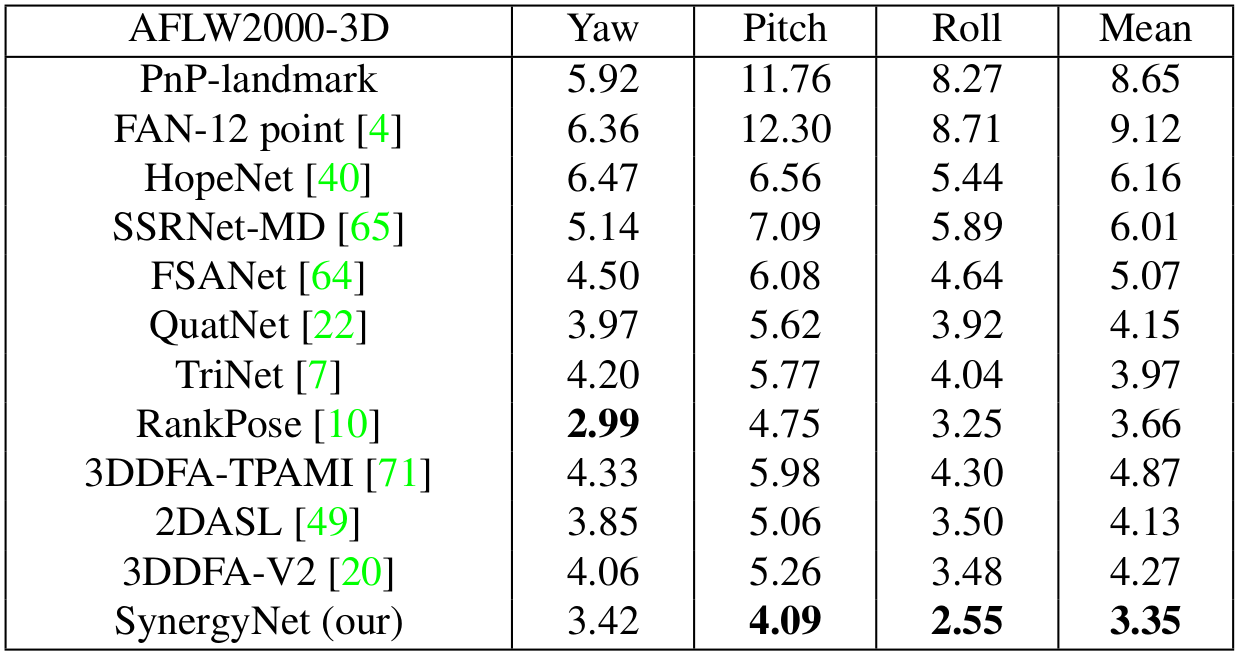
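For readers unfamiliar with the metric, NME (normalized mean error) averages the Euclidean distance between predicted and ground-truth landmarks and divides it by a normalization length. The sketch below assumes normalization by the square root of the ground-truth bounding-box area, a common convention for AFLW2000-3D; the repository's benchmark code may normalize differently. The function name `nme` and the toy landmarks are hypothetical.

```python
import math


def nme(pred, gt, norm_length):
    """Mean per-landmark Euclidean error divided by a normalization length."""
    if len(pred) != len(gt) or not pred:
        raise ValueError("pred and gt must be non-empty and of equal length")
    if norm_length <= 0:
        raise ValueError("norm_length must be positive")
    total = sum(math.dist(p, g) for p, g in zip(pred, gt))
    return total / (len(pred) * norm_length)


# Toy 2D landmarks for illustration only.
gt = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]
pred = [(3.0, 4.0), (10.0, 0.0), (10.0, 10.0)]
width, height = 10.0, 10.0  # assumed ground-truth bounding-box size
print(nme(pred, gt, math.sqrt(width * height)))
```

Lower is better; reported values are often multiplied by 100 and shown as percentages.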

Face orientation estimation on AFLW2000-3D (MAE of Euler angles):
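As a reminder of what this metric measures, the MAE (mean absolute error) of Euler angles is the absolute difference between predicted and ground-truth yaw, pitch, and roll, averaged over samples. The following is a minimal sketch with a hypothetical function name `euler_mae` and toy angles in degrees; it does not handle angle wrap-around, and the repository's benchmark code may differ in detail.

```python
def euler_mae(pred_angles, gt_angles):
    """Return (per-axis MAE, mean of the three) for (yaw, pitch, roll) tuples."""
    if len(pred_angles) != len(gt_angles) or not pred_angles:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(pred_angles)
    sums = [0.0, 0.0, 0.0]
    for p, g in zip(pred_angles, gt_angles):
        for axis in range(3):
            sums[axis] += abs(p[axis] - g[axis])
    per_axis = [s / n for s in sums]
    return per_axis, sum(per_axis) / 3


# Toy (yaw, pitch, roll) values in degrees, for illustration only.
pred = [(10.0, 0.0, -5.0), (20.0, 10.0, 5.0)]
gt = [(12.0, 0.0, -5.0), (16.0, 10.0, 8.0)]
per_axis, mean_mae = euler_mae(pred, gt)
print("yaw/pitch/roll MAE:", per_axis, "mean:", mean_mae)
```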

Results on artistic faces:

Related Project

[Voice2Mesh] (analysis of the relation between voice and 3D faces)

Acknowledgement

The project is developed on [3DDFA] and [FSA-Net]. We thank the authors for their wonderful work. We also thank [3DDFA-V2] for the face detector and rendering code.