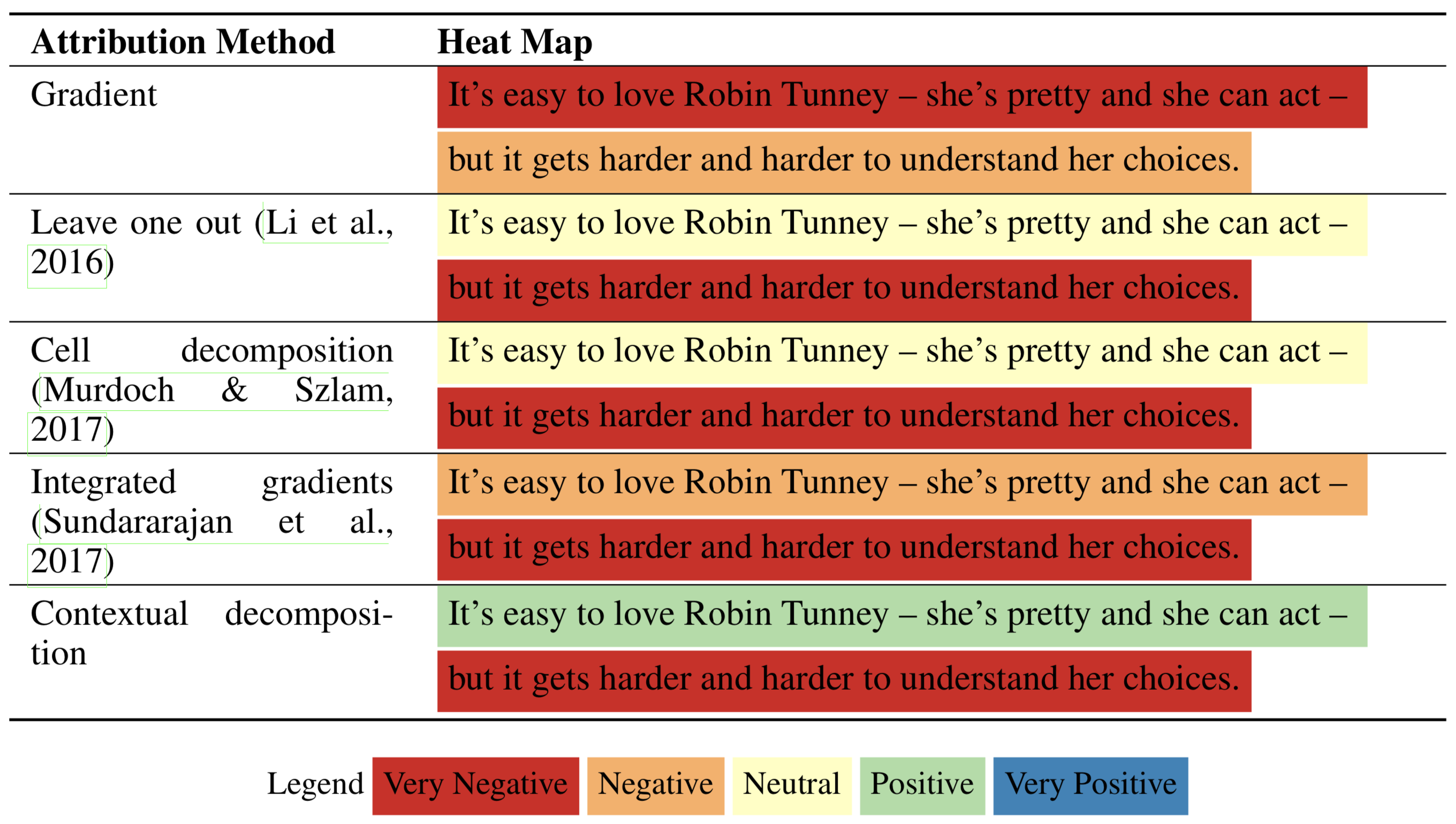

Demonstration of the methods introduced in "Beyond Word Importance: Contextual Decomposition to Extract Interactions from LSTMs" (ICLR 2018 Oral).

This repo is no longer actively maintained, but the related works below actively maintain and extend the ideas here.

- ACD (ICLR 2019 pdf, github) - extends CD to CNNs / arbitrary DNNs, and aggregates explanations into a hierarchy

- CDEP (ICML 2020 pdf, github) - penalizes CD / ACD scores during training to make models generalize better

- TRIM (ICLR 2020 workshop pdf, github) - uses simple reparameterizations to compute disentangled importances for transformations of the input (e.g. assigning importances to different frequencies)

- DAC (arXiv 2019 pdf, github) - finds disentangled interpretations for random forests

- PDR framework (PNAS 2019 pdf) - an overarching framework for guiding and framing interpretable machine learning

- feel free to use/share this code openly!